Language: Scala and Python

Requirements:

- Spark 2.4.x (or greater)

Author: Ian Brooks

Follow [LinkedIn - Ian Brooks PhD](https://www.linkedin.com/in/ianrbrooksphd/)

The goal is to use open source tools to build a Natural Language Processing (NLP) model based on Term Frequency / Inverse Document Frequency (TF/IDF) for the purpose of discovering documents that are related to each other. In this project, the TF/IDF model is used to create features from the text strings in the original documents. These features represent the weight of each term in the corpus of text documents.

The data is sourced from SBIR's 2019 funding awards, and the hashing features are trained only on the words in the abstract section of each award.

Additional Information: The Small Business Innovation Research (SBIR) program is a highly competitive program that encourages domestic small businesses to engage in Federal Research/Research and Development (R/R&D) that has the potential for commercialization.

In information retrieval, tf–idf (also written TF*IDF or TFIDF), short for term frequency–inverse document frequency, is a numerical statistic intended to reflect how important a word is to a document in a collection or corpus.

It is often used as a weighting factor in searches of information retrieval, text mining, and user modeling. The tf–idf value increases proportionally to the number of times a word appears in the document and is offset by the number of documents in the corpus that contain the word, which helps to adjust for the fact that some words appear more frequently in general. tf–idf is one of the most popular term-weighting schemes today.
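To make the weighting concrete, here is a minimal sketch in plain Python (standard library only, not the project's PySpark code). The three toy documents are invented for illustration, and the smoothed form `idf = ln((N + 1) / (df + 1))` is assumed because it is the variant Spark MLlib documents for its `IDF` estimator; other libraries use slightly different formulas.

```python
import math
from collections import Counter

# Toy corpus, invented for illustration (not SBIR data).
docs = [
    "high heat metal alloy",
    "metal coating for high heat",
    "software for speech recognition",
]

def tf_idf(corpus):
    """Return one {term: weight} dict per document."""
    tokenized = [doc.lower().split() for doc in corpus]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term occur?
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        tf = Counter(tokens)  # raw term counts in this document
        weights.append({
            term: count * math.log((n_docs + 1) / (df[term] + 1))
            for term, count in tf.items()
        })
    return weights

weights = tf_idf(docs)
for doc, w in zip(docs, weights):
    print(doc, "->", {t: round(v, 3) for t, v in w.items()})
```

In the first document, "alloy" occurs in only one document of the corpus, so it receives a higher weight than "metal", which occurs in two.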

The purpose of using TF/IDF for feature engineering is to help the downstream ML models understand the weight or importance of a word or search term. Models trained on these values can then find documents that are related to each other. The TF/IDF model first tokenizes the text into terms, as shown in the following image.

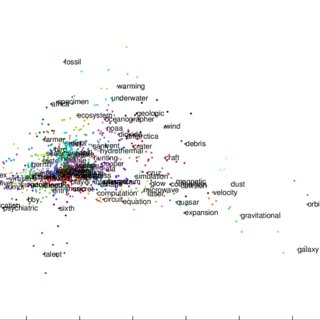

Using the provided PySpark code, the results of Term Frequency hashing are displayed in the following image. The project is using the text terms provided by the abstracts from the original documents. The tokenized values are listed in the column called features.
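As a rough illustration of what Term Frequency hashing does, the sketch below maps each token to a slot in a fixed-length vector and counts occurrences there. It is plain Python, not the project's PySpark code: the helper name `hashing_tf`, the vector size of 16, and the example text are all assumptions, and `zlib.crc32` stands in for the MurmurHash3 function that Spark's `HashingTF` uses.

```python
import zlib

def hashing_tf(tokens, num_features=16):
    """Map tokens to a fixed-length count vector via the hashing trick."""
    vector = [0] * num_features
    for token in tokens:
        # crc32 is a deterministic stand-in; Spark's HashingTF uses MurmurHash3.
        index = zlib.crc32(token.encode("utf-8")) % num_features
        vector[index] += 1
    return vector

abstract = "high heat metal alloy for high heat engines"  # invented example text
features = hashing_tf(abstract.split())
print(features)
```

Because the vector length is fixed, no vocabulary has to be built first. The trade-off is that two different words can land in the same slot, which becomes less likely as `num_features` grows.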

Once these features have been created, they can be used to train downstream Machine Learning models or hashing-based models that are designed to find similar documents. This project provides examples of two approaches: unsupervised K-Means clustering and Locality-Sensitive Hashing (LSH) with MinHash. They were selected because both are provided in Apache Spark MLlib.

To download the data, run `./downloadData.sh`.

Document clustering is a common technique used to find similar documents based on key words or other search features. This project demonstrates clustering with K-Means and finds the documents that cluster with each other. The model is trained on the Term Frequency hashing values. This unsupervised ML approach is well suited for this dataset, since there is no labeled data.

Once the model has been trained, the K-Means results display the documents and their assigned clusters. Keep in mind that these documents are clustered together based on two factors: the words in their abstracts and the value of K used when the model was trained. In K-Means, K is the predetermined number of clusters that will be used as buckets.
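The role of K can be seen in a small, self-contained sketch of Lloyd's algorithm, the classic K-Means procedure. This is plain Python rather than Spark's `KMeans` estimator, and the toy term-count vectors, the four-word vocabulary, and the choice to seed the centroids with the first K vectors are assumptions made for illustration.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def k_means(vectors, k, max_iter=20):
    """Plain Lloyd's algorithm; the first k vectors seed the centroids."""
    centroids = [list(v) for v in vectors[:k]]
    labels = None
    for _ in range(max_iter):
        # Assignment step: each vector joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: squared_distance(v, centroids[c]))
            for v in vectors
        ]
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [v for v, label in zip(vectors, labels) if label == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids

# Toy term counts over a hypothetical vocabulary: [metal, heat, speech, audio].
vectors = [
    [2, 1, 0, 0],
    [0, 0, 2, 1],
    [1, 2, 0, 0],
    [0, 0, 1, 2],
    [2, 2, 0, 0],
    [0, 1, 2, 2],
]
labels, centroids = k_means(vectors, k=2)
print(labels)
```

With K set to 2, the documents about metal and heat share one label and the documents about speech and audio share the other. Choosing a different K would split the same documents into a different number of buckets.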

In the following image, the results show the first 20 documents and their cluster assignments.

In the following image, the results display the documents that were assigned to one particular cluster. This example uses lucky cluster number 13.

Common applications of Locality-Sensitive Hashing (LSH) include:

- Near-duplicate detection

- Audio similarity identification

- Nearest neighbor search

- Audio fingerprint

- Digital video fingerprinting

This project also demonstrates the use of Locality-Sensitive Hashing (LSH), an algorithmic technique that hashes similar input items into the same "buckets" with high probability. Since similar items end up in the same buckets, this technique can be used for data clustering and nearest neighbor search. This project uses it for document clustering.
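The bucket idea can be sketched with MinHash in plain Python. This is an illustration under stated assumptions, not Spark's `MinHashLSH` implementation: the linear hash family `(a * x + b) % PRIME`, the use of `zlib.crc32` to turn tokens into integers, the 64 hash functions, and the three toy documents are all choices made for this example. Here, one bucket is one (hash index, minimum value) pair.

```python
import random
import zlib
from collections import defaultdict

PRIME = (1 << 61) - 1  # a Mersenne prime, used as the hash modulus

def make_hash_params(num_hashes, seed=42):
    rng = random.Random(seed)
    return [(rng.randrange(1, PRIME), rng.randrange(0, PRIME))
            for _ in range(num_hashes)]

def minhash_signature(tokens, params):
    """One minimum per hash function; tokens must be non-empty."""
    ids = {zlib.crc32(t.encode("utf-8")) for t in tokens}
    return [min((a * x + b) % PRIME for x in ids) for a, b in params]

def estimated_jaccard(sig_a, sig_b):
    """Fraction of hash functions on which the two signatures agree."""
    return sum(1 for x, y in zip(sig_a, sig_b) if x == y) / len(sig_a)

def shared_buckets(signatures):
    """Buckets (hash index, min value) that hold more than one document."""
    table = defaultdict(set)
    for doc_id, sig in signatures.items():
        for i, value in enumerate(sig):
            table[(i, value)].add(doc_id)
    return {key: ids for key, ids in table.items() if len(ids) > 1}

# Toy documents, invented for illustration.
docs = {
    "A": "high heat resistant metal alloy",
    "B": "high heat metal coating",
    "C": "speech recognition software",
}
params = make_hash_params(64)
signatures = {d: minhash_signature(text.split(), params) for d, text in docs.items()}
shared = shared_buckets(signatures)

print("estimated Jaccard A/B:", estimated_jaccard(signatures["A"], signatures["B"]))
print("documents sharing a bucket:", set().union(*shared.values()) if shared else set())
```

Documents A and B share three of their six distinct words, so they agree on a good share of the hash functions and fall into common buckets. Document C shares no words with either and stays on its own.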

After the LSH model has been built, we can enter search terms and find matching values. In this example, I have used the search terms: high, heat, and metal.

In the following image, you can see the documents that best match the search terms that were entered.
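The search step can be approximated in a few lines of plain Python by ranking documents on their exact Jaccard distance to the set of search terms. Spark's `MinHashLSH` model offers `approxNearestNeighbors` for this purpose; the sketch below does not call it. The helper names and the toy documents are hypothetical, and the exact distance is used in place of the hashed approximation.

```python
def jaccard_distance(a, b):
    a, b = set(a), set(b)
    return 1.0 - len(a & b) / len(a | b)

def nearest_documents(query_terms, documents, top_n=2):
    """Rank documents by Jaccard distance to the query terms."""
    scored = []
    for doc_id, text in documents.items():
        distance = jaccard_distance(query_terms, text.lower().split())
        if distance < 1.0:  # skip documents that share no term with the query
            scored.append((distance, doc_id))
    return sorted(scored)[:top_n]

# Toy documents, invented for illustration.
documents = {
    "A": "high heat resistant metal alloy",
    "B": "metal coating for high temperature engines",
    "C": "speech recognition software",
}
results = nearest_documents(["high", "heat", "metal"], documents)
for distance, doc_id in results:
    print(doc_id, round(distance, 3))
```

A smaller distance means a closer match: document A contains all three search terms, document B contains two of them, and document C is dropped because it contains none.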
