A fast, low-memory, enhanced implementation of Local Context Focus.

Built from LC-ABSA / LCF-ABSA / LCF-BERT and LCF-ATEPC.

Provides tutorials for training and using ATE and APC models.

PyTorch Implementations (CPU & CUDA supported).

- PyABSA uses FindFile to locate target files, so you can specify a dataset or checkpoint by keyword instead of an absolute path, e.g.,

dataset = 'laptop' # instead of './SemEeval/LAPTOP'; case doesn't matter

checkpoint = 'lcfs' # any checkpoint whose absolute path contains lcfs

- PyABSA uses AutoCuda to assign a CUDA device automatically, but you can still set a preferred device.

auto_device=True # auto-assign a CUDA device for training / inference

auto_device='cuda:1' # specify a preferred device

auto_device='cpu' # specify a preferred device

- PyABSA supports automatic label fixing, so you can set the labels to any number (greater than -999), e.g., sentiment labels = {-9, -1, 0, 199}

- Other features are waiting to be discovered
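The keyword lookup and label fixing described above can be pictured with a small sketch. This is not PyABSA's actual implementation: `find_by_keyword` and `fix_labels` are hypothetical helpers, and the sketch assumes that a keyword matches when it appears anywhere in a file's path relative to the search root (ignoring case) and that labels are remapped to contiguous indices in sorted order.

```python
import os


def find_by_keyword(root, keyword):
    """Return sorted file paths under `root` whose relative path contains `keyword`.

    Hypothetical helper: matching is case-insensitive, as described above.
    """
    keyword = keyword.lower()
    matches = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            relative = os.path.relpath(path, root)
            if keyword in relative.lower():
                matches.append(path)
    return sorted(matches)


def fix_labels(labels):
    """Map arbitrary integer labels to indices 0..n-1 in sorted order.

    Hypothetical helper: assumes every label is greater than -999.
    """
    unique = sorted(set(labels))
    return {label: index for index, label in enumerate(unique)}


# Labels taken from the example above, with repeats as they would occur in data.
label_to_index = fix_labels([-9, -1, 0, 199, -1, 0])
```

With a mapping like this, a dataset labelled with {-9, -1, 0, 199} can be fed to a classifier that expects class indices starting at 0.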

If you would like to support the PyABSA project, please star this repository as your contribution.

- Installation

- Package Overview

- Quick-Start

- Model Support

- Dataset Support

- Make Contributions

- All Examples

- Notice for LCF-BERT & LCF-ATEPC

Please do not install a version that has no corresponding release note; it may be a test version.

To use PyABSA, install the latest version from pip or source code:

pip install -U pyabsa

git clone https://github.com/yangheng95/PyABSA --depth=1

cd PyABSA

python setup.py install
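After installing, it can be useful to confirm which version ended up in your environment so that you can compare it against the release notes. The sketch below uses only the standard library; `installed_version` is a hypothetical helper name, not part of PyABSA.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of `package`, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


version = installed_version("pyabsa")
if version is None:
    print("pyabsa is not installed")
else:
    print(f"pyabsa {version} is installed; check that it has a release note")
```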

| Package | Description |
| --- | --- |
| pyabsa | package root (including all interfaces) |
| pyabsa.functional | recommended interface entry |
| pyabsa.functional.checkpoint | checkpoint manager entry, inference model entry |
| pyabsa.functional.dataset | datasets entry |
| pyabsa.functional.config | predefined config manager |
| pyabsa.functional.trainer | training module; every trainer returns an inference model |

from pyabsa.functional import Trainer

from pyabsa.functional import APCConfigManager

from pyabsa.functional import ABSADatasetList

# Get model list for Bert-based APC models

from pyabsa.functional import APCModelList

# Get model list for Bert-based APC baseline models

# from pyabsa.functional import BERTBaselineAPCModelList

# Get model list for GloVe-based APC baseline models

# from pyabsa.functional import GloVeAPCModelList

# Choose a Bert-based APC models param_dict

apc_config_english = APCConfigManager.get_apc_config_english()

# Choose a Bert-based APC baseline models param_dict

# apc_config_english = APCConfigManager.get_apc_config_bert_baseline()

# Choose a GloVe-based APC baseline models param_dict

# apc_config_english = APCConfigManager.get_apc_config_glove()

# Specify a Bert-based APC model

apc_config_english.model = APCModelList.SLIDE_LCFS_BERT

# Specify a Bert-based APC baseline model

# apc_config_english.model = BERTBaselineAPCModelList.ASGCN_BERT

# Specify a GloVe-based APC baseline model

# apc_config_english.model = GloVeAPCModelList.ASGCN

apc_config_english.similarity_threshold = 1

apc_config_english.max_seq_len = 80

apc_config_english.dropout = 0.5

apc_config_english.log_step = 5

apc_config_english.num_epoch = 10

apc_config_english.evaluate_begin = 4

apc_config_english.l2reg = 0.0005

apc_config_english.seed = {1, 2, 3}

apc_config_english.cross_validate_fold = -1

dataset_path = ABSADatasetList.SemEval #or set your local dataset

sent_classifier = Trainer(config=apc_config_english,

dataset=dataset_path, # train set and test set will be automatically detected

checkpoint_save_mode=1, # = None to avoid save model

auto_device=True # automatic choose CUDA or CPU

)

# batch inference returns the results; set save_result=True to save them if necessary

inference_dataset = ABSADatasetList.SemEval # or set your local dataset

results = sent_classifier.batch_infer(target_file=inference_dataset,

print_result=True,

save_result=True,

ignore_error=True,

)

Apple is unmatched in product quality , aesthetics , craftmanship , and customer service .

product quality --> Positive Real: Positive (Correct)

Apple is unmatched in product quality , aesthetics , craftmanship , and customer service .

aesthetics --> Positive Real: Positive (Correct)

Apple is unmatched in product quality , aesthetics , craftmanship , and customer service .

craftmanship --> Positive Real: Positive (Correct)

Apple is unmatched in product quality , aesthetics , craftmanship , and customer service .

customer service --> Positive Real: Positive (Correct)

It is a great size and amazing windows 8 included !

windows 8 --> Positive Real: Positive (Correct)

I do not like too much Windows 8 .

Windows 8 --> Negative Real: Negative (Correct)

Took a long time trying to decide between one with retina display and one without .

retina display --> Neutral Real: Neutral (Correct)

It 's so nice that the battery last so long and that this machine has the snow lion !

battery --> Positive Real: Positive (Correct)

It 's so nice that the battery last so long and that this machine has the snow lion !

snow lion --> Positive Real: Positive (Correct)
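If you capture the printed output above, a few lines of Python are enough to turn it back into structured records, for example to compute accuracy. This is only a sketch: it assumes every result line has exactly the `aspect --> Predicted Real: Reference (Verdict)` shape shown above, and `parse_result_line` and `accuracy` are hypothetical helpers rather than PyABSA functions.

```python
import re

# Matches lines such as: "windows 8 --> Positive Real: Positive (Correct)"
RESULT_LINE = re.compile(
    r"^(?P<aspect>.+?) --> (?P<pred>\w+) Real: (?P<real>\w+) \((?P<verdict>\w+)\)$"
)


def parse_result_line(line):
    """Return a dict for a result line, or None for any other line."""
    match = RESULT_LINE.match(line.strip())
    return match.groupdict() if match else None


def accuracy(lines):
    """Fraction of result lines whose prediction equals the reference label."""
    parsed = [record for record in map(parse_result_line, lines) if record]
    if not parsed:
        return 0.0
    correct = sum(record["pred"] == record["real"] for record in parsed)
    return correct / len(parsed)
```

Sentence lines do not contain ` --> `, so they are skipped and only the per-aspect lines are counted.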

See the APC examples directory for detailed usage.

from pyabsa.functional import ATEPCModelList

from pyabsa.functional import Trainer, ATEPCTrainer

from pyabsa.functional import ABSADatasetList

from pyabsa.functional import ATEPCConfigManager

config = ATEPCConfigManager.get_atepc_config_english()

atepc_config_english = ATEPCConfigManager.get_atepc_config_english()

atepc_config_english.num_epoch = 10

atepc_config_english.evaluate_begin = 4

atepc_config_english.log_step = 100

atepc_config_english.model = ATEPCModelList.LCF_ATEPC

laptop14 = ABSADatasetList.Laptop14

aspect_extractor = ATEPCTrainer(config=atepc_config_english,

dataset=laptop14

)

import pyabsa  # needed for pyabsa.ABSADatasetList below

from pyabsa import ATEPCCheckpointManager

examples = ['相比较原系列锐度高了不少这一点好与不好大家有争议',

'这款手机的大小真的很薄,但是颜色不太好看, 总体上我很满意啦。'

]

aspect_extractor = ATEPCCheckpointManager.get_aspect_extractor(checkpoint='chinese',

auto_device=True # False means load model on CPU

)

inference_source = pyabsa.ABSADatasetList.SemEval

atepc_result = aspect_extractor.extract_aspect(inference_source=inference_source,

save_result=True,

print_result=True, # print the result

pred_sentiment=True, # Predict the sentiment of extracted aspect terms

)

Sentence with predicted labels:

关(O) 键(O) 的(O) 时(O) 候(O) 需(O) 要(O) 表(O) 现(O) 持(O) 续(O) 影(O) 像(O) 的(O) 短(B-ASP) 片(I-ASP) 功(I-ASP) 能(I-ASP) 还(O) 是(O) 很(O) 有(O) 用(O) 的(O)

{'aspect': '短 片 功 能', 'position': '14,15,16,17', 'sentiment': '1'}

Sentence with predicted labels:

相(O) 比(O) 较(O) 原(O) 系(O) 列(O) 锐(B-ASP) 度(I-ASP) 高(O) 了(O) 不(O) 少(O) 这(O) 一(O) 点(O) 好(O) 与(O) 不(O) 好(O) 大(O) 家(O) 有(O) 争(O) 议(O)

{'aspect': '锐 度', 'position': '6,7', 'sentiment': '0'}

Sentence with predicted labels:

It(O) was(O) pleasantly(O) uncrowded(O) ,(O) the(O) service(B-ASP) was(O) delightful(O) ,(O) the(O) garden(B-ASP) adorable(O) ,(O) the(O) food(B-ASP) -LRB-(O) from(O) appetizers(B-ASP) to(O) entrees(B-ASP) -RRB-(O) was(O) delectable(O) .(O)

{'aspect': 'service', 'position': '7', 'sentiment': 'Positive'}

{'aspect': 'garden', 'position': '12', 'sentiment': 'Positive'}

{'aspect': 'food', 'position': '16', 'sentiment': 'Positive'}

{'aspect': 'appetizers', 'position': '19', 'sentiment': 'Positive'}

{'aspect': 'entrees', 'position': '21', 'sentiment': 'Positive'}

Sentence with predicted labels:

See the ATE examples directory for detailed usage.
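The tagged sentences printed above follow a BIO scheme: `B-ASP` opens an aspect term, `I-ASP` continues it, and `O` marks every other token. The sketch below shows how such tags can be decoded into aspect spans. It is not PyABSA's own decoder; `parse_tagged` and `extract_aspects` are hypothetical helpers, and positions are reported as 0-based token indices, which is an assumption of this sketch.

```python
import re

# One whitespace-separated item looks like "service(B-ASP)" or ",(O)".
TOKEN_TAG = re.compile(r"^(?P<token>.+)\((?P<tag>O|B-ASP|I-ASP)\)$")


def parse_tagged(sentence):
    """Split a 'token(TAG) token(TAG) ...' string into tokens and tags."""
    tokens, tags = [], []
    for item in sentence.split():
        match = TOKEN_TAG.match(item)
        if match is None:
            raise ValueError(f"not a tagged token: {item!r}")
        tokens.append(match["token"])
        tags.append(match["tag"])
    return tokens, tags


def extract_aspects(tokens, tags):
    """Group B-ASP/I-ASP runs into aspect terms with their token positions."""
    spans = []
    current = []  # token indices of the aspect being built
    for index, tag in enumerate(tags):
        if tag == "B-ASP":
            if current:
                spans.append(current)
            current = [index]
        elif tag == "I-ASP" and current:
            current.append(index)
        else:
            # "O", or a stray I-ASP with no preceding B-ASP, closes the span.
            if current:
                spans.append(current)
            current = []
    if current:
        spans.append(current)
    return [
        {"aspect": " ".join(tokens[i] for i in span), "position": span}
        for span in spans
    ]
```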

PyABSA checks for the latest available checkpoints and loads the latest one from Google Drive. To view the available checkpoints, use the following code, then load a checkpoint by name:

from pyabsa import available_checkpoints

checkpoint_map = available_checkpoints()

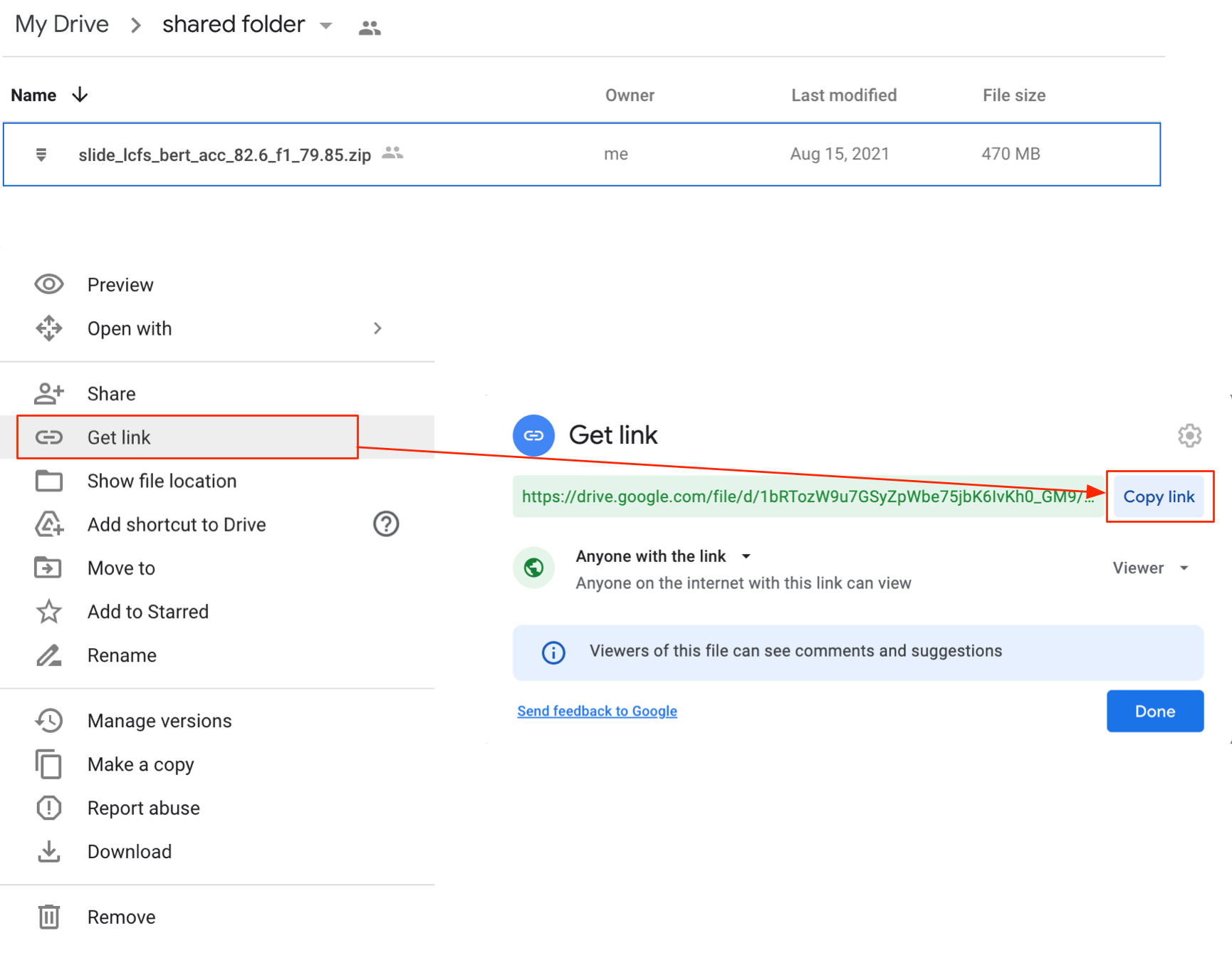

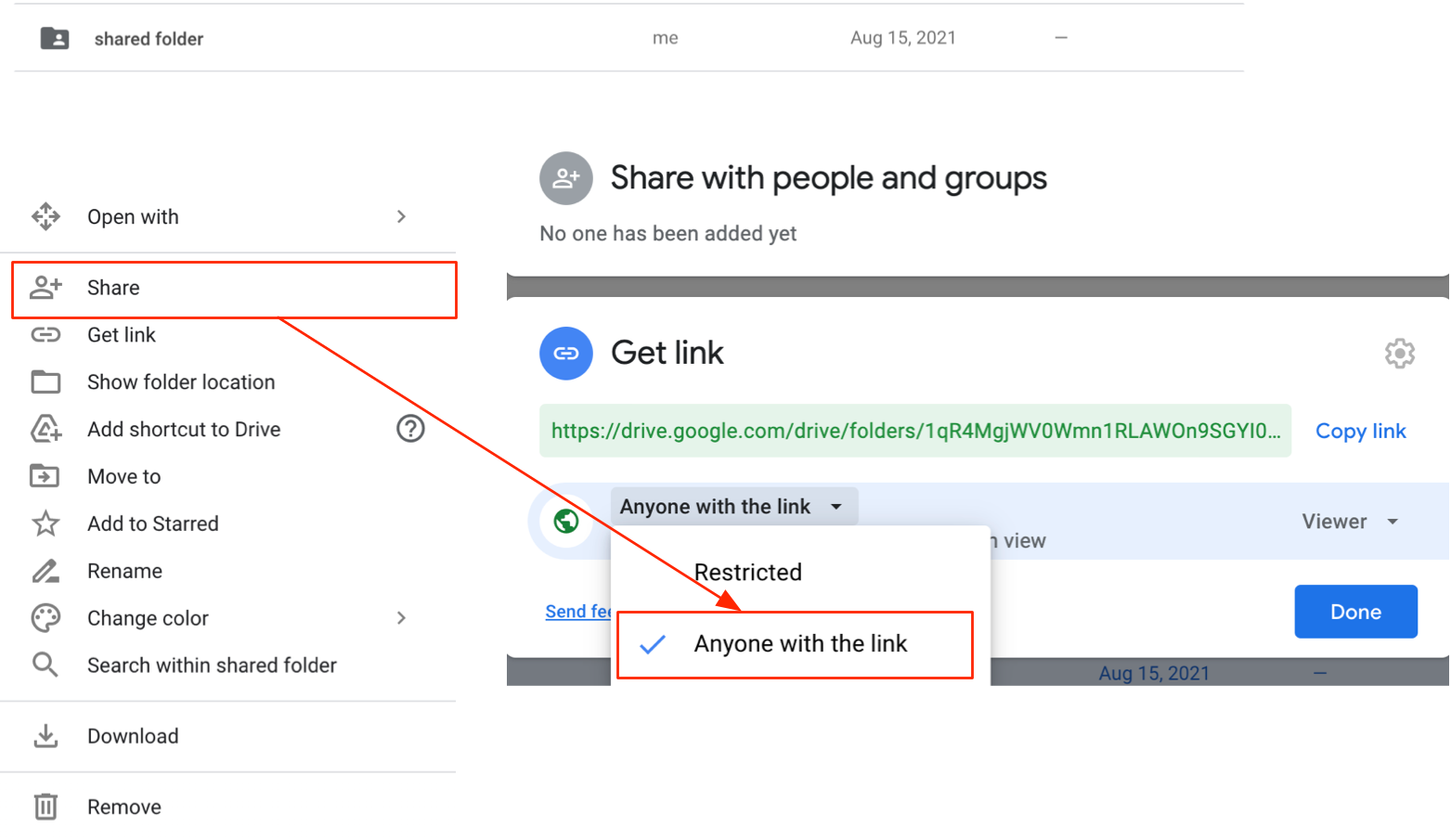

If you cannot access Google Drive, you can download our pretrained checkpoints, then unzip and load them manually. Model download link (extraction code: ABSA)
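Unzipping a manually downloaded checkpoint needs nothing beyond the standard library. In the sketch below, `unzip_checkpoint` is a hypothetical helper and both paths are placeholders that you would replace with your own download location.

```python
import zipfile
from pathlib import Path


def unzip_checkpoint(zip_path, target_dir):
    """Extract a downloaded checkpoint archive and return the target directory."""
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(target)
    return target
```

The returned directory can then be used wherever the examples in this document accept a local checkpoint in place of a checkpoint name.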

Because of limited resources, we do not provide a wide variety of checkpoints. We hope you will share your checkpoints with those who lack the resources to train their own models.

- Upload your zipped checkpoint to Google Drive in a shared folder.

- Register the checkpoint in the checkpoint_map, then make a pull request. We will update the checkpoint index as soon as we can. Thanks for your help!

"checkpoint name": {

"id": "your checkpoint link",

"model": "model name",

"dataset": "trained dataset",

"description": "trained equipment",

"version": "used pyabsa version",

"author": "name (email)"

}
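Before opening a pull request, you may want to check that your entry fills in every field of the template above. The following is a minimal sketch; `REQUIRED_FIELDS` and `missing_fields` are hypothetical names, and the field list is simply copied from the template rather than from any validation PyABSA itself performs.

```python
# Field names copied from the checkpoint_map template above.
REQUIRED_FIELDS = ("id", "model", "dataset", "description", "version", "author")


def missing_fields(entry):
    """Return the template fields that are absent or blank in `entry`."""
    return [
        field
        for field in REQUIRED_FIELDS
        if not str(entry.get(field, "")).strip()
    ]
```

An empty list means the entry has a non-blank value for every field in the template.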

import os

from pyabsa import APCCheckpointManager, ABSADatasetList

os.environ['PYTHONIOENCODING'] = 'UTF8'

sentiment_map = {0: 'Negative', 1: 'Neutral', 2: 'Positive', -999: ''}

sent_classifier = APCCheckpointManager.get_sentiment_classifier(checkpoint='dlcf-dca-bert1', #or set your local checkpoint

auto_device='cuda', # Use CUDA if available

sentiment_map=sentiment_map

)

# batch inference returns the results; set save_result=True to save them if necessary

inference_datasets = ABSADatasetList.Laptop14 # or set your local dataset

results = sent_classifier.batch_infer(target_file=inference_datasets,

print_result=True,

save_result=True,

ignore_error=True,

)
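The `sentiment_map` passed above translates numeric labels into readable names. The sketch below shows how such a map can be applied to a list of label ids. It illustrates the idea only and is not the library's internal code; `to_sentiment` is a hypothetical helper, and treating `-999` as "no reference label" is an assumption based on the empty string it maps to.

```python
sentiment_map = {0: "Negative", 1: "Neutral", 2: "Positive", -999: ""}


def to_sentiment(label, mapping):
    """Translate a numeric label; unknown labels fall back to their string form."""
    return mapping.get(label, str(label))


# Example label ids, including the -999 placeholder.
readable = [to_sentiment(label, sentiment_map) for label in [2, 0, 1, -999]]
```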

import os

from pyabsa import ABSADatasetList

from pyabsa import ATEPCCheckpointManager

os.environ['PYTHONIOENCODING'] = 'UTF8'

sentiment_map = {0: 'Negative', 1: "Neutral", 2: 'Positive', -999: ''}

aspect_extractor = ATEPCCheckpointManager.get_aspect_extractor(checkpoint='Laptop14', # or your local checkpoint

auto_device=True # False means load model on CPU

)

inference_dataset = ABSADatasetList.SemEval # or set your local dataset

atepc_result = aspect_extractor.extract_aspect(inference_source=inference_dataset,

save_result=True,

print_result=True, # print the result

pred_sentiment=True, # Predict the sentiment of extracted aspect terms

)

from pyabsa.functional import APCCheckpointManager

from pyabsa.functional import Trainer

from pyabsa.functional import APCConfigManager

from pyabsa.functional import ABSADatasetList

from pyabsa.functional import APCModelList

apc_config_english = APCConfigManager.get_apc_config_english()

apc_config_english.model = APCModelList.SLIDE_LCF_BERT

apc_config_english.evaluate_begin = 2

apc_config_english.similarity_threshold = 1

apc_config_english.max_seq_len = 80

apc_config_english.dropout = 0.5

apc_config_english.log_step = 5

apc_config_english.l2reg = 0.0001

apc_config_english.dynamic_truncate = True

apc_config_english.srd_alignment = True

# Make sure the checkpoint corresponds to the model being trained

checkpoint_path = APCCheckpointManager.get_checkpoint('slide-lcf-bert')

dataset_path = ABSADatasetList.SemEval #or set your local dataset

sent_classifier = Trainer(config=apc_config_english,

dataset=dataset_path,

from_checkpoint=checkpoint_path,

checkpoint_save_mode=1,

auto_device=True

)

More datasets are available at ABSADatasets.

- Laptop14

- Restaurant14

- Restaurant15

- Restaurant16

- Phone

- Car

- Camera

- Notebook

- MAMS

- Multilingual (The sum of the above datasets.)

- TShirt

- Television

You generally don't need to download the datasets yourself, as they are downloaded automatically.

In addition to the following models, we provide a template model involving LCF vec; you can develop your own model based on the LCF-APC model template or the LCF-ATEPC model template.

- LCF-ATEPC

- LCF-ATEPC-LARGE (Dual BERT)

- FAST-LCF-ATEPC

- LCFS-ATEPC

- LCFS-ATEPC-LARGE (Dual BERT)

- FAST-LCFS-ATEPC

- BERT-BASE

- SLIDE-LCF-BERT (Faster & Performs Better than LCF/LCFS-BERT)

- SLIDE-LCFS-BERT (Faster & Performs Better than LCF/LCFS-BERT)

- LCF-BERT (Reimplemented & Enhanced)

- LCFS-BERT (Reimplemented & Enhanced)

- FAST-LCF-BERT (Faster with a slight performance loss)

- FAST-LCFS-BERT (Faster with a slight performance loss)

- LCF-DUAL-BERT (Dual BERT)

- LCFS-DUAL-BERT (Dual BERT)

- BERT-BASE

- BERT-SPC

- LCA-Net

- DLCF-DCA-BERT *

- AOA_BERT

- ASGCN_BERT

- ATAE_LSTM_BERT

- Cabasc_BERT

- IAN_BERT

- LSTM_BERT

- MemNet_BERT

- MGAN_BERT

- RAM_BERT

- TD_LSTM_BERT

- TC_LSTM_BERT

- TNet_LF_BERT

We hope you will help us improve this project; your contributions are welcome. You can contribute in many ways, including:

- Share your custom dataset in PyABSA and ABSADatasets

- Integrate your models into PyABSA (you can share your models whether or not they are based on PyABSA; if you are interested, we will help you)

- Report bugs you find while using PyABSA or reviewing the code (PyABSA is an individual project driven by enthusiasm, so your help is needed)

- Give us advice about feature design or refactoring (you can suggest improvements to any feature)

- Correct or rewrite error messages and code comments (the comments were not written by a native English speaker; you can help us improve the documentation)

- Create an example script for a particular situation (such as specifying a SpaCy model, a pretrained BERT type, or some hyperparameters)

- Star this repository to keep it active

LCF is a simple and adoptive mechanism proposed for ABSA. Many models based on LCF have been proposed and have achieved SOTA performance. Developing your models based on LCF can significantly improve them. If you are looking for the original proposal of local context focus, please refer to the introduction of LCF. If you are looking for the original code of the LCF-related papers, please refer to LC-ABSA / LCF-ABSA or LCF-ATEPC.
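To give a feel for the mechanism, the sketch below computes per-token weights in the spirit of local context focus. It is a simplified illustration, not the code used by PyABSA or the original papers. It assumes the semantic-relative distance (SRD) of token i is |i - P_a| - floor(m / 2), where P_a is the centre position of the aspect and m its length, and that tokens with SRD above a threshold alpha are either masked out (the context-features dynamic mask variant, "cdm") or down-weighted in proportion to their distance (the dynamic weighting variant, "cdw"). The function names and the choice of an integer centre position are assumptions of this sketch.

```python
def semantic_relative_distances(seq_len, aspect_start, aspect_len):
    """SRD of every token position relative to the aspect (simplified)."""
    half = aspect_len // 2
    center = aspect_start + half  # assumed integer centre of the aspect
    return [abs(i - center) - half for i in range(seq_len)]


def lcf_weights(seq_len, aspect_start, aspect_len, alpha, mode="cdm"):
    """Per-token weights that would scale each token's feature vector."""
    if mode not in ("cdm", "cdw"):
        raise ValueError("mode must be 'cdm' or 'cdw'")
    weights = []
    for srd in semantic_relative_distances(seq_len, aspect_start, aspect_len):
        if srd <= alpha:
            weights.append(1.0)  # local context: features kept unchanged
        elif mode == "cdm":
            weights.append(0.0)  # distant context: masked out
        else:
            weights.append(1.0 - (srd - alpha) / seq_len)  # decays with distance
    return weights


# One-token aspect at position 3 in an 8-token sequence, threshold alpha = 1.
mask = lcf_weights(8, aspect_start=3, aspect_len=1, alpha=1, mode="cdm")
decay = lcf_weights(8, aspect_start=3, aspect_len=1, alpha=1, mode="cdw")
```

In a real model these weights would multiply the token representations produced by the encoder before they are combined with the global context features.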

This work builds on LC-ABSA/LCF-ABSA and LCF-ATEPC, as well as other impressive works such as PyTorch-ABSA and LCFS-BERT.

MIT

Thanks goes to these wonderful people (emoji key):

XuMayi 💻 |

YangHeng 📆 |

brtgpy 🔣 |

This project follows the all-contributors specification. Contributions of any kind welcome!