Semantics-Augmented Set Abstraction (SASA)

By Chen Chen, Zhe Chen, Jing Zhang, and Dacheng Tao

This repository is the code release of the paper SASA: Semantics-Augmented Set Abstraction for Point-based 3D Object Detection, accepted by AAAI 2022.

Introduction

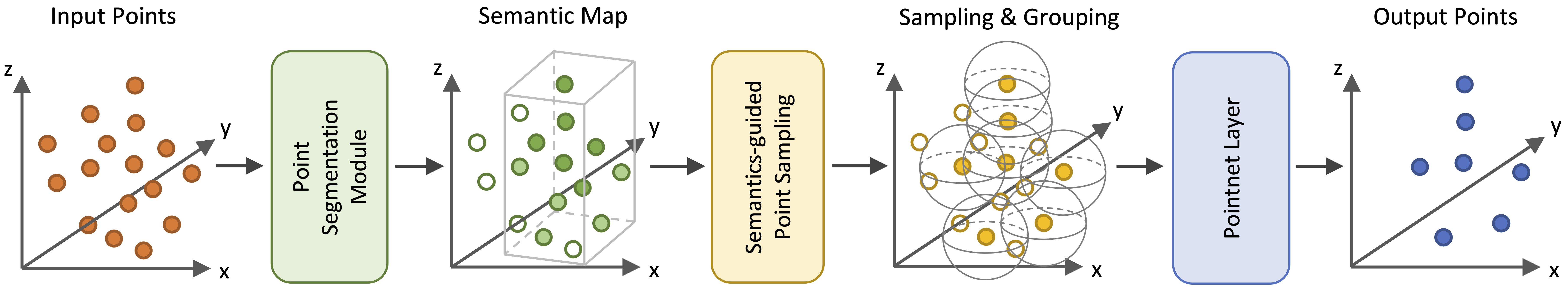

TL;DR. We develop a new set abstraction module named Semantics-Augmented Set Abstraction (SASA) for point-based 3D detectors. It substantially enhances feature learning by helping the extracted point representations focus on meaningful foreground regions.

Abstract. Although point-based networks have been shown to be accurate for 3D point cloud modeling, they still fall behind their voxel-based competitors in 3D detection. We observe that the prevailing set abstraction design for down-sampling points may retain too much unimportant background information, which can hurt feature learning for detecting objects. To tackle this issue, we propose a novel set abstraction method named Semantics-Augmented Set Abstraction (SASA). Technically, we first add a binary segmentation module as a side output to help identify foreground points. Based on the estimated point-wise foreground scores, we then propose a semantics-guided point sampling algorithm to help retain more important foreground points during down-sampling. In practice, SASA proves effective in identifying valuable points related to foreground objects and in improving feature learning for point-based 3D detection. Additionally, it is an easy-to-plug-in module that can boost various point-based detectors, including single-stage and two-stage ones. Extensive experiments validate the superiority of SASA, lifting point-based detection models to performance comparable to state-of-the-art voxel-based methods.
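The semantics-guided sampling idea from the abstract can be illustrated with a small pure-Python sketch. This is not the implementation shipped in this repository. The function name `semantics_guided_fps`, the `gamma` exponent, the rule of weighting each point's distance by its foreground score raised to `gamma`, and the choice of the highest-scoring point as the seed are all assumptions made for illustration.

```python
import math


def semantics_guided_fps(points, scores, num_samples, gamma=1.0):
    """Farthest point sampling with score-weighted distances (illustrative).

    points: list of (x, y, z) tuples.
    scores: per-point foreground scores in [0, 1].
    Returns the indices of the sampled points, in selection order.
    """
    n = len(points)
    if not 0 < num_samples <= n:
        raise ValueError("num_samples must be between 1 and len(points)")

    # Squared distance from each point to its nearest already-selected point.
    min_dist = [math.inf] * n
    # Assumption: seed the sampling with the highest-scoring point.
    current = max(range(n), key=lambda i: scores[i])
    selected = [current]
    chosen = {current}

    while len(selected) < num_samples:
        cx, cy, cz = points[current]
        best, best_value = -1, -1.0
        for i, (x, y, z) in enumerate(points):
            d = (x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2
            if d < min_dist[i]:
                min_dist[i] = d
            if i in chosen:
                continue
            # Assumed weighting: a high score boosts the effective distance,
            # so foreground points are more likely to survive down-sampling.
            value = (scores[i] ** gamma) * min_dist[i]
            if value > best_value:
                best, best_value = i, value
        current = best
        selected.append(current)
        chosen.add(current)
    return selected


if __name__ == "__main__":
    # Three low-score "background" points and three high-score "foreground" points.
    points = [(0, 0, 0), (4, 0, 0), (0, 4, 0), (2, 2, 0), (3, 2, 0), (2, 3, 0)]
    scores = [0.1, 0.1, 0.1, 0.9, 0.9, 0.9]
    print(semantics_guided_fps(points, scores, 3, gamma=1.0))
    print(semantics_guided_fps(points, scores, 3, gamma=0.0))
```

With `gamma=0.0` every weight becomes 1 and the sketch reduces to plain farthest point sampling, which makes it easy to compare the two behaviours on the same toy input.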

Main Results

Here we present experimental results evaluated on the KITTI validation set and the corresponding pretrained models.

| Method | Easy | Moderate | Hard | Download |
|---|---|---|---|---|
| 3DSSD | 91.53 | 83.12 | 82.07 | Google Drive |
| 3DSSD + SASA | 92.34 | 85.91 | 83.08 | Google Drive |
| PointRCNN | 91.80 | 82.35 | 80.21 | Google Drive |
| PointRCNN + SASA | 92.25 | 82.80 | 82.23 | Google Drive |
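To read the table as per-difficulty gains, the short sketch below subtracts each baseline row from its SASA counterpart. The numbers are copied from the table above; the `RESULTS` dictionary and the `gains` helper are hypothetical names used only for this illustration.

```python
# (Easy, Moderate, Hard) values copied from the results table.
RESULTS = {
    "3DSSD": {"baseline": (91.53, 83.12, 82.07), "sasa": (92.34, 85.91, 83.08)},
    "PointRCNN": {"baseline": (91.80, 82.35, 80.21), "sasa": (92.25, 82.80, 82.23)},
}


def gains(baseline, sasa):
    """Per-difficulty improvement of the SASA variant over its baseline."""
    return tuple(round(s - b, 2) for b, s in zip(baseline, sasa))


if __name__ == "__main__":
    for method, rows in RESULTS.items():
        easy, moderate, hard = gains(rows["baseline"], rows["sasa"])
        print(f"{method}: easy +{easy}, moderate +{moderate}, hard +{hard}")
```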

Getting Started

Requirements

- Linux

- Python >= 3.6

- PyTorch >= 1.3

- CUDA >= 9.0

- CMake >= 3.13.2

- spconv v1.2
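Before installing, it can help to confirm that the interpreter and PyTorch meet the minimum versions listed above. The following is a minimal sketch, not part of this repository: `version_tuple`, `meets_minimum`, and `check_environment` are hypothetical helpers, and the script relies on `importlib.metadata`, which is only in the standard library from Python 3.8 onward. CUDA, CMake, and spconv are not checked here.

```python
import re
import sys
from importlib import metadata  # standard library from Python 3.8 onward


def version_tuple(text):
    """Turn '1.7.0+cu101' into (1, 7, 0); stop at the first non-numeric part."""
    parts = []
    for token in text.split("+")[0].split("."):
        match = re.match(r"\d+", token)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Compare versions numerically, so that '1.10' counts as newer than '1.3'."""
    return version_tuple(installed) >= version_tuple(minimum)


def check_environment(min_python="3.6", min_torch="1.3"):
    """Return a list of problems found; an empty list means both checks passed."""
    problems = []
    python_version = ".".join(str(n) for n in sys.version_info[:3])
    if not meets_minimum(python_version, min_python):
        problems.append(f"Python {python_version} is older than {min_python}")
    try:
        torch_version = metadata.version("torch")
    except metadata.PackageNotFoundError:
        problems.append("PyTorch is not installed")
    else:
        if not meets_minimum(torch_version, min_torch):
            problems.append(f"PyTorch {torch_version} is older than {min_torch}")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print(problem)
```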

Installation

a. Clone this repository.

git clone https://github.com/blakechen97/SASA.git

cd SASA

b. Install spconv library.

git clone https://github.com/traveller59/spconv.git

cd spconv

git checkout v1.2.1

git submodule update --init --recursive

python setup.py bdist_wheel

pip install ./dist/spconv-1.2.1-cp36-cp36m-linux_x86_64.whl # wheel file name may be different

cd ..

c. Install pcdet toolbox.

pip install -r requirements.txt

python setup.py develop

Data Preparation

a. Prepare datasets.

SASA

├── data

│ ├── kitti

│ │ ├── ImageSets

│ │ ├── training

│ │ │ ├── calib & velodyne & label_2 & image_2 & (optional: planes)

│ │ ├── testing

│ │ │ ├── calib & velodyne & image_2

│ ├── nuscenes

│ │ ├── v1.0-trainval (or v1.0-mini if you use mini)

│ │ │ ├── samples

│ │ │ ├── sweeps

│ │ │ ├── maps

│ │ │ ├── v1.0-trainval

├── pcdet

├── tools
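A quick way to catch a misplaced folder before generating the data infos is to check the KITTI part of the layout programmatically. The sketch below is an illustration only: `KITTI_REQUIRED` and `missing_kitti_dirs` are hypothetical names, the directory list is taken from the tree above, and the optional `planes` folder is deliberately left out.

```python
from pathlib import Path

# Directories expected under data/kitti, following the tree above.
KITTI_REQUIRED = [
    "ImageSets",
    "training/calib",
    "training/velodyne",
    "training/label_2",
    "training/image_2",
    "testing/calib",
    "testing/velodyne",
    "testing/image_2",
]


def missing_kitti_dirs(repo_root):
    """Return the expected KITTI sub-directories that do not exist yet."""
    base = Path(repo_root) / "data" / "kitti"
    return [rel for rel in KITTI_REQUIRED if not (base / rel).is_dir()]


if __name__ == "__main__":
    # Assumes the script is run from the repository root.
    missing = missing_kitti_dirs(".")
    if missing:
        print("Missing KITTI directories:")
        for rel in missing:
            print("  data/kitti/" + rel)
    else:
        print("KITTI layout looks complete.")
```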

b. Generate data infos.

# KITTI dataset

python -m pcdet.datasets.kitti.kitti_dataset create_kitti_infos tools/cfgs/dataset_configs/kitti_dataset.yaml

# nuScenes dataset

pip install nuscenes-devkit==1.0.5

python -m pcdet.datasets.nuscenes.nuscenes_dataset --func create_nuscenes_infos \

--cfg_file tools/cfgs/dataset_configs/nuscenes_dataset.yaml \

--version v1.0-trainval

Training

- Train with a single GPU:

python train.py --cfg_file ${CONFIG_FILE}

- Train with multiple GPUs:

sh scripts/dist_train.sh ${NUM_GPUS} --cfg_file ${CONFIG_FILE}

Testing

- Test a pretrained model with a single GPU:

python test.py --cfg_file ${CONFIG_FILE} --ckpt ${CKPT}

Please check GETTING_STARTED.md to learn more about using OpenPCDet.

Acknowledgement

This project is built with OpenPCDet (version 0.3), a powerful toolbox for LiDAR-based 3D object detection. Please refer to OpenPCDet.md and the official GitHub repository for more information.

License

This project is released under the Apache 2.0 license.

Citation

If you find this project useful in your research, please consider citing:

@article{chen2022sasa,

title={SASA: Semantics-Augmented Set Abstraction for Point-based 3D Object Detection},

author={Chen, Chen and Chen, Zhe and Zhang, Jing and Tao, Dacheng},

journal={arXiv preprint arXiv:2201.01976},

year={2022}

}