- Python environment 3.6. Note: the requirements could not be installed with Python 3.6 at all; try Python 3.7 instead.

  ```
  conda env create -f environment.yaml
  conda activate makeittalk
  ```

- ffmpeg (https://ffmpeg.org/download.html)

  ```
  conda install ffmpeg
  ```

- `winehq-stable` for cartoon face warping in Ubuntu (https://wiki.winehq.org/Ubuntu). Tested on Ubuntu 16.04, wine==5.0.3.

  ```
  sudo apt-get update
  sudo apt install software-properties-common
  sudo dpkg --add-architecture i386
  wget -nc https://dl.winehq.org/wine-builds/winehq.key
  sudo apt-key add winehq.key
  sudo apt-add-repository 'deb https://dl.winehq.org/wine-builds/ubuntu/ xenial main'
  sudo apt update
  sudo apt install --install-recommends winehq-stable
  ```

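If the final install step fails, try `sudo apt-get install wine` instead, or switch to the Tsinghua mirror. Note that switching to the Tsinghua mirror may cause public-key errors, which have no known fix.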
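A quick way to confirm these prerequisites from inside the activated environment is sketched below. It is not part of MakeItTalk: `missing_prerequisites` is a hypothetical helper that compares the running interpreter against Python 3.7 (per the note about 3.6 above) and uses `shutil.which` to look for `ffmpeg` and, optionally, `wine` on `PATH`.

```python
import shutil
import sys


def missing_prerequisites(need_wine=False, which=shutil.which, version=None):
    """Return descriptions of missing prerequisites (hypothetical helper).

    `which` and `version` can be replaced with fakes for testing.
    """
    if version is None:
        version = sys.version_info
    problems = []
    # The note above reports that the requirements fail to install on 3.6.
    if tuple(version[:2]) < (3, 7):
        problems.append("Python older than 3.7: %d.%d" % tuple(version[:2]))
    if which("ffmpeg") is None:
        problems.append("ffmpeg not found on PATH")
    # wine is only needed for cartoon face warping on Ubuntu.
    if need_wine and which("wine") is None:
        problems.append("wine not found on PATH")
    return problems


if __name__ == "__main__":
    for problem in missing_prerequisites(need_wine=sys.platform.startswith("linux")):
        print("missing:", problem)
```

Injecting `which` and `version` keeps the check testable without touching the real system.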

Download the following pre-trained models to the `examples/ckpt` folder to test your own animation.

You can wait until the program reports a missing model before placing it, but you will probably need all of the models below.

| Model | Link to the model |
|---|---|
| Voice Conversion | Link |
| Speech Content Module | Link |
| Speaker-aware Module | Link |
| Image2Image Translation Module | Link |
| Non-photorealistic Warping (.exe) | Link |
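Rather than waiting for a missing-model error, you can check the folder up front. This is a minimal sketch, not part of the repository: `missing_checkpoints` is a hypothetical helper, and because this README does not list the checkpoint filenames, you must pass the names of the files you actually downloaded.

```python
import sys
from pathlib import Path


def missing_checkpoints(expected, ckpt_dir="examples/ckpt"):
    """Return the expected filenames that are not present in ckpt_dir."""
    ckpt_dir = Path(ckpt_dir)
    return [name for name in expected if not (ckpt_dir / name).is_file()]


if __name__ == "__main__":
    # Pass the downloaded model filenames as command-line arguments.
    missing = missing_checkpoints(sys.argv[1:])
    if missing:
        print("Missing from examples/ckpt:", ", ".join(missing))
    else:
        print("All listed checkpoints found.")
```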

The frame rate can be specified in `src/approaches/train_image_translation.py`.

- Download the pre-trained embedding [here] and save it to the `examples/dump` folder.

- Crop your portrait image to 256x256 and put it under the `examples` folder in `.jpg` format. Make sure the head is roughly in the middle of the image (check the existing examples for reference).
- Put the test audio file under the `examples` folder as well, named `tmp.wav`.
- Animate!

  ```
  python main_end2end.py --jpg Obama_256.jpg
  ```

- Use the additional args `--amp_lip_x <x> --amp_lip_y <y> --amp_pos <pos>` to amplify lip motion (in the x/y-axis direction) and head motion displacements. The default values are `<x>=2., <y>=2., <pos>=.5`.
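The preparation steps above can be scripted. The sketch below is illustrative and not part of the repository. `center_square_box` computes a centered square crop (it assumes the head is already near the image center, so compare the result with the existing examples), `crop_portrait` applies it with Pillow, `ffmpeg_to_wav_args` builds an `ffmpeg` call that converts an audio file to `examples/tmp.wav`, and `end2end_command` assembles the documented `main_end2end.py` flags. All helper names, the script name, and the output name `my_face.jpg` are hypothetical.

```python
import subprocess
import sys
from pathlib import Path


def center_square_box(width, height):
    """Return the largest centered square as a (left, upper, right, lower) box."""
    side = min(width, height)
    left = (width - side) // 2
    upper = (height - side) // 2
    return (left, upper, left + side, upper + side)


def crop_portrait(src, dst, size=256):
    """Center-crop src to a square, resize it to size x size, and save dst as JPEG."""
    from PIL import Image  # Pillow, imported lazily so the other helpers work without it

    with Image.open(src) as img:
        rgb = img.convert("RGB")  # JPEG has no alpha channel
    square = rgb.crop(center_square_box(*rgb.size))
    square.resize((size, size), Image.LANCZOS).save(dst, "JPEG")


def ffmpeg_to_wav_args(src, dst="examples/tmp.wav"):
    """Arguments for an ffmpeg call that converts src to WAV, overwriting dst."""
    return ["ffmpeg", "-y", "-i", str(src), str(dst)]


def end2end_command(jpg, amp_lip_x=2.0, amp_lip_y=2.0, amp_pos=0.5):
    """Build a main_end2end.py command line using the flags documented above."""
    return [
        "python", "main_end2end.py", "--jpg", jpg,
        "--amp_lip_x", str(amp_lip_x),
        "--amp_lip_y", str(amp_lip_y),
        "--amp_pos", str(amp_pos),
    ]


if __name__ == "__main__":
    # Usage: python prepare_inputs.py <photo> <audio>  (hypothetical script name)
    if len(sys.argv) == 3:
        crop_portrait(sys.argv[1], Path("examples") / "my_face.jpg")
        subprocess.run(ffmpeg_to_wav_args(sys.argv[2]), check=True)
        subprocess.run(end2end_command("my_face.jpg"), check=True)
```

The default amplification values mirror the documented defaults, so `end2end_command("Obama_256.jpg")` is equivalent to the plain command above.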

- Put test audio files under the `examples` folder as well, in `.wav` format.
- Animate one of the existing puppets:

| Puppet Name | wilk | smiling_person | sketch | color | cartoonM | danbooru1 |
|---|---|---|---|---|---|---|

```
python main_end2end_cartoon.py --jpg <cartoon_puppet_name_with_extension> --jpg_bg <puppet_background_with_extension>
```

- `--jpg_bg` takes a same-size image as the background for the animation, such as the puppet's body or an overall fixed background image. If you want to use a background, make sure the puppet face image (i.e. the `--jpg` image) is in `png` format and is transparent in the non-face area. If you don't need any background, still create a same-size image (e.g. a pure white image) to fill the argument.

- Use the additional args `--amp_lip_x <x> --amp_lip_y <y> --amp_pos <pos>` to amplify lip motion (in the x/y-axis direction) and head motion displacements. The default values are `<x>=2., <y>=2., <pos>=.5`.
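The `--jpg_bg` rules above (same-size background; a transparent `png` face when a real background is used) can be checked before running, and a white placeholder background created with Pillow. This is a hypothetical sketch, not code from the repository: `background_problems`, `make_white_background`, and `cartoon_command` are illustrative names, and treating an image mode ending in `A` (e.g. `RGBA`) as "has transparency" is a simplifying assumption that ignores palette images with a transparency key.

```python
import sys


def background_problems(face_size, face_mode, bg_size, face_suffix, uses_background=True):
    """List violations of the --jpg / --jpg_bg rules described above."""
    problems = []
    if face_size != bg_size:
        problems.append("background size %s != face size %s" % (bg_size, face_size))
    # Only a real background needs a transparent PNG face.
    if uses_background and (face_suffix.lower() != ".png" or not face_mode.upper().endswith("A")):
        problems.append("face image should be a .png with an alpha channel")
    return problems


def make_white_background(face_path, bg_path):
    """Save a pure white image with the same size as the face image."""
    from PIL import Image  # Pillow, imported lazily

    with Image.open(face_path) as face:
        size = face.size
    Image.new("RGB", size, "white").save(bg_path)
    return size


def cartoon_command(jpg, jpg_bg, amp_lip_x=2.0, amp_lip_y=2.0, amp_pos=0.5):
    """Build a main_end2end_cartoon.py command line with the documented flags."""
    return [
        "python", "main_end2end_cartoon.py",
        "--jpg", jpg, "--jpg_bg", jpg_bg,
        "--amp_lip_x", str(amp_lip_x),
        "--amp_lip_y", str(amp_lip_y),
        "--amp_pos", str(amp_pos),
    ]


if __name__ == "__main__":
    # Usage: python make_bg.py <face_image> <background_out>  (hypothetical script name)
    if len(sys.argv) == 3:
        print("created white background of size", make_white_background(sys.argv[1], sys.argv[2]))
```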

- Put the cartoon image under `examples_cartoon`.

- Install the conda environment `foa_env_py2` (tested on Python 2) for Face-of-art (https://github.com/papulke/face-of-art). Download the pre-trained weight here and put it under `examples/ckpt`. Activate the environment:

  ```
  source activate foa_env_py2
  ```

- Create the files needed to animate your cartoon image, i.e. `<your_puppet>_open_mouth.txt`, `<your_puppet>_close_mouth.txt`, `<your_puppet>_open_mouth_norm.txt`, `<your_puppet>_scale_shift.txt`, and `<your_puppet>_delauney.txt`:

  ```
  python main_gen_new_puppet.py <your_puppet_with_file_extension>
  ```

-

in details, it takes 3 steps

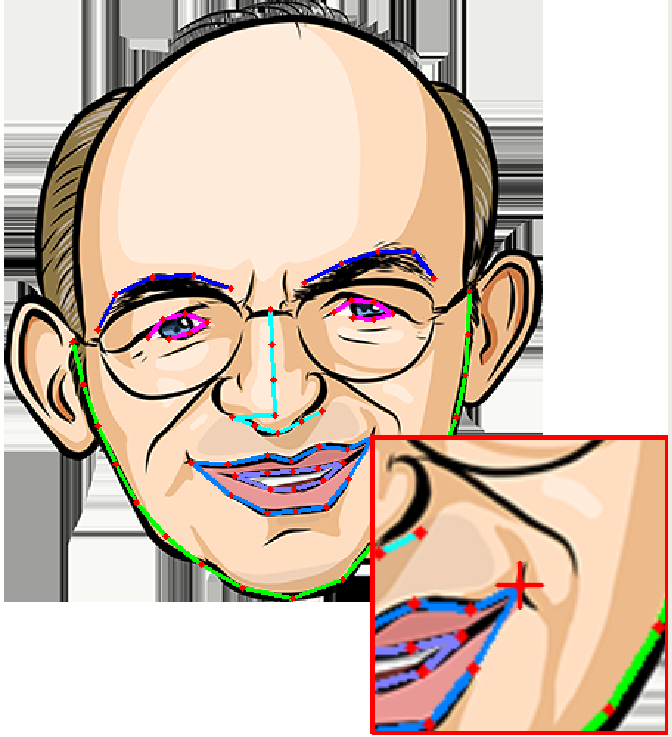

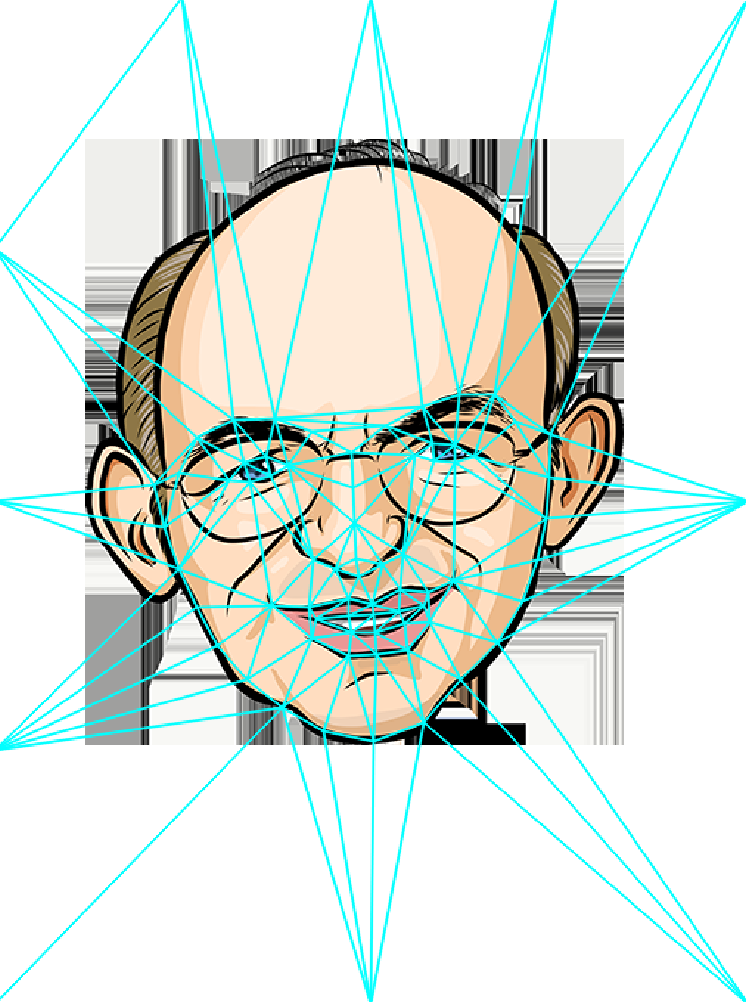

- Face-of-art automatic cartoon landmark detection.

- If it's wrong or not accurate, you can use our tool to drag and refine the landmarks.

- Estimate the closed mouth landmarks to serve as network input.

- Delauney triangulate the image with landmarks.

-

check puppet name

smiling_person_example.pngfor an example.

| Landmark Adjustment Tool | Closed lips estimation | Delaunay Triangulation |
|---|---|---|
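To make the triangulation step concrete, here is a self-contained Bowyer-Watson Delaunay triangulation of 2-D landmark points. It is a generic textbook sketch, not the repository's implementation (which this README does not describe); in practice a library routine such as `scipy.spatial.Delaunay` would normally be used. It assumes distinct points, and returning triangles as sorted index triples is an illustrative choice; the actual format of `<your_puppet>_delauney.txt` is not documented here.

```python
def circumcircle_contains(a, b, c, p, eps=1e-9):
    """True if point p lies strictly inside the circumcircle of triangle abc."""
    ax, ay = a
    bx, by = b
    cx, cy = c
    d = 2.0 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    if d == 0.0:  # degenerate (collinear) triangle
        return False
    a2, b2, c2 = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    r2 = (ax - ux) ** 2 + (ay - uy) ** 2
    return (p[0] - ux) ** 2 + (p[1] - uy) ** 2 < r2 - eps


def delaunay(points):
    """Bowyer-Watson triangulation; returns triangles as sorted index triples."""
    n = len(points)
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    span = max(max(xs) - min(xs), max(ys) - min(ys)) or 1.0
    mx, my = (min(xs) + max(xs)) / 2.0, (min(ys) + max(ys)) / 2.0
    # A "super triangle" that contains every landmark.
    pts = list(points) + [
        (mx - 20 * span, my - span),
        (mx, my + 20 * span),
        (mx + 20 * span, my - span),
    ]
    triangles = [(n, n + 1, n + 2)]
    for i in range(n):
        p = pts[i]
        # Triangles whose circumcircle contains the new point must be replaced.
        bad = [t for t in triangles
               if circumcircle_contains(pts[t[0]], pts[t[1]], pts[t[2]], p)]
        # Edges used by exactly one bad triangle bound the polygonal hole.
        edge_count = {}
        for a, b, c in bad:
            for e in ((a, b), (b, c), (c, a)):
                key = tuple(sorted(e))
                edge_count[key] = edge_count.get(key, 0) + 1
        triangles = [t for t in triangles if t not in bad]
        triangles += [(u, v, i) for (u, v), k in edge_count.items() if k == 1]
    # Drop every triangle that touches a super-triangle vertex.
    return sorted(tuple(sorted(t)) for t in triangles if max(t) < n)
```

For example, `delaunay([(0, 0), (4, 0), (0, 4), (1, 1)])` returns the three triangles that share the interior point: `[(0, 1, 3), (0, 2, 3), (1, 2, 3)]`.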

Todo...

- Create the dataset root directory `<root_dir>`.
- Dataset: download the preprocessed dataset [here] and put it under `<root_dir>/dump`.
- Train script: run the script below. Models will be saved in `<root_dir>/ckpt/<train_instance_name>`.

  ```
  python main_train_content.py --train --write --root_dir <root_dir> --name <train_instance_name>
  ```
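The three training steps can be wrapped in a small helper. This is a minimal sketch, not part of the repository: `prepare_training` is a hypothetical name. It creates `<root_dir>`, refuses to continue if `<root_dir>/dump` is missing, and returns the documented `main_train_content.py` command together with the directory where checkpoints are expected, `<root_dir>/ckpt/<train_instance_name>`.

```python
import subprocess
import sys
from pathlib import Path


def prepare_training(root_dir, name):
    """Create root_dir, verify the dataset location, and build the train command."""
    root = Path(root_dir)
    root.mkdir(parents=True, exist_ok=True)
    dump = root / "dump"
    if not dump.is_dir():
        raise FileNotFoundError("put the preprocessed dataset under %s" % dump)
    command = [
        "python", "main_train_content.py", "--train", "--write",
        "--root_dir", str(root), "--name", name,
    ]
    # Checkpoints are saved here according to the steps above.
    return command, root / "ckpt" / name


if __name__ == "__main__":
    # Usage: python train_content.py <root_dir> <train_instance_name>  (hypothetical script name)
    if len(sys.argv) == 3:
        cmd, ckpt_dir = prepare_training(sys.argv[1], sys.argv[2])
        print("checkpoints will be written to", ckpt_dir)
        subprocess.run(cmd, check=True)
```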

Todo...

Todo...

We would like to thank Timothy Langlois for the narration, and Kaizhi Qian for the help with the voice conversion module. We thank Jakub Fiser for implementing the real-time GPU version of the triangle morphing algorithm. We thank Daichi Ito for sharing the caricature image and Dave Werner for Wilk, the gruff but ultimately lovable puppet.

This research is partially funded by NSF (EAGER-1942069) and a gift from Adobe. Our experiments were performed in the UMass GPU cluster obtained under the Collaborative Fund managed by the MassTech Collaborative.