Is "ssd_mobilenet_v2_fpnlite" not supported?

Kim-Dae-Ho opened this issue

Hello.

I trained the following models, converted them to tflite, and put them into Unity TensorFlow.

ssd_mobilenet_v2_quantized only runs on TensorFlow 1.15, so I trained it in that environment.

This model works well in Unity TensorFlow even when converted to tflite.

ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8 only runs in the TensorFlow 2.x environment, so I trained it there.

This model converts to tflite, but when I put it into Unity TensorFlow, the console window displays "Failed TensorflowLite Operation" in red and object detection does not work.

Does the tf-lite-unity-sample project not support the ssd_mobilenet_v2_fpnlite series, which are models dedicated to TensorFlow 2.x?

Hi @Kim-Dae-Ho, could you share the link to the model file?

Also please refer to the similar issues, such as

#237 (comment)

The SSD sample only supports the quantized int8 model. You need to modify the code a bit to run the float16 or float32 model.

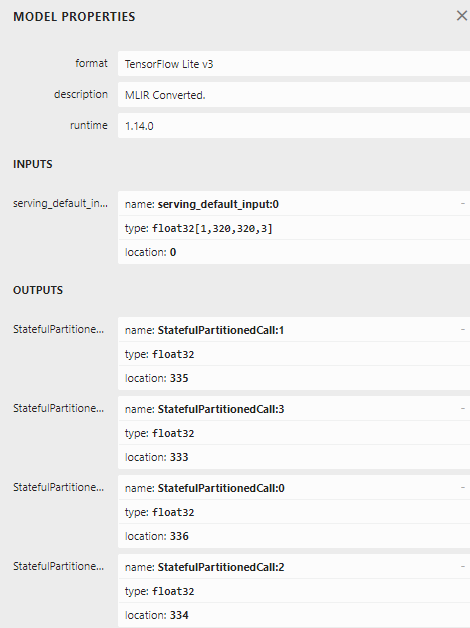

After converting ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8 to tflite, I checked with Netron.

Both INPUTS and OUTPUTS came out as float32.

When converting to tflite, it seems that the type was forcibly converted to float32.

In my opinion, ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8 does not seem to be a quantized model.

If so, is it not possible to use this model because it is not an int8 model?

On the other hand, ssd_mobilenet_v2_quantized has uint8 INPUTS. Its OUTPUTS came out as float32, but it works very well.
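For anyone who wants to check the tensor types without Netron, here is a minimal sketch using the TensorFlow Lite Python interpreter. It assumes TensorFlow is installed; the helper names `dtype_name`, `summarize_io`, and `load_io_details` are made up for this example and are not part of any library.

```python
def dtype_name(dtype):
    """Return a readable name such as 'float32' for a NumPy scalar type."""
    return getattr(dtype, "__name__", str(dtype))


def summarize_io(input_details, output_details):
    """Map each tensor name to its dtype name, for inputs and outputs."""
    return {
        "inputs": {d["name"]: dtype_name(d["dtype"]) for d in input_details},
        "outputs": {d["name"]: dtype_name(d["dtype"]) for d in output_details},
    }


def load_io_details(model_path):
    """Read input/output tensor details from a .tflite file."""
    # Imported lazily so the helpers above can be used without TensorFlow.
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    return interpreter.get_input_details(), interpreter.get_output_details()
```

Calling `summarize_io(*load_io_details("model.tflite"))` (the file name is a placeholder) should list float32 inputs for the fpnlite model described above and uint8 inputs for ssd_mobilenet_v2_quantized.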

You said that the code needs a small modification to run the float16 or float32 model. Does that mean modifying the code of this Unity tf-lite-unity-sample?

Or do you mean that we need to modify the tflite conversion method?

int8 is hardcoded in the C# code here: you need to modify the code to support it.
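To illustrate why a hardcoded 8-bit input does not fit a float32 model, here is a rough sketch in Python rather than the sample's C#. It is not the sample's actual code. The function names are hypothetical, and the `mean` and `std` values of 127.5 (scaling pixels to [-1, 1]) are an assumption that should be checked against how the model was exported.

```python
import struct


def prepare_uint8_input(pixels):
    """Quantized model: pass 0..255 channel values through as raw bytes."""
    return bytes(pixels)


def prepare_float32_input(pixels, mean=127.5, std=127.5):
    """Float model: scale each channel value, then pack as little-endian float32.

    mean/std are assumed values, not taken from the sample project.
    """
    scaled = [(p - mean) / std for p in pixels]
    return struct.pack("<%df" % len(scaled), *scaled)
```

For the same image, the float32 buffer is four times the size of the uint8 buffer (4 bytes per channel value instead of 1), so the input buffer type and size on the Unity side have to change along with the value scaling.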

After changing how the OUTPUTS of the tflite model are converted and modifying the unity-tensorflowlite C# script as you suggested, the SSD_mobilenet_v2 fpnlite 320 model seems to work well.

Thank you.

Thanks to you, I was able to gain an understanding of the neural network structure of the model through this opportunity.

Have a pleasant time.

@aptx4850 could you please provide details of how you resolved the issue?