A PyTorch implementation of the paper Specifying Object Attributes and Relations in Interactive Scene Generation

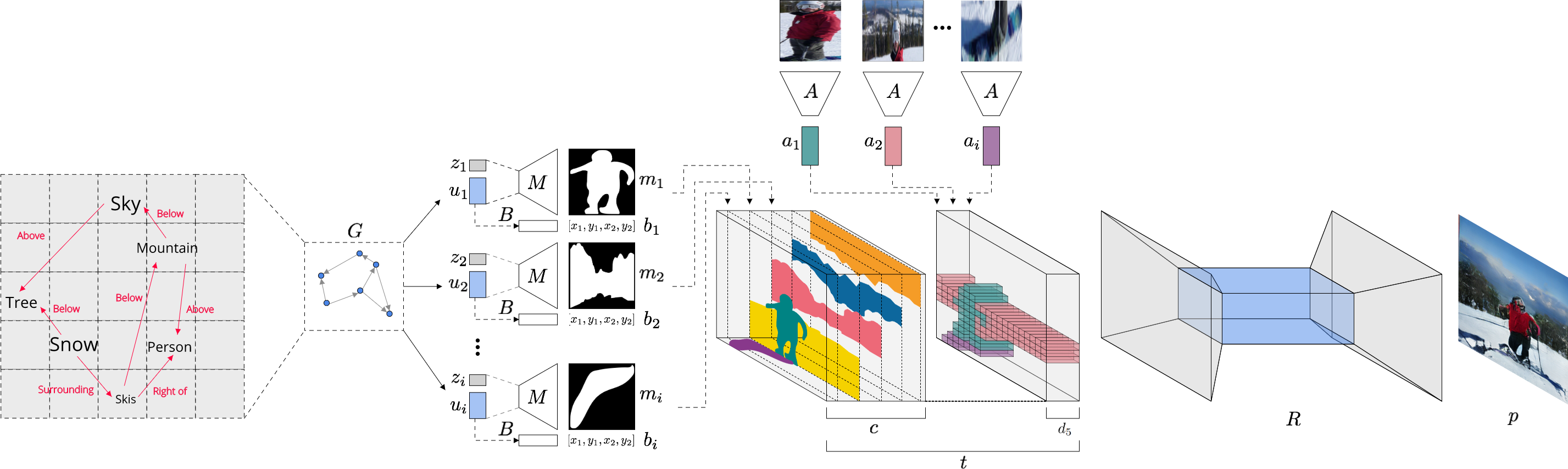

Specifying Object Attributes and Relations in Interactive Scene Generation

Oron Ashual¹, Lior Wolf¹,²

¹ Tel-Aviv University, ² Facebook AI Research

The IEEE International Conference on Computer Vision (ICCV), 2019, (Oral)

All code was developed and tested on Ubuntu 18.04 with Python 3.6 (Anaconda) and PyTorch 1.0.

conda create -n scene_generation python=3.7

conda activate scene_generation

cd ~

git clone https://github.com/ashual/scene_generation.git

cd scene_generation

conda install --file requirements.txt -c conda-forge -c pytorch

- Install a PyTorch version that matches your CUDA toolkit
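A quick way to confirm that the installed PyTorch build can see your GPU is a check like the one below. This is a minimal sketch, not part of the repository: the helper name `describe_torch_cuda` is hypothetical, and it only relies on standard `torch` attributes (`torch.__version__`, `torch.version.cuda`, `torch.cuda.is_available`, `torch.cuda.get_device_name`).

```python
import importlib.util


def describe_torch_cuda():
    """Return a one-line summary of the local PyTorch/CUDA setup.

    Hypothetical helper for illustration; it is not part of this repository.
    """
    # Avoid an ImportError if PyTorch has not been installed yet.
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed in this environment"

    import torch

    if not torch.cuda.is_available():
        return "PyTorch {} is installed, but no CUDA device is visible".format(
            torch.__version__
        )

    # torch.version.cuda is the CUDA version the PyTorch binary was built against.
    return "PyTorch {} built for CUDA {}, device 0: {}".format(
        torch.__version__, torch.version.cuda, torch.cuda.get_device_name(0)
    )


if __name__ == "__main__":
    print(describe_torch_cuda())
```

If the summary reports that no CUDA device is visible, the PyTorch build and the installed CUDA toolkit or driver probably do not match.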

Note: we did not train our models on the COCO panoptic dataset; the coco_panoptic.py code is provided for the sake of the community only.

cd ~

git clone https://github.com/cocodataset/cocoapi.git

cd cocoapi/PythonAPI/

python setup.py install

cd ~/scene_generation

python train.py

python scripts/encode_features --checkpoint TRAINED_MODEL_CHECKPOINT

python scripts/sample_images.py --checkpoint TRAINED_MODEL_CHECKPOINT --batch_size 32 --output_dir OUTPUT_DIR

Download these files into models/

The GUI was built as a proof of concept (POC). Use it at your own risk:

python scripts/gui/simple-server.py --checkpoint YOUR_MODEL_CHECKPOINT --output_dir [DIR_NAME] --draw_scene_graphs 0

Results were measured by sampling images from the validation set and then running these 3 official scripts:

- FID - https://github.com/bioinf-jku/TTUR (TensorFlow implementation)

- Inception - https://github.com/openai/improved-gan/blob/master/inception_score/model.py (TensorFlow implementation)

- Diversity - https://github.com/richzhang/PerceptualSimilarity (PyTorch implementation)

- Accuracy - Training code is attached: train_accuracy_net.py. A trained model is provided. Adding the argument --accuracy_model_path MODEL_PATH will output the accuracy of the objects.

Run this command

python scripts/sample_images.py --checkpoint TRAINED_MODEL_CHECKPOINT --output_dir OUTPUT_DIR

with these arguments:

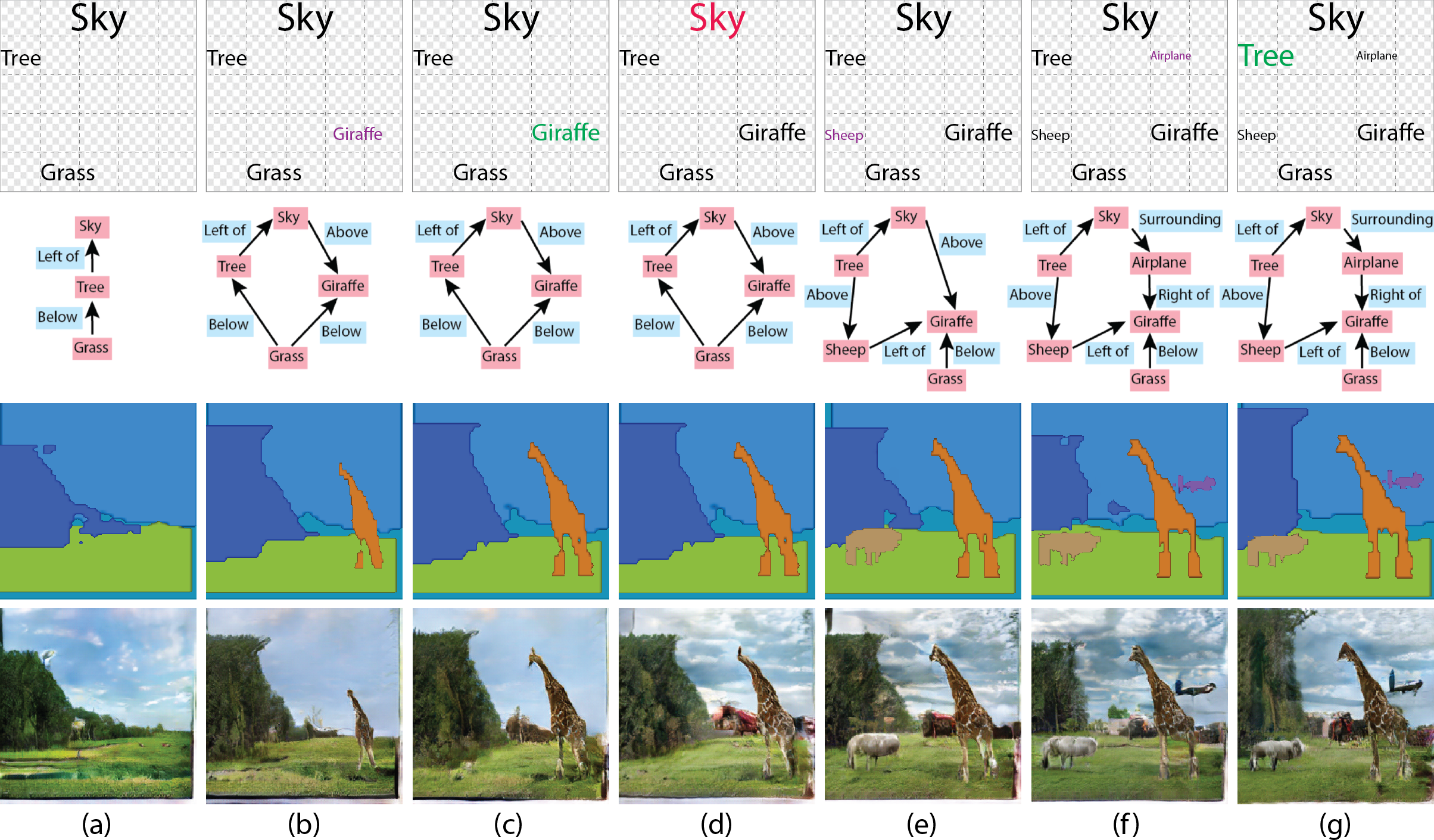

- (c) - Ground truth layout: --use_gt_boxes 1 --use_gt_masks 1

- (d) - Ground truth location attributes: --use_gt_attr 1

- (e) - Ground truth appearance attributes: --use_gt_textures 1

- (f) - Scene Graph only - No extra attributes needed
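The four variants above differ only in the flags appended to the sampling command, so they can be generated from one table. The sketch below is an illustration, not a script shipped with this repository: the flags are copied from the list above, while `VARIANT_FLAGS`, `build_sample_command`, and the per-variant output sub-directories are hypothetical names chosen here.

```python
import shlex

# Extra flags per figure panel, copied from the list above.
VARIANT_FLAGS = {
    "c": ["--use_gt_boxes", "1", "--use_gt_masks", "1"],  # ground truth layout
    "d": ["--use_gt_attr", "1"],                          # ground truth location attributes
    "e": ["--use_gt_textures", "1"],                      # ground truth appearance attributes
    "f": [],                                              # scene graph only
}


def build_sample_command(variant, checkpoint, output_dir):
    """Return the sample_images.py command for one variant as an argument list."""
    base = [
        "python", "scripts/sample_images.py",
        "--checkpoint", checkpoint,
        "--output_dir", output_dir,
    ]
    return base + VARIANT_FLAGS[variant]


if __name__ == "__main__":
    for variant in sorted(VARIANT_FLAGS):
        # Writing each variant to its own sub-directory is an assumption of this sketch.
        cmd = build_sample_command(
            variant, "TRAINED_MODEL_CHECKPOINT", "OUTPUT_DIR/" + variant
        )
        # Quote each argument so the printed line can be pasted into a shell.
        print(" ".join(shlex.quote(arg) for arg in cmd))
```

Each printed line can be run from the repository root; keeping the variants in separate output directories avoids one run overwriting another when the metrics are computed afterwards.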

If you find this code useful in your research, please cite:

@InProceedings{Ashual_2019_ICCV,

author = {Ashual, Oron and Wolf, Lior},

title = {Specifying Object Attributes and Relations in Interactive Scene Generation},

booktitle = {The IEEE International Conference on Computer Vision (ICCV)},

month = {October},

year = {2019}

}

Our project borrows some source files from sg2im. We thank the authors.