Easily train or fine-tune SOTA computer vision models with one open source training library

Fill out our quick 4-question survey! We will raffle free SuperGradients swag among the participants -> Fill Survey

Website • Why Use SG? • User Guide • Docs • Getting Started Notebooks • Transfer Learning • Pretrained Models • Community • License • Deci Platform

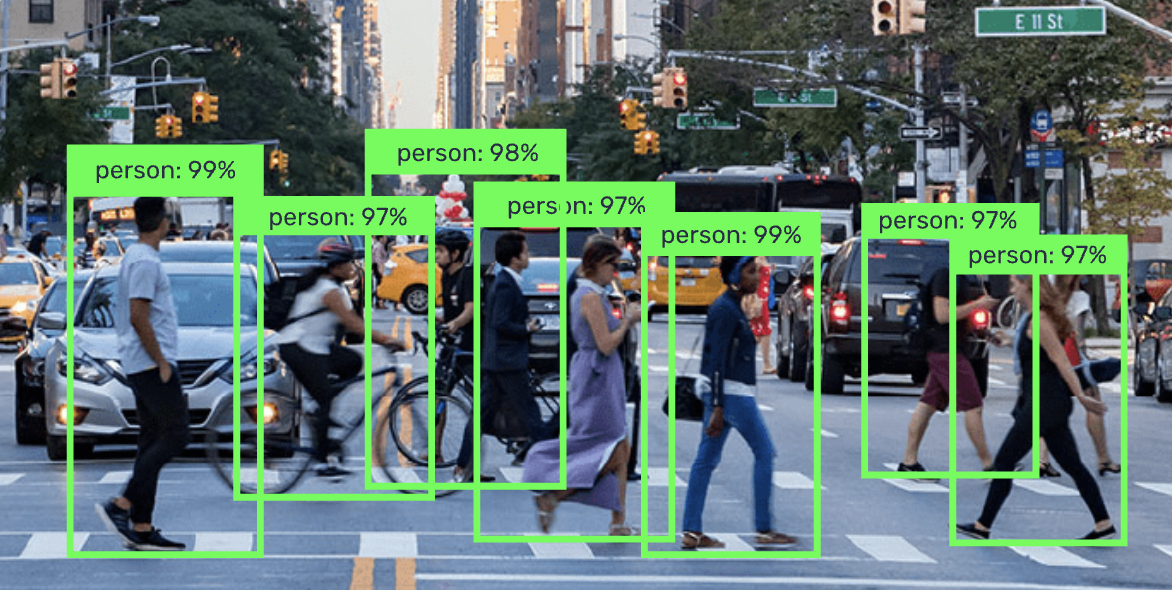

Welcome to SuperGradients, a free, open-source training library for PyTorch-based deep learning models. SuperGradients allows you to train or fine-tune SOTA pre-trained models for all the most commonly applied computer vision tasks with just one training library. We currently support object detection, image classification and semantic segmentation for videos and images.

Built-in SOTA Models

Easily load and fine-tune production-ready, pre-trained SOTA models that incorporate best practices and validated hyper-parameters for achieving best-in-class accuracy.

Easily Reproduce our Results

Why do all the grind work if we already did it for you? Leverage tested and proven recipes and code examples for a wide range of computer vision models, created by our team of deep learning experts. Easily configure your own or use plug-and-play hyperparameters for training, dataset, and architecture.

Production Readiness and Ease of Integration

All SuperGradients models are production ready in the sense that they are compatible with deployment tools such as TensorRT (Nvidia) and OpenVINO (Intel) and can be easily taken into production. With a few lines of code you can integrate the models into your codebase.

- 【09/03/2022】 New quick start and transfer learning example notebooks for Semantic Segmentation.

- 【07/02/2022】 We added RegSeg recipes and pre-trained models to our Semantic Segmentation models.

- 【01/02/2022】 We added issue templates for feature requests and bug reporting.

- 【20/01/2022】 STDC family - new recipes added with even higher mIoU💪

- 【17/01/2022】 We have released a transfer learning example notebook for object detection (YOLOv5).

Check out SG full release notes.

- ViT models (Vision Transformer).

- Knowledge Distillation support.

- YOLOX models (recipes, pre-trained checkpoints).

- SSD MobileNet models (recipes, pre-trained checkpoints) for edge device deployment.

- Dali implementation.

- Integration with professional tools.

- Getting Started

- Transfer Learning

- Installation Methods

- Computer Vision Models - Pretrained Checkpoints

- Implemented Model Architectures

- Contributing

- Citation

- Community

- License

- Deci Platform

The simplest and most straightforward way to start training SOTA-performance models is with SuperGradients' reproducible recipes. Just define your dataset path and where you want your checkpoints to be saved, and you are good to go from your terminal!

python -m super_gradients.train_from_recipe --config-name=imagenet_regnetY architecture=regnetY800 dataset_interface.data_dir=<YOUR_Imagenet_LOCAL_PATH> ckpt_root_dir=<CHECKPOINT_DIRECTORY>

Want to try our pre-trained models on your machine? Import SuperGradients, initialize your SgModel, and load your desired architecture and pre-trained weights from our SOTA model zoo.

# The pretrained_weights argument will load a pre-trained architecture on the provided dataset

# This is an example of loading COCO-2017 pre-trained weights for a YOLOv5 Nano object detection model

import super_gradients

from super_gradients.training import SgModel

trainer = SgModel(experiment_name="yolov5n_coco_experiment",ckpt_root_dir=<CHECKPOINT_DIRECTORY>)

trainer.build_model(architecture="yolo_v5n", arch_params={"pretrained_weights": "coco", "num_classes": 80})

Get started with our quick start notebook for image classification tasks on Google Colab for a quick and easy start using free GPU hardware.

Classification Quick Start in Google Colab | Download notebook | View source on GitHub

Get started with our quick start notebook for object detection tasks on Google Colab for a quick and easy start using free GPU hardware.

Detection Quick Start in Google Colab | Download notebook | View source on GitHub

Get started with our quick start notebook for semantic segmentation tasks on Google Colab for a quick and easy start using free GPU hardware.

Segmentation Quick Start in Google Colab | Download notebook | View source on GitHub

Learn more about SuperGradients training components with our walkthrough notebook on Google Colab, an easy-to-use tutorial that runs on free GPU hardware.

SuperGradients Walkthrough in Google Colab | Download notebook | View source on GitHub

Learn more about SuperGradients transfer learning and fine-tuning abilities with our example notebook on Google Colab, which fine-tunes a COCO pre-trained YOLOv5 Nano on a sub-dataset of PASCAL VOC. It is an easy-to-use tutorial that runs on free GPU hardware.

Detection Transfer Learning in Google Colab | Download notebook | View source on GitHub

Learn more about SuperGradients transfer learning and fine-tuning abilities with our example notebook on Google Colab, which fine-tunes a Cityscapes pre-trained RegSeg48 on a sub-dataset of Supervisely. It is an easy-to-use tutorial that runs on free GPU hardware.

Segmentation Transfer Learning in Google Colab | Download notebook | View source on GitHub

General requirements

- Python 3.7, 3.8 or 3.9 installed.

- torch>=1.9.0

- The Python packages specified in requirements.txt.

To train on Nvidia GPUs

- Nvidia CUDA Toolkit >= 11.2

- CuDNN >= 8.1.x

- Nvidia Driver with CUDA >= 11.2 support (≥460.x)
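The requirements above can be checked before installing. The following is a minimal sketch, not part of SuperGradients: the helper names (`parse_version`, `meets_minimum`, `python_supported`, `check_environment`) are hypothetical, and the version parsing is deliberately simple (it keeps only the leading numeric components and ignores suffixes such as `+cu113`). It does not check cuDNN or the driver version.

```python
import sys


def parse_version(text):
    """Turn a string such as '1.10.0+cu113' into (1, 10, 0)."""
    core = text.split("+")[0]          # drop local build tags like '+cu113'
    parts = []
    for piece in core.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if digits == "":               # stop at the first non-numeric component
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Return True if `installed` is at least `minimum` (e.g. '1.9' >= '1.9.0')."""
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))       # pad so '1.9' compares equal to '1.9.0'
    b += (0,) * (width - len(b))
    return a >= b


def python_supported(version_info=sys.version_info):
    """The list above names Python 3.7, 3.8 and 3.9."""
    return (version_info[0], version_info[1]) in {(3, 7), (3, 8), (3, 9)}


def check_environment():
    """Report which of the listed requirements the current interpreter satisfies."""
    report = {"python": python_supported()}
    try:
        import torch
        report["torch"] = meets_minimum(torch.__version__, "1.9.0")
        # torch.version.cuda is None for CPU-only builds of PyTorch
        cuda = torch.version.cuda
        report["cuda"] = cuda is not None and meets_minimum(cuda, "11.2")
    except ImportError:
        report["torch"] = False
        report["cuda"] = False
    return report


if __name__ == "__main__":
    print(check_environment())
```

Note that `torch.version.cuda` reports the CUDA version PyTorch was built against, which is only a proxy for the installed toolkit.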

Install using GitHub

pip install git+https://github.com/Deci-AI/super-gradients.git@stable

| Model | Dataset | Resolution | Top-1 | Top-5 | Latency (HW)* T4 | Latency (Production)** T4 | Latency (HW)* Jetson Xavier NX | Latency (Production)** Jetson Xavier NX | Latency Cascade Lake |
|---|---|---|---|---|---|---|---|---|---|
| EfficientNet B0 | ImageNet | 224x224 | 77.62 | 93.49 | 0.93ms | 1.38ms | - * | - | 3.44ms |
| RegNet Y200 | ImageNet | 224x224 | 70.88 | 89.35 | 0.63ms | 1.08ms | 2.16ms | 2.47ms | 2.06ms |
| RegNet Y400 | ImageNet | 224x224 | 74.74 | 91.46 | 0.80ms | 1.25ms | 2.62ms | 2.91ms | 2.87ms |
| RegNet Y600 | ImageNet | 224x224 | 76.18 | 92.34 | 0.77ms | 1.22ms | 2.64ms | 2.93ms | 2.39ms |
| RegNet Y800 | ImageNet | 224x224 | 77.07 | 93.26 | 0.74ms | 1.19ms | 2.77ms | 3.04ms | 2.81ms |
| ResNet 18 | ImageNet | 224x224 | 70.6 | 89.64 | 0.52ms | 0.95ms | 2.01ms | 2.30ms | 4.56ms |
| ResNet 34 | ImageNet | 224x224 | 74.13 | 91.7 | 0.92ms | 1.34ms | 3.57ms | 3.87ms | 7.64ms |
| ResNet 50 | ImageNet | 224x224 | 79.47 | 93.0 | 1.03ms | 1.44ms | 4.78ms | 5.10ms | 9.25ms |
| MobileNet V3_large-150 epochs | ImageNet | 224x224 | 73.79 | 91.54 | 0.67ms | 1.11ms | 2.42ms | 2.71ms | 1.76ms |
| MobileNet V3_large-300 epochs | ImageNet | 224x224 | 74.52 | 91.92 | 0.67ms | 1.11ms | 2.42ms | 2.71ms | 1.76ms |
| MobileNet V3_small | ImageNet | 224x224 | 67.45 | 87.47 | 0.55ms | 0.96ms | 2.01ms * | 2.35ms | 1.06ms |
| MobileNet V2_w1 | ImageNet | 224x224 | 73.08 | 91.1 | 0.46ms | 0.89ms | 1.65ms * | 1.90ms | 1.56ms |

NOTE:

- Latency (HW)* - Hardware performance (not including IO)

- Latency (Production)** - Production Performance (including IO)

- Performance measured for T4 and Jetson Xavier NX with TensorRT, using FP16 precision and batch size 1

- Performance measured for Cascade Lake CPU with OpenVINO, using FP16 precision and batch size 1
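The gap between the two latency columns is the IO cost, and at batch size 1 a latency converts directly into an upper bound on throughput. The sketch below illustrates that arithmetic on a few T4 rows copied from the table. The function names are hypothetical, and the throughput figure assumes requests are served strictly one after another with no pipelining.

```python
# (hardware latency in ms, production latency in ms) on T4, copied from the table above
T4_LATENCY_MS = {
    "EfficientNet B0": (0.93, 1.38),
    "RegNet Y800": (0.74, 1.19),
    "ResNet 50": (1.03, 1.44),
    "MobileNet V3_small": (0.55, 0.96),
}


def io_overhead_ms(hw_ms, production_ms):
    """IO cost: production latency (with IO) minus hardware latency (without IO)."""
    return round(production_ms - hw_ms, 2)


def images_per_second(latency_ms, batch_size=1):
    """Throughput implied by a latency, assuming sequential, non-overlapping requests."""
    return batch_size * 1000.0 / latency_ms


if __name__ == "__main__":
    for name, (hw, prod) in T4_LATENCY_MS.items():
        print(f"{name}: IO overhead {io_overhead_ms(hw, prod):.2f} ms, "
              f"about {images_per_second(prod):.0f} images/s end to end")
```

For these rows the IO overhead is roughly 0.4 ms regardless of the model, so it makes up a larger share of the total latency for the faster networks.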

| Model | Dataset | Resolution | mAP val 0.5:0.95 | Latency (HW)* T4 | Latency (Production)** T4 | Latency (HW)* Jetson Xavier NX | Latency (Production)** Jetson Xavier NX | Latency Cascade Lake |
|---|---|---|---|---|---|---|---|---|
| YOLOv5 nano | COCO | 640x640 | 27.7 | 1.48ms | 5.43ms | 9.28ms | 17.44ms | 21.71ms |
| YOLOv5 small | COCO | 640x640 | 37.3 | 2.29ms | 6.14ms | 14.31ms | 22.50ms | 34.10ms |
| YOLOv5 medium | COCO | 640x640 | 45.2 | 4.60ms | 8.10ms | 26.76ms | 34.95ms | 65.86ms |
| YOLOv5 large | COCO | 640x640 | 48.0 | 7.20ms | 10.28ms | 43.89ms | 51.92ms | 122.97ms |

NOTE:

- Latency (HW)* - Hardware performance (not including IO)

- Latency (Production)** - Production Performance (including IO)

- Latency performance measured for T4 and Jetson Xavier NX with TensorRT, using FP16 precision and batch size 1

- Latency performance measured for Cascade Lake CPU with OpenVINO, using FP16 precision and batch size 1
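One way to read the detection table is to fix a latency budget and pick the most accurate model that fits it. The following sketch does this for the T4 production latencies listed above. The `Detector` type and `best_under_budget` helper are hypothetical names used only for illustration.

```python
from typing import NamedTuple


class Detector(NamedTuple):
    name: str
    map_val: float            # mAP val 0.5:0.95 on COCO
    t4_production_ms: float   # T4 latency including IO


# Values copied from the table above
YOLOV5_T4 = [
    Detector("YOLOv5 nano", 27.7, 5.43),
    Detector("YOLOv5 small", 37.3, 6.14),
    Detector("YOLOv5 medium", 45.2, 8.10),
    Detector("YOLOv5 large", 48.0, 10.28),
]


def best_under_budget(models, budget_ms):
    """Return the highest-mAP model whose latency fits the budget, or None."""
    candidates = [m for m in models if m.t4_production_ms <= budget_ms]
    if not candidates:
        return None
    return max(candidates, key=lambda m: m.map_val)


if __name__ == "__main__":
    choice = best_under_budget(YOLOV5_T4, budget_ms=10.0)
    print(choice.name)  # YOLOv5 medium
```

The same helper works for the Jetson Xavier NX or Cascade Lake columns if the latency field is swapped for the corresponding values.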

| Model | Dataset | Resolution | mIoU | Latency b1 T4 | Latency b1 T4 including IO |
|---|---|---|---|---|---|
| DDRNet 23 | Cityscapes | 1024x2048 | 78.65 | 7.62ms | 25.94ms |
| DDRNet 23 slim | Cityscapes | 1024x2048 | 76.6 | 3.56ms | 22.80ms |
| STDC 1-Seg50 | Cityscapes | 512x1024 | 74.36 | 2.83ms | 12.57ms |
| STDC 1-Seg75 | Cityscapes | 768x1536 | 76.87 | 5.71ms | 26.70ms |
| STDC 2-Seg50 | Cityscapes | 512x1024 | 75.27 | 3.74ms | 13.89ms |
| STDC 2-Seg75 | Cityscapes | 768x1536 | 78.93 | 7.35ms | 28.18ms |
| RegSeg (exp48) | Cityscapes | 1024x2048 | 78.15 | 13.09ms | 41.88ms |
| Larger RegSeg (exp53) | Cityscapes | 1024x2048 | 79.2 | 24.82ms | 51.87ms |
| ShelfNet LW 34 | COCO Segmentation (21 classes from PASCAL including background) | 512x512 | 65.1 | - | - |

NOTE: Performance measured on T4 GPU with TensorRT, using FP16 precision and batch size 1 (latency), and not including IO
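To compare accuracy against speed across the Cityscapes models, one option is to keep only the models that no other model beats on both mIoU and latency (the Pareto front). The sketch below is an illustration under stated assumptions: `pareto_front` is a hypothetical helper, it uses the T4 latency without IO, it treats models evaluated at different input resolutions as directly comparable, and it leaves out ShelfNet LW 34 because no latency is listed for it.

```python
# (name, mIoU, T4 latency in ms without IO), copied from the Cityscapes rows above
SEGMENTATION_T4 = [
    ("DDRNet 23", 78.65, 7.62),
    ("DDRNet 23 slim", 76.6, 3.56),
    ("STDC 1-Seg50", 74.36, 2.83),
    ("STDC 1-Seg75", 76.87, 5.71),
    ("STDC 2-Seg50", 75.27, 3.74),
    ("STDC 2-Seg75", 78.93, 7.35),
    ("RegSeg (exp48)", 78.15, 13.09),
    ("Larger RegSeg (exp53)", 79.2, 24.82),
]


def pareto_front(models):
    """Names of models not dominated by a faster model with higher mIoU.

    Models are visited from fastest to slowest; a model is kept only if it
    improves on the best mIoU seen among all faster models.
    """
    front = []
    best_miou = float("-inf")
    for name, miou, _latency in sorted(models, key=lambda m: (m[2], -m[1])):
        if miou > best_miou:
            front.append(name)
            best_miou = miou
    return front


if __name__ == "__main__":
    for name in pareto_front(SEGMENTATION_T4):
        print(name)
```

With the numbers above, the front consists of STDC 1-Seg50, DDRNet 23 slim, STDC 1-Seg75, STDC 2-Seg75 and Larger RegSeg (exp53).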

- DenseNet (Densely Connected Convolutional Networks) - https://arxiv.org/pdf/1608.06993.pdf

- DPN - Dual Path Networks https://arxiv.org/pdf/1707.01629

- EfficientNet - https://arxiv.org/abs/1905.11946

- GoogleNet - https://arxiv.org/pdf/1409.4842

- LeNet - https://yann.lecun.com/exdb/lenet/

- MobileNet - Efficient Convolutional Neural Networks for Mobile Vision Applications https://arxiv.org/pdf/1704.04861

- MobileNet v2 - https://arxiv.org/pdf/1801.04381

- MobileNet v3 - https://arxiv.org/pdf/1905.02244

- PNASNet - Progressive Neural Architecture Search Networks https://arxiv.org/pdf/1712.00559

- Pre-activation ResNet - https://arxiv.org/pdf/1603.05027

- RegNet - https://arxiv.org/pdf/2003.13678.pdf

- RepVGG - Making VGG-style ConvNets Great Again https://arxiv.org/pdf/2101.03697.pdf

- ResNet - Deep Residual Learning for Image Recognition https://arxiv.org/pdf/1512.03385

- ResNeXt - Aggregated Residual Transformations for Deep Neural Networks https://arxiv.org/pdf/1611.05431

- SENet - Squeeze-and-Excitation Networks https://arxiv.org/pdf/1709.01507

- ShuffleNet - https://arxiv.org/pdf/1707.01083

- ShuffleNet v2 - Efficient Convolutional Neural Network for Mobile Devices https://arxiv.org/pdf/1807.11164

- VGG - Very Deep Convolutional Networks for Large-scale Image Recognition https://arxiv.org/pdf/1409.1556

- CSP DarkNet

- DarkNet-53

- SSD (Single Shot Detector) - https://arxiv.org/pdf/1512.02325

- YOLO v3 - https://arxiv.org/pdf/1804.02767

- YOLO v5 - by Ultralytics

- DDRNet (Deep Dual-resolution Networks) - https://arxiv.org/pdf/2101.06085.pdf

- LadderNet - Multi-path networks based on U-Net for medical image segmentation https://arxiv.org/pdf/1810.07810

- RegSeg - Rethink Dilated Convolution for Real-time Semantic Segmentation https://arxiv.org/pdf/2111.09957

- ShelfNet - https://arxiv.org/pdf/1811.11254

- STDC - Rethinking BiSeNet For Real-time Semantic Segmentation https://arxiv.org/pdf/2104.13188

Check SuperGradients Docs for full documentation, user guide, and examples.

To learn about making a contribution to SuperGradients, please see our Contribution page.

Our awesome contributors:

Made with contrib.rocks.

If you use the SuperGradients library or benchmarks in your research, please cite the SuperGradients deep learning training library.

If you want to be part of the growing SuperGradients community, hear about all the exciting news and updates, get help, request advanced features, or file a bug or issue report, we would love to welcome you aboard!

- Slack is the place to be and ask questions about SuperGradients and get support. Click here to join our Slack

- To report a bug, file an issue on GitHub.

- Join the SG Newsletter for staying up to date with new features and models, important announcements, and upcoming events.

- For a short meeting with us, use this link and choose your preferred time.

This project is released under the Apache 2.0 license.

Deci Platform is our end-to-end platform for building, optimizing and deploying deep learning models to production.

Sign up for our FREE Community Tier to enjoy immediate improvement in throughput, latency, memory footprint and model size.

Features:

- Automatically compile and quantize your models with just a few clicks (TensorRT, OpenVINO).

- Gain up to 10X improvement in throughput, latency, memory and model size.

- Easily benchmark your models’ performance on different hardware and batch sizes.

- Invite co-workers to collaborate on models and communicate your progress.

- Deci supports all common frameworks and hardware, from Intel CPUs to Nvidia's GPUs and Jetsons.

Sign up for Deci Platform for free here