This repository is the PyTorch implementation of MambaVision: A Hybrid Mamba-Transformer Vision Backbone.

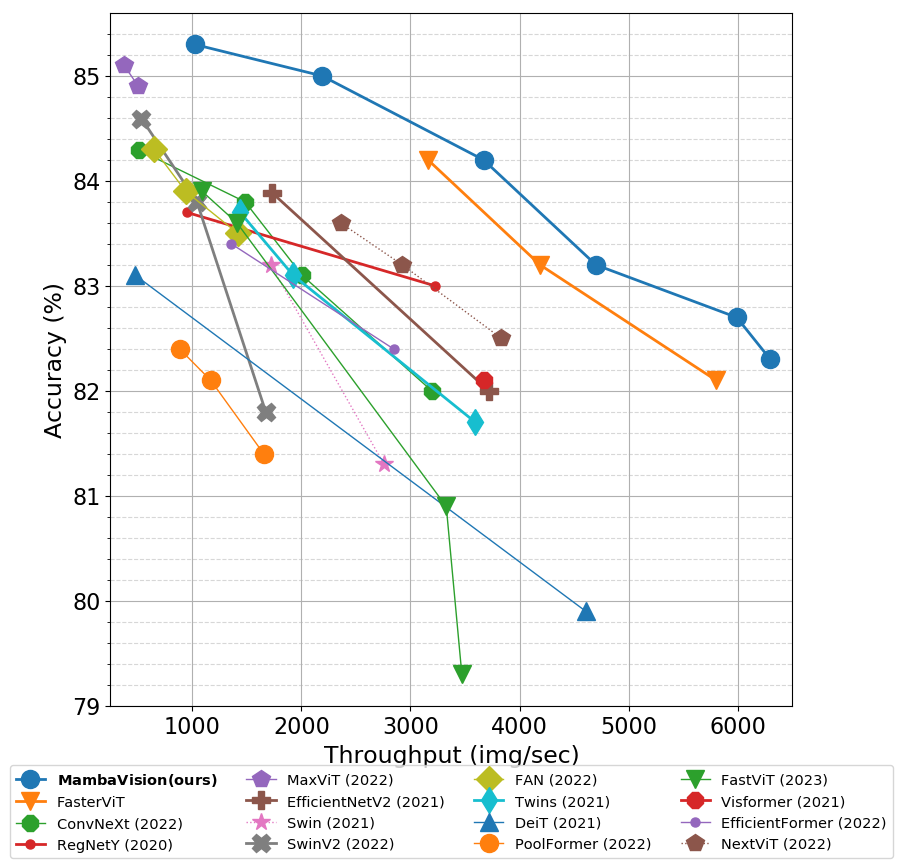

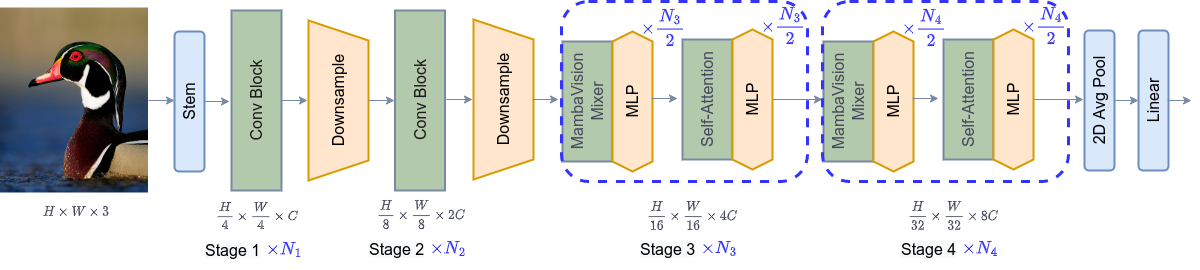

We propose a novel hybrid Mamba-Transformer backbone, denoted as MambaVision, which is specifically tailored for vision applications. Our core contribution is a redesign of the Mamba formulation that enhances its capability for efficient modeling of visual features. In addition, we conduct a comprehensive ablation study on the feasibility of integrating Vision Transformers (ViT) with Mamba. Our results demonstrate that equipping the Mamba architecture with several self-attention blocks at the final layers greatly improves its capacity to capture long-range spatial dependencies. Based on these findings, we introduce a family of MambaVision models with a hierarchical architecture to meet various design criteria. For image classification on the ImageNet-1K dataset, MambaVision variants achieve new state-of-the-art (SOTA) performance in terms of both Top-1 accuracy and image throughput. In downstream tasks such as object detection, instance segmentation, and semantic segmentation on the MS COCO and ADE20K datasets, MambaVision outperforms comparably sized backbones.
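
The idea of placing self-attention blocks at the end of a Mamba-based stage can be sketched as a per-layer layout. This is a minimal illustration, not the repository's code: the function name `hybrid_stage_layout` is hypothetical, and it assumes that the final half of a stage's blocks are self-attention blocks (the text above only says "several" blocks at the final layers), with the extra block of an odd-depth stage going to the mixer half.

```python
from typing import List


def hybrid_stage_layout(depth: int) -> List[str]:
    """Return the block type for each layer of one hybrid stage.

    Assumption: the first half uses Mamba-style mixer blocks and the final
    half uses self-attention blocks; odd depths give the extra block to the
    mixer half.
    """
    if depth < 1:
        raise ValueError("depth must be positive")
    num_attn = depth // 2           # self-attention blocks sit at the end
    num_mixer = depth - num_attn    # mixer blocks come first
    return ["mixer"] * num_mixer + ["attention"] * num_attn


print(hybrid_stage_layout(5))  # → ['mixer', 'mixer', 'mixer', 'attention', 'attention']
```

Keeping attention at the end of the stage means the mixer blocks process features first and global self-attention is applied to their output, which is the arrangement the ablation above favors.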

The architecture of MambaVision is illustrated in the figures above.

ImageNet-1K Pretrained Models

| Name | Acc@1 (%) | Throughput (img/s) | #Params (M) | FLOPs (G) |
|---|---|---|---|---|
| MambaVision-T | 82.3 | 6298 | 31.8 | 4.4 |
| MambaVision-T2 | 82.7 | 5590 | 35.1 | 5.1 |
| MambaVision-S | 83.2 | 4700 | 50.1 | 7.5 |
| MambaVision-B | 84.2 | 3670 | 91.8 | 13.8 |
| MambaVision-L | 85.0 | 2190 | 206.9 | 30.8 |
| MambaVision-L2 | 85.3 | 1021 | 227.9 | 34.9 |
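
The table can be used to choose a variant under a throughput budget. The sketch below is a hypothetical helper (neither `MODELS` nor `pick_model` is part of the repository); it copies the numbers from the table and returns the most accurate variant whose reported throughput meets the budget. The table does not state the hardware or batch size used to measure throughput, so budgets should be read as relative rather than absolute.

```python
from typing import Optional

# Numbers copied from the ImageNet-1K table above (throughput in images/second).
MODELS = {
    "MambaVision-T": {"acc1": 82.3, "throughput": 6298, "params_m": 31.8, "flops_g": 4.4},
    "MambaVision-T2": {"acc1": 82.7, "throughput": 5590, "params_m": 35.1, "flops_g": 5.1},
    "MambaVision-S": {"acc1": 83.2, "throughput": 4700, "params_m": 50.1, "flops_g": 7.5},
    "MambaVision-B": {"acc1": 84.2, "throughput": 3670, "params_m": 91.8, "flops_g": 13.8},
    "MambaVision-L": {"acc1": 85.0, "throughput": 2190, "params_m": 206.9, "flops_g": 30.8},
    "MambaVision-L2": {"acc1": 85.3, "throughput": 1021, "params_m": 227.9, "flops_g": 34.9},
}


def pick_model(min_throughput: float) -> Optional[str]:
    """Return the most accurate variant whose reported throughput meets the budget."""
    candidates = [
        (stats["acc1"], name)
        for name, stats in MODELS.items()
        if stats["throughput"] >= min_throughput
    ]
    if not candidates:
        return None  # no variant is fast enough
    return max(candidates)[1]  # highest Acc@1 wins


print(pick_model(4000))  # → MambaVision-S
```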

The dependencies can be installed by running:

```bash
pip install -r requirements.txt
```

Please download the ImageNet dataset from its official website. The training and validation images need to be organized into one sub-folder per class, with the following structure:

```
imagenet
├── train
│   ├── class1
│   │   ├── img1.jpeg
│   │   ├── img2.jpeg
│   │   └── ...
│   ├── class2
│   │   ├── img3.jpeg
│   │   └── ...
│   └── ...
└── val
    ├── class1
    │   ├── img4.jpeg
    │   ├── img5.jpeg
    │   └── ...
    ├── class2
    │   ├── img6.jpeg
    │   └── ...
    └── ...
```
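
Before launching a long training run, it can help to verify that the dataset matches this layout. The following is a minimal standard-library sketch, not a script shipped with the repository: `check_imagenet_layout` is a hypothetical name, the accepted image extensions are an assumption, and it assumes class labels are derived from folder names in an ImageFolder-style manner, so `train` and `val` must contain the same class folders.

```python
import sys
from pathlib import Path
from typing import Dict, List, Set

# Assumption: image extensions the data loader accepts; adjust if needed.
IMAGE_SUFFIXES = {".jpeg", ".jpg", ".png"}


def check_imagenet_layout(root: str) -> List[str]:
    """Return human-readable problems found in a train/val class-folder tree."""
    root_path = Path(root)
    problems: List[str] = []
    class_sets: Dict[str, Set[str]] = {}
    for split in ("train", "val"):
        split_dir = root_path / split
        if not split_dir.is_dir():
            problems.append(f"missing split directory: {split_dir}")
            continue
        # Every immediate sub-directory is treated as one class.
        classes = sorted(p.name for p in split_dir.iterdir() if p.is_dir())
        if not classes:
            problems.append(f"no class sub-folders in {split_dir}")
        for name in classes:
            class_dir = split_dir / name
            has_image = any(
                f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
                for f in class_dir.iterdir()
            )
            if not has_image:
                problems.append(f"empty class folder: {class_dir}")
        class_sets[split] = set(classes)
    # With folder-name labels, mismatched class sets would misalign label indices.
    if len(class_sets) == 2 and class_sets["train"] != class_sets["val"]:
        problems.append("train and val contain different class sets")
    return problems


if __name__ == "__main__":
    root_dir = sys.argv[1] if len(sys.argv) > 1 else "imagenet"
    issues = check_imagenet_layout(root_dir)
    print("\n".join(issues) if issues else "layout OK")
```

Running it as `python check_layout.py <imagenet-path>` (the file name is hypothetical) prints `layout OK` or one line per problem found.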

The MambaVision model can be trained from scratch on the ImageNet-1K dataset by running:

```bash
python -m torch.distributed.launch --nproc_per_node <num-of-gpus> --master_port 11223 train.py \
  --config <config-file> --data_dir <imagenet-path> --batch-size <batch-size-per-gpu> --tag <run-tag> --model-ema
```

To resume training from a saved checkpoint:

```bash
python -m torch.distributed.launch --nproc_per_node <num-of-gpus> --master_port 12223 train.py \
  --resume <checkpoint-path> --config <config-file> --data_dir <imagenet-path> --batch-size <batch-size-per-gpu> --tag <run-tag> --model-ema
```

To evaluate a pre-trained checkpoint on the ImageNet-1K validation set using a single GPU:

```bash
python validate.py --model <model-name> --checkpoint <checkpoint-path> --data_dir <imagenet-path> --batch-size <batch-size-per-gpu>
```
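
For scripted or repeated runs, the training, resume, and evaluation commands above can be assembled programmatically. The sketch below is hypothetical: the helper names are not part of the repository, and it only reuses the flags that appear in those commands. It also makes explicit that `--batch-size` is a per-GPU value, so the global batch size is the number of launched processes times that value.

```python
import shlex
from typing import List, Optional


def build_train_cmd(
    num_gpus: int,
    config: str,
    data_dir: str,
    batch_size_per_gpu: int,
    tag: str,
    resume: Optional[str] = None,
    master_port: int = 11223,
) -> List[str]:
    """Assemble the distributed training (or resume) command shown above."""
    cmd = [
        "python", "-m", "torch.distributed.launch",
        "--nproc_per_node", str(num_gpus),
        "--master_port", str(master_port),
        "train.py",
    ]
    if resume is not None:
        cmd += ["--resume", resume]  # only added when resuming from a checkpoint
    cmd += [
        "--config", config,
        "--data_dir", data_dir,
        "--batch-size", str(batch_size_per_gpu),
        "--tag", tag,
        "--model-ema",
    ]
    return cmd


def build_validate_cmd(model: str, checkpoint: str, data_dir: str, batch_size: int) -> List[str]:
    """Assemble the single-GPU evaluation command shown above."""
    return [
        "python", "validate.py",
        "--model", model,
        "--checkpoint", checkpoint,
        "--data_dir", data_dir,
        "--batch-size", str(batch_size),
    ]


def global_batch_size(num_gpus: int, batch_size_per_gpu: int) -> int:
    """Each launched process loads its own batch, so the totals multiply."""
    return num_gpus * batch_size_per_gpu


if __name__ == "__main__":
    # Hypothetical placeholder paths; replace them with real ones.
    train = build_train_cmd(8, "path/to/config.yaml", "/data/imagenet", 128, "run1")
    print(shlex.join(train))
    print("global batch size:", global_batch_size(8, 128))  # → 1024
```

Returning an argument list rather than a single string avoids shell-quoting issues; the list can be passed directly to `subprocess.run(cmd, check=True)`.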