Help: Simple use case

sathishsoundharajan opened this issue · comments

Hi Team, trying to understand simple use case.. This is the producer code

const amqplib = require('amqplib');

const open = require('amqplib').connect('amqp://localhost');

const q = 'q.test-ha';

let i = 1;

open.then(function(conn) {

return conn.createChannel();

}).then(function(ch) {

return ch.assertQueue(q).then(function(ok) {

while (i > 0 && i < 100000) {

console.log('Running ', i);

ch.sendToQueue(q, Buffer.from(i.toString()));

let j = 1;

// while(j < 1000000000) { j = j + 1 }

console.log('Sent ', i);

i = i + 1;

}

});

}).catch(console.warn);

Consumer Code

const amqplib = require('amqplib');

const open = require('amqplib').connect('amqp://localhost');

const q = 'q.test-ha';

open.then(function(conn) {

return conn.createChannel();

}).then(function(ch) {

return ch.assertQueue(q).then(function(ok) {

return ch.consume(q, function(msg) {

if (msg !== null) {

console.log('Consumed New Message', msg.content.toString());

ch.ack(msg);

console.log('Message acked', msg.content.toString());

}

});

});

}).catch(console.warn);

Now two questions

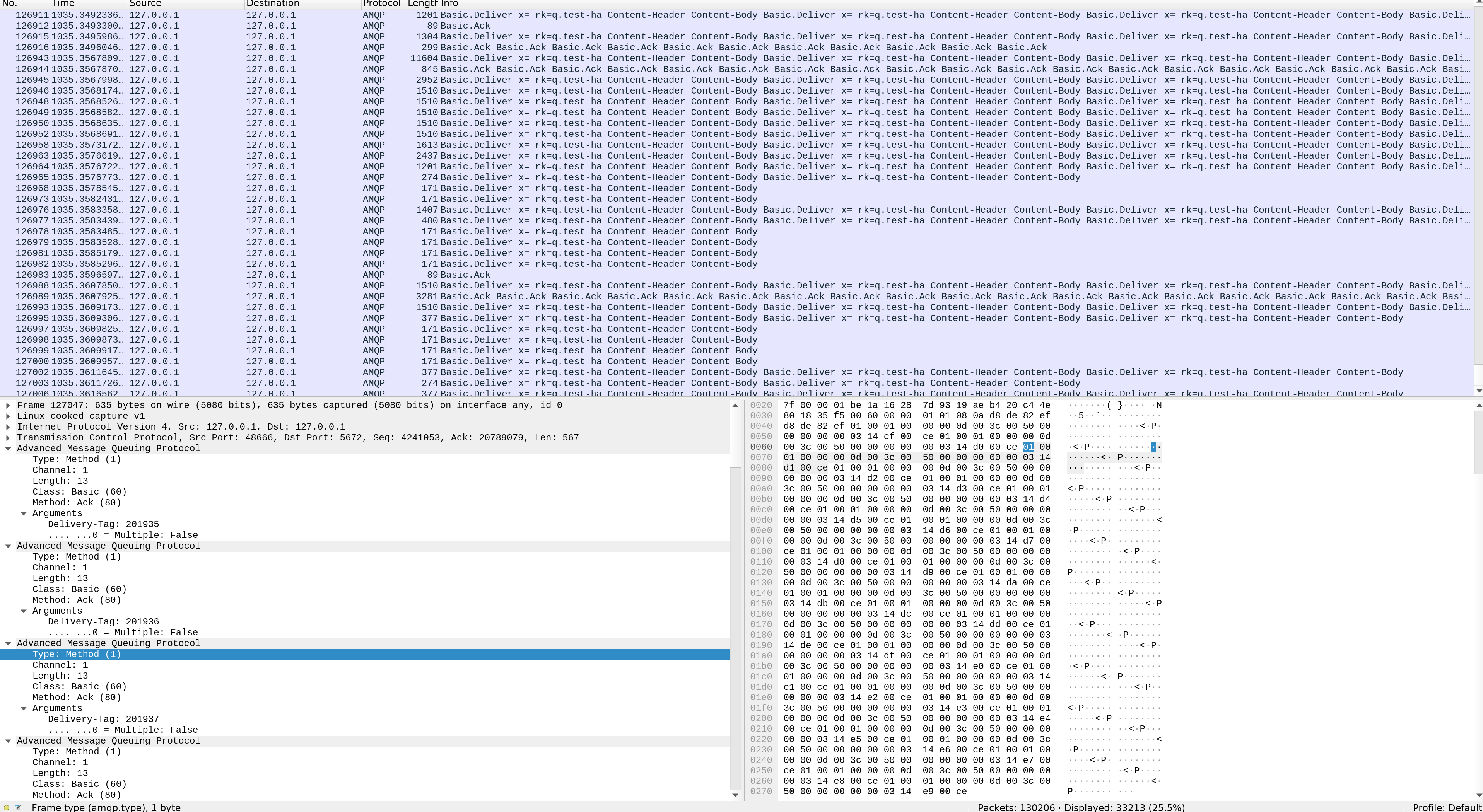

- Now when i run the producer & monitor the wireshark traffic i found that the even though the console.log(sent) says the message is sent, the message actually not sent and it's kind of grouped/batched & then sent to RabbitMQ server. ( Is this intentional ? ) If that is the case we should not consider ch.sendToQueue(q, Buffer.from(i.toString())); as sync call Am i right ?

- Same with consumers when consumes all those messages produced and noticed the wireshark for confirmations and found that it bulk ack the messages. Even though those messages are long back consider ack'd in code.

If rabbitmq client buffers and sends those message, what is the criteria it uses for buffering like messages count/buffer size/timer ? How can we really confirm message is ack'd if internally rabbitmq-client buffers and sends the message to server ?

Thank you for taking the time to express your questions so clearly. In answer...

-

Publishing messages is an asynchronous operation, even though the channel

sendToQueuedoes not yield a promise. Internally, amqplib writes the message to a buffer before sending it to the broker, however there is no batching - the message is sent as soon as possible. I suspect what is happening in your example is that the synchronous while loop in which you are callingsendToQueueis blocking the messages being read from the buffer and actually published. If you were to make this loop asynchronous, you would see the messages being sent to the broker as you expected. -

I suspect that providing you start your consumer before your publisher, and that you make the publisher asynchronous as described above, you will start to see the Basic.Publish and Basic.Ack commands interleave. I would also consider setting a prefetch on the consumer to prevent flooding.

Finally, How can we really confirm message is ack'd if internally rabbitmq-client buffers and sends the message to server

Depending on what you want, you may need to do this in two parts...

Part 1. Confirm the message has been safely delivered to the broker

You do this by using confirm channels and adding a callback to the channel sendToQueue function as per the Channel API

However a network error could prevent the confirmation reaching the publisher, so even if the message was safely delivered the callback may never be executed (although in practice this should be incredibly rare). Your system design should manage this somehow, e.g. by setting a reasonable timeout and assuming the message has failed if the timeout expires before receiving the confirmation. What you do then is also down to your system design, but typically there are two options

-

Resend the message, which requires your system to tolerate duplicates, and may still fail for the same reason as before

-

Drop the message, reporting that the publish may have failed and potentially stashing the message for subsequent investigation / retry if you determine it was not sent.

In the systems I design I tend to prefer option 2 as provided you design your application to gracefully shutdown when it is stopped or redeployed (i.e. block new work while waiting for existing work to complete), a lost confirm should be rare. It is also usually easy for a human to manually intervene and compensate for the lost message if it did not reach the broker.

Part 2. Acknowledge the message has been consumed

There are two ways of doing this with RabbitMQ, automatically or explicitly. Automatically means the message will be deleted by the broker before it is delivered to your application. This is called "AT MOST ONCE" delivery. Explicitly means it is up to you when the message will be acknowledged. This is called "AT LEAST ONCE" delivery. No message broker provides true EXACTLY ONCE delivery despite what their documentation might lead you to believe. Therefore, you as system designer need to decide whether "AT MOST ONCE" or "AT LEAST ONCE" is the best choice, and try to mitigate the problems with the selected option.

With "AT MOST ONCE" you have to accept that your application could crash after the message was deleted from the queue but before it was successfully processed, causing it to be lost. You therefore might want something which reconciles what was published and what was successfully consumed.

With "AT LEAST ONCE" you have to accept that your application could crash/the acknowledgement be lost, after partially or completely processing the message, but before it was deleted from the queue, thus causing the message to be redelivered. My preferred way of handing this is by designing my consumers to tolerate duplicates by making the operations idempotent, but this is not always possible. For example, if your workflow involves inserting/updating a database record, then sending an email, I might use a unique constraint or version number to detect duplicate messages during the database operation and abort the workflow. If the database operation was successful, I would acknowledge the message just before sending the email. In this way I prevent the customer being flooded with emails.

However, if my application crashed after acknowledging the message but before sending the email it would mean that the email would never be sent. This scenario should always mitigated by existing business processes - emails can be lost outside of your network, blocked by spam filters, accidentally deleted by customers, sent to the wrong email address, etc, etc, so customers will always need a way of contacting the business to complain that they have not received and email.

@cressie176 Thank you for taking your valuable time to explain my doubts. When i debugged ack function i saw that it writes to a buffer, post that i dunno how that is communicated to server, meaning there is no obvious tcp call :). I guess somewhere tcp web socket is created and kept open and whenever i call ack that is writing to that buffer variable, and that variable read somewhere and sent to rabbitmq server. My understand would be completely wrong. If you can shine some light here would be much helpful.

I believe you are part of rabbitmq community slack. But the reason i'm trying to understand the basics is because of this below issue we faced recently.

We have 3 Node RabbitMQ cluster. All queues are created by durable & HA_ALL ( Mirroring enabled when I hover over the Node information of each queue).

Issue: We have set of consumers which are processing the messages from queues.Now from yesterday we have been running to problem, consumer get the message processes them and also call ack function using amqplib & We can also confirm ack didn't throw any error. But the ack msg is not getting removed from queue. In fact to put it in plain words, consumer sent ack to server without no errors, but somehow the ack is not received by the server and keeps waiting for ack from consumer. When it enters this state all queues across all consumers gradually seems to have the same problem.

RabbitMQ logs does not indicate any issues, to confirm ack is getting success.. We have added logs post ack() function with try catch..

When this issue happens, if restart all of my consumers, without any issues it's getting processed for certain period of time and goes to issue state. ( This indicates there is no issue with consumers processing and ack function & also does not seem to be rogue payload causing nack ).

We also looked for double ack errors in log 406.. Not able find any.

Link: https://rabbitmq.slack.com/archives/C1EDN83PA/p1682581979836749

I'm going to change the publish function async and retry running the producer code and get back to you. But for the consumer ack question, consumer started post producer sent all messages, so definitely there is no blocking in the consumer code just get the message and call ack, the screenshot i have attached indicates batched ack'd. Am i looking at it wrong ?

My understand would be completely wrong. If you can shine some light here would be much helpful.

Your understanding is pretty close. From memory (and a very quick refresher) whenever the client sends a command to the broker is does so via connection.sendMessage. This writes the command to the buffer associated with the channel, however in reality this is piped to something called the muxer. The muxer acts like a channel load balancer writing chunks from the channel buffers to the broker via a stream associated with the connection

I believe you are part of rabbitmq community slack.

I've just read the thread. I'm sorry they couldn't be of more help - migrating all your queues to quorum queues won't be an easy task. I have seen weird things like this with RabbitMQ before, although for us it was with publisher confirms rather than consumer acknowledgements. One node in our cluster just stopped acknowledging messages even though we were using a confirm channel.

so definitely there is no blocking in the consumer code just get the message and call ack

Your code is correct - ch.ack(message).

However there are some other things which may be worth checking. RabbitMQ can get blocked connections - https://www.rabbitmq.com/connection-blocked.html so possibly that is worth listening and logging. I would assume though that if you are still receiving messages, then this is not the problem. You can read how to listen for it here

Hope you get to the bottom of it

On the RabbitMQ thread you mentioned you are trying to build a case for the upgrade. This article might help - https://aphyr.com/posts/315-call-me-maybe-rabbitmq. It explains the design flaws RabbitMQ has in the event of a network partition. Upgrading Rabbit and migrating to quorum queues (introduced since the article) should be much more reliable.

@cressie176 Sorry it took couple of days to understanding the internal.

Producer Code:

const amqplib = require('amqplib');

const open = require('amqplib').connect('amqp://localhost');

const q = 'q.test';

let i = 1;

async function main() {

const conn = await open;

const ch = await conn.createConfirmChannel();

while (i > 0 && i < 100) {

console.log('Running ', i);

const re = ch.publish(q, '', Buffer.from(i.toString()));

console.log('Publish ', i, re);

const result = await ch.waitForConfirms();

console.log('Confirm ', i, result);

i = i + 1;

}

}

If i run the above code and check in wireshark, i'm not seeing any buffering meaning each message i'm publishing to exchange is confirmed by this line await ch.waitForConfirms(); it reached the server. If i remove that line, then there are some buffering, even i make it async it does not matter, at some point publishing without waitForConfirms will always buffer ( dunno the criteria ) but it is happening.

Now the tricky part, we can make the publish reliable by using waitForConfirms. Now let's take a look at consumer.

Here is the consumer code

const amqplib = require('amqplib');

const open = require('amqplib').connect('amqp://localhost');

const q = 'q.test';

async function main() {

const conn = await open;

const ch = await conn.createConfirmChannel();

ch.consume(q, async function(msg) {

console.log('Running ', msg.content.toString());

ch.ack(msg);

console.log('Ack ', msg.content.toString());

})

}

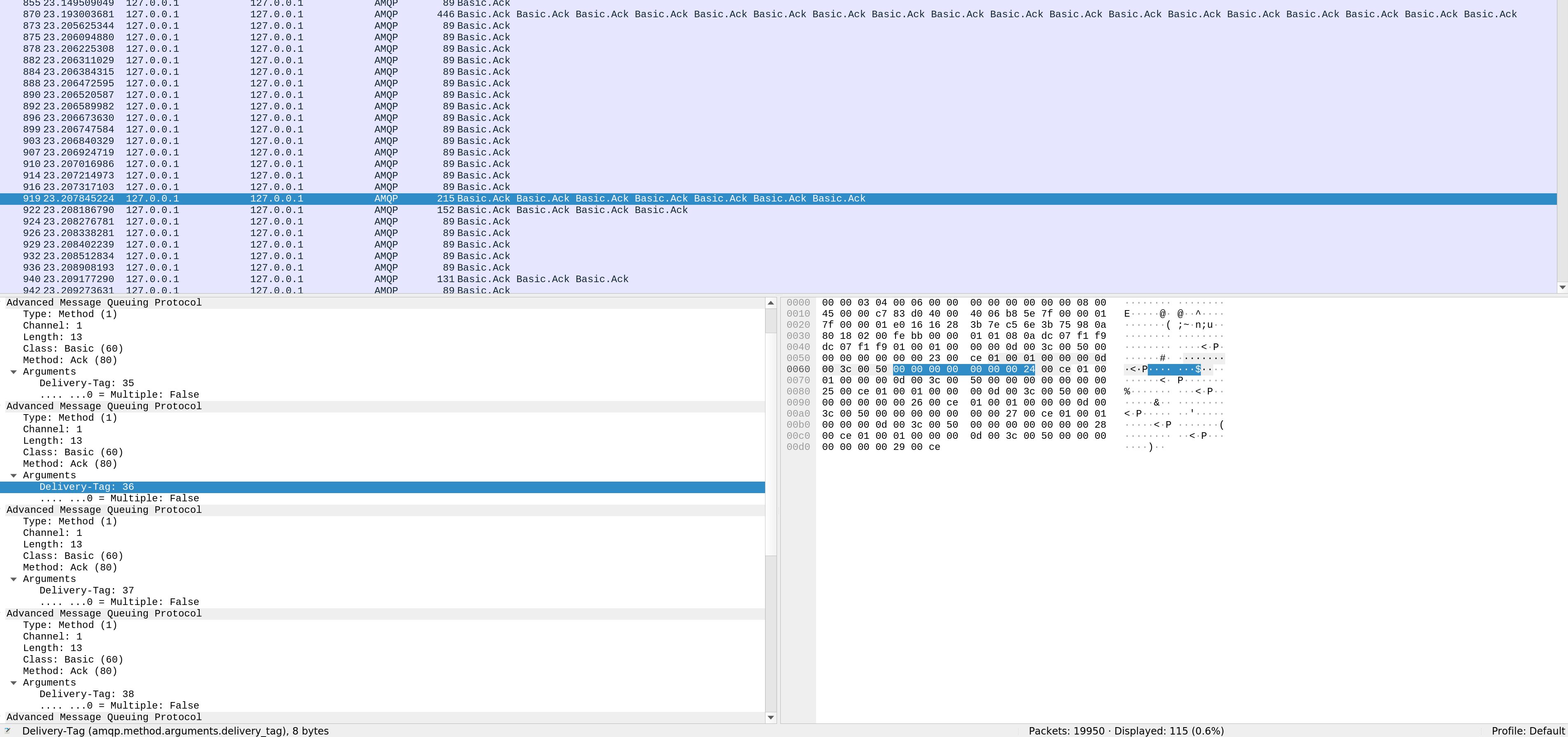

I queued 100 messages using the producer code mentioned above. Now if i execute the above code & check wireshark ( screenshot, Note for grouped Basic.Ack, Basic.Ack lines ) those lines indicate even ack'd is also buffered.

Now question is, like publishing where i can use waitForConfirms to confirm the message reached the server. For Ack is there anything i can use to confirm it ? Because obviously we cannot make ch.ack will always make sure the message reached server, like it would buffered 10 messages but due to network issue it might have dropped, so i would like to make sure each message definitely reached the server. Do we have anyway to code this ?

even i make it async it does not matter, at some point publishing without waitForConfirms will always buffer ( dunno the criteria ) but it is happening.

I believe what you are seeing is a consequence of a mixture of synchronous and asynchronous code, plus the event loop. The synchronous code will block, so without the asynchronous waitForConfirms the loop will write all messages to the buffer, before amqplib gets the opportunity to send the contents of the buffer from the broker. Even with asynchronous code the two activities will be competing for the opportunity to run, and if sending data asynchronously to the broker takes 10x as long as an iteration of the asynchronous while loop, then you will still write to the buffer faster than you read from it.

so i would like to make sure each message definitely reached the server. Do we have anyway to code this ?

The only way I can think of is set a channel prefetch limit of 1. This will mean the broker will only deliver one message at a time and wait for the consumer to acknowledge that message before delivering another. However, if for some reason the consumer acknowledgement gets lost, the queue will be blocked until the channel is closed. It's worth having something that monitors the state of the queue to detect this early.

Thank you @cressie176 ..

Yep, i saw that keeping the prefetch to 1 makes ack work like synchronous ( no buffering when i saw ), but more than 1 then there is possibility of buffering..

Once again. Thank you for taking your time to explain all of this.

Hey @cressie176 Found out very weird use case.. closed this issue prematurely.. Sorry..

const amqplib = require('amqplib');

const open = require('amqplib').connect('amqp://localhost');

const q = 'q.test';

async function sleep() {

return new Promise(resolve => {

setTimeout(resolve, 30000)

});

}

async function main() {

const conn = await open;

const ch = await conn.createConfirmChannel();

conn.connection.heartbeater.on('beat', () => console.log('beat', new Date().toISOString()))

console.log(conn.connection.muxer.out.bytesRead)

console.log(conn.connection.muxer.out.bytesWritten)

ch.on('ack', function(...args) {

console.log(args);

})

ch.on('delivery', function(...args) {

console.log(args);

})

ch.prefetch(1);

ch.consume(q, async function(msg) {

console.log(ch.unconfirmed.length)

console.log('Running ', msg.content.toString());

await sleep();

ch.ack(msg);

console.log('Ack ', msg.content.toString());

console.log(conn.connection.muxer.out.bytesRead)

console.log(conn.connection.muxer.out.bytesWritten)

})

}

main().catch(console.error)

i'm running rabbitmq in docker container, now what i did was i just published 1 message from rabbitmq management console & started my consumer, you can see that i have intentionally added delay before ack call. Now while my consumer is waiting i went ahead disabled my container network using this command ip link set eth0 down so docker container won't have network connectivity.

But ch.ack executed successfully without no error :) Meaning further analysis found it write to socket (buffer.write) but it somehow doesn't throw error even though it not possible to reach container.

Do you have any insights on this ? I'm just digging deep into this ack and communication, so i'm continuously astonished how things are working than i expected. :)

I suspect this is down to how dockers internal networking works. If you actually killed the container I'm pretty node would report a connection error.

No i didn't kill the container, i'm trying to replicate network issue. That's why i just disabled network alone @cressie176 ..

I understand you didn't kill the container, but simply disabling eth0 isn't necessarily enough for the node application to notice the problem. As far as I understand this is nothing to do with amqplib, it's just how sockets work and the reason it's recommended to use either RabbitMQ heartbeats or TCP keep alives.

Got it .. Thanks..