Yi-Fan Song, Zhang Zhang, Caifeng Shan and Liang Wang. Richly Activated Graph Convolutional Network for Robust Skeleton-based Action Recognition. IEEE Transactions on Circuits and Systems for Video Technology, 2020. [IEEE TCSVT 2020] [Arxiv Preprint]

Previous version (RA-GCN v1.0): [Github] [IEEE ICIP 2019] [Arxiv Preprint]

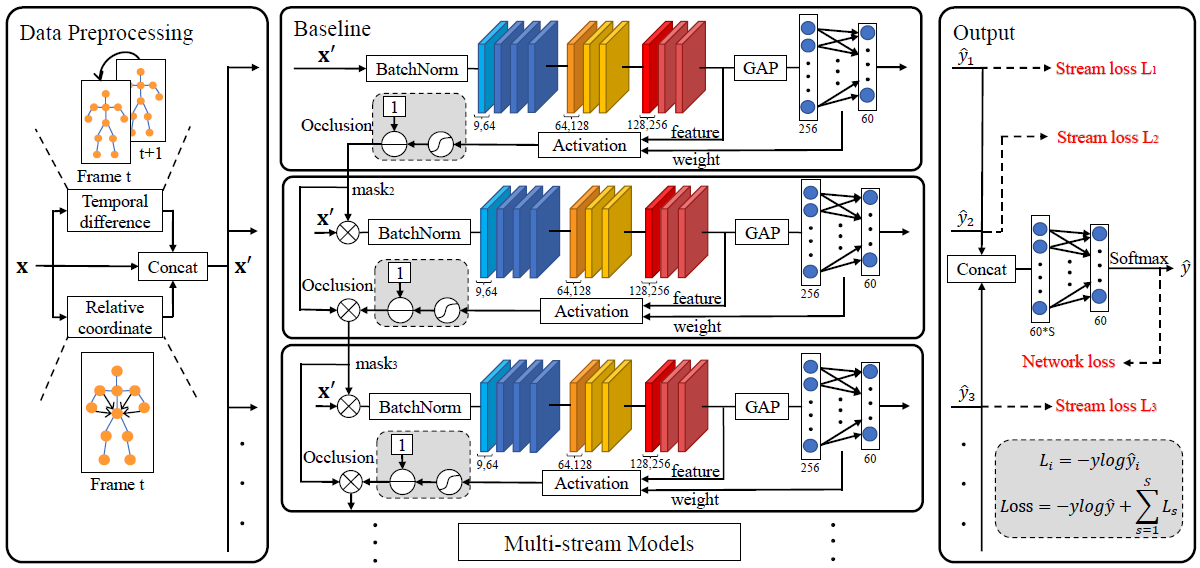

The following figure shows the pipeline of RA-GCN v2.

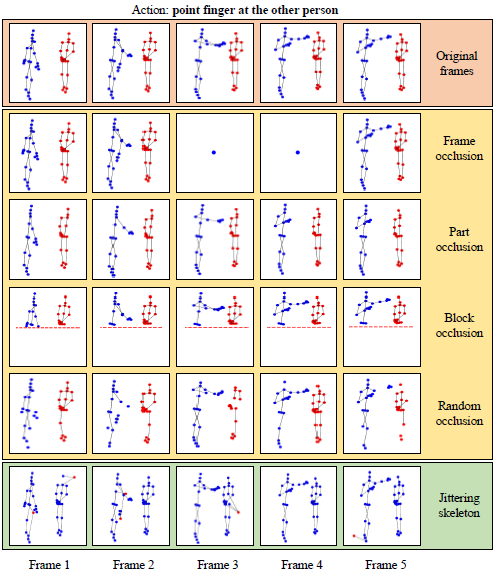

The occlusion and jittering skeleton samples are illustrated by

This code is based on Python3 (anaconda, >=3.5) and PyTorch (>=0.4.0 and <=1.2.0).

Note: nn.ParameterList may raise an error when using more than one GPU with newer PyTorch versions.

Other required Python libraries are listed in 'requirements.txt' and can be installed with

pip install -r requirements.txt

Our models are evaluated on the NTU RGB+D 60 & 120 datasets, which can be downloaded from here.

302 samples of NTU RGB+D 60 need to be ignored; they are listed in datasets/ignore60.txt. Likewise, 532 samples of NTU RGB+D 120 need to be ignored; they are listed in datasets/ignore120.txt.
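The ignore lists can be applied when collecting samples. The following is a minimal Python sketch, not the logic of 'gen_data.py': it assumes that each line of 'datasets/ignore60.txt' or 'datasets/ignore120.txt' holds one sample name such as 'S001C002P005R002A008', and that the raw skeleton files are named '<sample>.skeleton'. The helper names `load_ignore_list` and `list_valid_samples` are hypothetical.

```python
from pathlib import Path


def load_ignore_list(ignore_file):
    """Read one sample name per line (assumed format), skipping blank lines."""
    with open(ignore_file) as f:
        return {line.strip() for line in f if line.strip()}


def list_valid_samples(skeleton_dir, ignored):
    """Return the sorted sample names in skeleton_dir that are not ignored.

    Assumes raw files are named '<sample>.skeleton', so Path.stem is the sample name.
    """
    names = (p.stem for p in Path(skeleton_dir).glob("*.skeleton"))
    return sorted(n for n in names if n not in ignored)
```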

Several pretrained models are provided, including the baseline, 2-stream RA-GCN and 3-stream RA-GCN, for the cross-subject (CS) and cross-view (CV) benchmarks of the NTU RGB+D 60 dataset and the cross-subject (CSub) and cross-setup (CSet) benchmarks of the NTU RGB+D 120 dataset. The baseline model is equivalent to 1-stream RA-GCN.

These models can be downloaded from BaiduYun (Extraction code: s9kt) or GoogleDrive.

You should put these models into the 'models' folder.

Before training and evaluating, note the following parameters.

- (1) '--config' or '-c': The config of RA-GCN. This parameter is required on the command line; otherwise the program will output an error. Eight configs are provided in the 'configs' folder, as summarized in the following table.

| config | 2001 | 2002 | 2003 | 2004 | 2101 | 2102 | 2103 | 2104 |
|---|---|---|---|---|---|---|---|---|
| model | 2s RA-GCN | 3s RA-GCN | 2s RA-GCN | 3s RA-GCN | 2s RA-GCN | 3s RA-GCN | 2s RA-GCN | 3s RA-GCN |
| benchmark | CS | CS | CV | CV | CSub | CSub | CSet | CSet |

- (2) '--resume' or '-r': Resume from a checkpoint. If you want to start training from a checkpoint saved in the 'models' folder, add this parameter to the command line.

- (3) '--evaluate' or '-e': Only evaluate trained models. For evaluation, add this parameter to the command line. The model to evaluate is selected by the '--config' parameter.

- (4) '--extract' or '-ex': Extract features from a trained model for visualization. This parameter creates a data file named 'visualize.npz' in the current folder.

- (5) '--visualization' or '-v': Show the information and details of a trained model. You should extract features with the '--extract' parameter before visualizing.
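As an illustration of how these flags fit together, here is a hypothetical argparse sketch of the command-line interface described in this README, including the '-op', '-jj' and '--sigma' options used in the occlusion and jittering experiments. It is not the parser in 'main.py'; option types, defaults and choices are assumptions drawn from the descriptions in this document.

```python
import argparse


def build_parser():
    # Hypothetical parser mirroring the flags described in this README;
    # the real main.py may define them differently.
    parser = argparse.ArgumentParser(description="RA-GCN command line (sketch)")
    parser.add_argument("--config", "-c", type=str, required=True,
                        help="config name in the 'configs' folder, e.g. 2001")
    parser.add_argument("--resume", "-r", action="store_true",
                        help="resume training from a checkpoint in 'models'")
    parser.add_argument("--evaluate", "-e", action="store_true",
                        help="only evaluate the model selected by --config")
    parser.add_argument("--extract", "-ex", action="store_true",
                        help="extract features into 'visualize.npz'")
    parser.add_argument("--visualization", "-v", action="store_true",
                        help="visualize a trained model (needs 'visualize.npz')")
    # Options of the robustness experiments; None means "not requested".
    parser.add_argument("-op", type=int, choices=[1, 2, 3, 4, 5], default=None,
                        help="occluded part")
    parser.add_argument("-jj", type=float, default=None,
                        choices=[0.02, 0.04, 0.06, 0.08, 0.10],
                        help="jittering probability")
    parser.add_argument("--sigma", type=float, default=None, choices=[0.05, 0.1],
                        help="noise variance for jittering")
    return parser
```

For example, `build_parser().parse_args(["-c", "2001", "-e", "-op", "3"])` mirrors `python main.py -c 2001 -e -op 3`.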

Other parameters can be updated by modifying the corresponding config file in the 'configs' folder or by passing them on the command line. The parameter priority is command line > yaml config > default value.
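The priority rule can be pictured as a layered dictionary merge. The sketch below assumes that unset command-line options are `None` (as with argparse options declared with `default=None`) and that the yaml file has already been loaded into a dict (for example with PyYAML's `yaml.safe_load`). `resolve_params` and the parameter names used with it are hypothetical.

```python
def resolve_params(defaults, yaml_cfg, cli_args):
    """Merge parameters with priority: command line > yaml config > default value.

    Command-line entries set to None are treated as "not given" (an assumption
    that matches argparse options declared with default=None).
    """
    params = dict(defaults)          # lowest priority
    params.update(yaml_cfg)          # yaml config overrides defaults
    params.update({k: v for k, v in cli_args.items() if v is not None})
    return params                    # explicit command-line values win
```

With argparse, `vars(args)` turns the parsed namespace into the `cli_args` dict.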

Before training or testing our model, please generate the datasets by running 'gen_data.py' in the current folder.

In this file, two parameters must be modified: 'folder1' and 'folder2'. Set them to your paths to the NTU RGB+D datasets, e.g., /data1/yifan.song/NTU_RGBD/nturgbd_skeletons_s001_to_s017/ for NTU RGB+D 60 and /data1/yifan.song/NTU_RGBD/nturgbd_skeletons_s018_to_s032/ for NTU RGB+D 120.
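For reference, the raw NTU RGB+D '.skeleton' files are plain text: a frame count, then for each frame a body count, and for each body a line of 10 body attributes, a joint count, and one line of 12 values per joint, whose first three are the camera-space x, y, z coordinates. The following is a minimal pure-Python reader under those assumptions; it is not the code in 'gen_data.py', which may additionally pad frames, select bodies or normalize coordinates. The function name `read_ntu_skeleton` and the `data[c][t][v][m]` layout (coordinate, frame, joint, body) are illustrative choices.

```python
def read_ntu_skeleton(path, max_bodies=2, num_joints=25):
    """Parse a '.skeleton' file into data[c][t][v][m] holding x, y, z only.

    Bodies beyond max_bodies are skipped; missing bodies stay zero-filled.
    """
    with open(path) as f:
        tokens = iter(f.read().split())  # whitespace-separated value stream

    num_frames = int(next(tokens))
    data = [[[[0.0] * max_bodies for _ in range(num_joints)]
             for _ in range(num_frames)] for _ in range(3)]

    for t in range(num_frames):
        num_bodies = int(next(tokens))
        for m in range(num_bodies):
            for _ in range(10):  # bodyID, clippedEdges, ..., trackingState
                next(tokens)
            joint_count = int(next(tokens))
            for v in range(joint_count):
                values = [float(next(tokens)) for _ in range(12)]
                if m < max_bodies and v < num_joints:
                    for c in range(3):  # keep camera-space x, y, z
                        data[c][t][v][m] = values[c]
    return data
```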

Then, you can generate datasets by

python gen_data.py

and the results are saved in the 'datasets' folder.

You can simply train the model by

python main.py -c <config>

where <config> is the config file name in the 'configs' folder, e.g., 2001.

If you want to resume training from the last saved checkpoint, you can run

python main.py -c <config> -r

Before evaluating, make sure that the trained model corresponding to the config is already saved in the 'models' folder. Then run

python main.py -c <config> -e

Before the occlusion and jittering experiments, make sure that the trained model corresponding to the config is already saved in the 'models' folder. Then run

python main.py -c <config> -e -op <occlusion_part>

(or)

python main.py -c <config> -e -jj <jittering_prob> --sigma <noise_variance>

where <occlusion_part> denotes the occluded part (choices: [1,2,3,4,5], meaning left arm, right arm, two hands, two legs and trunk, respectively), <jittering_prob> denotes the probability of jittering (choices: [0.02,0.04,0.06,0.08,0.10]), and <noise_variance> (choices: [0.05,0.1]) denotes the variance of the noise.
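Conceptually, occlusion removes a body part and jittering perturbs joints with random noise. The sketch below illustrates this under stated assumptions and is not the repository's implementation: a skeleton is a list of frames, each a list of `[x, y, z]` joints; `occlude` zeroes a caller-supplied list of joint indices (the mapping from <occlusion_part> to joint indices is not given in this README, so any indices you pass are hypothetical); and `jitter` perturbs each joint with probability <jittering_prob> using zero-mean Gaussian noise whose variance is <noise_variance>, so the standard deviation is its square root.

```python
import math
import random


def occlude(frames, joint_ids):
    """Return a copy of frames with the given joints zeroed in every frame."""
    out = [[list(joint) for joint in frame] for frame in frames]
    for frame in out:
        for v in joint_ids:
            frame[v] = [0.0, 0.0, 0.0]
    return out


def jitter(frames, prob, variance, rng=random):
    """Return a copy where each joint, with probability prob, receives
    zero-mean Gaussian noise of the given variance on x, y and z."""
    std = math.sqrt(variance)  # the README describes the noise level as a variance
    out = []
    for frame in frames:
        new_frame = []
        for joint in frame:
            if rng.random() < prob:
                joint = [x + rng.gauss(0.0, std) for x in joint]
            new_frame.append(list(joint))
        out.append(new_frame)
    return out
```

Passing a seeded `random.Random` instance as `rng` makes the perturbation reproducible across runs.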

To visualize the details of the trained model, you can run

python main.py -c <config> -ex -v

where '-ex' can be removed if the data file 'visualize.npz' already exists in the current folder.

Top-1 accuracy of the provided models on the NTU RGB+D 60 & 120 datasets.

| models | 3s RA-GCN | 2s RA-GCN | baseline | version 1.0 |
|---|---|---|---|---|
| NTU CS | 87.3% | 86.7% | 85.8% | 85.9% |
| NTU CV | 93.6% | 93.4% | 93.1% | 93.5% |
| NTU CSub | 81.1% | 81.0% | 78.2% | 74.4% |
| NTU CSet | 82.7% | 82.5% | 80.0% | 79.4% |

These results are presented in the paper.
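Top-1 accuracy counts a sample as correct when the class with the highest score equals its ground-truth label. A minimal sketch of the metric follows; the function name is hypothetical and this is not the repository's evaluation code.

```python
def top1_accuracy(scores, labels):
    """Fraction of samples whose highest-scoring class equals the label.

    scores: one list of per-class scores per sample; labels: integer class ids.
    """
    correct = 0
    for row, label in zip(scores, labels):
        pred = max(range(len(row)), key=row.__getitem__)  # argmax over classes
        correct += int(pred == label)
    return correct / len(labels)
```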

If you have any questions, please send an e-mail to yifan.song@cripac.ia.ac.cn.

Please cite our paper when you use this code in your research.

@InProceedings{song2019richly,
title = {RICHLY ACTIVATED GRAPH CONVOLUTIONAL NETWORK FOR ACTION RECOGNITION WITH INCOMPLETE SKELETONS},
author = {Yi-Fan Song and Zhang Zhang and Liang Wang},
booktitle = {International Conference on Image Processing (ICIP)},
organization = {IEEE},
year = {2019},
}

@Article{song2020richly,
title = {Richly Activated Graph Convolutional Network for Robust Skeleton-based Action Recognition},
author = {Yi-Fan Song and Zhang Zhang and Caifeng Shan and Liang Wang},
journal = {IEEE Transactions on Circuits and Systems for Video Technology},
year = {2020},
}