Make sure you have [Ollama](https://ollama.com/) installed locally, then download and start the Llama 3 model (the first run pulls the model weights):

```bash
ollama run llama3
```
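
To confirm from Python that the Ollama server is up and that `llama3` has been pulled, you can query its local REST API. This is a minimal sketch, not part of this project: it assumes Ollama listens on its default address `http://localhost:11434` and uses the `/api/tags` endpoint, which lists locally available models. The helper names `list_local_models` and `has_model` are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default address, OLLAMA_HOST not changed


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Return the names of models the local Ollama server has pulled, e.g. "llama3:latest"."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        data = json.load(resp)
    return [m["name"] for m in data.get("models", [])]


def has_model(names: list[str], model: str) -> bool:
    """True if `model` (e.g. "llama3") equals a pulled name or the part before its ":tag"."""
    return any(n == model or n.split(":", 1)[0] == model for n in names)


if __name__ == "__main__":
    try:
        print("llama3 pulled:", has_model(list_local_models(), "llama3"))
    except (OSError, ValueError) as exc:
        # OSError covers refused connections and timeouts; ValueError covers a non-JSON reply
        print(f"Could not query Ollama at {OLLAMA_URL}: {exc}")
```

Matching on the part before `:` means `llama3:latest` and `llama3:8b` both count, while a different model such as `llama3.1:latest` does not.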

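Create and activate a virtual environment with Pipenv, then install the Python dependencies:

```bash
pipenv shell
pip install -r requirements.txt
```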

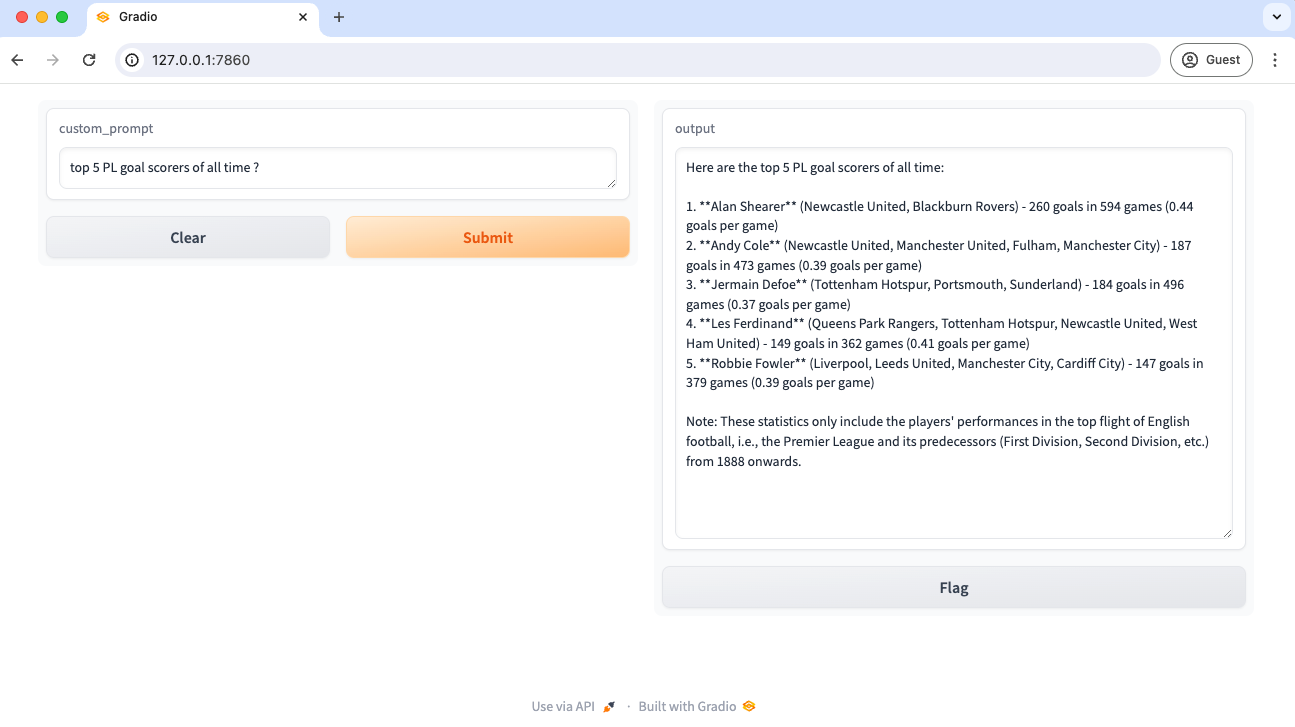

- LLM with a web UI (Gradio)

```bash
python main.py
```

Then open <http://127.0.0.1:7860> in your browser.

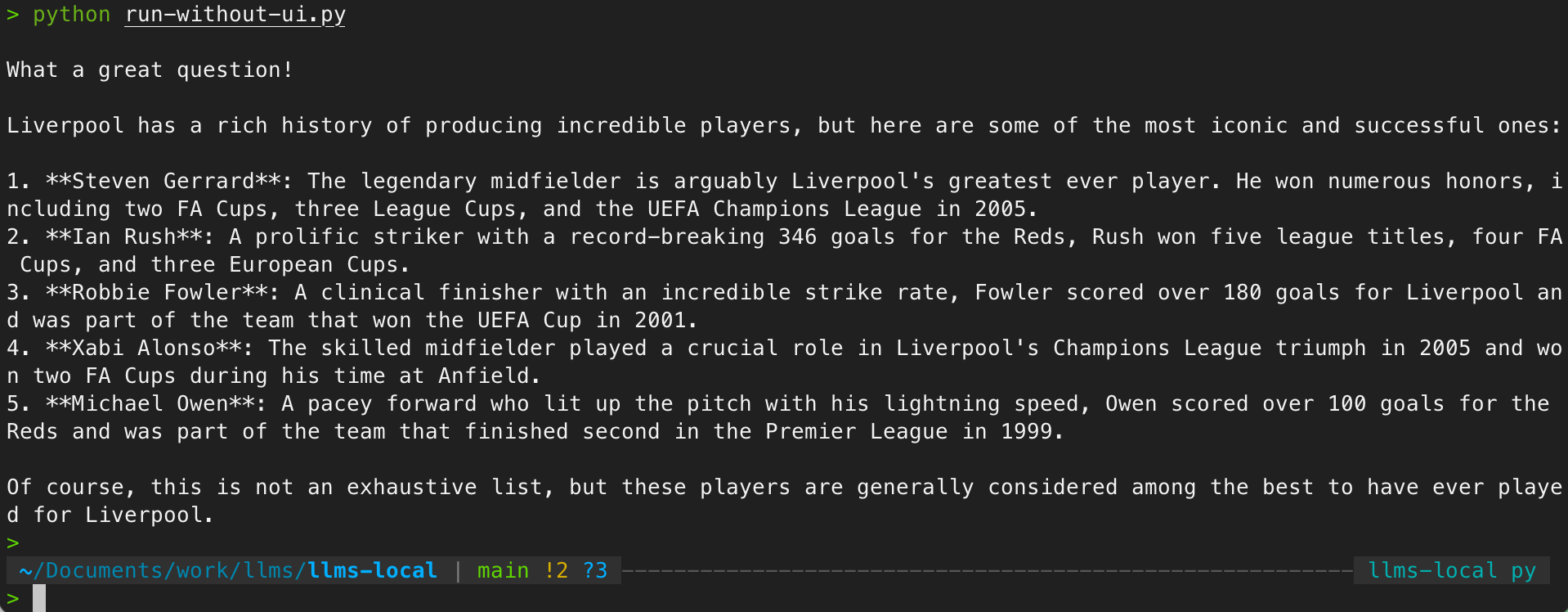
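
The contents of `main.py` are not reproduced here, so the following is only a hypothetical sketch of how a Gradio chat UI can be wired to the local model. It assumes `gradio` is installed (presumably via `requirements.txt`) and that Ollama serves its `/api/chat` endpoint at the default `http://localhost:11434`; `to_ollama_messages`, `respond`, and `build_demo` are illustrative names. Depending on version and settings, Gradio passes chat history either as `[user, assistant]` pairs or as role/content dicts, so the converter accepts both.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # assumption: default Ollama address
MODEL = "llama3"


def to_ollama_messages(message: str, history: list) -> list[dict]:
    """Turn Gradio chat history plus the new user message into Ollama chat messages."""
    msgs = []
    for item in history:
        if isinstance(item, dict):
            # "messages" format: {"role": "user" | "assistant", "content": ...}
            msgs.append({"role": item["role"], "content": item["content"]})
        else:
            # "tuples" format: [user_text, assistant_text]
            user_text, assistant_text = item
            msgs.append({"role": "user", "content": user_text})
            if assistant_text is not None:
                msgs.append({"role": "assistant", "content": assistant_text})
    msgs.append({"role": "user", "content": message})
    return msgs


def respond(message: str, history: list) -> str:
    """ChatInterface callback: send the whole conversation to Ollama and return its reply."""
    payload = {"model": MODEL, "messages": to_ollama_messages(message, history), "stream": False}
    req = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["message"]["content"]


def build_demo():
    """Create the chat UI; Gradio is imported lazily so the helpers above work without it."""
    import gradio as gr

    return gr.ChatInterface(fn=respond)
```

Calling `build_demo().launch()` would then serve the UI on Gradio's default port, 7860, which matches the URL above.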

- LLM without a UI (console)

```bash
python run-without-ui.py
```
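
`run-without-ui.py` is likewise not shown; a console chat loop against the local model might look like this hypothetical sketch. It assumes Ollama's `/api/chat` endpoint at `http://localhost:11434` with `"stream": true`, in which case the server sends one JSON object per line and the last one carries `"done": true`. The history list is kept in memory so the model sees earlier turns; `iter_stream_chunks`, `chat_stream`, and `main` are illustrative names.

```python
import json
import urllib.request
from collections.abc import Iterable, Iterator

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # assumption: default Ollama address
MODEL = "llama3"


def iter_stream_chunks(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text pieces from Ollama's newline-delimited JSON stream, stopping at "done"."""
    for raw in lines:
        if not raw.strip():
            continue  # tolerate blank lines between objects
        chunk = json.loads(raw)
        yield chunk.get("message", {}).get("content", "")
        if chunk.get("done"):
            break


def chat_stream(messages: list[dict]) -> Iterator[str]:
    """POST the conversation with streaming enabled and yield the reply as it arrives."""
    payload = {"model": MODEL, "messages": messages, "stream": True}
    req = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        # Iterating the response yields one line at a time, matching the NDJSON framing
        yield from iter_stream_chunks(resp)


def main() -> None:
    history: list[dict] = []
    while True:
        try:
            prompt = input("you> ").strip()
        except (EOFError, KeyboardInterrupt):
            break
        if prompt.lower() in {"exit", "quit"}:
            break
        if not prompt:
            continue
        history.append({"role": "user", "content": prompt})
        reply = []
        try:
            for piece in chat_stream(history):
                print(piece, end="", flush=True)
                reply.append(piece)
        except OSError as exc:
            print(f"\n[error talking to Ollama: {exc}]")
            history.pop()  # drop the unanswered user turn
            continue
        print()
        history.append({"role": "assistant", "content": "".join(reply)})
```

Calling `main()` starts the prompt; type `exit` or `quit`, or send end-of-file, to leave. Streaming prints tokens as they are generated instead of waiting for the full answer.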

- Quick and fast with Open WebUI (suggested; requires Docker)

```bash
docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
```

Then open <http://127.0.0.1:3000> in your browser (host port 3000 is mapped to port 8080 inside the container).
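
On the first start the container may need some time before the page loads. If you script the setup, a small standard-library poller such as the hypothetical `wait_for_http` below can block until the server answers. It is a sketch, not part of Open WebUI: it treats any HTTP status below 500 as "up" and retries on connection errors until a deadline.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, timeout: float = 60.0, interval: float = 1.0,
                  request_timeout: float = 5.0) -> bool:
    """Poll `url` until it answers with a status below 500, or give up after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=request_timeout) as resp:
                if resp.status < 500:
                    return True
        except urllib.error.HTTPError as exc:
            # urlopen raises for 4xx/5xx; a 4xx still means the server is listening
            if exc.code < 500:
                return True
        except OSError:
            # connection refused/reset or timeout while the container is still starting
            pass
        time.sleep(interval)
    return False
```

For example, `wait_for_http("http://127.0.0.1:3000", timeout=120)` returns `True` once Open WebUI responds, or `False` if it has not come up within two minutes.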