Table of Contents

- Introduction

- Requirements

- Step-by-step deployment

- Automated deployment

- Working with Kubernetes

- Demos

This repository contains scripts to:

- Create libvirt lab with vagrant and prepare some prerequirements.

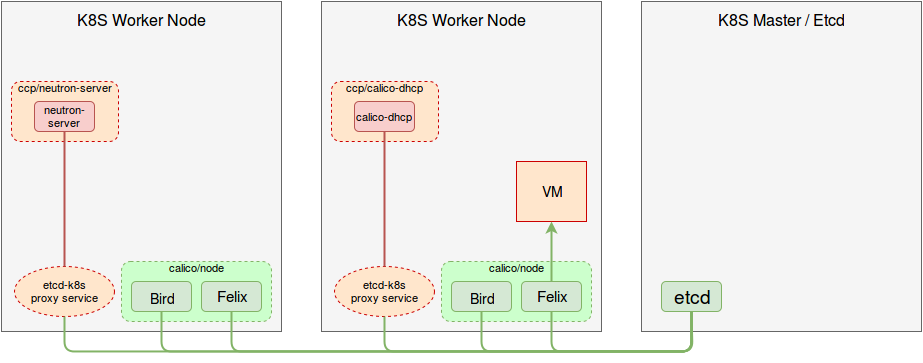

- Deploy Kubernetes with Calico networking plugin on a list of nodes using Kargo (the green part on the deployment scheme).

- Deploy OpenStack Containerized Control Plane (fuel-ccp) with

networking-calicoNeutron ML2 plugin on top of k8s (the red part on the deployment scheme).

## Requirements

On the host system the following is required:

- `libvirt`

- `vagrant`

- `vagrant-libvirt` plugin (`vagrant plugin install vagrant-libvirt`)

- `$USER` should be able to connect to libvirt (test with `virsh list --all`)

- `ansible-2.0+` (if you're going to run the fully automated deployment)
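Before bringing up the lab, these requirements can be checked in one go. A minimal sketch (hypothetical `check_requirements` and `version_ge` helpers, not part of this repo), assuming GNU `sort -V` is available for the version comparison. Ansible is only reported, not enforced, because it is needed on the host only for the automated deployment:

```bash
# Hypothetical pre-flight helpers (not part of this repo).
# version_ge A B: succeeds if version A >= version B (relies on GNU sort -V).
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

# check_requirements: reports problems on stderr and returns non-zero if a
# mandatory requirement (virsh, vagrant, vagrant-libvirt, libvirt access
# for the current user) is missing.
check_requirements() {
  local missing=0 cmd ver
  for cmd in virsh vagrant; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  if command -v vagrant >/dev/null 2>&1 &&
     ! vagrant plugin list | grep -q '^vagrant-libvirt'; then
    echo "missing: vagrant-libvirt plugin" >&2
    missing=1
  fi
  if command -v virsh >/dev/null 2>&1 && ! virsh list --all >/dev/null 2>&1; then
    echo "cannot connect to libvirt as ${USER:-current user}" >&2
    missing=1
  fi
  # Ansible is only needed for the fully automated deployment: warn, don't fail.
  if command -v ansible >/dev/null 2>&1; then
    ver=$(ansible --version | head -n1 | grep -oE '[0-9]+(\.[0-9]+)+' | head -n1)
    version_ge "$ver" 2.0 || echo "warning: ansible ${ver:-?} is older than 2.0" >&2
  else
    echo "note: ansible not found (only needed for automated deployment)" >&2
  fi
  return "$missing"
}
```

For example: `check_requirements && vagrant up`.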

## Step-by-step deployment

- Change the default IP pool for Vagrant networks, if needed:

```bash
export VAGRANT_POOL="10.100.0.0/16"
```

- Clone this repo:

```bash
git clone https://github.com/adidenko/vagrant-k8s
cd vagrant-k8s
```

- Prepare the virtual lab:

```bash
vagrant up
```

- Log in to the master node and switch to root:

```bash
vagrant ssh "$USER-k8s-00"
sudo su -
```

- Clone this repo on the master node:

```bash
git clone https://github.com/adidenko/vagrant-k8s ~/mcp
```

- Install the required software and pull the needed repos:

```bash
cd ~/mcp
./bootstrap-master.sh
```

- Set env vars for the dynamic inventory:

```bash
export INVENTORY="$(pwd)/nodes_to_inv.py"
export K8S_NODES_FILE="$(pwd)/nodes"
```

- Check the `nodes` list and make sure you have SSH access to the nodes:
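If the Ansible ping check in this step fails, testing plain SSH to each node separately shows which hosts are the problem. A minimal sketch (hypothetical `check_ssh_nodes` helper, not part of this repo), assuming the `nodes` file lists one IP address or hostname per line; blank lines and lines starting with `#` are skipped:

```bash
# Hypothetical helper (not part of this repo). Assumes the nodes file holds
# one IP address or hostname per line.
check_ssh_nodes() {
  local file=${1:-$K8S_NODES_FILE} node rest failed=0
  if [ ! -r "$file" ]; then
    echo "cannot read nodes file: ${file:-<unset>}" >&2
    return 2
  fi
  while read -r node rest || [ -n "$node" ]; do
    case "$node" in ''|'#'*) continue ;; esac
    # -n keeps ssh from swallowing the rest of the nodes file on stdin;
    # BatchMode makes password prompts or unknown host keys fail fast.
    if ssh -n -o BatchMode=yes -o ConnectTimeout=5 "$node" true; then
      echo "ok: $node"
    else
      echo "unreachable: $node" >&2
      failed=1
    fi
  done < "$file"
  return "$failed"
}
```

For example, from `~/mcp`: `check_ssh_nodes nodes`.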

```bash
cd ~/mcp
cat nodes
ansible all -m ping -i "$INVENTORY"
```

- Deploy k8s using the Kargo playbooks:

```bash
cd ~/mcp
./deploy-k8s.kargo.sh
```

- Make sure the CCP deployment config matches your deployment environment and update it if needed. You can also add your CCP reviews here:

```bash
cd ~/mcp
cat ccp.yaml
```

- Run some extra customizations:

```bash
ansible-playbook -i "$INVENTORY" playbooks/design.yaml -e @ccp.yaml
```

- Clone the CCP installer:

```bash
cd ~/mcp
git clone https://github.com/adidenko/fuel-ccp-ansible
```

- Deploy OpenStack CCP:

```bash
cd ~/mcp
# Build CCP images
ansible-playbook -i "$INVENTORY" fuel-ccp-ansible/build.yaml -e @ccp.yaml
# Deploy CCP
ansible-playbook -i "$INVENTORY" fuel-ccp-ansible/deploy.yaml -e @ccp.yaml
```

- Log in to any k8s master node and wait for the CCP deployment to complete:
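Instead of re-running `kubectl get jobs` by hand, the waiting can be scripted. A minimal sketch (hypothetical `ccp_jobs_pending` and `wait_for_ccp_jobs` helpers, not part of this repo), assuming the jobs live in the `ccp` namespace and that a job is done once `.status.succeeded` reaches `.spec.completions`. It uses kubectl's JSONPath output rather than parsing the human-readable table, whose columns differ between kubectl versions:

```bash
# Hypothetical helpers (not part of this repo).
# ccp_jobs_pending: reads "name|completions|succeeded" lines on stdin and
# prints the jobs that have not succeeded often enough yet
# (an empty completions field counts as 1, an empty succeeded as 0).
ccp_jobs_pending() {
  local name want got
  while IFS='|' read -r name want got; do
    [ -n "$name" ] || continue
    if [ "${got:-0}" -lt "${want:-1}" ]; then
      echo "$name"
    fi
  done
}

# wait_for_ccp_jobs [TIMEOUT_SECONDS]: polls the ccp namespace every 10 s
# until all jobs are complete; returns 1 on timeout (default: 3600 s).
wait_for_ccp_jobs() {
  local timeout=${1:-3600} waited=0 out pending=""
  local fmt='{range .items[*]}{.metadata.name}{"|"}{.spec.completions}{"|"}{.status.succeeded}{"\n"}{end}'
  while :; do
    # An empty job list (nothing created yet) or a kubectl error counts as "not done".
    if out=$(kubectl --namespace=ccp get jobs -o jsonpath="$fmt") && [ -n "$out" ]; then
      pending=$(printf '%s\n' "$out" | ccp_jobs_pending)
      if [ -z "$pending" ]; then
        echo "all CCP jobs complete"
        return 0
      fi
    fi
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${waited}s; pending jobs: ${pending//$'\n'/ }" >&2
      return 1
    fi
    sleep 10
    waited=$((waited + 10))
  done
}
```

For example: `wait_for_ccp_jobs 1800 && kubectl --namespace=ccp get pods -o wide`.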

```bash
# On a k8s master node
# Check CCP pods; all of them should reach the Running state
kubectl --namespace=ccp get pods -o wide
# Check the CCP jobs status; wait until all of them complete
kubectl --namespace=ccp get jobs
```

- Check Horizon:
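Horizon can take a while to answer even after its pod reports Running. A small polling sketch (hypothetical `wait_for_http` helper, not part of this repo) that treats any 2xx or 3xx status as success, since the dashboard front page typically redirects to its login page:

```bash
# Hypothetical helper (not part of this repo).
# wait_for_http URL [TRIES] [DELAY]: polls URL with curl until it answers
# with a 2xx or 3xx status; returns 1 after TRIES failed attempts.
wait_for_http() {
  local url=$1 tries=${2:-60} delay=${3:-5} code="" i
  for ((i = 1; i <= tries; i++)); do
    # curl prints 000 as the status code when the connection itself fails.
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 10 "$url")
    case "$code" in
      2??|3??) echo "$url answered HTTP $code"; return 0 ;;
    esac
    sleep "$delay"
  done
  echo "$url did not answer after $tries attempts (last code: ${code:-none})" >&2
  return 1
}
```

For example, once `HORIZON_PORT` has been set with the commands in this step: `wait_for_http "http://$ANY_K8S_NODE_IP:$HORIZON_PORT/"`.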

```bash
# On a k8s master node, look up the nodePort of the Horizon service
HORIZON_PORT=$(kubectl --namespace=ccp get svc/horizon -o go-template='{{(index .spec.ports 0).nodePort}}')
echo $HORIZON_PORT
# Access Horizon via the nodePort; ANY_K8S_NODE_IP is the IP of any k8s node
curl -i -s "$ANY_K8S_NODE_IP:$HORIZON_PORT"
```

## Automated deployment

Just run this:

```bash
export VAGRANT_ANSIBLE=true
export VAGRANT_DEPLOY_K8=true
export VAGRANT_DEPLOY_CCP=true
vagrant up
```

Additional environment variables for customization:

```bash
export KARGO_REPO="https://github.com/adidenko/kargo"
export KARGO_COMMIT="update-calico-unit"
export VAGRANT_DEPLOY_K8_CMD="./deploy-k8s.kargo.sh"
# Custom yaml for Kargo
export KARGO_CUSTOM_YAML="$(pwd)/my-custom.yaml"
# Custom Kargo inventory
export KARGO_INVENTORY="$(pwd)/my-inventory.cfg"
# If you want to test Calico route reflectors
export CALICO_RRS=1
# Deploy Prometheus monitoring
export VAGRANT_DEPLOY_PROMETHEUS="true"
```

## Working with Kubernetes

- Log in to one of your kube-master nodes and run:
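The registry catalog call in this step lists repository names only. The Docker Registry HTTP API v2 also exposes `GET /v2/<name>/tags/list`, so every `registry/repo:tag` reference can be printed. A rough sketch (hypothetical `json_string_array` and `list_registry_tags` helpers, not part of this repo) that parses the JSON with `sed` to avoid extra dependencies; this only works for the flat, single-line arrays the registry returns, so use a real JSON parser for anything more involved:

```bash
# Hypothetical helpers (not part of this repo).
# json_string_array KEY: prints the items of a flat JSON array of strings
# stored under KEY, one per line. Crude on purpose: assumes a single-line
# document and items without escaped quotes or commas.
json_string_array() {
  sed -n "s/.*\"$1\": *\[\([^]]*\)].*/\1/p" | tr ',' '\n' |
    sed 's/^ *"//; s/" *$//' | grep -v '^$'
}

# list_registry_tags [REGISTRY]: prints REGISTRY/repo:tag for every image.
# A repository whose "tags" is null (no tags left) prints nothing.
list_registry_tags() {
  local registry=${1:-127.0.0.1:31500} repo tag
  curl -s "http://$registry/v2/_catalog" | json_string_array repositories |
    while read -r repo; do
      curl -s "http://$registry/v2/$repo/tags/list" | json_string_array tags |
        while read -r tag; do
          echo "$registry/$repo:$tag"
        done
    done
}
```

For example: `list_registry_tags 127.0.0.1:31500`.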

```bash
# List images in the registry
curl -s 127.0.0.1:31500/v2/_catalog | python -m json.tool
# Check CCP jobs status
kubectl --namespace=ccp get jobs
# Check CCP pods
kubectl --namespace=ccp get pods -o wide
```

- Troubleshooting:
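When several pods are failing, collecting their events and logs one `$POD_NAME` at a time gets tedious. A sketch (hypothetical `ccp_unhealthy_pods` and `dump_ccp_unhealthy_logs` helpers, not part of this repo) that reads the standard `kubectl get pods --no-headers` columns (NAME, READY, STATUS, RESTARTS, AGE) and dumps the tail of `describe` and `logs` for every pod that is neither Completed nor Running with all containers ready:

```bash
# Hypothetical helpers (not part of this repo).
# ccp_unhealthy_pods: reads `kubectl get pods --no-headers` lines on stdin
# and prints pods that are neither Completed nor Running and fully ready.
ccp_unhealthy_pods() {
  awk '{
    split($2, ready, "/")
    if ($3 == "Completed") next
    if ($3 != "Running" || ready[1] != ready[2]) print $1
  }'
}

# dump_ccp_unhealthy_logs: for each unhealthy pod in the ccp namespace,
# prints the last lines of `kubectl describe` (which include the events)
# and of the pod logs. Multi-container pods need `-c CONTAINER` for logs.
dump_ccp_unhealthy_logs() {
  local pod
  kubectl --namespace=ccp get pods --no-headers | ccp_unhealthy_pods |
    while read -r pod; do
      echo "===== $pod ====="
      kubectl --namespace=ccp describe pod "$pod" | tail -n 20
      kubectl --namespace=ccp logs "$pod" --tail=50
    done
}
```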

```bash
# Get logs from a pod
kubectl --namespace=ccp logs $POD_NAME
# Execute a command in a pod
kubectl --namespace=ccp exec $POD_NAME -- cat /etc/resolv.conf
kubectl --namespace=ccp exec $POD_NAME -- curl http://etcd-client:2379/health
# Run a container
docker run -t -i 127.0.0.1:31500/ccp/neutron-dhcp-agent /bin/bash
```

- Network checker:

```bash
cd ~/mcp
./deploy-netchecker.sh
# or in the ccp namespace
./deploy-netchecker.sh ccp
```

- CCP:
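As a non-interactive alternative to the shell session in this step, the same credentials can be passed with `docker run -e`. A sketch (hypothetical `ccp_openstack` helper and `CCP_CLI_IMAGE` override, neither part of this repo), assuming the image runs the given command directly (as the interactive `/bin/bash` invocation suggests, i.e. no ENTRYPOINT in the way) and that `keystone` resolves inside the container, exactly as the interactive session already requires:

```bash
# Hypothetical helper (not part of this repo). CCP_CLI_IMAGE is a made-up
# override; the default is the nova-base image used in this step.
# Assumes the image has no ENTRYPOINT, so "$@" runs as the container command.
ccp_openstack() {
  docker run --rm \
    -e OS_USERNAME=admin \
    -e OS_PASSWORD=password \
    -e OS_TENANT_NAME=admin \
    -e OS_REGION_NAME=RegionOne \
    -e OS_AUTH_URL=http://keystone:35357 \
    "${CCP_CLI_IMAGE:-127.0.0.1:31500/ccp/nova-base}" "$@"
}
```

For example: `ccp_openstack openstack service list` or `ccp_openstack neutron agent-list`.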

```bash
# Run bash in one of the containers
docker run -t -i 127.0.0.1:31500/ccp/nova-base /bin/bash
# Inside the container, export credentials
export OS_USERNAME=admin
export OS_PASSWORD=password
export OS_TENANT_NAME=admin
export OS_REGION_NAME=RegionOne
export OS_AUTH_URL=http://keystone:35357
# Run CLI commands
openstack service list
neutron agent-list
```

## Demos

- General demo showing that this PoC works

- Demo showing cross-workload security: how to allow connections between a Kubernetes namespace and an OpenStack tenant while isolating them from other namespaces and tenants.