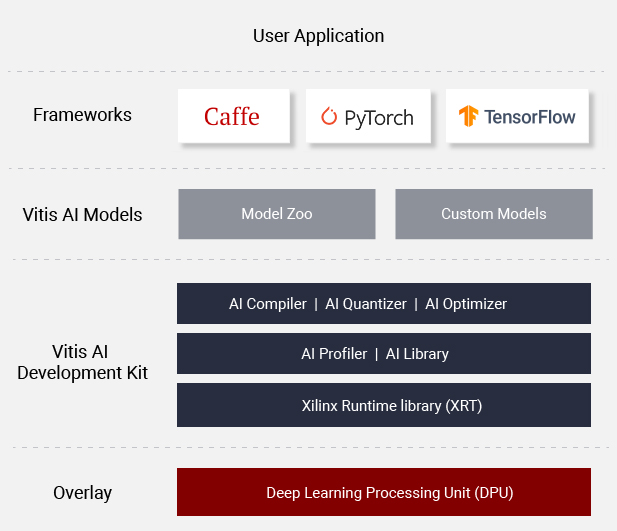

Vitis AI is Xilinx’s development stack for AI inference on Xilinx hardware platforms, including both edge devices and Alveo cards. It consists of optimized IP, tools, libraries, models, and example designs. It is designed with high efficiency and ease of use in mind, unleashing the full potential of AI acceleration on Xilinx FPGA and ACAP.

Vitis AI is composed of the following key components:

- AI Model Zoo - A comprehensive set of pre-optimized models that are ready to deploy on Xilinx devices.

- AI Optimizer - An optional model optimizer that can prune a model by up to 90%. It is separately available with commercial licenses.

- AI Quantizer - A powerful quantizer that supports model quantization, calibration, and fine tuning.

- AI Compiler - Compiles the quantized model to a highly efficient instruction set and data flow.

- AI Profiler - Performs an in-depth analysis of the efficiency and utilization of an AI inference implementation.

- AI Library - Offers high-level yet optimized C++ APIs for AI applications from edge to cloud.

- DPU - Efficient and scalable IP cores that can be customized to meet the needs of many different applications.

- For more details on the different DPUs available, please click here.

Learn More: Vitis AI Overview

See What's New

- Release Notes

- Vitis AI Quantizer and DNNDK runtime are open source

- 14 new reference models in the AI Model Zoo (PyTorch, Caffe, TensorFlow)

- VAI Quantizer supports optimized models (pruned)

- DPU naming scheme has been updated to be consistent across all configurations

- Introducing Vitis AI profiler for edge and cloud

- VAI DPUs supported in ONNXRuntime and TVM

- Added Alveo U50/U50LV support

- Added Alveo U280 support

- Alveo U50/U50LV DPU DPUCAHX8H micro-architecture improvement

- DPU TRD upgraded to support Vitis 2020.1 and Vivado 2020.1

- Vitis AI for PyTorch CNN general access (Beta version)

Getting Started

Two options are available for installing the containers with the Vitis AI tools and resources.

- Pre-built containers on Docker Hub: xilinx/vitis-ai

- Build containers locally with Docker recipes: Docker Recipes

Installation

- Install Docker, if Docker is not already installed on your machine.

- Clone the Vitis-AI repository to obtain the examples, reference code, and scripts.

git clone --recurse-submodules https://github.com/Xilinx/Vitis-AI

cd Vitis-AI
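Before running the steps above, it can help to confirm that the required tools are on your PATH. The following is a minimal sketch, not part of the Vitis-AI repository; the helper name `missing_tools` is hypothetical and the check only tests that the commands exist, not that the Docker daemon is running.

```shell
#!/bin/sh
# Hypothetical helper: print each named command that is NOT on PATH,
# one per line, and return non-zero if any is missing.
missing_tools() {
    status=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "$tool"
            status=1
        fi
    done
    return "$status"
}

# Check the two tools used in the installation steps.
if missing=$(missing_tools git docker); then
    echo "git and docker found"
else
    # Unquoted on purpose so several names are joined on one line.
    echo "missing:" $missing
fi
```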

Using Pre-built Docker

Download the latest Vitis AI Docker with the following command. This container runs on CPU.

docker pull xilinx/vitis-ai:latest

To run the container, use the following command:

./docker_run.sh xilinx/vitis-ai:latest

Building Docker from Recipe

Two types of Docker recipes are provided: a CPU recipe and a GPU recipe. If you have a compatible NVIDIA graphics card with CUDA support, you can use the GPU recipe; otherwise, use the CPU recipe.
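The choice between the two recipes can be scripted. The sketch below is an illustration only: the function name `pick_vitis_image` is hypothetical, and it assumes that finding `nvidia-smi` on the PATH is a good enough hint that a usable NVIDIA driver is installed. It does not verify CUDA compatibility, and the selected image must still be built with the matching recipe.

```shell
#!/bin/sh
# Hypothetical helper: print the GPU image tag if the probe command is on
# PATH, otherwise the CPU image tag. The probe defaults to nvidia-smi
# (an assumption; presence of the tool does not prove CUDA support).
pick_vitis_image() {
    probe=${1:-nvidia-smi}
    if command -v "$probe" >/dev/null 2>&1; then
        echo "xilinx/vitis-ai-gpu:latest"
    else
        echo "xilinx/vitis-ai-cpu:latest"
    fi
}

image=$(pick_vitis_image)
echo "Selected image: $image"
```

The probe is passed as a parameter so the selection logic can be exercised on machines without a GPU.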

CPU Docker

Use the following commands to build the CPU Docker image:

cd ./docker

./docker_build_cpu.sh

To run the CPU Docker container, use the following command:

./docker_run.sh xilinx/vitis-ai-cpu:latest

GPU Docker

Use the following commands to build the GPU Docker image:

cd ./docker

./docker_build_gpu.sh

To run the GPU Docker container, use the following command:

./docker_run.sh xilinx/vitis-ai-gpu:latest

Use the file ./docker_run.sh as a reference for Docker launch scripts, and modify it as needed. More details can be found here: Run Docker Container
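As a starting point for your own launcher, the sketch below assembles a `docker run` command and prints it instead of executing it. It is not the content of ./docker_run.sh: the function name `vitis_run` and the `DRY_RUN` variable are hypothetical, and the options are limited to ones that also appear in the X11 example in this README.

```shell
#!/bin/sh
# Hypothetical launcher: build a docker command for the given image,
# mounting a host directory at /workspace. By default the command is only
# printed; set DRY_RUN=0 to actually execute it.
vitis_run() {
    image=$1
    workdir=${2:-$(pwd)}
    # Reuse the function's positional parameters as the argument list.
    set -- docker run -v "$workdir:/workspace" -w /workspace \
        -it --rm --network=host "$image" bash
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Print the command that would start the CPU container with /tmp mounted.
vitis_run xilinx/vitis-ai-cpu:latest /tmp
```

Printing the command first makes it easy to review mounts and options before starting a container.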

Advanced - X11 Support for Examples on Alveo

Some examples in VART and Vitis-AI-Library for Alveo cards need X11 support to display images. This requires an X11 server at your terminal and some modifications to the **./docker_run.sh** file to enable image display. For example, you could use the following script to start the Vitis-AI CPU docker for Alveo with X11 support.

#!/bin/bash

HERE=$(pwd) # Absolute path of current directory

user=`whoami`

uid=`id -u`

gid=`id -g`

xclmgmt_driver="$(find /dev -name xclmgmt\*)"

docker_devices=""

for i in ${xclmgmt_driver} ;

do

docker_devices+="--device=$i "

done

render_driver="$(find /dev/dri -name renderD\*)"

for i in ${render_driver} ;

do

docker_devices+="--device=$i "

done

rm -Rf /tmp/.Xauthority

cp $HOME/.Xauthority /tmp/

chmod -R a+rw /tmp/.Xauthority

docker run \

$docker_devices \

-v /opt/xilinx/dsa:/opt/xilinx/dsa \

-v /opt/xilinx/overlaybins:/opt/xilinx/overlaybins \

-e USER=$user -e UID=$uid -e GID=$gid \

-v $HERE:/workspace \

-v /tmp/.X11-unix:/tmp/.X11-unix \

-v /tmp/.Xauthority:/tmp/.Xauthority \

-e DISPLAY=$DISPLAY \

-w /workspace \

-it \

--rm \

--network=host \

xilinx/vitis-ai-cpu:latest \

bash

Before running this script, make sure that either a local X11 server is running (if you are using a Windows-based ssh terminal to connect to a remote server) or you have run the xhost + command in a terminal (if you are using Linux with a desktop). If you are connecting to the remote server over ssh, also remember to enable X11 forwarding, either in your Windows ssh tool's settings or with the -X option on the ssh command line.
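The script above copies `$HOME/.Xauthority` and passes `$DISPLAY` into the container, so both need to be in place on the host first. The following is a minimal host-side check, offered as a sketch; the function name `x11_preflight` is hypothetical, and passing the check does not guarantee that the X server will accept connections.

```shell
#!/bin/sh
# Hypothetical pre-launch check.
# Arguments: the DISPLAY value and the path to the Xauthority file.
# Prints one line per problem and returns non-zero if any is found.
x11_preflight() {
    display=$1
    xauth_file=$2
    problems=0
    if [ -z "$display" ]; then
        echo "DISPLAY is not set"
        problems=1
    fi
    if [ ! -r "$xauth_file" ]; then
        echo "cannot read $xauth_file"
        problems=1
    fi
    return "$problems"
}

if x11_preflight "${DISPLAY:-}" "${HOME:-}/.Xauthority"; then
    echo "X11 prerequisites look OK"
else
    echo "fix the issues above before starting the container"
fi
```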

Get Started with Examples

Programming with Vitis AI

Vitis AI offers a unified set of high-level C++/Python programming APIs to run AI applications across edge-to-cloud platforms, including DPU for Alveo and DPU for Zynq UltraScale+ MPSoC and Zynq-7000. This makes it easy to port AI applications from cloud to edge and vice versa. Seven samples in VART Samples are available to help you get familiar with the unified programming APIs.

| ID | Example Name | Models | Framework | Notes |
|---|---|---|---|---|
| 1 | resnet50 | ResNet50 | Caffe | Image classification with VART C++ APIs. |
| 2 | resnet50_mt_py | ResNet50 | TensorFlow | Multi-threading image classification with VART Python APIs. |
| 3 | inception_v1_mt_py | Inception-v1 | TensorFlow | Multi-threading image classification with VART Python APIs. |
| 4 | pose_detection | SSD, Pose detection | Caffe | Pose detection with VART C++ APIs. |
| 5 | video_analysis | SSD | Caffe | Traffic detection with VART C++ APIs. |
| 6 | adas_detection | YOLO-v3 | Caffe | ADAS detection with VART C++ APIs. |
| 7 | segmentation | FPN | Caffe | Semantic segmentation with VART C++ APIs. |

For more information, please refer to the Vitis AI User Guide.

References

- Vitis AI Overview

- Vitis AI User Guide

- Vitis AI Model Zoo with Performance & Accuracy Data

- Vitis AI Tutorials

- Developer Articles

- Performance Whitepaper

System Requirements

Questions and Support