Disentangled Representation Learning for 3D Face Shape

This repository is the implementation of our CVPR 2019 paper "Disentangled Representation Learning for 3D Face Shape".

Authors: Zihang Jiang, Qianyi Wu, Keyu Chen and Juyong Zhang.

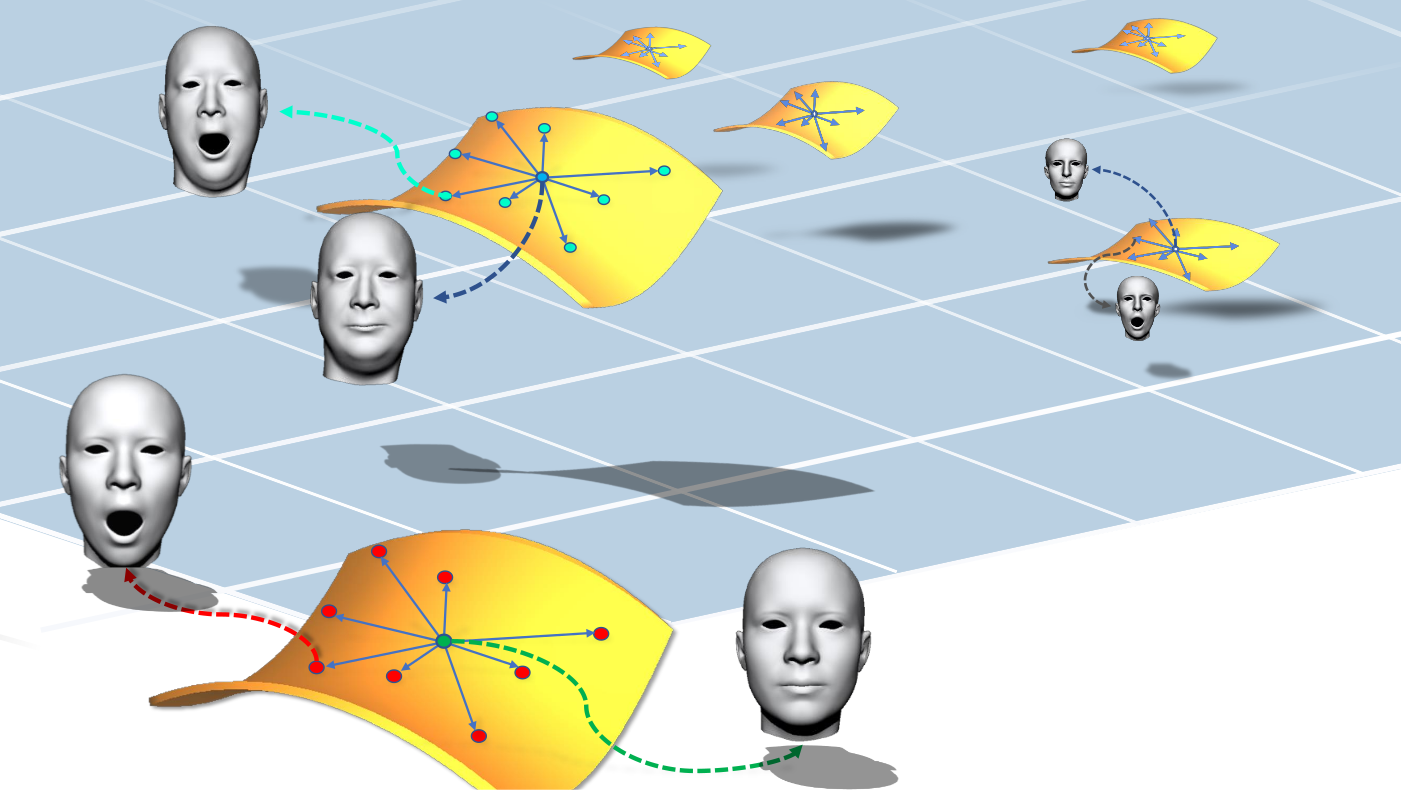

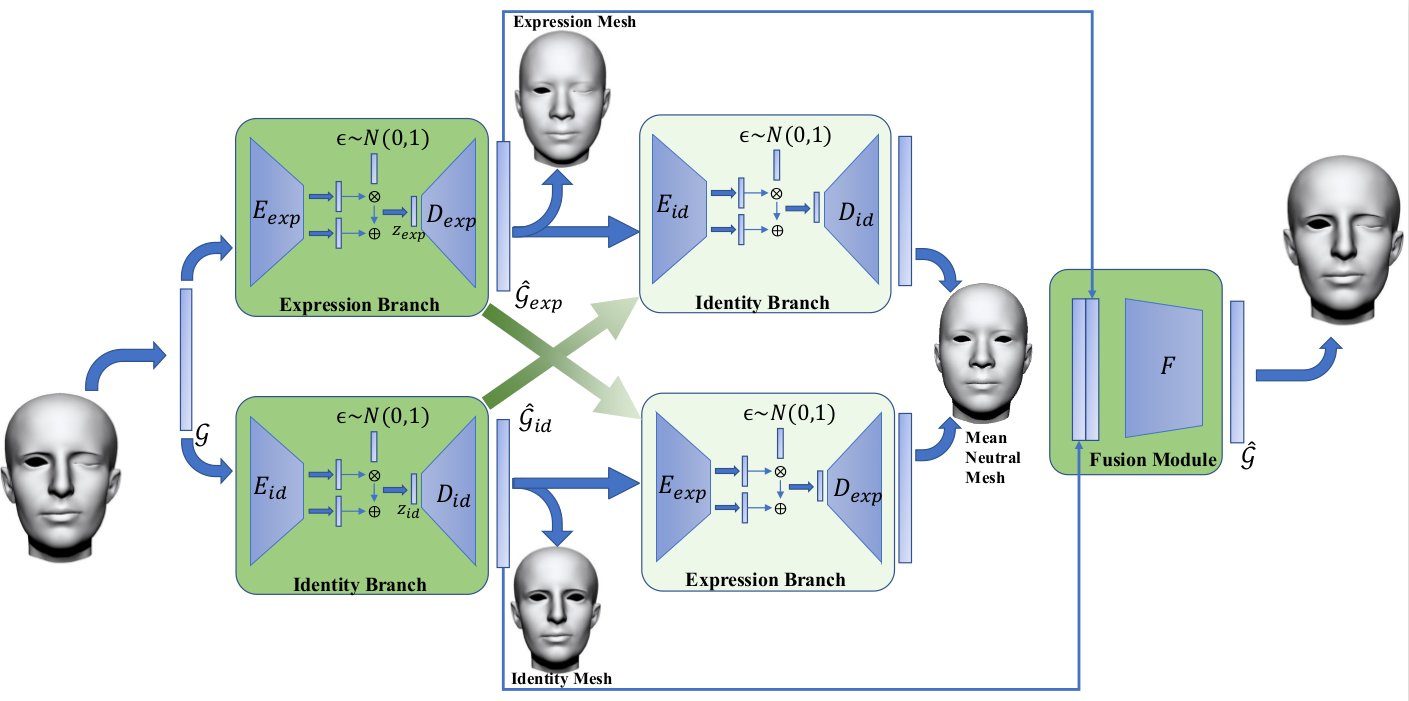

Our Proposed Framework

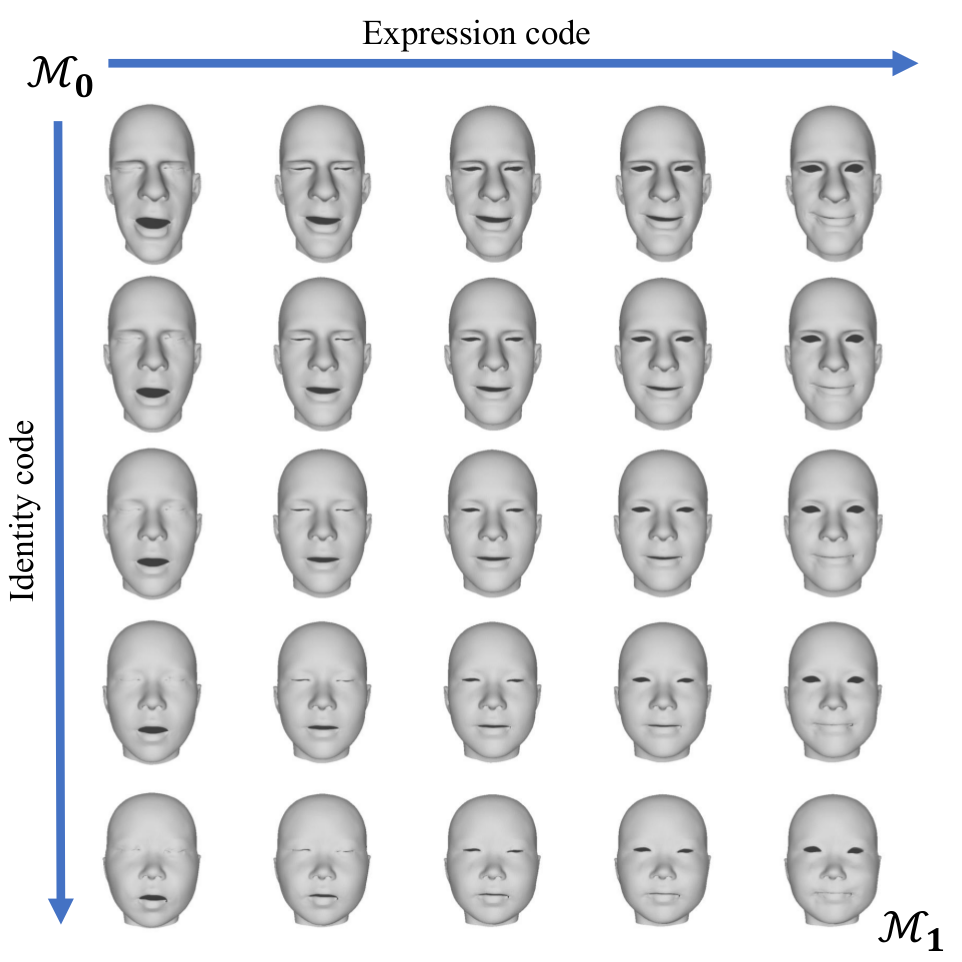

Result Examples

We can manipulate 3D face shape in expression and identity code space.

Usage

Dataset

Please download the FaceWarehouse dataset and extract it into the corresponding directory, e.g. data/FaceWarehouse_Data.

Requirements

1. Basic Environment

tensorflow-gpu = 1.9.0

Keras = 2.2.2

openmesh (both the Python version, which can be installed with pip install openmesh, and the compiled version are required)
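To confirm that an environment matches the pinned versions above, a small check like the following may help. It is only a sketch: the function names are hypothetical, and it assumes the usual import names (tensorflow-gpu is imported as tensorflow, Keras as keras).

```python
import importlib

# Versions pinned in the list above. The import names are assumptions:
# tensorflow-gpu is imported as "tensorflow" and Keras as "keras".
REQUIRED = {"tensorflow": "1.9.0", "keras": "2.2.2"}


def installed_version(module_name):
    """Return the module's __version__, or None if it cannot be imported."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return None
    return getattr(module, "__version__", None)


def version_report(required=REQUIRED, lookup=installed_version):
    """Compare each pinned version with what `lookup` finds."""
    lines = []
    for name, wanted in required.items():
        found = lookup(name)
        status = "ok" if found == wanted else "mismatch"
        lines.append("{}: wanted {}, found {} ({})".format(name, wanted, found, status))
    return lines
```

Running print("\n".join(version_report())) inside the target environment lists one line per package. The lookup parameter exists only so the comparison can be exercised without importing the real packages.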

1.5 Quick Start

For convenience, we have included the repos for get_dr and get_mesh in the third party folder and provided scripts for easy installation. See the readme in the third party folder for details.

2. Requirements for Data Processing (About Deformation Representation Feature)

- We provide a Python interface for obtaining the Deformation Representation (DR) feature. Code is available here to generate the DR feature of each obj file with respect to one specific reference mesh. Replace get_dr.cpython-36m-x86_64-linux-gnu.so with your compiled one and run

  python get_fwh_dr.py

  After that, you can change data_path and data_format in src/data_utils.py.

- To recover a mesh from the DR feature, you need to compile get_mesh and replace get_mesh.cpython-36m-x86_64-linux-gnu.so in the src folder.

- Also, the Python version of libigl is needed for mesh I/O, and you need to replace pyigl.so in the src folder if the current one does not match your environment.
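Before training, it can be useful to verify that the dataset directory and the compiled modules mentioned above are in place. The sketch below uses only paths named in this README; the helper name missing_paths is hypothetical, and get_dr is left out because its location is not stated here.

```python
import os

# Paths named in this README, relative to the repository root.
# Adjust them if your layout or Python version differs.
EXPECTED_PATHS = [
    "data/FaceWarehouse_Data",
    "src/get_mesh.cpython-36m-x86_64-linux-gnu.so",
    "src/pyigl.so",
]


def missing_paths(repo_root, relative_paths=EXPECTED_PATHS):
    """Return the entries of relative_paths that do not exist under repo_root."""
    return [
        rel for rel in relative_paths
        if not os.path.exists(os.path.join(repo_root, *rel.split("/")))
    ]
```

Calling missing_paths(".") from the repository root returns an empty list when everything is present. Note that the cpython-36m part of the file names corresponds to a CPython 3.6 build, so a module compiled under another Python version will carry a different suffix.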

After all requirements are satisfied, you can use the following commands to train and test the model.

Training

Run the following command to generate training and testing data for 3D face DR learning

python src/data_utils.py

We have provided the DR features of the expression meshes on the mean face used in this project here.

Run this command to pretrain the identity branch

python main.py -m gcn_vae_id -e 20

Run the following command to pretrain the expression branch

python main.py -m gcn_vae_exp -e 20

Run the following command for end-to-end training of the whole framework

python main.py -m fusion_dr -e 20

Testing

You can test each branch and the whole framework with a command like

python main.py -m fusion_dr -l -t

Note that we also provide our pretrained model on Google Drive.
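The training and testing commands above can also be chained from a small driver script. The following is a minimal sketch, assuming it is run from the repository root; the model names and flags are copied from the commands above, while the function names are hypothetical.

```python
import subprocess

# Model names, in the order used by the commands above.
TRAIN_STAGES = ["gcn_vae_id", "gcn_vae_exp", "fusion_dr"]


def build_train_commands(epochs=20, python="python"):
    """Build one argv list per training stage, in the order given above."""
    return [[python, "main.py", "-m", stage, "-e", str(epochs)] for stage in TRAIN_STAGES]


def build_test_command(model="fusion_dr", python="python"):
    """Build the argv list for the test invocation shown above."""
    return [python, "main.py", "-m", model, "-l", "-t"]


def run_pipeline(epochs=20, runner=subprocess.run):
    """Run all training stages and then the test, stopping at the first failure."""
    for argv in build_train_commands(epochs) + [build_test_command()]:
        # check=True raises CalledProcessError if a stage exits with an error.
        runner(argv, check=True)
```

Calling run_pipeline() executes the two pretraining stages, the end-to-end stage, and the test in order. The runner parameter is there so the command sequence can be inspected without launching any training.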

Evaluation

The measurement.py file and the STED folder are for computing the numerical results reported in our paper, including two reconstruction metrics and two decomposition metrics.
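As an illustration of what a reconstruction metric can look like, the sketch below computes the mean per-vertex Euclidean distance between two meshes that share the same topology. This is an assumption made for illustration only: it is not taken from measurement.py, the function name is hypothetical, and the metrics actually used are defined in the paper.

```python
import math


def mean_vertex_distance(vertices_a, vertices_b):
    """Average Euclidean distance between corresponding 3D vertices."""
    if len(vertices_a) != len(vertices_b):
        raise ValueError("meshes must have the same number of vertices")
    if not vertices_a:
        raise ValueError("meshes must contain at least one vertex")
    total = 0.0
    for (ax, ay, az), (bx, by, bz) in zip(vertices_a, vertices_b):
        total += math.sqrt((ax - bx) ** 2 + (ay - by) ** 2 + (az - bz) ** 2)
    return total / len(vertices_a)
```

Each argument is a sequence of (x, y, z) tuples, with vertex i of one mesh corresponding to vertex i of the other.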

Notes

- If you train the model on your own dataset (whose topology differs from the FaceWarehouse mesh), you have to recompute Mean_Face.obj and the expression meshes on the mean face as mentioned in our paper, and regenerate FWH_adj_matrix.npz in the data/disentangle folder using src/igl_test.py.

- We will release scripts for the data augmentation method mentioned in our paper. You can put the augmented interpolated data in data/disentangle/Interpolated_results.

- Currently we have fully tested this package on Ubuntu 16.04 LTS with CUDA 9.0. Windows and macOS are not guaranteed to work.

- Errors such as Unknown CMake command "pybind11_add_module", which you may encounter while building get_mesh and get_dr, can be solved by running

  git submodule init

  git submodule update --recursive

- If you have comments or questions, please contact Zihang Jiang (jzh0103@mail.ustc.edu.cn), Qianyi Wu (wqy9619@mail.ustc.edu.cn), Keyu Chen (cky95@mail.ustc.edu.cn), Juyong Zhang (juyong@ustc.edu.cn).
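Regarding the first note above: the adjacency matrix depends only on the mesh topology, which is why it must be regenerated for a different mesh. The sketch below shows the underlying idea by collecting vertex neighbors from triangle faces. It is not the code in src/igl_test.py, it does not reproduce the file format of FWH_adj_matrix.npz, and the function name is hypothetical.

```python
def neighbors_from_faces(num_vertices, faces):
    """Map each vertex index to the sorted list of vertices it shares an edge with.

    `faces` holds triangles as triples of zero-based vertex indices
    (obj files store one-based indices, so subtract 1 when reading them).
    """
    neighbors = [set() for _ in range(num_vertices)]
    for a, b, c in faces:
        # Every triangle contributes its three undirected edges.
        for i, j in ((a, b), (b, c), (c, a)):
            neighbors[i].add(j)
            neighbors[j].add(i)
    return [sorted(n) for n in neighbors]
```

A neighbor list like this is a sparse form of the adjacency matrix: entry (i, j) of the matrix is nonzero exactly when j appears in the list for vertex i.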

Citation

Please cite the following paper if it helps your research:

Disentangled Representation Learning for 3D Face Shape

@inproceedings{Jiang2019Disentangled,

title={Disentangled Representation Learning for 3D Face Shape},

author={Jiang, Zi-Hang and Wu, Qianyi and Chen, Keyu and Zhang, Juyong},

booktitle={IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2019}

}

Acknowledgement

The GCN code was inspired by https://github.com/tkipf/keras-gcn.

License

Free for personal or research use; for commercial use, please contact us via email.