Problems with gensim coherence and coherence_texts

hlra opened this issue

Hi everyone,

First of all, thanks for the enormously helpful toolkit. I am trying to use it as an evaluation tool for topic modelling in gensim.

I get the following warnings when running the lda_utils.tm_gensim.evaluate_topic_models function on a gensim corpus:

2018-05-08 14:38:39,658 : INFO : multiproc models: starting with 13 parameter sets on 1 documents (= 13 tasks) and 4 processes
C:\Topic Modelling\venv1\lib\site-packages\gensim\topic_coherence\direct_confirmation_measure.py:193: RuntimeWarning: invalid value encountered in true_divide
  numerator = (co_occur_count / num_docs) + EPSILON
C:\Topic Modelling\venv1\lib\site-packages\gensim\topic_coherence\direct_confirmation_measure.py:194: RuntimeWarning: invalid value encountered in true_divide
  denominator = (w_prime_count / num_docs) * (w_star_count / num_docs)
C:\Topic Modelling\venv1\lib\site-packages\gensim\topic_coherence\direct_confirmation_measure.py:189: RuntimeWarning: invalid value encountered in true_divide
  co_doc_prob = co_occur_count / num_docs

As a consequence, the gensim measures requiring tokenized texts (coherence_gensim_c_npmi, etc.) cannot be calculated.

I tried to locate the problem more specifically. My best guess is that it is actually a problem with the gensim coherence model. I suspect the coherence model functions cannot infer the correct num_docs from the specified arguments. The code I use is:

models = lda_utils.tm_gensim.evaluate_topic_models(dtm, varying_params, const_params, metric=lda_utils.tm_gensim.AVAILABLE_METRICS, return_models=True, coherence_gensim_texts=coherence_gensim_texts, coherence_gensim_kwargs={'dictionary': topicmod.evaluate.FakedGensimDict.from_vocab(id2word)})

Here, dtm is a gensim corpus transformed with the gensim function corpus2csc, coherence_gensim_texts is a 2D list containing the tokenized text documents as required, and id2word is a gensim dictionary.

Therefore I guess the responsible code begins somewhere here: tmtoolkit/tmtoolkit/topicmod/evaluate.py, lines 336 to 349 (commit 225ac98).

What could be other reasons for these warnings? If it is really the num_docs problem, what could be a possible reason that num_docs cannot be inferred? Any ideas?
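For context on the warning text itself: NumPy reports "invalid value encountered in (true_)divide" when a division is undefined, as with 0 / 0 (result nan), while a non-zero value divided by 0 gives inf with a "divide by zero" warning instead. So the warnings are at least consistent with num_docs being 0 while the counts are also 0. The sketch below is plain NumPy, not gensim's code; divide_counts is a hypothetical helper, and the exact message wording differs between NumPy versions.

```python
import warnings

import numpy as np


def divide_counts(counts, num_docs):
    """Divide (co-)occurrence counts by a document count, like the lines quoted in the warnings."""
    return np.asarray(counts, dtype=float) / num_docs


with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")  # record every warning instead of showing it once
    # 0 / 0 is undefined: NumPy returns nan and warns about an invalid value
    zero_over_zero = divide_counts([0], 0)
    # a non-zero count divided by 0 returns inf and warns about division by zero
    nonzero_over_zero = divide_counts([3], 0)

print(zero_over_zero, nonzero_over_zero)
for w in caught:
    print(w.category.__name__, w.message)
```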

You have two options:

- You pass dtm, vocab and texts. dtm must be a 2D matrix with the shape (number of documents, vocab size). If it is sparse, it must be in COO format (see SciPy). If you're using tmtoolkit to generate the DTM, this is already the case; if not, you have to get it into that format somehow. Next, you should pass your vocabulary as coherence_gensim_vocab=vocab, where vocab is a list (or NumPy array, I'm not sure at the moment) of the words in your corpus, in the same order as the columns of your DTM. Finally, you pass the texts as you already did.
- You pass a gensim_model, a gensim_corpus and texts.

You shouldn't pass a dictionary via coherence_gensim_kwargs.
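For the first option, a gensim bag-of-words corpus can be turned into a DTM of that shape directly. A minimal SciPy sketch, assuming word ids run from 0 to vocab size - 1 as in a gensim Dictionary; bow_corpus_to_dtm is a hypothetical helper, not a tmtoolkit or gensim function. With a gensim Dictionary, a matching vocab list should be obtainable as [dictionary[i] for i in range(len(dictionary))].

```python
import numpy as np
from scipy.sparse import coo_matrix


def bow_corpus_to_dtm(corpus, vocab_size):
    """Build a (number of documents, vocab size) COO matrix from a bag-of-words corpus.

    `corpus` is a list of documents, each a list of (word_id, count) tuples,
    i.e. the format that gensim's Dictionary.doc2bow produces.
    """
    rows, cols, vals = [], [], []
    for doc_idx, doc in enumerate(corpus):
        for word_id, count in doc:
            rows.append(doc_idx)   # one row per document
            cols.append(word_id)   # one column per vocabulary entry
            vals.append(count)
    return coo_matrix(
        (np.array(vals, dtype=np.int64),
         (np.array(rows, dtype=np.int64), np.array(cols, dtype=np.int64))),
        shape=(len(corpus), vocab_size),
    )


# toy corpus: 2 documents over a vocabulary of 3 words
corpus = [[(0, 2), (2, 1)], [(1, 1), (2, 3)]]
vocab = ["apple", "banana", "cherry"]  # column j of the DTM corresponds to vocab[j]
dtm = bow_corpus_to_dtm(corpus, len(vocab))
print(dtm.shape)
print(dtm.toarray())
```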

Thanks for the detailed reply, and sorry for the late response; I only got back to working on this now. I tried option two this way:

models = tmtoolkit.topicmod.tm_gensim.evaluate_topic_models(varying_params, const_params, metric=tmtoolkit.topicmod.tm_gensim.AVAILABLE_METRICS, return_models=True, texts=texts, gensim_model=lda, gensim_corpus=corpus)

However, a DTM still seems to be required:

File "<input>", line 24, in <module>
File "C:\Topic Modelling\venv1\lib\site-packages\tmtoolkit\topicmod\tm_gensim.py", line 148, in evaluate_topic_models
  n_max_processes=n_max_processes, return_models=return_models)
File "C:\Topic Modelling\venv1\lib\site-packages\tmtoolkit\topicmod\parallel.py", line 231, in __init__
  n_max_processes)
File "C:\Topic Modelling\venv1\lib\site-packages\tmtoolkit\topicmod\parallel.py", line 48, in __init__
  self.data = {None: self._prepare_sparse_data(data)}
File "C:\Topic Modelling\venv1\lib\site-packages\tmtoolkit\topicmod\parallel.py", line 150, in _prepare_sparse_data
  raise ValueError('data must be a NumPy array/matrix or SciPy sparse matrix of two dimensions')
ValueError: data must be a NumPy array/matrix or SciPy sparse matrix of two dimensions

Does this mean I have to generate the COO matrix as you describe above, or am I passing the arguments incorrectly?
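Going by the error message, the data argument has to be a 2D NumPy array/matrix or SciPy sparse matrix. Judging by the call signature shown in the reply below (data first, then varying and constant parameters), the call above passes varying_params as the first positional argument, so it ends up as data. A small pre-check sketch; looks_like_dtm is a hypothetical helper that only mirrors the condition stated in the error message and is not tmtoolkit's own validation.

```python
import numpy as np
from scipy.sparse import coo_matrix, issparse


def looks_like_dtm(data):
    """Rough pre-check: is `data` a 2D NumPy array/matrix or a 2D SciPy sparse matrix?"""
    if issparse(data):
        return len(data.shape) == 2
    if isinstance(data, np.ndarray):  # np.matrix is a subclass of np.ndarray
        return data.ndim == 2
    return False  # e.g. a list of parameter dicts, a plain list or a tuple


print(looks_like_dtm(coo_matrix((2, 3))))             # empty 2x3 sparse matrix
print(looks_like_dtm(np.zeros((2, 3))))               # dense 2D array
print(looks_like_dtm([dict(num_topics=2, alpha=0.5)]))  # list of parameter dicts
```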

Thanks again!

Sorry, this was a misunderstanding. I thought you wanted to use metric_coherence_gensim directly, but you're using evaluate_topic_models.

In that case, you need to pass the parameters as explained in the documentation of evaluate_topic_models. For your code, this means:

tmtoolkit.topicmod.tm_gensim.evaluate_topic_models(dtm, varying_params, const_params, metric=tmtoolkit.topicmod.tm_gensim.AVAILABLE_METRICS, return_models=True, texts=texts)

This means you need to pass a document-term matrix dtm. If you don't have one, you will need to create it from your gensim data, for example with corpus2csc from gensim.matutils.

I might add functionality in the future that does the data conversion automatically, but at the moment this is not implemented.

Yes, that's right; I am only trying to replicate the visualization of the coherence and perplexity measures based on my gensim analysis.

Creating the DTM with corpus2csc works fine and is convenient. I had tried it before; for me this did the trick:

dtm = matutils.corpus2csc(corpus, dtype=int, num_docs=len(corpus))
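One thing to watch with corpus2csc: gensim's matutils.corpus2csc puts documents in the columns, so its result has shape (vocab size, number of documents), the transpose of the DTM layout described earlier. A sketch under that assumption; so that it runs without gensim, a small hand-built csc_matrix stands in for real corpus2csc output, and term_doc_to_dtm is a hypothetical helper. With gensim, the equivalent would be matutils.corpus2csc(corpus, dtype=int, num_docs=len(corpus)).T.tocoo().

```python
import numpy as np
from scipy.sparse import csc_matrix


def term_doc_to_dtm(term_doc_matrix, n_docs):
    """Transpose a (vocab size, number of documents) sparse matrix into a
    (number of documents, vocab size) COO matrix."""
    if term_doc_matrix.shape[1] != n_docs:
        raise ValueError(
            "expected documents as columns, got shape %r for %d documents"
            % (term_doc_matrix.shape, n_docs)
        )
    return term_doc_matrix.T.tocoo()


# stand-in for corpus2csc output: 3 terms (rows) x 2 documents (columns)
term_doc = csc_matrix(np.array([[2, 0], [0, 1], [1, 3]]))
dtm = term_doc_to_dtm(term_doc, n_docs=2)
print(dtm.shape)  # documents x vocabulary
print(dtm.toarray())
```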

Unfortunately, I am still running into problems with the non-u-mass measures. When I use your suggestion, I get this error:

Traceback (most recent call last):
File "C:\Users\Sakul\AppData\Local\Programs\Python\Python36\lib\multiprocessing\process.py", line 258, in _bootstrap
  self.run()
File "C:\Topic Modelling\venv1\lib\site-packages\tmtoolkit\topicmod\parallel.py", line 207, in run
  results = self.fit_model(data, params)
File "C:\Topic Modelling\venv1\lib\site-packages\tmtoolkit\topicmod\tm_gensim.py", line 102, in fit_model
  res = metric_coherence_gensim(**metric_kwargs)
File "C:\Topic Modelling\venv1\lib\site-packages\tmtoolkit\topicmod\evaluate.py", line 349, in metric_coherence_gensim
  coh_model = gensim.models.CoherenceModel(**coh_model_kwargs)
File "C:\Topic Modelling\venv1\lib\site-packages\gensim\models\coherencemodel.py", line 179, in __init__
  "The associated dictionary should be provided with the corpus or 'id2word'"
ValueError: The associated dictionary should be provided with the corpus or 'id2word' for topic model should be set as the associated dictionary.

The only workaround I have found so far is the one from my initial post, passing a dictionary via coherence_gensim_kwargs:

models = tmtoolkit.topicmod.tm_gensim.evaluate_topic_models(dtm, varying_params, const_params, metric=tmtoolkit.topicmod.tm_gensim.AVAILABLE_METRICS, return_models=True, coherence_gensim_texts=texts, coherence_gensim_kwargs={'dictionary': tmtoolkit.topicmod.evaluate.FakedGensimDict.from_vocab(dictionary)})

However, this way some measures are calculated successfully, but not the non-u-mass measures, which is probably also indicated by the same RuntimeWarnings I quoted in my initial post.

Finally, related to this, I now get a new error that I did not get a few weeks ago, apparently coming from plot_eval_results:

Traceback (most recent call last):
File "... in <module>
  tmtoolkit.topicmod.visualize.plot_eval_results(results_by_n_topics)
File "C:\Topic Modelling\venv1\lib\site-packages\tmtoolkit\topicmod\visualize.py", line 361, in plot_eval_results
  for m_dir in sorted(set(metric_direction), reverse=True):
TypeError: '<' not supported between instances of 'str' and 'NoneType'

Back then, at least the non-u-mass measures could be plotted, now there seems to be another problem.
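The TypeError itself is standard Python 3 behaviour: sorted() cannot order a mix of str and None, so it would appear if metric_direction contains None for some metric. That is an assumption, since the contents of metric_direction are not shown here. A sketch of a None-tolerant sort; sort_directions and the example values are hypothetical, not tmtoolkit's actual fix.

```python
def sort_directions(metric_direction):
    """Sort the unique directions in reverse order, placing None last.

    The key turns every value into a (bool, str) tuple, so str and None are
    never compared with each other directly.
    """
    return sorted(set(metric_direction), key=lambda d: (d is not None, d or ""), reverse=True)


directions = ["minimize", "maximize", None, "minimize"]  # hypothetical example values

try:
    sorted(set(directions), reverse=True)  # same pattern as in the traceback
except TypeError as exc:
    print("plain sort fails:", exc)

print(sort_directions(directions))
```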

Sorry for being persistent, but it would be very helpful to have a working version, and maybe also useful for other gensim users until the future functionality is available. Thanks for your work and help!

I fixed some issues in the code. Please update tmtoolkit to the newest version and use it as shown in the new "evaluation with Gensim" example.

Unfortunately, it still won't work for me. I updated tmtoolkit to version 0.7.1.

I then changed my script to use the tmtoolkit functions and data types (at first I thought gnsm_corpus was an actual gensim corpus; however, according to the example, it seems to be a list).

By now, I am using almost exactly the same code as in the example:

mydata = pd.read_csv('mydataw1.csv', index_col=False, encoding='utf-8')

mycorp = Corpus(dict(zip(mydata.id, mydata.text)))

preproc = TMPreproc(mycorp, language='english')

preproc.tokenize().clean_tokens()

doc_labels = list(preproc.tokens.keys())

texts = list(preproc.tokens.values())

gnsm_dict = corpora.Dictionary.from_documents(texts)

gnsm_corpus = [gnsm_dict.doc2bow(text) for text in texts]

const_params = dict(update_every=0, passes=2000)

ks = list(range(2, 25, 1))

varying_params = [dict(num_topics=k, alpha=1.0 / k) for k in ks]

eval_results = tm_gensim.evaluate_topic_models((gnsm_dict, gnsm_corpus), varying_params, const_params, coherence_gensim_texts=texts)

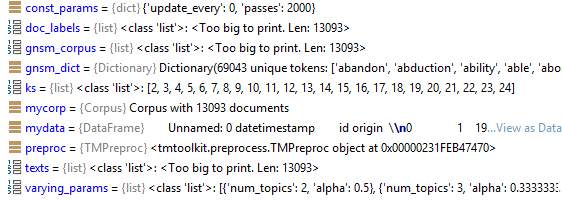

These are the input variables I am using:

However, I am still getting the same error:

Traceback (most recent call last):
File "lda_evaluation.py", line 71, in <module>
  eval_results = tm_gensim.evaluate_topic_models((gnsm_dict, gnsm_corpus), varying_params, const_params, coherence_gensim_texts=texts)  # necessary for coherence C_V metric
File "tm_gensim.py", line 148, in evaluate_topic_models
  n_max_processes=n_max_processes, return_models=return_models)
File "parallel.py", line 231, in __init__
  n_max_processes)
File "parallel.py", line 48, in __init__
  self.data = {None: self._prepare_sparse_data(data)}
File "parallel.py", line 150, in _prepare_sparse_data
  raise ValueError('data must be a NumPy array/matrix or SciPy sparse matrix of two dimensions')
ValueError: data must be a NumPy array/matrix or SciPy sparse matrix of two dimensions

Seeing the line numbers in your traceback, I believe you're not using tmtoolkit v0.7.1 yet (the last ValueError exception comes from line 151 in the new version). Please make sure that you really upgraded tmtoolkit and that you're really using this version in your Python environment. You can check with pip list in the terminal or with import tmtoolkit; print(tmtoolkit.__version__) in a Python session. You can also set a breakpoint near line 150 in the file mentioned above and see what's going on there.
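The check can also be done from inside the script, which helps when several environments are involved. It may also be worth printing where the module is loaded from, since the traceback above lists bare file names such as parallel.py, which might point to local copies being picked up. A sketch using only the standard library (Python 3.8+ for importlib.metadata); it assumes the pip distribution is named tmtoolkit, and the helper names are hypothetical.

```python
import importlib.util
import re
from importlib.metadata import PackageNotFoundError, version


def installed_version(dist_name):
    """Version string of an installed distribution, or None if it is not installed."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


def module_origin(module_name):
    """File that `import module_name` would load, or None if it cannot be found.

    A same-named file or folder earlier on sys.path would be reported here
    instead of the copy in site-packages.
    """
    spec = importlib.util.find_spec(module_name)
    return spec.origin if spec is not None else None


def version_tuple(v):
    """'0.7.1' -> (0, 7, 1); trailing suffixes such as 'rc1' or '.post1' are ignored."""
    match = re.match(r"\d+(?:\.\d+)*", v)
    if match is None:
        raise ValueError("unrecognized version string: %r" % v)
    return tuple(int(part) for part in match.group(0).split("."))


def is_at_least(v, minimum):
    return v is not None and version_tuple(v) >= version_tuple(minimum)


current = installed_version("tmtoolkit")
print("tmtoolkit version:", current, "loaded from:", module_origin("tmtoolkit"))
if not is_at_least(current, "0.7.1"):
    print("tmtoolkit >= 0.7.1 is not installed in this environment")
```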

PS: 2000 passes is quite a lot. Please note that passes in gensim are not the same as iterations in lda.

PPS: You can also pass an "actual" gensim corpus (a list can be an actual gensim corpus, by the way); it only has to behave like a list and contain (word_id, n_word_occurrences) tuples, just like doc2bow generates them.
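To illustrate that format, a plain-Python sketch that builds a list-based corpus of (word_id, n_word_occurrences) tuples from tokenized texts. build_bow_corpus is a hypothetical helper, not gensim's Dictionary.doc2bow; it numbers tokens in order of first appearance, which may differ from the ids gensim assigns, so it only shows the data layout.

```python
from collections import Counter


def build_bow_corpus(texts):
    """Map tokens to integer ids and turn each tokenized text into sorted (word_id, count) tuples."""
    token2id = {}
    for text in texts:
        for token in text:
            token2id.setdefault(token, len(token2id))  # first appearance gets the next id
    corpus = []
    for text in texts:
        counts = Counter(token2id[token] for token in text)
        corpus.append(sorted(counts.items()))  # one list of tuples per document
    return token2id, corpus


texts = [["topic", "model", "topic"], ["model", "coherence"]]
token2id, corpus = build_bow_corpus(texts)
print(token2id)
print(corpus)
```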

That was exactly the problem, thanks a lot! It works fine now. I might have to adjust the new plot_eval_results plot for all measures, but that is not a problem. Perfect, the tool will be very helpful for presenting our results.

Thanks also for the hint with the passes. I will check the options of the gensim lda again.

Glad to hear that!