
Image Classification: Please see ViTAE-Transformer for image classification;

Object Detection: Please see ViTAE-Transformer for object detection;

Semantic Segmentation: Please see ViTAE-Transformer for semantic segmentation;

Animal Pose Estimation: Please see ViTAE-Transformer for animal pose estimation;

Matting: Please see ViTAE-Transformer for matting;

Remote Sensing: Please see ViTAE-Transformer for remote sensing.

09/04/2022

- The pretrained models for ViTAE on matting and remote sensing are released! Please try and have fun!

24/03/2022

- The pretrained models for both ViTAE and ViTAEv2 are released. The code for downstream tasks is also provided for reference.

07/12/2021

- The code is released!

19/10/2021

- The paper is accepted by NeurIPS 2021! The code will be released soon!

06/08/2021

- The paper is posted on arXiv! The code will be made publicly available once it is cleaned up.

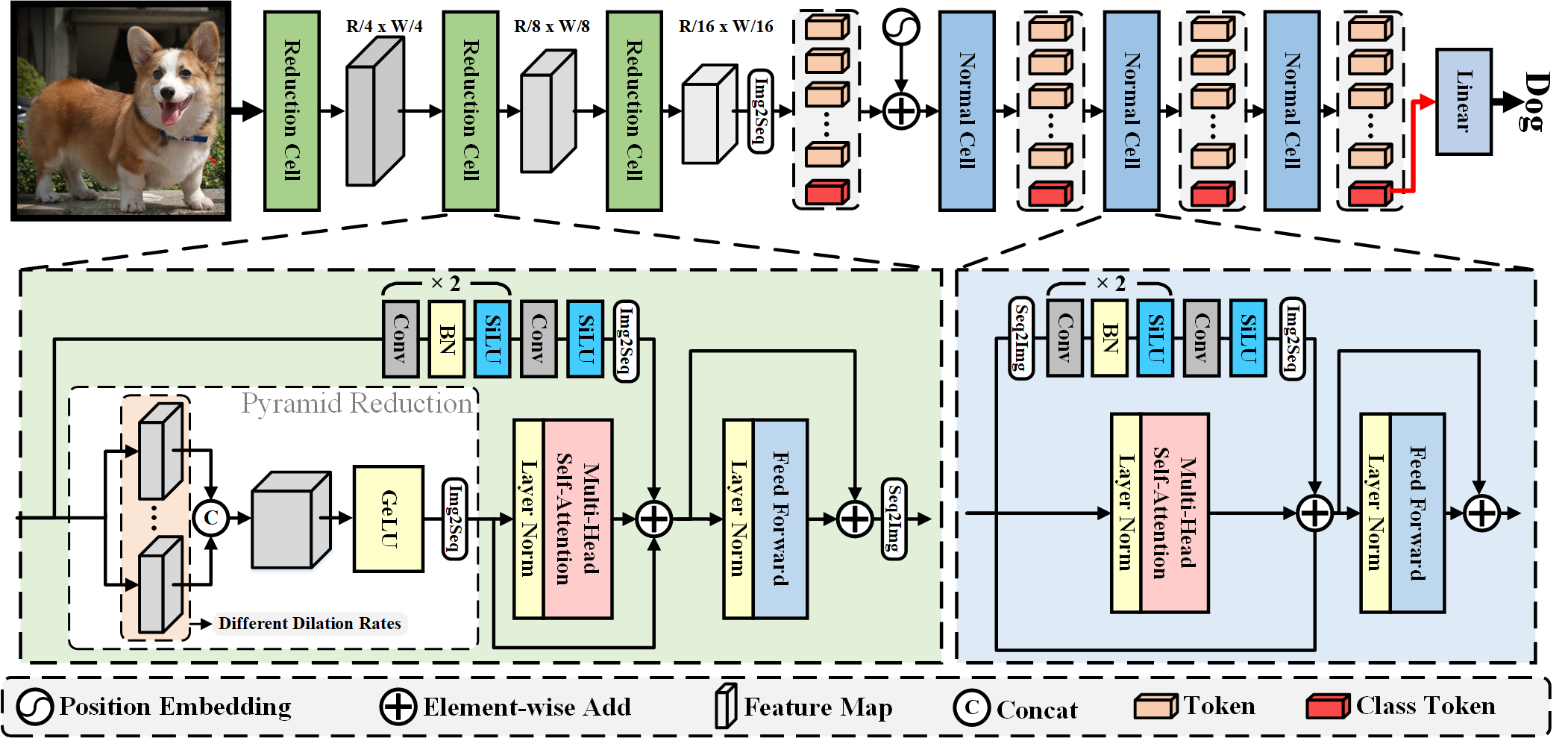

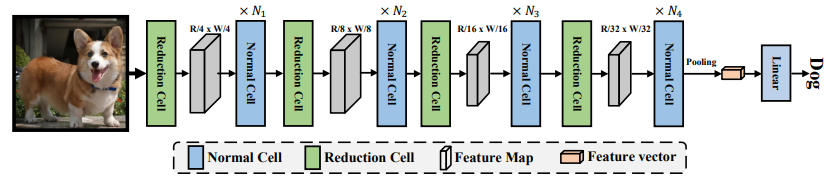

This repository contains the code, models, test results for the paper ViTAE: Vision Transformer Advanced by Exploring Intrinsic Inductive Bias. It contains several reduction cells and normal cells to introduce scale-invariance and locality into vision transformers. In ViTAEv2, we explore the usage of window attentions without shift operations to obtain a better balance between memory footprint, speed, and performance. We also stack the proposed RC and NC in a multi-stage manner to faciliate the learning on other vision tasks including detection, segmentation, and pose.

Fig.1 - The details of RC and NC design in ViTAE. Fig.2 - The multi-stage design of ViTAEv2.This project is for research purpose only. For any other questions please contact yufei.xu at outlook.com qmzhangzz at hotmail.com .
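The introduction mentions that ViTAEv2 uses window attention without shift operations. The NumPy sketch below illustrates only that general idea and is not the ViTAEv2 implementation. It assumes a single attention head, random projection matrices, no positional bias, and a feature map whose height and width are divisible by the window size; the helper names `window_partition`, `window_reverse`, and `window_attention` are hypothetical. Because the windows are fixed and never shifted, each token attends only to the tokens inside its own window.

```python
import numpy as np

# Hypothetical helpers illustrating non-shifted window attention.
# Assumptions (not taken from ViTAEv2): single head, no positional bias,
# H and W divisible by the window size, no padding.

def window_partition(x, ws):
    """Split an (H, W, C) map into (num_windows, ws * ws, C) non-overlapping windows."""
    H, W, C = x.shape
    x = x.reshape(H // ws, ws, W // ws, ws, C)
    x = x.transpose(0, 2, 1, 3, 4)  # (nH, nW, ws, ws, C)
    return x.reshape(-1, ws * ws, C)


def window_reverse(windows, ws, H, W):
    """Inverse of window_partition: stitch windows back into an (H, W, C) map."""
    C = windows.shape[-1]
    x = windows.reshape(H // ws, W // ws, ws, ws, C)
    x = x.transpose(0, 2, 1, 3, 4)  # (nH, ws, nW, ws, C)
    return x.reshape(H, W, C)


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def window_attention(x, ws, w_q, w_k, w_v):
    """Scaled dot-product self-attention computed independently inside each window."""
    H, W, _ = x.shape
    windows = window_partition(x, ws)  # (B, N, C) with B windows of N = ws * ws tokens
    q, k, v = windows @ w_q, windows @ w_k, windows @ w_v
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])  # (B, N, N)
    out = softmax(scores) @ v  # (B, N, C_out)
    return window_reverse(out, ws, H, W)


# Usage: an 8x8 map with 16 channels, split into four 4x4 windows.
rng = np.random.default_rng(0)
H, W, C, ws = 8, 8, 16, 4
x = rng.standard_normal((H, W, C))
w_q, w_k, w_v = (rng.standard_normal((C, C)) / np.sqrt(C) for _ in range(3))
y = window_attention(x, ws, w_q, w_k, w_v)
print(y.shape)  # (8, 8, 16)

# Perturb one pixel in the top-left window; outputs in the other windows
# stay the same because the fixed, non-overlapping windows never exchange tokens.
x2 = x.copy()
x2[0, 0] += 1.0
y2 = window_attention(x2, ws, w_q, w_k, w_v)
print(np.allclose(y[ws:, :], y2[ws:, :]), np.allclose(y[:, ws:], y2[:, ws:]))
```

Keeping the windows fixed avoids any per-layer shifting step. In this isolated layer, the trade-off is that no information crosses window boundaries, which the perturbation check at the end makes visible; how the full ViTAEv2 architecture handles cross-window interaction is not covered by this sketch.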

```bibtex
@article{xu2021vitae,
  title={Vitae: Vision transformer advanced by exploring intrinsic inductive bias},
  author={Xu, Yufei and Zhang, Qiming and Zhang, Jing and Tao, Dacheng},
  journal={Advances in Neural Information Processing Systems},
  volume={34},
  year={2021}
}

@article{zhang2022vitaev2,
  title={ViTAEv2: Vision Transformer Advanced by Exploring Inductive Bias for Image Recognition and Beyond},
  author={Zhang, Qiming and Xu, Yufei and Zhang, Jing and Tao, Dacheng},
  journal={arXiv preprint arXiv:2202.10108},
  year={2022}
}
```
