Features • News • QuickStart • Cases • Customization • FAQ • Community • Contributors


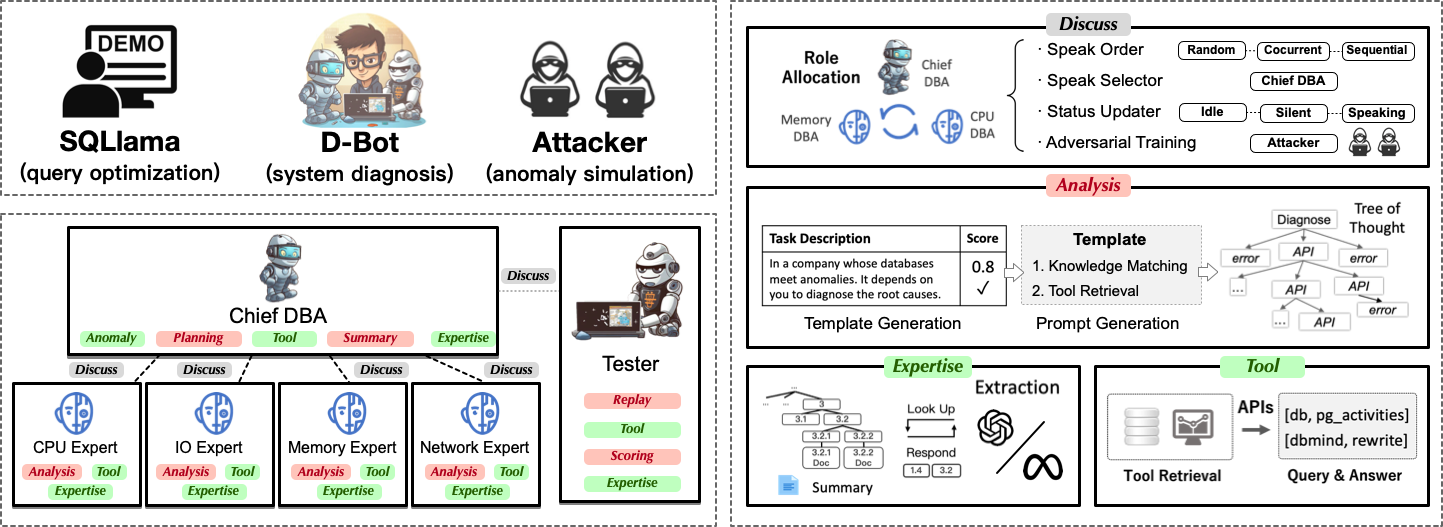

🧗 We aim to provide a collection of useful, user-friendly, and advanced database tools built around LLMs, including query optimization (SQLIama), system diagnosis (D-Bot), and anomaly simulation (attacker).

- Well-Founded Diagnosis: D-Bot can provide well-founded diagnoses by utilizing relevant database knowledge (with document2experience).

- Practical Tool Utilization: D-Bot can utilize both monitoring and optimization tools to improve its maintenance capability (with tool learning and tree of thought).

- In-depth Reasoning: Compared with vanilla LLMs, D-Bot achieves competitive reasoning capability for analyzing root causes (with multi-LLM communication).

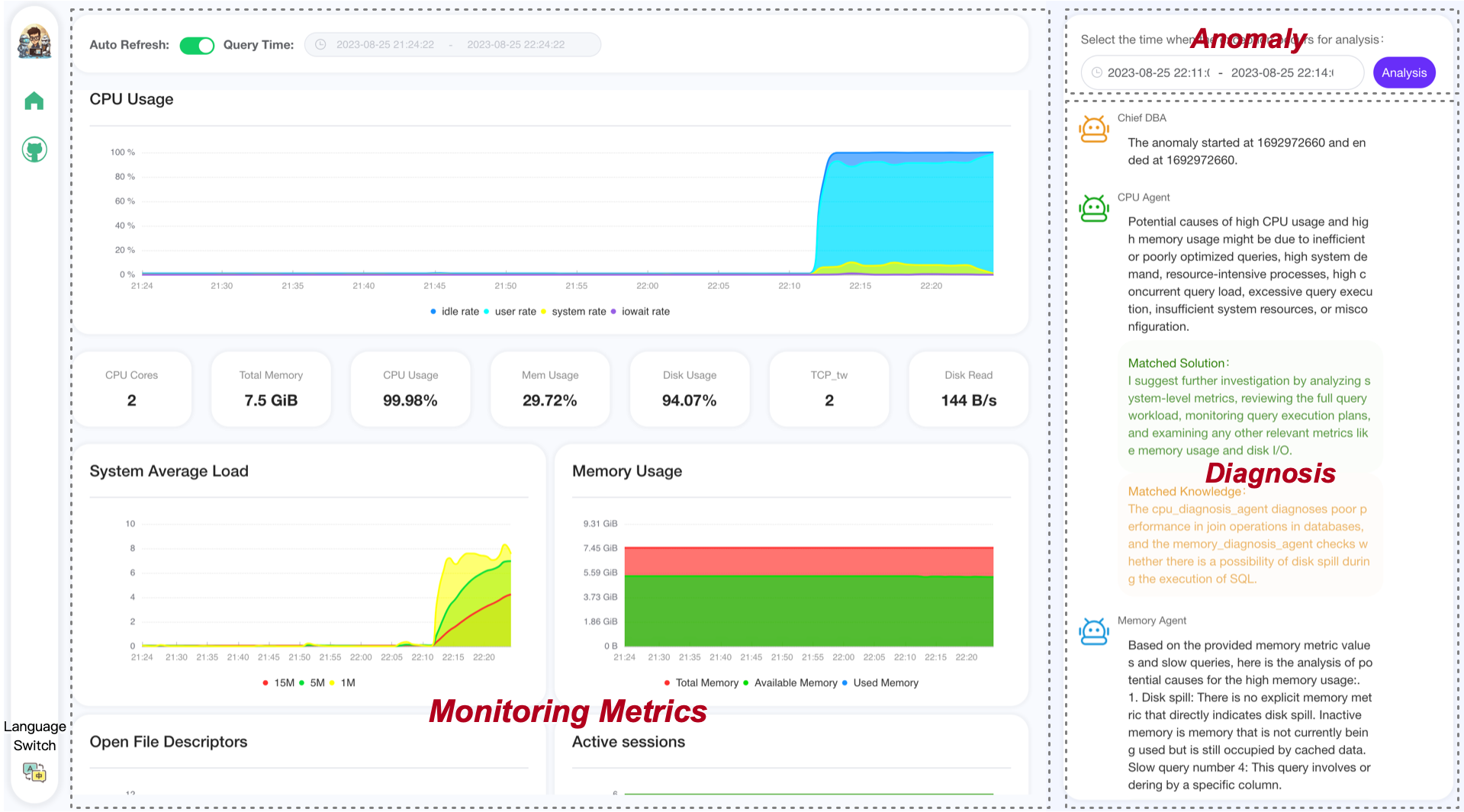

A demo of using D-Bot

db_diag.mp4

- [2023/9/10] Add diagnosis logs 🔗 link and replay button in the frontend ⏱ link

- [2023/9/09] Add typical anomalies 🔗 link

- [2023/9/05] A unique framework is available! Start the diag+tool service with a single command and experience a 5x speedup!! 🚀 link

- [2023/8/22] Support multi-level tools with 60+ APIs 🔗 link

- [2023/8/10] Our vision papers are released (continuously updated)

This project is evolving with new features 👫👫

Don't forget to star ⭐ and watch 👀 to stay up to date :)

.

├── multiagents

│ ├── agent_conf # Settings of each agent

│ ├── agents # Implementation of different agent types

│ ├── environments # E.g., chat orders / chat update / terminal conditions

│ ├── knowledge # Diagnosis experience from documents

│ ├── llms # Supported models

│ ├── memory # The content and summary of chat history

│ ├── response_formalize_scripts # Remove useless content from model responses

│ ├── tools # External monitoring/optimization tools for models

│ └── utils # Other functions (e.g., database/json/yaml operations)

- PostgreSQL v12 or higher

  Additionally, install extensions such as pg_stat_statements (track frequent queries), pg_hint_plan (optimize physical operators), and hypopg (create hypothetical indexes).

  Note that pg_stat_statements continuously accumulates query statistics, so you need to clear them from time to time: 1) to discard all statistics, execute "SELECT pg_stat_statements_reset();"; 2) to discard the statistics of a specific query, execute "SELECT pg_stat_statements_reset(userid, dbid, queryid);".

- Enable the slow query log in PostgreSQL (refer to link)

  (1) For "systemctl restart postgresql", the service name can be different (e.g., postgresql-12.service);

  (2) Use an absolute log path name like "log_directory = '/var/lib/pgsql/12/data/log'";

  (3) Set "log_line_prefix = '%m [%p] [%d]'" in postgresql.conf (to record the database names of different queries).

- Prometheus and Grafana (tutorial)

  Check prometheus.md for detailed installation guides.

  Grafana is no longer a necessity with our vue-based frontend.
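To illustrate why the `log_line_prefix` setting in the prerequisites matters, here is a minimal sketch of parsing one slow-query log line into its timestamp, process ID, database name, duration, and statement. The sample line and the `parse_slow_query_line` helper are assumptions for illustration; they are not part of this project, and the exact log text depends on your PostgreSQL configuration.

```python
import re
from typing import Optional

# Matches a slow-query line written with log_line_prefix = '%m [%p] [%d]'.
# %m = timestamp with milliseconds, %p = process ID, %d = database name.
# The line layout is an assumption; adjust the pattern to your own logs.
SLOW_LOG_PATTERN = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d+ \S+) "
    r"\[(?P<pid>\d+)\] "
    r"\[(?P<database>[^\]]*)\]\s*"
    r"LOG:\s+duration: (?P<duration_ms>\d+(?:\.\d+)?) ms\s+"
    r"statement: (?P<statement>.*)$"
)


def parse_slow_query_line(line: str) -> Optional[dict]:
    """Return the parsed fields of a slow-query line, or None if it does not match."""
    match = SLOW_LOG_PATTERN.match(line.strip())
    if match is None:
        return None
    record = match.groupdict()
    record["pid"] = int(record["pid"])
    record["duration_ms"] = float(record["duration_ms"])
    return record


# Hypothetical sample line, written by hand for this example.
sample = (
    "2023-09-10 12:34:56.789 UTC [12345] [postgres]"
    "LOG:  duration: 1523.456 ms  statement: SELECT count(*) FROM orders;"
)
print(parse_slow_query_line(sample))
```

Without `[%d]` in the prefix, the database name could not be recovered from the line, which is why step (3) asks for it.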

Step 1: Install python packages.

pip install -r requirements.txt

Step 2: Configure environment variables.

- Export your OpenAI API key

# macos

export OPENAI_API_KEY="your_api_key_here"

# windows

set OPENAI_API_KEY=your_api_key_here

Step 3: Add the database/anomaly/prometheus settings to tool_config_example.yaml and rename it to tool_config.yaml:

```yaml
POSTGRESQL:
  host: 182.92.xxx.x
  port: 5432
  user: xxxx
  password: xxxxx
  dbname: postgres

DATABASESERVER:
  server_address: 182.92.xxx.x
  username: root
  password: xxxxx
  remote_directory: /var/lib/pgsql/12/data/log

PROMETHEUS:
  api_url: http://8.131.xxx.xx:9090/
  postgresql_exporter_instance: 172.27.xx.xx:9187
  node_exporter_instance: 172.27.xx.xx:9100

BENCHSERVER:
  server_address: 8.131.xxx.xx
  username: root
  password: xxxxx
  remote_directory: /root/benchmark
```

You can ignore the settings of BENCHSERVER, which is not used in this version.
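As a rough illustration of how the PROMETHEUS settings above can be used, the sketch below builds a range-query URL for the standard Prometheus HTTP API (`/api/v1/query_range`). The `build_range_query_url` helper, the placeholder addresses, and the choice of the `node_load1` metric from node_exporter are assumptions for this example; the project's own tools may query Prometheus differently.

```python
from urllib.parse import urlencode, urljoin


def build_range_query_url(api_url, promql, start, end, step="15s"):
    """Build a Prometheus /api/v1/query_range URL (does not send the request)."""
    params = urlencode({"query": promql, "start": start, "end": end, "step": step})
    return urljoin(api_url, "api/v1/query_range") + "?" + params


# Placeholder values; substitute api_url and node_exporter_instance
# from the PROMETHEUS section of your tool_config.yaml.
prometheus = {
    "api_url": "http://localhost:9090/",
    "node_exporter_instance": "localhost:9100",
}

# 1-minute load average reported by node_exporter for the configured instance.
promql = 'node_load1{instance="%s"}' % prometheus["node_exporter_instance"]

# start and end are Unix timestamps (here: an arbitrary 10-minute window).
url = build_range_query_url(prometheus["api_url"], promql, 1694300000, 1694300600)
print(url)
```

Fetching the URL with any HTTP client should return a JSON document if the server and exporter addresses are reachable.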

- If accessing the OpenAI service via VPN, execute this command:

# macos

export https_proxy=http://127.0.0.1:7890 http_proxy=http://127.0.0.1:7890 all_proxy=socks5://127.0.0.1:7890

- Test your OpenAI key

cd others

python openai_test.py
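Before running the key test, it can help to confirm that the variables from Step 2 are actually visible to Python. The following is a small sketch under that assumption; `check_environment` is a hypothetical helper, not a script shipped with this project, and it deliberately never prints the key itself.

```python
import os

REQUIRED_VARS = ("OPENAI_API_KEY",)
OPTIONAL_PROXY_VARS = ("https_proxy", "http_proxy", "all_proxy")


def check_environment(environ=None):
    """Return (missing required variable names, proxy settings that are set)."""
    environ = os.environ if environ is None else environ
    missing = [name for name in REQUIRED_VARS if not environ.get(name)]
    proxies = {
        name: environ[name] for name in OPTIONAL_PROXY_VARS if environ.get(name)
    }
    return missing, proxies


missing, proxies = check_environment()
if missing:
    print("Missing environment variables:", ", ".join(missing))
else:
    print("OPENAI_API_KEY is set.")
print("Proxy variables in use:", sorted(proxies) or "none")
```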

We also provide a local website demo for this environment. You can launch it with

# cd website

cd front_demo

rm -rf node_modules/

rm -r package-lock.json

# install dependencies for the first run (nodejs, ^16.13.1 is recommended)

npm install --legacy-peer-deps

# back to root directory

cd ..

# launch the local server and open the website

sh run_demo.sh

Modify the "python app.py" command within run_demo.sh if multiple versions of Python are installed.

After successfully launching the local server, visit http://127.0.0.1:9228/ to trigger the diagnosis procedure.

python main.py

We support AlertManager for Prometheus. You can find more information about how to configure alertmanager here: alertmanager.md.

- We provide AlertManager-related configuration files, including alertmanager.yml, node_rules.yml, and pgsql_rules.yml. They are in the config folder in the root directory, and you can deploy them to your Prometheus server to retrieve the associated exceptions.

- We also provide a webhook server that receives alerts. It is in the webhook folder in the root directory, and you can deploy it to your server to receive and store Prometheus alerts. The diagnostic model periodically fetches alert information from this server over SSH, so you need to configure your server information in tool_config.yaml in the config folder.

- node_rules.yml and pgsql_rules.yml are adapted from the open-source project https://github.com/Vonng/pigsty, whose monitoring works very well. We thank its authors for their effort.
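To make the webhook step more concrete, here is a minimal sketch of turning an Alertmanager webhook payload into compact records that a diagnosis step could read. It relies only on the standard Alertmanager webhook JSON layout (an `alerts` list whose entries carry `status`, `labels`, and `startsAt`); the `extract_alerts` helper, the alert name, and the sample values are assumptions, and the webhook server in this repository may store alerts differently.

```python
import json


def extract_alerts(payload: bytes) -> list:
    """Reduce an Alertmanager webhook payload to a list of compact alert records."""
    body = json.loads(payload)
    records = []
    for alert in body.get("alerts", []):
        labels = alert.get("labels", {})
        records.append(
            {
                "name": labels.get("alertname"),
                "instance": labels.get("instance"),
                "status": alert.get("status"),
                "starts_at": alert.get("startsAt"),
            }
        )
    return records


# Hypothetical payload, shaped like an Alertmanager webhook notification.
sample_payload = json.dumps(
    {
        "status": "firing",
        "alerts": [
            {
                "status": "firing",
                "labels": {"alertname": "NodeLoadHigh", "instance": "localhost:9100"},
                "annotations": {"summary": "High load"},
                "startsAt": "2023-09-10T12:34:56Z",
            }
        ],
    }
).encode("utf-8")

for record in extract_alerts(sample_payload):
    print(record)
```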

Within the anomaly_trigger directory, we aim to offer scripts that could incur typical anomalies, e.g.,

| Root Cause | Description | Case |
|---|---|---|
| | Long execution time for large data insertions | |
| | Long execution time for large data fetching | |
| | Missing indexes causing performance issues | 🔗 link |
| | Unnecessary and redundant indexes in tables | |
| | Unused space caused by data modifications | |
| | Poor performance of Join operators | |
| | Non-promotable subqueries in SQL | |
| | Outdated statistical info affecting execution plan | |
| | Lock contention issues | |
| | Severe external CPU resource contention | |
| | IO resource contention affecting SQL performance | |
| | High-concurrency inserts affecting SQL execution | 🔗 link |
| | High-concurrency commits affecting SQL execution | 🔗 link |
| | Workload concentration affecting SQL execution | 🔗 link |
| | Too small allocated memory space | |
| | Reach the max I/O capacity or throughput | |
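As a self-contained illustration of one row in the table ("Missing indexes causing performance issues"), the sketch below compares the query plan of the same lookup before and after an index exists. It uses Python's built-in SQLite as a stand-in so that it runs without a server; it is not one of the anomaly_trigger scripts, and the table and index names are hypothetical. On PostgreSQL the same idea applies with `EXPLAIN`.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the plan text SQLite reports for a query (the 'detail' column)."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 1000, i * 0.5) for i in range(10000)],
)

sql = "SELECT * FROM orders WHERE customer_id = ?"

# Without an index, the filter on customer_id forces a full table scan.
plan_without_index = query_plan(conn, sql, (42,))

# After creating the index, the planner can search it instead of scanning.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_with_index = query_plan(conn, sql, (42,))

print("without index:", plan_without_index)
print("with index:   ", plan_with_index)
```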

- Extract knowledge from both code (./knowledge_json/knowledge_from_code) and documents (./knowledge_json/knowledge_from_document).

  - Add code blocks to the diagnosis_code.txt file -> rerun the extract_knowledge.py script -> check the updated results and sync them to root_causes_dbmind.jsonl.

- Tool APIs (for optimization)

| Module | Functions |
|---|---|
| index_selection (equipped) | heuristic algorithm |
| query_rewrite (equipped) | 45 rules |
| physical_hint (equipped) | 15 parameters |

For functions within [query_rewrite, physical_hint], you can use the api_test.py script to verify their effectiveness.

If a function actually works, append it to the api.py of the corresponding module.

- Tool Usage Algorithm (tree of thought)

cd tree_of_thought

python test_database.py

History messages may take up many tokens, so choose the turn number carefully.
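One simple way to bound the token cost of history messages is to keep only the most recent turns. The sketch below assumes messages are dictionaries with `role` and `content` keys (the common chat-completion format); `trim_history` is a hypothetical helper and not the mechanism implemented in this repository.

```python
def trim_history(messages, max_turns):
    """Keep all system messages plus the last `max_turns` non-system messages.

    System messages are moved to the front so the instructions are never dropped.
    """
    if max_turns < 0:
        raise ValueError("max_turns must be non-negative")
    system = [m for m in messages if m["role"] == "system"]
    dialogue = [m for m in messages if m["role"] != "system"]
    # dialogue[-0:] would return everything, so handle zero explicitly.
    kept = dialogue[-max_turns:] if max_turns > 0 else []
    return system + kept


history = [
    {"role": "system", "content": "You are a database diagnosis assistant."},
    {"role": "user", "content": "CPU usage is high."},
    {"role": "assistant", "content": "Checking slow queries."},
    {"role": "user", "content": "Any missing indexes?"},
    {"role": "assistant", "content": "Checking index usage."},
]

for message in trim_history(history, max_turns=2):
    print(message["role"], "->", message["content"])
```

A smaller `max_turns` saves tokens but drops earlier findings, so summarizing old turns is a possible alternative when context matters.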

🤨 The '.sh' script command cannot be executed on windows system.

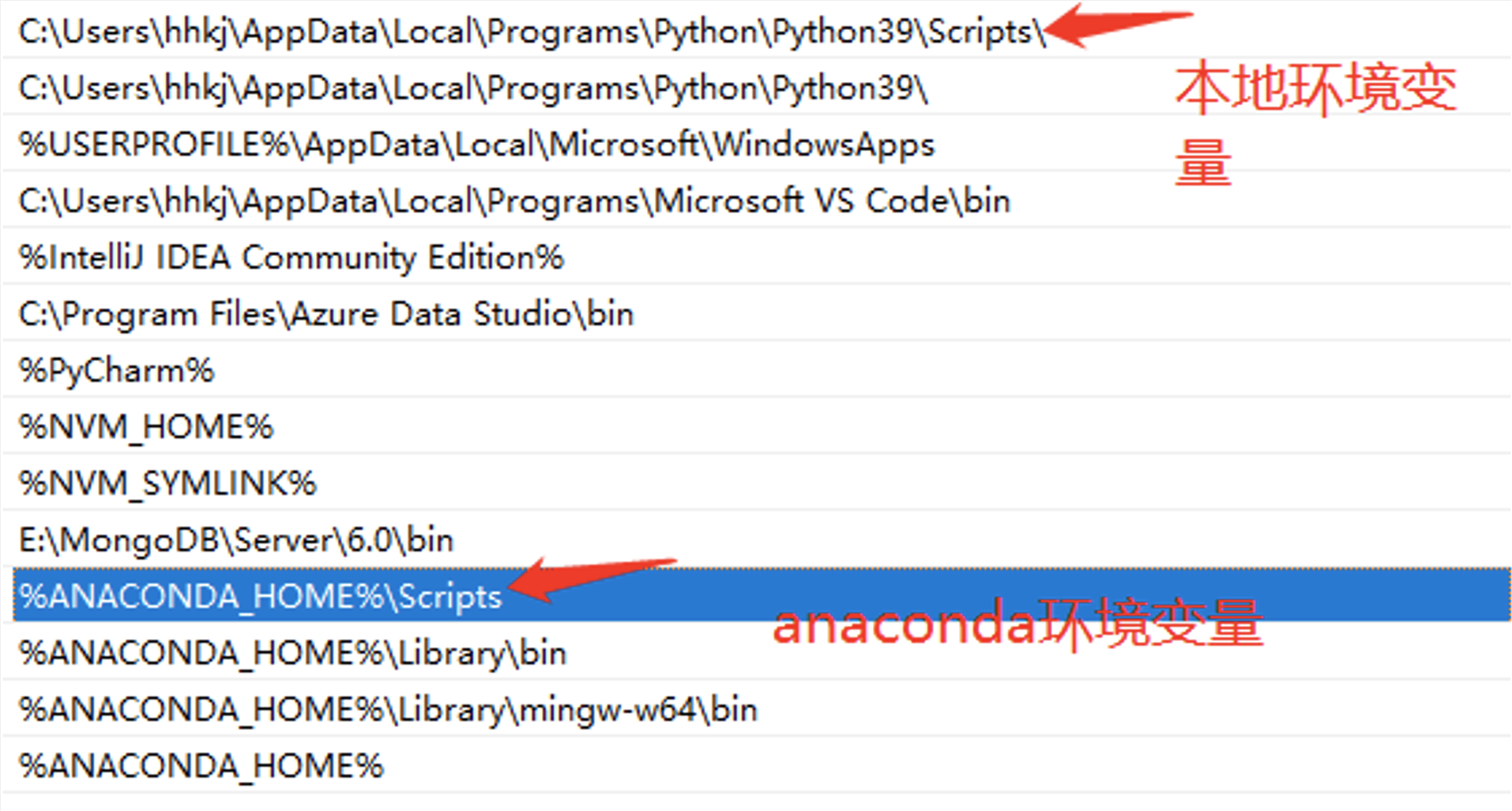

Switch the shell to *git bash* or use *git bash* to execute the '.sh' script.🤨 "No module named 'xxx'" on windows system.

This error is caused by issues with the Python runtime environment path. You need to perform the following steps:Step 1: Check Environment Variables.

You must configure the "Scripts" in the environment variables.

Step 2: Check IDE Settings.

For VS Code, download the Python extension for code. For PyCharm, specify the Python version for the current project.
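When the "No module named" error persists, it is often because the interpreter that runs the script is not the one the packages were installed into. The sketch below reports which interpreter is running and whether it can locate a given top-level module; `describe_module` is a hypothetical helper and the module names are only examples.

```python
import importlib.util
import sys


def describe_module(name):
    """Report whether the current interpreter can find a top-level module."""
    spec = importlib.util.find_spec(name)
    return {
        "module": name,
        "found": spec is not None,
        # File the module would be loaded from, if any.
        "origin": getattr(spec, "origin", None),
        "interpreter": sys.executable,
    }


# Replace these example names with the module from your error message.
for module_name in ("json", "yaml"):
    print(describe_module(module_name))
```

If `found` is false, install the package with the interpreter shown in `interpreter` (for example, by running pip as a module of that interpreter).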

- Project cleaning

- Support more anomalies

- Strictly constrain the LLM outputs (excessive irrelevant information) based on the matched knowledge

- Query log option (it may take up disk space, so we need to consider it carefully)

- Add more communication mechanisms

- Support more knowledge sources

- Support localized private models (e.g., llama/vicuna/luca)

- Release training datasets

- Support other databases (e.g., mysql/redis)

https://github.com/OpenBMB/AgentVerse

https://github.com/OpenBMB/BMTools

Feel free to cite us if you like this project.

@misc{zhou2023llm4diag,
  title={LLM As DBA},
  author={Xuanhe Zhou and Guoliang Li and Zhiyuan Liu},
  year={2023},
  eprint={2308.05481},
  archivePrefix={arXiv},
  primaryClass={cs.DB}
}

@misc{zhou2023dbgpt,
  title={DB-GPT: Large Language Model Meets Database},
  author={Xuanhe Zhou and Zhaoyan Sun and Guoliang Li},
  year={2023},
  archivePrefix={Data Science and Engineering},
}

Other Collaborators: Wei Zhou, Kunyi Li.

We thank all the contributors to this project. Do not hesitate if you would like to get involved or contribute!