Grasping the Arrow of Time from the Singularity: Decoding Micromotion in Low-dimensional Latent Spaces from StyleGAN

Project Page | Paper


Qiucheng Wu1*, Yifan Jiang2*, Junru Wu3*, Kai Wang5,6, Gong Zhang5,6, Humphrey Shi4,5,6, Zhangyang Wang2, Shiyu Chang1

1University of California, Santa Barbara, 2The University of Texas at Austin, 3Texas A&M University, 4UIUC, 5University of Oregon, 6Picsart AI Research (PAIR)

*denotes equal contribution.

This is the official implementation of the paper "Grasping the Arrow of Time from the Singularity: Decoding Micromotion in Low-dimensional Latent Spaces from StyleGAN".

Overview

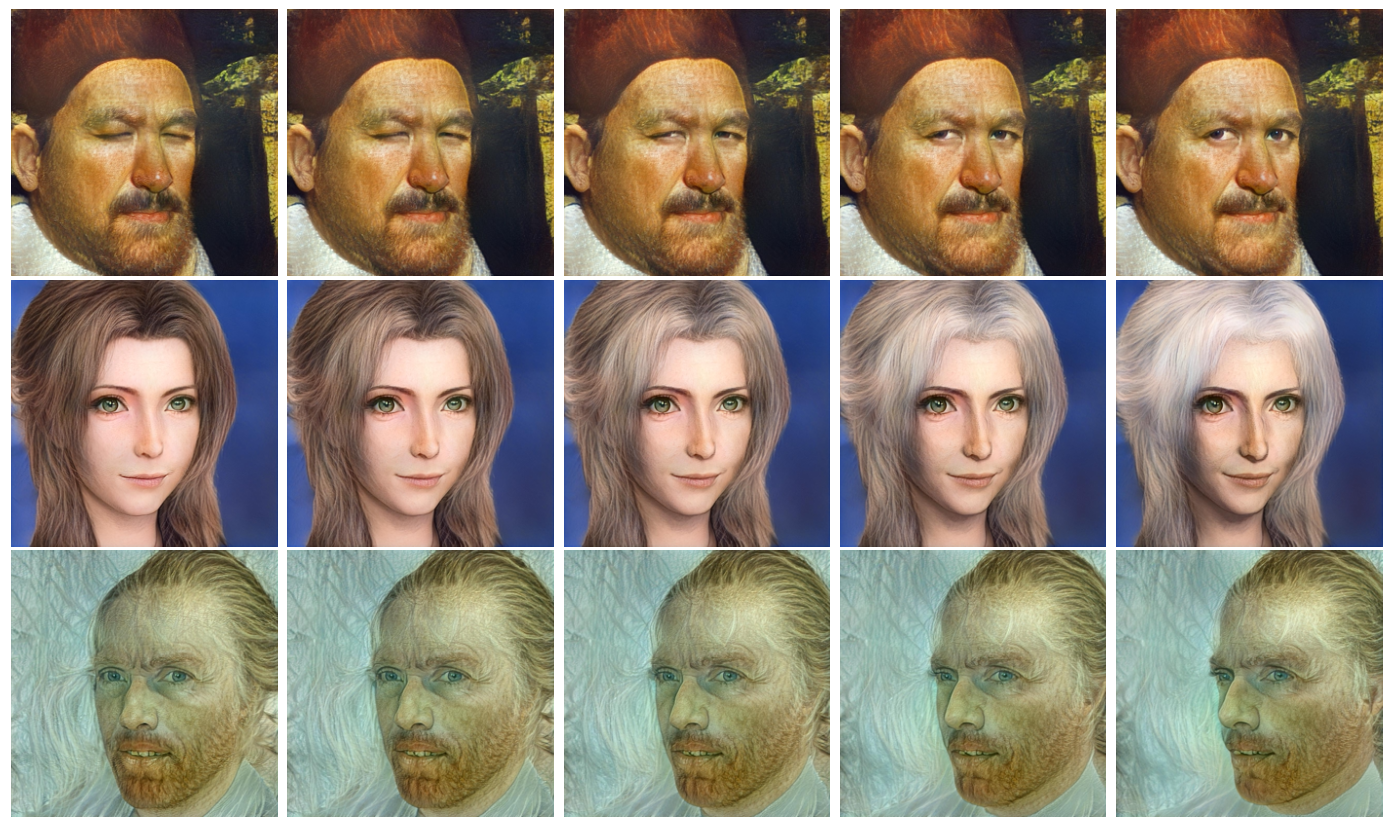

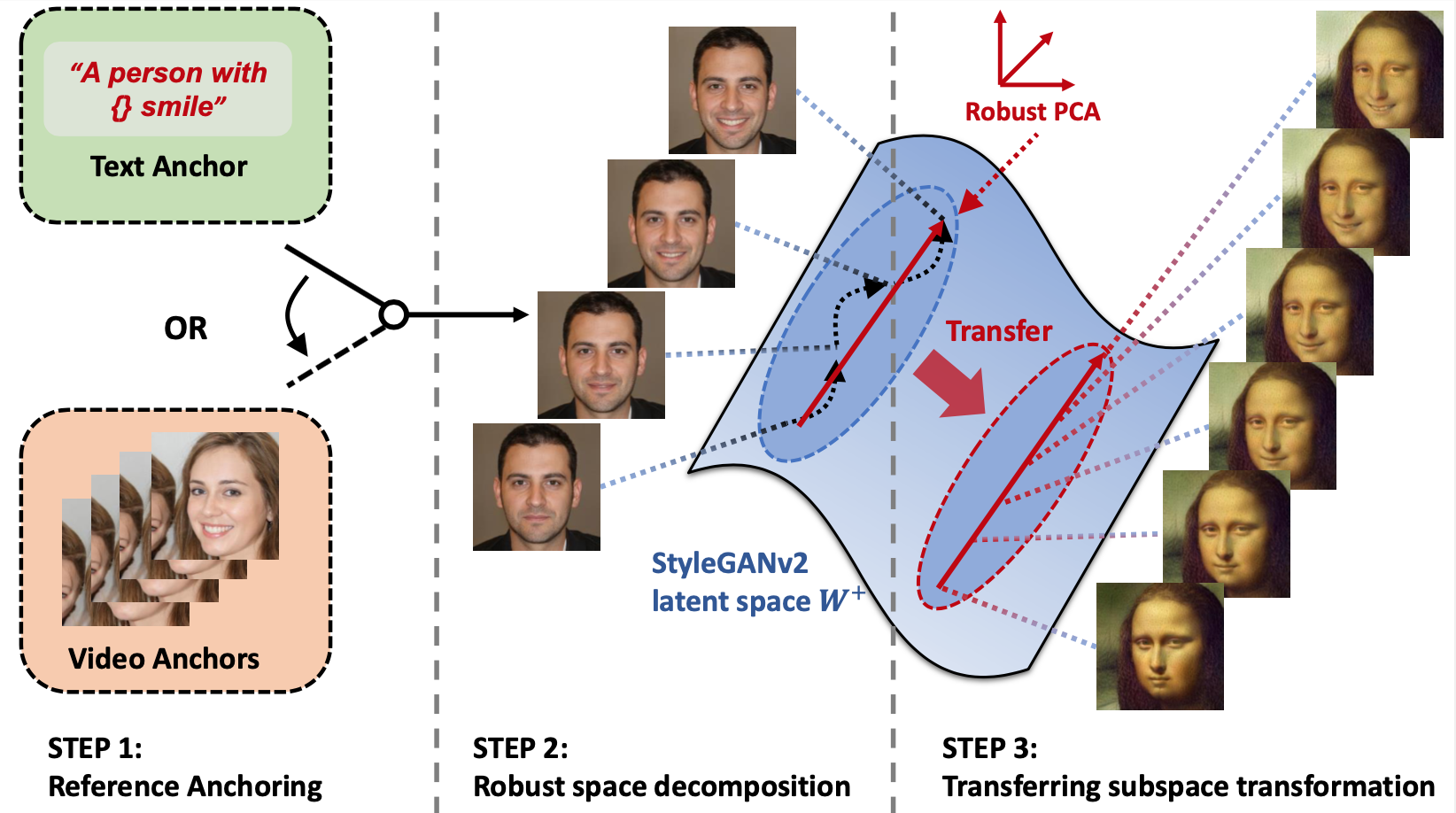

In this work, we hypothesize and demonstrate that a series of meaningful, natural, and versatile small, local movements (referred to as “micromotion”, such as expression, head movement, and aging effects) can be represented in low-rank spaces extracted from the latent space of a conventionally pre-trained StyleGAN-v2 model for face generation, with the guidance of proper “anchors” in the form of either short text or video clips. Starting from one target face image and using the editing direction decoded from the low-rank space, its micromotion features can be represented as simply as an affine transformation over its latent feature. Perhaps more surprisingly, such a micromotion subspace, even when learned from just a single target face, can be painlessly transferred to other unseen face images, even those from vastly different domains (such as oil painting, cartoon, and sculpture faces).
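Below is a minimal sketch of what "an affine transformation over its latent feature" means in practice. It assumes a single flattened latent vector per face and a decoded edit direction `d`. The 512-dimensional size, the random stand-in vectors and the helper name `apply_micromotion` are illustrative assumptions and are not part of this repository. Each frame of a micromotion is `w + t * d` for a scalar strength `t`. Transferring the micromotion to an unseen face reuses the same `d` with that face's latent.

```python
import numpy as np

def apply_micromotion(w, direction, strengths):
    """Hypothetical helper: return one edited latent w + t * d per strength t."""
    w = np.asarray(w, dtype=np.float64)
    d = np.asarray(direction, dtype=np.float64)
    d = d / np.linalg.norm(d)  # unit direction, so t is a distance in latent space
    t = np.asarray(strengths, dtype=np.float64)[:, None]  # shape (T, 1) for broadcasting
    return w[None, :] + t * d[None, :]  # shape (T, dim): one latent per video frame

rng = np.random.default_rng(0)
dim = 512                                 # illustrative latent size (assumption)
direction = rng.standard_normal(dim)      # stand-in for a decoded edit direction
source_face = rng.standard_normal(dim)    # latent of the face the direction was learned from
unseen_face = rng.standard_normal(dim)    # latent of another, possibly out-of-domain, face

strengths = np.linspace(0.0, 3.0, num=8)  # edit strengths for 8 frames
frames_src = apply_micromotion(source_face, direction, strengths)
frames_new = apply_micromotion(unseen_face, direction, strengths)  # same d, new identity
```

Every identity receives exactly the same latent offsets. In this view, "transfer" is just adding the learned direction to a different starting latent.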

The workflow

Our complete workflow can be distilled down to three simple steps: (a) collecting anchor latent codes from a single identity; (b) applying a robust linear decomposition to obtain a noise-free low-dimensional space; (c) applying the extracted edit direction from the low-dimensional space to arbitrary input identities.
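Step (b) can be approximated with the sketch below. It does not reproduce the paper's robust decomposition. As a plainly labeled substitute, it takes the top principal direction from an ordinary SVD of mean-centred anchor latents, which is not robust to outliers. The helper name `extract_direction`, the synthetic anchors, and the assumption that anchors are ordered from weakest to strongest degree are all illustrative.

```python
import numpy as np

def extract_direction(anchors):
    """Hypothetical helper: top principal direction of anchor latents (rows ordered by degree)."""
    anchors = np.asarray(anchors, dtype=np.float64)
    centred = anchors - anchors.mean(axis=0, keepdims=True)
    # Rows of vt are orthonormal principal directions, sorted by decreasing singular value.
    _, s, vt = np.linalg.svd(centred, full_matrices=False)
    d = vt[0]
    # SVD signs are arbitrary; orient d from the weakest anchor towards the strongest one.
    if np.dot(anchors[-1] - anchors[0], d) < 0:
        d = -d
    return d, s

# Synthetic anchors: one identity moving along a hidden direction, plus small noise.
rng = np.random.default_rng(1)
dim, n_anchors = 512, 6
true_dir = rng.standard_normal(dim)
true_dir /= np.linalg.norm(true_dir)
base = rng.standard_normal(dim)
degrees = np.linspace(0.0, 5.0, n_anchors)
anchors = base + degrees[:, None] * true_dir + 0.01 * rng.standard_normal((n_anchors, dim))

direction, singular_values = extract_direction(anchors)
print(np.dot(direction, true_dir))  # near 1 when the hidden direction is recovered
```

A robust variant, such as one that separates sparse outliers before the SVD, would replace only the decomposition call. Steps (a) and (c) stay the same.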

Prerequisites

Please set up the environment with the provided environment.yml file:

conda env create -f environment.yml

conda activate test-grasping

pip install torch==1.7.1+cu110 torchvision==0.8.2+cu110 torchaudio===0.7.2 -f https://download.pytorch.org/whl/torch_stable.html

Running Inference

We provide a Colab notebook to get started easily.

You can also clone the GitHub repo:

git clone https://github.com/wuqiuche/micromotion-codebase.git

The pre-trained models we used come from two repositories: StyleCLIP and restyle-encoder. Please download the pre-trained inverter, generator, and identity models from those repositories and place them as follows:

- Inverter, move it under restyle_encoder/pretrained_models/
- Generator, move it under StyleCLIP/
- Identity, move it under StyleCLIP/

Reproduce results from pre-trained micromotion latents

In-Distribution Results

| Image | Micromotion | Command |
|---|---|---|
| 01.jpg | "Angry" | python main.py --category angry --input examples/01.jpg |

Out-of-Distribution Results

| Image | Micromotion | Command |
|---|---|---|
| mona_lisa.jpg | "Smile" | python main.py --category smile --input examples/mona_lisa.jpg |
| aerith.png | "Aging" | python main.py --category aging --input examples/aerith.png |
| pope.jpg | "Closing Eyes" | python main.py --category eyesClose --input examples/pope.jpg |
| van_gouh.jpg | "Turning Head" | python main.py --category headsTurn --input examples/van_gouh.jpg |

Videos will be generated under the results folder.

Running Training

python main.py --category CATEGORY --template TEMPLATE --seed SEED --input INPUT

Arguments

--input: path to the input image.

--category: the pre-trained micromotion latents to use; use "custom" to specify your own text template.

--template: the text template for the micromotion.

--seed: the seed for the anchor facial image used to learn the micromotion; the anchor facial image will be saved under the text folder.
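To show how the four documented flags fit together, here is a hypothetical `argparse` sketch. It is not the parser in main.py. The flag names come from this README, while the types, defaults, and the rule that `--category custom` requires a template with a `{degree}` slot are assumptions.

```python
import argparse

def build_parser():
    # Hypothetical reconstruction of the documented flags; not the repository's actual parser.
    parser = argparse.ArgumentParser(description="Apply a micromotion to an input face image.")
    parser.add_argument("--input", required=True, help="path to the input image")
    parser.add_argument("--category", required=True,
                        help='pre-trained micromotion (e.g. "smile"), or "custom" to use --template')
    parser.add_argument("--template", default=None,
                        help="text template containing a {degree} placeholder")
    parser.add_argument("--seed", type=int, default=None,
                        help="seed for the anchor facial image (int type is an assumption)")
    return parser

def parse_args(argv):
    parser = build_parser()
    args = parser.parse_args(argv)
    # Assumed rule: a custom category needs a template that contains a {degree} slot.
    if args.category == "custom" and (args.template is None or "{degree}" not in args.template):
        parser.error('--category custom requires a --template containing "{degree}"')
    return args

args = parse_args(["--category", "custom", "--template", "a person with {degree} smile",
                   "--input", "examples/mona_lisa.jpg", "--seed", "0"])
print(args.category, args.template, args.seed)
```

`parser.error` prints a usage message and exits with status 2. A missing template therefore fails before any model loading would begin.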

Micromotions and their corresponding text templates used in the paper

| Micromotion | Template | Command |
|---|---|---|
| "Smile" | "a person with {degree} smile" | python main.py --category custom --template "a person with {degree} smile" --input examples/mona_lisa.jpg |
| "Aging" | "a {degree} old person" | python main.py --category custom --template "a {degree} old person with gray hair" --input examples/aerith.png |
| "Closing Eyes" | "a person with eyes {degree} closed" | python main.py --category custom --template "a person with eyes {degree} closed" --input examples/pope.jpg |
| "Angry" | "{degree} angry" | python main.py --category custom --template "{degree} angry" --input examples/01.jpg |
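Each template above has a `{degree}` slot. One way such a template can yield a graded series of text anchors is by filling that slot with increasingly strong words. The sketch below uses Python's `str.format`. The degree words and the helper name `expand_template` are illustrative assumptions, since this README does not list the words main.py actually uses.

```python
def expand_template(template, degrees):
    """Hypothetical helper: fill the {degree} slot of a text template with each degree word."""
    if "{degree}" not in template:
        raise ValueError("template must contain a {degree} placeholder")
    # str.format fills the named field; split/join collapses the extra space
    # left behind when the degree word is empty.
    return [" ".join(template.format(degree=d).split()) for d in degrees]

# Illustrative degree words, ordered from weakest to strongest (assumed, not from the repo).
degrees = ["", "slight", "moderate", "big", "huge"]
prompts = expand_template("a person with {degree} smile", degrees)
for p in prompts:
    print(p)
```

Keeping the words ordered from weakest to strongest matters if the anchors are later used to orient an edit direction.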

Videos will be generated under the gifs folder.

Results

In-Distribution Results

fig4.mp4

Out-of-Distribution Results

Painting

painting.mp4

Video Game

game.mp4

Sketch

sketch.mp4

Statue

statue.mp4

Parent Repository

This code is adapted from https://github.com/orpatashnik/StyleCLIP and https://github.com/yuval-alaluf/restyle-encoder.