This is the official PyTorch implementation of DSF. For technical details, please refer to:

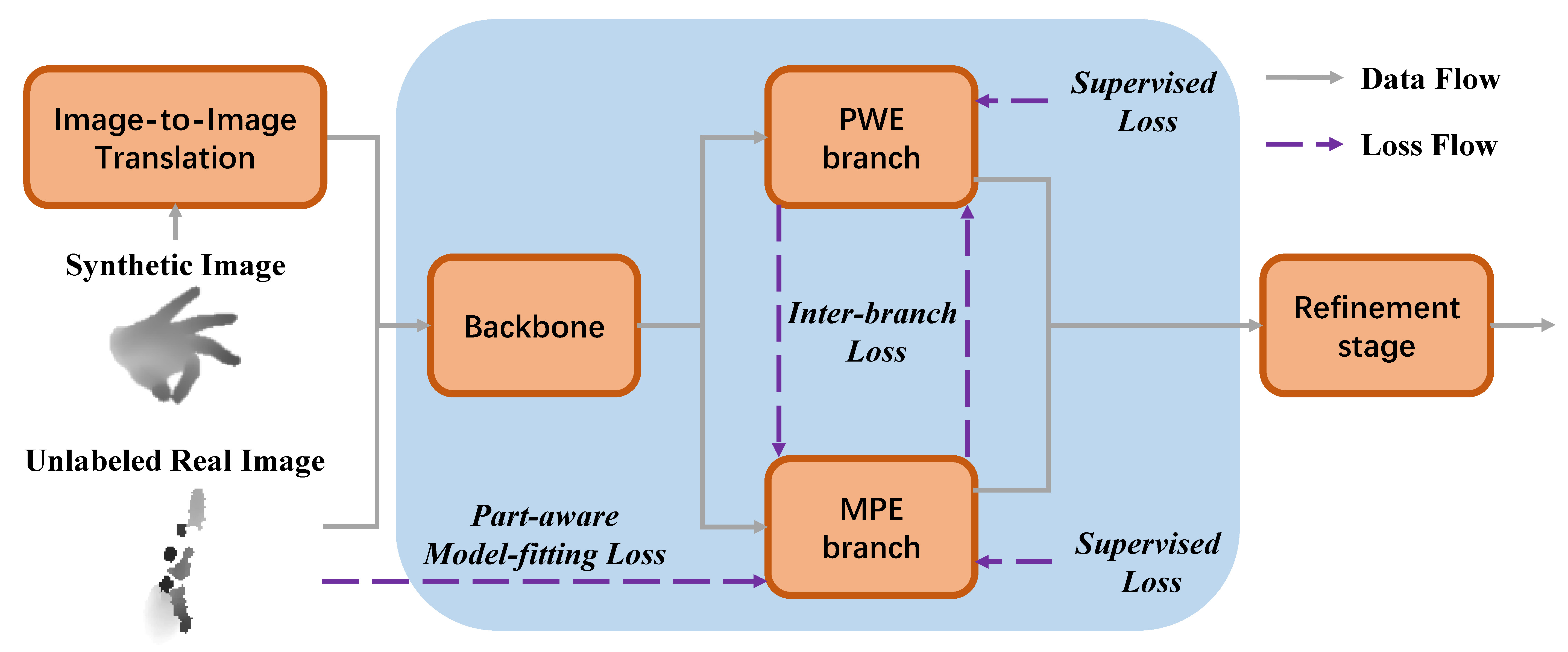

A Dual-Branch Self-Boosting Framework for Self-Supervised 3D Hand Pose Estimation

Pengfei Ren, Haifeng Sun, Jiachang Hao, Qi Qi, Jingyu Wang, Jianxin Liao

[Paper]

Compared with the semi-automatic annotations of the ICVL and MSRA datasets, our self-supervised method generates more accurate and robust 3D hand poses and hand meshes.

Using the 3D skeletons generated by DSF greatly improves the accuracy of skeleton-based action recognition. Values below are classification accuracies (%), and ↑ marks the absolute gain over the plain DG-STA row.

| Method | Modality | SHREC 14 | SHREC 28 | DHG 14 | DHG 28 |
|---|---|---|---|---|---|
| PointLSTM | Point clouds | 95.9 | 94.7 | - | - |
| Res-TCN | Skeleton | 91.1 | 87.3 | 86.9 | 83.6 |
| ST-GCN | Skeleton | 92.7 | 87.7 | 91.2 | 87.1 |
| STA-Res-TCN | Skeleton | 93.6 | 90.7 | 89.2 | 85.0 |
| ST-TS-HGR-NET | Skeleton | 94.3 | 89.4 | 87.3 | 83.4 |
| HPEV | Skeleton | 94.9 | 92.3 | 92.5 | 88.9 |
| DG-STA | Skeleton | 94.4 | 90.7 | 91.9 | 88.0 |
| DG-STA (AWR) | Skeleton | 96.3 ↑1.9 | 93.3 ↑2.6 | 94.5 ↑2.6 | 92.1 ↑4.1 |
| DG-STA (DSF) | Skeleton | 96.8 ↑2.4 | 95.0 ↑4.3 | 96.3 ↑4.4 | 95.9 ↑7.9 |
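The ↑ figures are absolute gains, in percentage points, of each DG-STA variant over the plain DG-STA row. The small sketch below recomputes them from the numbers in the table. The column order and variable names are ours, not from the repository.

```python
# Accuracies (%) copied from the table; column order: SHREC 14, SHREC 28, DHG 14, DHG 28.
BASELINE = (94.4, 90.7, 91.9, 88.0)  # plain DG-STA row
VARIANTS = {
    "DG-STA (AWR)": (96.3, 93.3, 94.5, 92.1),
    "DG-STA (DSF)": (96.8, 95.0, 96.3, 95.9),
}


def gains(scores, baseline=BASELINE):
    """Absolute improvement over the baseline in percentage points, rounded to 0.1."""
    return tuple(round(s - b, 1) for s, b in zip(scores, baseline))


if __name__ == "__main__":
    for name, scores in VARIANTS.items():
        print(name, gains(scores))
```

Rounding to one decimal place matches the precision of the table and hides floating-point noise such as `96.8 - 94.4 = 2.3999...`.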

- Python >= 3.8

- PyTorch >= 1.10

- pytorch3d == 0.4.0

- CUDA (tested with CUDA 11.3)

- Other dependencies listed in `requirements.txt`
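The sketch below checks an installed environment against the versions listed above. It uses only the standard library's `importlib.metadata`, so it runs even before PyTorch is installed. It assumes the packages are registered under the distribution names `torch` and `pytorch3d`. The CUDA version is not checked, and `check_environment` is a hypothetical helper, not part of this repository.

```python
import re
import sys
from importlib import metadata

# Constraints copied from the requirements list; everything else here is illustrative.
MIN_TORCH = (1, 10)
PYTORCH3D_PIN = (0, 4, 0)


def parse_version(text):
    """'1.10.0+cu113' -> (1, 10, 0); stops at the first component without a leading number."""
    parts = []
    for piece in text.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def installed_version(dist_name):
    """Installed version string of a distribution, or None if it is not installed."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def check_environment():
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    if sys.version_info < (3, 8):
        problems.append(f"Python >= 3.8 required, found {sys.version.split()[0]}")
    torch_version = installed_version("torch")
    if torch_version is None:
        problems.append("PyTorch is not installed")
    elif parse_version(torch_version) < MIN_TORCH:
        problems.append(f"PyTorch >= 1.10 required, found {torch_version}")
    p3d_version = installed_version("pytorch3d")
    if p3d_version is None:
        problems.append("pytorch3d is not installed")
    elif parse_version(p3d_version)[:3] != PYTORCH3D_PIN:
        problems.append(f"pytorch3d == 0.4.0 required, found {p3d_version}")
    return problems


if __name__ == "__main__":
    for line in check_environment() or ["Environment matches the listed requirements."]:
        print(line)
```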

- Go to the MANO website.
- Download the models and copy `models/MANO_RIGHT.pkl` into the `MANO` folder.
- Your folder structure should look like this:
  - `DSF/`
    - `MANO/`
      - `MANO_RIGHT.pkl`

- Download and decompress NYU and modify `root_dir` in `config.py` according to your setup.
- Download the center files [Google Drive] and put them into the `train` and `test` directories of NYU, respectively.
- Download the MANO parameter files we generated for the NYU dataset: MANO Files.
- Your folder structure should look like this:
  - `.../`
    - `nyu/`
      - `train/`
        - `center_train_0_refined.txt`
        - `center_train_1_refined.txt`
        - `center_train_2_refined.txt`
        - `...`
      - `test/`
        - `center_test_0_refined.txt`
        - `center_test_1_refined.txt`
        - `center_test_2_refined.txt`
        - `...`
      - `posePara_lm_collosion/`
        - `nyu-train-0-pose.txt`
        - `...`

- Download our model pretrained with self-supervised training [Google Drive]

- Download our model pretrained with only synthetic data [Google Drive]

- Download the Consis-CycleGAN model [Google Drive]
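The checkpoints above are selected through `config.py`, together with the phase and the training stage. As a hedged sketch, the settings that the run instructions in this README ask for can be written down as a checklist. The mode labels, the use of `phase` as a key name, and `missing_settings` are our own illustration. How `config.py` actually stores these values is not shown in this README.

```python
# Keys and values taken from this README's run instructions; the mode labels are hypothetical.
# Values in angle brackets are placeholders for user-specific paths.
RUN_MODES = {
    "test": {"phase": "test", "load_model": "<path to pretrained model>"},
    "self-supervised": {
        "phase": "train",
        "finetune_dir": "<path to synthetic-only pretrained model>",
        "tansferNet_pth": "<path to Consis-CycleGAN model>",
    },
    "pretrain": {"train_stage": "pretrain"},
}


def missing_settings(mode, config):
    """Return the keys in `config` that do not yet satisfy what `mode` requires.

    Placeholder entries only require the key to be set to a non-empty value;
    literal entries must match exactly.
    """
    problems = []
    for key, expected in RUN_MODES[mode].items():
        value = config.get(key)
        if expected.startswith("<"):
            if not value:
                problems.append(key)
        elif value != expected:
            problems.append(key)
    return problems


if __name__ == "__main__":
    print(missing_settings("test", {"phase": "train", "load_model": "ckpt.pth"}))
```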

To test a pretrained model, set `load_model` to the path of the pretrained model and change the `phase` to `"test"` in `config.py`, then run `python train_render.py`.

To perform self-supervised training, set `finetune_dir` to the path of the model pretrained with only synthetic data and `tansferNet_pth` to the path of the Consis-CycleGAN model in `config.py`. Then change the `phase` to `"train"` and run `python train_render.py`.

To perform pre-training, set `train_stage` to `"pretrain"` in `config.py` and run `python train_render.py`.

If you find our work useful in your research, please cite:

@ARTICLE{9841448,

author={Ren, Pengfei and Sun, Haifeng and Hao, Jiachang and Qi, Qi and Wang, Jingyu and Liao, Jianxin},

journal={IEEE Transactions on Image Processing},

title={A Dual-Branch Self-Boosting Framework for Self-Supervised 3D Hand Pose Estimation},

year={2022},

volume={31},

number={},

pages={5052-5066},

doi={10.1109/TIP.2022.3192708}}