Pytorch SSD-HarDNet

Harmonic DenseNet: A low memory traffic network (ICCV 2019)

Refer to Pytorch-HarDNet for more information

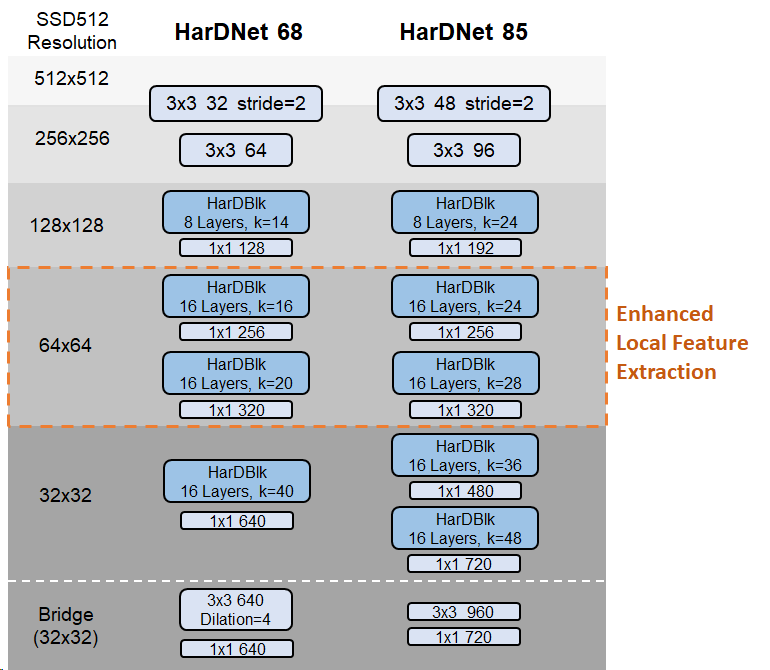

HarDNet68/85:

With enhanced feature extraction on high-resolution feature maps, object detection performance can be better than that of models designed for image classification, which generally concentrate on global feature extraction.

Results

| Method | COCO mAP on test-dev | Overall fps (Titan X) | Overall fps (1080Ti) | Overall fps (Titan V) |
|---|---|---|---|---|
| SSD512-HarDNet68 | 31.7 | 41 fps | 46.7 fps | 50.4 fps |
| SSD512-HarDNet85 | 35.1 | 32.7 fps | 39.4 fps | 43.4 fps |
| RFBNet512-HarDNet68 | 33.9 | 30 fps | 37.5 fps | 41.5 fps |
| RFBNet512-HarDNet85 | 36.8 | 26 fps | 33.5 fps | 37.1 fps |

Update (12/19/2019): Released new overall frame rate measurements after the NMS speed improvement*.

*NMS speed improvement: (1) use the torchvision NMS; (2) filter out bounding boxes that have a high probability of being background before running NMS.
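The two steps in the footnote can be sketched in plain Python as follows. This is a minimal illustration, not the repository's code: the repository uses the torchvision NMS on tensors, whereas `greedy_nms` below is a hypothetical stand-in that applies the same greedy rule, and the `bg_thresh` and `iou_thresh` values are assumed for the example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_thresh):
    """Return indices of kept boxes, highest score first (hypothetical stand-in)."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box
        if all(iou(boxes[i], boxes[k]) <= iou_thresh for k in keep):
            keep.append(i)
    return keep


def filter_then_nms(boxes, bg_probs, obj_scores, bg_thresh=0.99, iou_thresh=0.45):
    """Drop likely-background boxes first, then run NMS on the remainder."""
    # Assumed threshold: boxes with background probability >= bg_thresh are skipped
    candidates = [i for i, p in enumerate(bg_probs) if p < bg_thresh]
    kept = greedy_nms(
        [boxes[i] for i in candidates],
        [obj_scores[i] for i in candidates],
        iou_thresh,
    )
    # Map positions in the filtered list back to the original indices
    return [candidates[k] for k in kept]


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60), (100, 100, 110, 110)]
bg_probs = [0.05, 0.10, 0.20, 0.995]
obj_scores = [0.90, 0.80, 0.70, 0.004]
kept = filter_then_nms(boxes, bg_probs, obj_scores)
print(kept)
```

Filtering first shrinks the list that NMS has to sort and compare, which is where the saving comes from when most default boxes are background.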

| Method | COCO mAP on test-dev | Inference Time (1080Ti, without NMS) |
|---|---|---|
| SSD512-VGG16 | 28.8 | 19.7ms |
| SSD513-ResNet101 | 31.2 | - |
| SSD512-HarDNet68 | 31.7 | 13.8ms |
| SSD512-HarDNet85 | 35.1 | 18.5ms |
| RFBNet512-HarDNet68 | 33.9 | 20.0ms |
| RFBNet512-HarDNet85 | 36.8 | 23.2ms |

Note: Inference time and overall fps were measured with PyTorch 1.3 (float32) and CUDA 10.1. HarDNet still suffers from explicit tensor copies for concatenations. To fully utilize the GPU, increase the batch size or the input image size (> 512x512) at test time. The current results were measured with batch_size=1 and image_size=512x512, which uses only ~50% of GPU time on average.
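To relate the two tables, the calculation below converts the 1080Ti figures between latency and frame rate. It is only arithmetic on the numbers reported above. Attributing the remaining time per frame to NMS and other post-processing is an assumption, since the tables do not break it down.

```python
# (inference time without NMS in ms, overall fps), both on a 1080Ti, from the tables above
results_1080ti = {
    "SSD512-HarDNet68": (13.8, 46.7),
    "SSD512-HarDNet85": (18.5, 39.4),
    "RFBNet512-HarDNet68": (20.0, 37.5),
    "RFBNet512-HarDNet85": (23.2, 33.5),
}


def ms_to_fps(ms):
    """Frames per second for a given per-frame latency in milliseconds."""
    return 1000.0 / ms


def fps_to_ms(fps):
    """Per-frame latency in milliseconds for a given frame rate."""
    return 1000.0 / fps


remaining_ms = {}
for name, (infer_ms, overall_fps) in results_1080ti.items():
    overall_ms = fps_to_ms(overall_fps)
    # Assumed to be NMS plus other post-processing; not stated in the tables
    remaining_ms[name] = overall_ms - infer_ms
    print(
        f"{name}: network-only {ms_to_fps(infer_ms):.1f} fps, "
        f"overall {overall_ms:.1f} ms/frame, "
        f"remaining {remaining_ms[name]:.1f} ms/frame"
    )
```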

SSD512-HarDNet68 detailed results (test-dev):

overall performance

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.317

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.510

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.338

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.125

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.351

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.479

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.277

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.419

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.439

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.184

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.485

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.636

SSD512-HarDNet85 detailed results (test-dev):

overall performance

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.351

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.548

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.376

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.150

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.389

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.515

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.301

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.454

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.475

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.217

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.528

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.673

RFBNet512-HarDNet68 detailed results (test-dev):

overall performance

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.339

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.543

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.362

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.147

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.366

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.505

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.292

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.444

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.468

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.233

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.500

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.664

RFBNet512-HarDNet85 detailed results (test-dev):

overall performance

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.368

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.571

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.395

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.169

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.405

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.529

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.309

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.474

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.498

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.259

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.543

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.688

Installation

- Install PyTorch 0.2.0 - 0.4.1 by selecting your environment on the website and running the appropriate command.

- Clone this repository. This repository is forked from PytorchSSD.

- Compile the nms and coco tools. (The nms utilities need to be compiled with an old version of PyTorch. After compiling, you can upgrade PyTorch to the newest version.)

./make.sh

Note*: Check your GPU architecture support in utils/build.py, line 131. The default is:

'nvcc': ['-arch=sm_52',

- Then download the dataset by following the instructions below and install opencv.

conda install opencv

Note: For training, we currently support VOC and COCO.
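For the GPU architecture note in the compile step, the `-arch` value should match your card's CUDA compute capability. The helper below is a hypothetical convenience and not part of this repository; it only formats a (major, minor) capability pair into the flag string. With PyTorch installed, `torch.cuda.get_device_capability()` returns such a pair for the current GPU.

```python
def nvcc_arch_flag(capability):
    """Format a (major, minor) compute capability as an nvcc -arch flag."""
    major, minor = capability
    return f"-arch=sm_{major}{minor}"


# Compute capabilities for the default setting and two cards from the results tables
capabilities = {
    "default in utils/build.py (compute capability 5.2)": (5, 2),
    "GTX 1080 Ti": (6, 1),
    "Titan V": (7, 0),
}

for label, cap in capabilities.items():
    print(f"{label}: {nvcc_arch_flag(cap)}")
```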

Datasets

To make things easy, we provide simple VOC and COCO dataset loaders that inherit from torch.utils.data.Dataset, making them fully compatible with the torchvision.datasets API.

VOC Dataset

Download VOC2007 trainval & test

# specify a directory for dataset to be downloaded into, else default is ~/data/

sh data/scripts/VOC2007.sh # <directory>

Download VOC2012 trainval

# specify a directory for dataset to be downloaded into, else default is ~/data/

sh data/scripts/VOC2012.sh # <directory>

COCO Dataset

Install the MS COCO dataset at /path/to/coco from the official website; the default location is ~/data/COCO. Follow the instructions to prepare the minival2014 and valminusminival2014 annotations. All label files (.json) should be under the COCO/annotations/ folder. The directory should have this basic structure:

$COCO/

$COCO/cache/

$COCO/annotations/

$COCO/images/

$COCO/images/test2015/

$COCO/images/train2014/

$COCO/images/val2014/

UPDATE: The current COCO dataset has released new train2017 and val2017 sets which are just new splits of the same image sets.
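The directory structure listed above can be checked before training with a short script. This is a sketch, not a tool shipped with the repository: `missing_coco_dirs` is a hypothetical helper, and it treats every listed directory as required, although whether the loader creates `cache/` by itself is not stated here.

```python
from pathlib import Path

# Sub-directories listed in the structure above, relative to the COCO root
EXPECTED_SUBDIRS = [
    "cache",
    "annotations",
    "images",
    "images/test2015",
    "images/train2014",
    "images/val2014",
]


def missing_coco_dirs(coco_root):
    """Return the expected sub-directories that do not exist under coco_root."""
    root = Path(coco_root).expanduser()
    return [d for d in EXPECTED_SUBDIRS if not (root / d).is_dir()]


# ~/data/COCO is the default location mentioned above
missing = missing_coco_dirs("~/data/COCO")
if missing:
    print("Missing directories:", ", ".join(missing))
else:
    print("COCO directory layout looks complete.")
```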

Testing

python train_test.py -v SSD_HarDNet68 -s 512 --test <path_to_pretrained_weight.pth>

Pretrained Weights

- Pretrained backbone models: hardnet68_base_bridge.pth | hardnet85_base.pth

- Pretrained models for COCO dataset: SSD512-HarDNet68 | SSD512-HarDNet85 | RFBNet512-HarDNet68 | RFBNet512-HarDNet85

Training

- Run the following to train SSD-HarDNet:

python train_test.py -d VOC -v SSD_HarDNet68 -s 512

- Note:

- -d: choose datasets, VOC or COCO.

- -v: choose backbone version, SSD_HarDNet68 or SSD_HarDNet85.

- -s: image size, 300 or 512.

Hyperparameters

- batch size = 32

- epochs = 150 (COCO) / 300 (VOC)

- initial lr = 4e-3

- lr decay by 0.1 at [60%, 80%, 90%] of total epochs

- weight decay = 1e-4 (COCO) / 5e-4 (VOC)

- we (warm-up epochs) = 0 (no warm-up needed)
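The learning-rate schedule described by these hyperparameters can be written as a small function. This is a sketch under assumptions rather than the training script's actual code: it assumes 0-indexed epochs, assumes each decay takes effect at the first epoch at or past the milestone, and uses the hypothetical name `learning_rate`.

```python
def learning_rate(epoch, total_epochs, base_lr=4e-3, gamma=0.1,
                  milestones_pct=(60, 80, 90)):
    """Step-decay learning rate for a 0-indexed epoch.

    The rate is multiplied by gamma once for every milestone
    (given as a percentage of total_epochs) that has been reached.
    """
    # Integer comparison avoids floating-point rounding at the milestones
    n_decays = sum(1 for pct in milestones_pct
                   if epoch * 100 >= pct * total_epochs)
    return base_lr * gamma ** n_decays


# COCO uses 150 epochs and VOC uses 300, per the list above
for total in (150, 300):
    first_epochs = [pct * total // 100 for pct in (60, 80, 90)]
    print(f"total_epochs={total}: decays start at epochs {first_epochs}")
    for epoch in [0] + first_epochs:
        print(f"  epoch {epoch}: lr = {learning_rate(epoch, total):.1e}")
```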