This repository contains the official implementation of the following paper:

Towards An End-to-End Framework for Flow-Guided Video Inpainting

Zhen Li#, Cheng-Ze Lu#, Jianhua Qin, Chun-Le Guo*, Ming-Ming Cheng

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022

[Paper] [Project Page (TBD)] [Poster (TBD)] [Video (TBD)]

You can try our colab demo here:

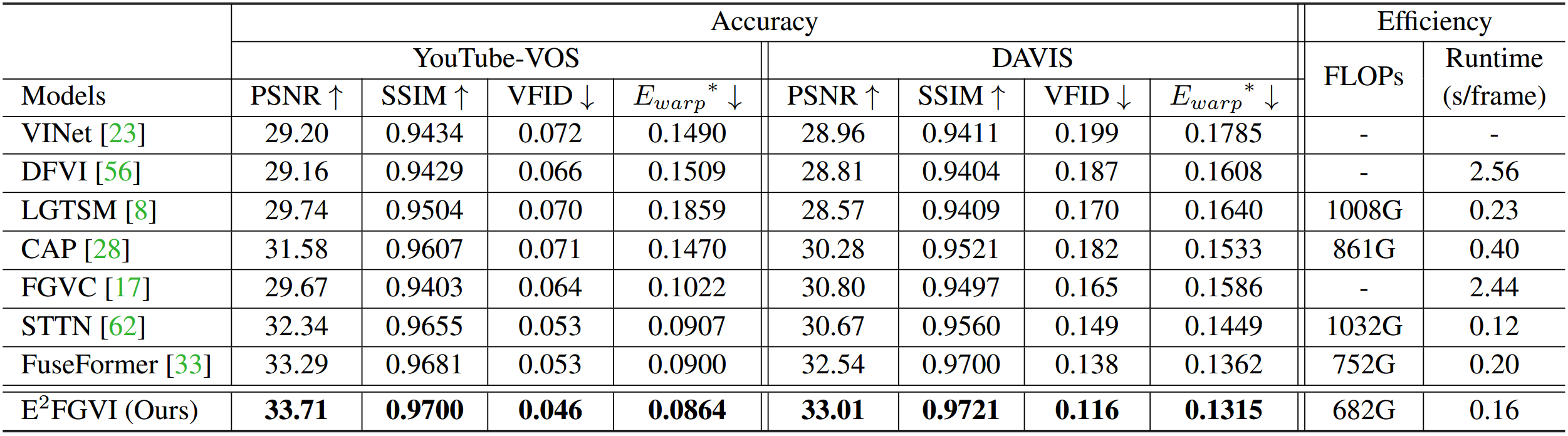

- SOTA performance: The proposed E2FGVI achieves significant improvements on all quantitative metrics in comparison with SOTA methods.

- High efficiency: Our method processes 432 × 240 videos at 0.12 seconds per frame on a Titan XP GPU, nearly 15× faster than previous flow-based methods. It also has the lowest FLOPs among all compared SOTA methods.

- Update website page

- High-resolution version

- Update Youtube / Bilibili link

- Clone Repo

git clone https://github.com/MCG-NKU/E2FGVI.git

- Create Conda Environment and Install Dependencies

conda env create -f environment.yml

conda activate e2fgvi

- Python >= 3.7

- PyTorch >= 1.5

- CUDA >= 9.2

- mmcv-full (follow its installation pipeline to install)

Before performing the following steps, please download our pretrained model first.

🔗 Download Links: [Google Drive] [Baidu Disk]

Then, unzip the file and place the models in the release_model directory.

The directory structure will be arranged as:

    release_model
       |- E2FGVI-CVPR22.pth
       |- i3d_rgb_imagenet.pt (for evaluating VFID metric)
       |- README.md
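A quick way to confirm that the files landed in the right place is a small check like the one below. It is only a sketch: the helper name `missing_model_files` is hypothetical and not part of this repository, and the file names are copied from the listing above.

```python
from pathlib import Path

# File names taken from the release_model listing above.
EXPECTED_FILES = ("E2FGVI-CVPR22.pth", "i3d_rgb_imagenet.pt")


def missing_model_files(model_dir="release_model"):
    """Return the expected pretrained files that are absent from model_dir."""
    root = Path(model_dir)
    return [name for name in EXPECTED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_model_files()
    if missing:
        print("Missing from release_model:", ", ".join(missing))
    else:
        print("All pretrained files are in place.")
```

Note that `i3d_rgb_imagenet.pt` is only needed for the VFID metric, so a missing entry for it matters only for evaluation.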

We provide two examples in the examples directory.

Run the following commands to try them:

# The first example (using split video frames)

python test.py --video examples/tennis --mask examples/tennis_mask --ckpt release_model/E2FGVI-CVPR22.pth

# The second example (using mp4 format video)

python test.py --video examples/schoolgirls.mp4 --mask examples/schoolgirls_mask --ckpt release_model/E2FGVI-CVPR22.pth

The inpainting video will be saved in the results directory.

Please prepare your own mp4 video (or split frames) and frame-wise masks if you want to test more cases.
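When working from split frames, it can help to verify that the frame and mask folders line up before running the test script. The sketch below assumes one mask image per frame and that frames and masks pair up in sorted file-name order. Both points are assumptions, as are the helper names and the accepted file suffixes.

```python
from pathlib import Path

# Assumed image formats for frames and masks.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def list_images(folder):
    """Return the image files in folder, sorted by file name."""
    return sorted(
        p for p in Path(folder).iterdir() if p.suffix.lower() in IMAGE_SUFFIXES
    )


def check_frame_mask_pair(frame_dir, mask_dir):
    """Return the number of frames; raise ValueError if the mask count differs."""
    frames = list_images(frame_dir)
    masks = list_images(mask_dir)
    if len(frames) != len(masks):
        raise ValueError(f"{len(frames)} frames but {len(masks)} masks")
    return len(frames)
```

For the first bundled example, this would be called as `check_frame_mask_pair("examples/tennis", "examples/tennis_mask")`.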

| Dataset | YouTube-VOS | DAVIS |
|---|---|---|
| Details | For training (3,471) and evaluation (508) | For evaluation (50 in 90) |
| Images | [Official Link] (Download train and test all frames) | [Official Link] (2017, 480p, TrainVal) |
| Masks | [Google Drive] [Baidu Disk] (For reproducing paper results) | |

The training and test split files are provided in datasets/<dataset_name>.

For each dataset, you should place JPEGImages to datasets/<dataset_name>.

Then, run sh datasets/zip_dir.sh (Note: please edit the folder path accordingly) to compress each video in datasets/<dataset_name>/JPEGImages.

Unzip downloaded mask files to datasets.

The datasets directory structure will be arranged as: (Note: please check it carefully)

    datasets
       |- davis
          |- JPEGImages
             |- <video_name>.zip
             |- <video_name>.zip
          |- test_masks
             |- <video_name>
                |- 00000.png
                |- 00001.png
          |- train.json
          |- test.json
       |- youtube-vos
          |- JPEGImages
             |- <video_id>.zip
             |- <video_id>.zip
          |- test_masks
             |- <video_id>
                |- 00000.png
                |- 00001.png
          |- train.json
          |- test.json
       |- zip_file.sh
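Since the layout needs careful checking, a small script can do a first pass. The sketch below only tests for the entries shown in the listing above (the two split files, zipped videos under JPEGImages, and per-video folders under test_masks). The function name `check_dataset_layout` is hypothetical, and the script does not inspect the contents of the archives.

```python
from pathlib import Path


def check_dataset_layout(data_root, dataset_name):
    """Return a list of problems found under data_root/dataset_name."""
    root = Path(data_root) / dataset_name
    problems = []

    # Split files listed in the directory structure.
    for name in ("train.json", "test.json"):
        if not (root / name).is_file():
            problems.append(f"missing file: {root / name}")

    # JPEGImages should hold one .zip archive per video.
    frames_dir = root / "JPEGImages"
    if not frames_dir.is_dir():
        problems.append(f"missing directory: {frames_dir}")
    elif not any(frames_dir.glob("*.zip")):
        problems.append(f"no .zip archives in: {frames_dir}")

    # test_masks should hold one sub-folder of PNG masks per video.
    masks_dir = root / "test_masks"
    if not masks_dir.is_dir():
        problems.append(f"missing directory: {masks_dir}")
    elif not any(p.is_dir() for p in masks_dir.iterdir()):
        problems.append(f"no video folders in: {masks_dir}")

    return problems
```

Calling `check_dataset_layout("datasets", "davis")` returns an empty list when nothing is missing; the same call with `"youtube-vos"` covers the second dataset.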

Run the following command for evaluation:

python evaluate.py --dataset <dataset_name> --data_root datasets/ --ckpt release_model/E2FGVI-CVPR22.pth

You will get the scores reported in the paper.

The scores will also be saved in the results/<dataset_name> directory.

Please add the --save_results flag to save the results for further evaluation of the temporal warping error.
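To evaluate both datasets in one go, the command can be wrapped in a short script. This is a sketch, not part of the repository: the helper names are hypothetical, and it assumes that `--dataset` accepts the directory names `davis` and `youtube-vos` used under datasets/. The flags themselves are the ones shown in this section.

```python
import subprocess
import sys


def build_eval_command(dataset, data_root="datasets/",
                       ckpt="release_model/E2FGVI-CVPR22.pth",
                       save_results=False):
    """Build the evaluate.py command line as a list of arguments."""
    cmd = [sys.executable, "evaluate.py",
           "--dataset", dataset,
           "--data_root", data_root,
           "--ckpt", ckpt]
    if save_results:
        cmd.append("--save_results")
    return cmd


def evaluate_all(datasets=("davis", "youtube-vos"), save_results=True):
    """Run the evaluation for each dataset and stop on the first failure."""
    for dataset in datasets:
        subprocess.run(build_eval_command(dataset, save_results=save_results),
                       check=True)
```

Run `evaluate_all()` from the repository root so that `evaluate.py` and the relative paths resolve.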

Our training configuration is provided in configs/train_e2fgvi.json.

Run the following command for training:

python train.py -c configs/train_e2fgvi.json

You can run the same command to resume training.

The training loss can be monitored by running:

tensorboard --logdir release_model

You can follow the evaluation steps above to evaluate your model.

If you find our repo useful for your research, please consider citing our paper:

@inproceedings{liCvpr22vInpainting,
   title={Towards An End-to-End Framework for Flow-Guided Video Inpainting},
   author={Li, Zhen and Lu, Cheng-Ze and Qin, Jianhua and Guo, Chun-Le and Cheng, Ming-Ming},
   booktitle={IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
   year={2022}
}

If you have any questions, please feel free to contact us via zhenli1031ATgmail.com or czlu919AToutlook.com.

This repository is maintained by Zhen Li and Cheng-Ze Lu.

This code is based on STTN, FuseFormer, Focal-Transformer, and MMEditing.