fcenet error

justcodew opened this issue · comments

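I want to use FCENet to test detection on curved text. Training runs normally on the ICDAR2015 dataset, but after switching to the ICDAR2019-ArT dataset, training fails with the following error: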

error happened with msg: Traceback (most recent call last):
  File "/home/code/PaddleOCR/ppocr/data/simple_dataset.py", line 136, in __getitem__
    outs = transform(data, self.ops)
  File "/home/code/PaddleOCR/ppocr/data/imaug/__init__.py", line 50, in transform
    data = op(data)
  File "/home/code/PaddleOCR/ppocr/data/imaug/fce_targets.py", line 662, in __call__
    results = self.generate_targets(results)
  File "/home/code/PaddleOCR/ppocr/data/imaug/fce_targets.py", line 649, in generate_targets
    polygon_masks_ignore)
  File "/home/code/PaddleOCR/ppocr/data/imaug/fce_targets.py", line 608, in generate_level_targets
    level_img_size, lv_text_polys[ind])[None]
  File "/home/code/PaddleOCR/ppocr/data/imaug/fce_targets.py", line 333, in generate_center_region_mask
    center_line = (resampled_top_line + resampled_bot_line) / 2
ValueError: operands could not be broadcast together with shapes (3,2) (2,2)
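The last frame is a NumPy broadcasting failure: the two resampled sidelines hold a different number of points (3 versus 2), so they cannot be averaged element-wise. Here is a minimal reproduction that is independent of PaddleOCR. The coordinates are made up and the helper `center_line_or_error` is hypothetical; only the shapes match the traceback.

```python
import numpy as np

# Made-up sidelines; only the shapes match the traceback.
resampled_top_line = np.array([[0.0, 0.0], [5.0, 0.0], [10.0, 0.0]])  # shape (3, 2)
resampled_bot_line = np.array([[0.0, 4.0], [10.0, 4.0]])              # shape (2, 2)


def center_line_or_error(top, bot):
    """Return the midline, or the ValueError message if the shapes do not broadcast."""
    try:
        return (top + bot) / 2
    except ValueError as exc:
        return str(exc)


result = center_line_or_error(resampled_top_line, resampled_bot_line)
print(result)
```

So whatever resamples the top and bottom lines is returning different point counts for this polygon.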

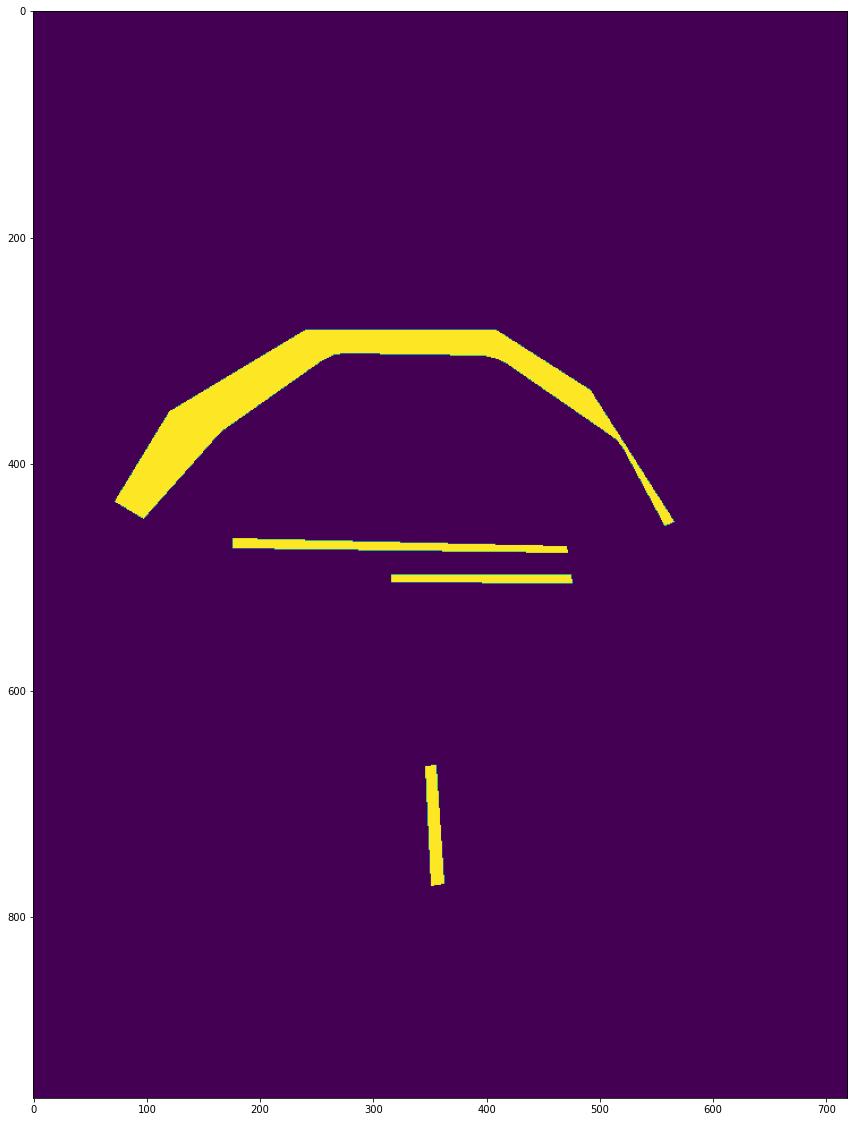

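I have visualized the ICDAR2019-ArT annotations and they look fine. I suspect the problem is in this code, which appears to have been ported from mmocr.

Could you take a look?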

Could you provide the specific case that goes out of bounds?

/train/img/gt_1807.jpg [{"transcription": "**少林武术培训", "points": [[26, 445], [95, 330], [231, 248], [417, 248], [515, 311], [614, 467], [542, 497], [491, 403], [397, 338], [274, 336], [186, 398], [104, 491]], "language": "Chinese", "illegibility": false}, {"transcription": "报名热线: 15221362588", "points": [[165, 455], [481, 462], [482, 489], [165, 485]], "language": "Chinese", "illegibility": false}, {"transcription": "021-20983256", "points": [[307, 490], [481, 490], [484, 513], [309, 512]], "language": "Latin", "illegibility": false}, {"transcription": "少林武术", "points": [[335, 658], [364, 655], [373, 779], [342, 783]], "language": "Chinese", "illegibility": false}]

Got it.

This is exactly the image I used. The test sometimes runs normally and sometimes raises the error. The test code is as follows:

import numpy as np
from paddle.io import DataLoader, BatchSampler
from ppocr.data.simple_dataset import SimpleDataSet
from ppocr.utils.loggers import VDLLogger
import yaml
import random
import paddle
from ppocr.utils.logging import get_logger


def set_seed(seed=1024):
    random.seed(seed)
    np.random.seed(seed)
    paddle.seed(seed)


def build_dataloader(mode, batch_size, drop_last, shuffle, num_workers):
    # `config` and `logger` are module-level globals assigned in the __main__ block below.
    # dataset = SimpleDataSet(config,mode, logger, seed=0.5)
    dataset = SimpleDataSet(config, mode, logger, seed=None)
    batch_sampler = BatchSampler(dataset=dataset, batch_size=batch_size, shuffle=shuffle, drop_last=drop_last)
    data_loader = DataLoader(dataset=dataset, batch_sampler=batch_sampler, num_workers=num_workers, return_list=True, use_shared_memory=False)
    return data_loader


if __name__ == '__main__':
    save_model_dir = './test_data/'
    loggers = VDLLogger(save_model_dir)
    log_file = './test_data/test_data_0607.log'
    logger = get_logger(log_file=log_file)
    config_file = r'/home/justcodew/code/PaddleOCR/configs/det/det_r50_vd_dcn_fce_art_error.yml'
    config = yaml.load(open(config_file, 'rb'), Loader=yaml.Loader)
    seed = config['Global']['seed'] if 'seed' in config['Global'] else 1024
    set_seed(seed)
    train_dataloader = build_dataloader('Train', batch_size=1, drop_last=False, shuffle=False, num_workers=1)
    # Iterate once over the dataset; the transform error surfaces while batches are built.
    for indx, data in enumerate(train_dataloader):
        print('indx ', indx)

Config file:

Global:
  use_gpu: true
  epoch_num: 1500
  log_smooth_window: 20
  print_batch_step: 200
  save_model_dir: ./output/det_fce/det_r50_dcn_fce_art/
  save_epoch_step: 1000
  eval_batch_step: [0, 200]
  cal_metric_during_train: False
  pretrained_model: ./pretrain_models/resnet50_pretrain_models/ResNet50_vd_ssld_pretrained
  checkpoints:
  save_inference_dir: ./output/det_fce/det_r50_dcn_fce_0620_art/
  use_visualdl: True
  infer_img: doc/imgs_en/img_10.jpg
  save_res_path: ./output/det_fce/predicts_fce.txt

Architecture:
  model_type: det
  algorithm: FCE
  Transform:
  Backbone:
    name: ResNet
    layers: 50
    #dcn_stage: [False, True, True, True]
    out_indices: [1,2,3]
  Neck:
    name: FCEFPN
    out_channels: 256
    has_extra_convs: False
    extra_stage: 0
  Head:
    name: FCEHead
    fourier_degree: 5

Loss:
  name: FCELoss
  fourier_degree: 5
  num_sample: 50

Optimizer:
  name: Adam
  beta1: 0.9
  beta2: 0.999
  lr:
    learning_rate: 0.0001
  regularizer:
    name: 'L2'
    factor: 0

PostProcess:
  name: FCEPostProcess
  scales: [8, 16, 32]
  alpha: 1.0
  beta: 1.0
  fourier_degree: 5
  box_type: 'poly'

Metric:
  name: DetMetric
  main_indicator: hmean

Train:
  dataset:
    name: SimpleDataSet
    data_dir: /data/justcodew/ocr_data/icdar_convert/icdar_2019_art/
    label_file_list:
      - /data/justcodew/ocr_data/icdar_convert/icdar_2019_art/ppocr_train_label_error.txt
    transforms:
      - DecodeImage: # load image
          img_mode: BGR
          channel_first: False
          ignore_orientation: True
      - DetLabelEncode: # Class handling label
      - ColorJitter:
          brightness: 0.142
          saturation: 0.5
          contrast: 0.5
      - RandomScaling:
      - RandomCropFlip:
          crop_ratio: 0.5
      - RandomCropPolyInstances:
          crop_ratio: 0.8
          min_side_ratio: 0.3
      - RandomRotatePolyInstances:
          rotate_ratio: 0.5
          max_angle: 30
          pad_with_fixed_color: False
      - SquareResizePad:
          target_size: 800
          pad_ratio: 0.6
      - IaaAugment:
          augmenter_args:
            - { 'type': Fliplr, 'args': { 'p': 0.5 } }
      - FCENetTargets:
          fourier_degree: 5
      - NormalizeImage:
          scale: 1./255.
          mean: [0.485, 0.456, 0.406]
          std: [0.229, 0.224, 0.225]
          order: 'hwc'
      - ToCHWImage:
      - KeepKeys:
          keep_keys: ['image', 'p3_maps', 'p4_maps', 'p5_maps'] # dataloader will return list in this order
  loader:
    shuffle: False
    drop_last: False
    batch_size_per_card: 1
    num_workers: 1

Eval:
  dataset:
    name: SimpleDataSet
    data_dir: /data/justcodew/ocr_data/icdar_convert/icdar_2019_art/
    label_file_list:
      - /data/justcodew/ocr_data/icdar_convert/icdar_2019_art/ppocr_test_label.txt
    transforms:
      - DecodeImage: # load image
          img_mode: BGR
          channel_first: False
          ignore_orientation: True
      - DetLabelEncode: # Class handling label
      - DetResizeForTest:
          limit_type: 'min'
          limit_side_len: 736
      - NormalizeImage:
          scale: 1./255.
          mean: [0.485, 0.456, 0.406]
          std: [0.229, 0.224, 0.225]
          order: 'hwc'
      - Pad:
      - ToCHWImage:
      - KeepKeys:
          keep_keys: ['image', 'shape', 'polys', 'ignore_tags']
  loader:
    shuffle: False
    drop_last: False
    batch_size_per_card: 1 # must be 1
    num_workers: 2

Reproduced. It is caused by some boundary cases, and it is fixed in #6693.

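Which part of the code was changed, specifically?

Also, where does the randomness come from when running the code? I have already set shuffle: False, drop_last: False, batch_size_per_card: 1 and seed=None, yet it still sometimes runs normally and sometimes raises the error.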
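One plausible source of the run-to-run variation, which nobody in this thread confirms, is the augmentation pipeline itself: the config lists several transforms with a ratio or probability (RandomCropFlip, RandomRotatePolyInstances, Fliplr), and those decisions do not depend on shuffle or batch size. The toy model below treats these ratios as firing probabilities (an assumption) and does not call any PaddleOCR code; `apply_with_probability` and `simulate_pipeline` are hypothetical names.

```python
import numpy as np


def apply_with_probability(rng, ratio):
    """Return True when a transform with firing probability `ratio` would run."""
    return bool(rng.random() < ratio)


def simulate_pipeline(seed=None):
    # Ratios copied from the config in this thread; the decision logic is a toy model.
    ratios = {
        "RandomCropFlip": 0.5,
        "RandomRotatePolyInstances": 0.5,
        "Fliplr": 0.5,
    }
    rng = np.random.default_rng(seed)  # seed=None draws fresh entropy on every run
    return {name: apply_with_probability(rng, p) for name, p in ratios.items()}


print(simulate_pipeline())        # may differ between runs
print(simulate_pipeline(seed=0))  # identical on every run
```

If this is the cause, the same image can reach FCENetTargets with different polygons on each run, which would explain why the error is intermittent.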

I see it now:

add boader judge

There are some problems in the FCENet data augmentation code.

https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/ppocr/data/imaug/fce_aug.py#L346

【1】

The method in RandomCropPolyInstances can leave results['polys'] empty.

A check needs to be added:

if len(valid_masks_list) > 0:
    results['polys'] = np.array(valid_masks_list)
    results['ignore_tags'] = valid_tags_list
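The guard proposed above can be sketched as a standalone function. `update_polys` is a hypothetical helper written for illustration, not the actual PaddleOCR code, and it assumes both assignments belong inside the `if`.

```python
import numpy as np


def update_polys(results, valid_masks_list, valid_tags_list):
    """Overwrite the annotations only when the crop kept at least one polygon."""
    if len(valid_masks_list) > 0:
        results['polys'] = np.array(valid_masks_list)
        results['ignore_tags'] = valid_tags_list
    return results


original = {'polys': np.zeros((1, 4, 2)), 'ignore_tags': [False]}

# Crop kept nothing: the previous annotations are left untouched.
kept_none = update_polys(dict(original), [], [])

# Crop kept two quadrilaterals: the annotations are replaced.
kept_two = update_polys(dict(original), [np.ones((4, 2)), np.ones((4, 2))], [False, True])

print(kept_none['polys'].shape, kept_two['polys'].shape)
```

Whether keeping the previous polygons is the right fallback after a crop is a separate question that this sketch does not answer.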

【2】Several check_argument calls in the code have not been commented out, but that function does not exist here (it comes from mmocr).

https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/ppocr/data/imaug/fce_targets.py#L500

When every vertex of a poly in generate_fourier_maps is the same point, cal_fourier_signature fails.

For example, let

poly = np.array([[59.50518, 24.676094],
                 [59.50518, 24.676094],
                 [59.50518, 24.676094],
                 [59.50518, 24.676094],
                 [59.50518, 24.676094],
                 [59.50518, 24.676094],
                 [59.50518, 24.676094],
                 [59.50518, 24.676094],
                 [59.50518, 24.676094],
                 [59.50518, 24.676094]])
mask = np.zeros((h, w), dtype=np.uint8)
polygon = np.array(poly).reshape((1, -1, 2))
cv2.fillPoly(mask, polygon.astype(np.int32), 1)
fourier_coeff = self.cal_fourier_signature(polygon[0], k)
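One possible way to catch this case before the Fourier signature is computed is to skip polygons with (near-)zero area. This is a sketch under assumptions, not the fix merged into PaddleOCR: `polygon_area`, `is_degenerate`, and the `min_area` threshold are hypothetical.

```python
import numpy as np


def polygon_area(poly):
    """Absolute area of a polygon given as an (N, 2) array (shoelace formula)."""
    x, y = poly[:, 0], poly[:, 1]
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))


def is_degenerate(poly, min_area=1e-6):
    """True for polygons with fewer than 3 vertices or with negligible area."""
    poly = np.asarray(poly, dtype=np.float64).reshape(-1, 2)
    return bool(len(poly) < 3 or polygon_area(poly) < min_area)


# The collapsed polygon from this comment: ten copies of the same vertex.
point_poly = np.tile([59.50518, 24.676094], (10, 1))
square = np.array([[0, 0], [4, 0], [4, 4], [0, 4]])

print(is_degenerate(point_poly), is_degenerate(square))  # True False
```

A caller could use such a check to skip or ignore the instance instead of passing it on to cal_fourier_signature.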

Also, your implementation here differs somewhat from mmocr's:

https://github.com/open-mmlab/mmocr/blob/b8f7ead74cb0200ad5c422e82724ca6b2eb1c543/mmocr/datasets/pipelines/textdet_targets/fcenet_targets.py#L243

Why does your implementation drop text_instance?

text_instance = [[poly[0][i], poly[0][i + 1]]
                 for i in range(0, len(poly[0]), 2)]
mask = np.zeros((h, w), dtype=np.uint8)
polygon = np.array(text_instance).reshape((1, -1, 2))
cv2.fillPoly(mask, polygon.astype(np.int32), 1)
fourier_coeff = self.cal_fourier_signature(polygon[0], k)
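A possible explanation, which this thread does not confirm: in the mmocr snippet, poly[0] is a flat coordinate list and text_instance only regroups it into (x, y) pairs. If the PaddleOCR port already stores each polygon as an (N, 2) array (an assumption), the regrouping step would be redundant. The sketch below checks that both routes give the same array, using the first four vertices of the sample annotation.

```python
import numpy as np

flat = [26, 445, 95, 330, 231, 248, 417, 248]  # x0, y0, x1, y1, ...
poly = [flat]                                  # mmocr-style: poly[0] is the flat list

# mmocr route: regroup the flat list into (x, y) pairs, then reshape.
text_instance = [[poly[0][i], poly[0][i + 1]]
                 for i in range(0, len(poly[0]), 2)]
polygon_mmocr = np.array(text_instance).reshape((1, -1, 2))

# Assumed PaddleOCR route: the polygon is already an (N, 2) array.
poly_nx2 = np.array(flat).reshape(-1, 2)
polygon_direct = np.array(poly_nx2).reshape((1, -1, 2))

same = np.array_equal(polygon_mmocr, polygon_direct)
print(same)
```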