Omkar Thawakar, Sanath Narayan, Jiale Cao, Hisham Cholakkal, Rao Muhammad Anwer, Muhammad Haris Khan, Salman Khan, Michael Felsberg, Fahad Shahbaz Khan [ ECCV 2022 ]

- (Aug 27, 2022) Swin pretrained model and result file added (swin).
- (Aug 16, 2022) Code uploaded.
- (April 3, 2022) Project repo created.

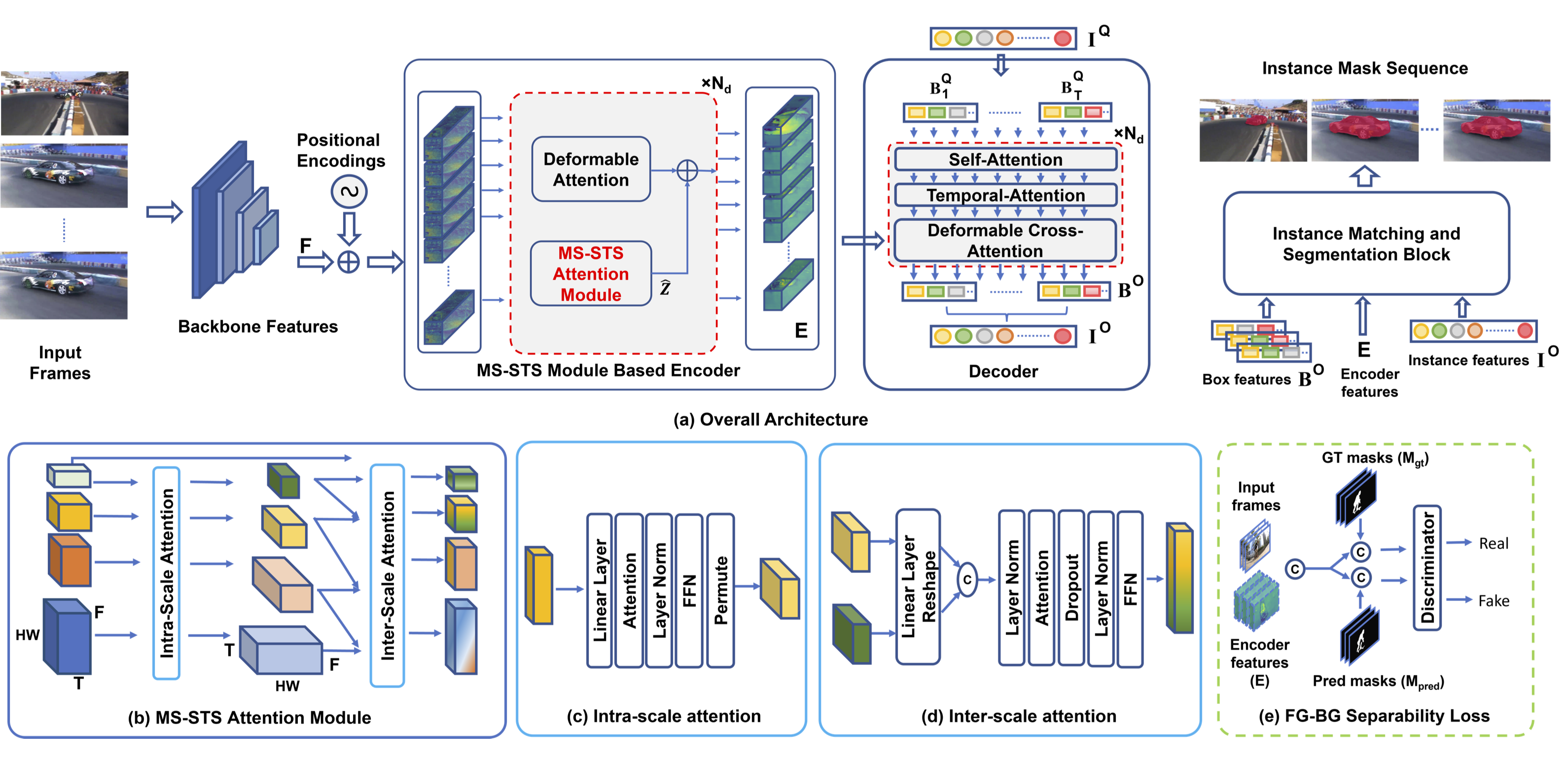

State-of-the-art transformer-based video instance segmentation (VIS) approaches typically utilize either single-scale spatio-temporal features or per-frame multi-scale features during the attention computations. We argue that such an attention computation ignores the multi-scale spatio-temporal feature relationships that are crucial to tackle target appearance deformations in videos. To address this issue, we propose a transformer-based VIS framework, named MS-STS VIS, that comprises a novel multi-scale spatio-temporal split (MS-STS) attention module in the encoder. The proposed MS-STS module effectively captures spatio-temporal feature relationships at multiple scales across frames in a video. We further introduce an attention block in the decoder to enhance the temporal consistency of the detected instances in different frames of a video. Moreover, an auxiliary discriminator is introduced during training to ensure better foreground-background separability within the multi-scale spatio-temporal feature space. We conduct extensive experiments on two benchmarks: YouTube-VIS 2019 and YouTube-VIS 2021. Our MS-STS VIS achieves state-of-the-art performance on both benchmarks. With a ResNet50 backbone, MS-STS VIS achieves a mask AP of 50.1% on the YouTube-VIS 2019 val set, outperforming the best reported results in the literature by 2.7% overall and by 4.8% at the higher overlap threshold of AP75, while being comparable in model size and speed. With a Swin Transformer backbone, MS-STS VIS achieves a mask AP of 61.0% on the YouTube-VIS 2019 val set.
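To make the idea of "splitting" attention along time concrete, here is a deliberately simplified, hypothetical illustration: each spatial token attends only to the tokens at the same spatial position in all frames, instead of to every token in the clip. It is not the MS-STS module from this repo. It omits the multi-scale part, learned query/key/value projections, and everything else in the actual encoder, and all names (`temporal_split_attention`, `attention_pair_counts`) are illustrative.

```python
import math
from typing import List, Tuple

Vector = List[float]
Clip = List[List[Vector]]  # clip[t][n] = D-dim feature of spatial token n in frame t


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    # Scaled dot-product attention for a single query (no learned projections).
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([scale * sum(q * k for q, k in zip(query, key)) for key in keys])
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


def temporal_split_attention(clip: Clip) -> Clip:
    # Hypothetical simplification: each token attends only to the T tokens at
    # its own spatial position, instead of all T * N tokens in the clip.
    num_frames, num_tokens = len(clip), len(clip[0])
    out: Clip = [[[] for _ in range(num_tokens)] for _ in range(num_frames)]
    for n in range(num_tokens):
        track = [clip[t][n] for t in range(num_frames)]  # one location over time
        for t in range(num_frames):
            out[t][n] = attend(clip[t][n], track, track)
    return out


def attention_pair_counts(num_frames: int, num_tokens: int) -> Tuple[int, int]:
    # (query, key) pairs scored by joint attention vs. temporal split attention.
    joint = (num_frames * num_tokens) ** 2
    split = num_tokens * num_frames ** 2
    return joint, split


if __name__ == "__main__":
    clip = [[[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5], [1.0, 1.0]], [[0.0, 1.0], [2.0, 0.0]]]
    fused = temporal_split_attention(clip)
    print(len(fused), len(fused[0]), len(fused[0][0]))  # 3 2 2
    print(attention_pair_counts(5, 100))  # (250000, 2500)
```

The pair counts show why factorizing helps: joint attention over a clip scores (T·N)² pairs, while the temporal split scores N·T², a factor of N fewer.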

First, clone the repository locally:

```
git clone https://github.com/OmkarThawakar/MSSTS-VIS.git
cd MSSTS-VIS
```

Then, create the conda environment:

```
conda env create -f env.yml
```

Install the remaining dependencies and pycocotools for VIS:

```
pip install -r requirements.txt
pip install git+https://github.com/youtubevos/cocoapi.git#"egg=pycocotools&subdirectory=PythonAPI"
```

Compile the CUDA operators:

```
cd ./models/ops
sh ./make.sh
# unit test (all checks should print True)
python test.py
```

Download and extract the 2019 version of the YouTube-VIS train and val images with annotations from CodaLab or YouTube-VIS, and download the COCO 2017 dataset. We expect the following directory structure:

```
MSSTS-VIS
├── datasets
│   ├── coco_keepfor_ytvis19.json
...
ytvis
├── train
├── val
├── annotations
│   ├── instances_train_sub.json
│   ├── instances_val_sub.json
coco
├── train2017
├── val2017
├── annotations
│   ├── instances_train2017.json
│   ├── instances_val2017.json
```

The modified COCO annotations file `coco_keepfor_ytvis19.json` for joint training (taken from SeqFormer) can be downloaded from [google].
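Before training, it can help to confirm that the files are where the tree above expects them. The following is a hypothetical helper (`check_dataset_layout` is not part of the repo). Because the tree elides (`...`) where `ytvis` and `coco` sit relative to `datasets`, their roots are passed in explicitly rather than guessed.

```python
import sys
from pathlib import Path
from typing import Iterable, List

# Relative paths taken from the directory tree above.
YTVIS_EXPECTED = [
    "train",
    "val",
    "annotations/instances_train_sub.json",
    "annotations/instances_val_sub.json",
]
COCO_EXPECTED = [
    "train2017",
    "val2017",
    "annotations/instances_train2017.json",
    "annotations/instances_val2017.json",
]


def missing_under(root: Path, expected: Iterable[str]) -> List[str]:
    # Return the expected entries that do not exist below `root`.
    return [str(root / rel) for rel in expected if not (root / rel).exists()]


def check_dataset_layout(repo_root: str, ytvis_root: str, coco_root: str) -> List[str]:
    # Hypothetical helper: list every missing file or folder (empty list = OK).
    missing = []
    keep_json = Path(repo_root) / "datasets" / "coco_keepfor_ytvis19.json"
    if not keep_json.is_file():
        missing.append(str(keep_json))
    missing += missing_under(Path(ytvis_root), YTVIS_EXPECTED)
    missing += missing_under(Path(coco_root), COCO_EXPECTED)
    return missing


if __name__ == "__main__" and len(sys.argv) == 4:
    # Usage: python check_layout.py <repo_root> <ytvis_root> <coco_root>
    problems = check_dataset_layout(*sys.argv[1:4])
    for p in problems:
        print("missing:", p)
    sys.exit(1 if problems else 0)
```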

We performed the experiments on NVIDIA Tesla V100 GPUs. All models are trained with a total batch size of 10.

To train the model on YouTube-VIS 2019 with 8 GPUs, run:

```
GPUS_PER_NODE=8 ./tools/run_dist_launch.sh 8 ./configs/r50_mssts_ablation.sh
```

To train the model jointly on YouTube-VIS 2019 and COCO 2017, run:

```
GPUS_PER_NODE=8 ./tools/run_dist_launch.sh 8 ./configs/r50_mssts.sh
```

To train the Swin model on two nodes with 8 GPUs each (16 GPUs in total), run:

On node 1:

```
MASTER_ADDR=<IP address of node 1> NODE_RANK=0 GPUS_PER_NODE=8 ./tools/run_dist_launch.sh 16 ./configs/swin_mssts.sh
```

On node 2:

```
MASTER_ADDR=<IP address of node 1> NODE_RANK=1 GPUS_PER_NODE=8 ./tools/run_dist_launch.sh 16 ./configs/swin_mssts.sh
```
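Judging from the commands above, the first argument to `run_dist_launch.sh` is the total number of GPUs and `GPUS_PER_NODE` is the number per machine, so the Swin run spans 16 / 8 = 2 nodes. The sketch below spells out that arithmetic under the usual `torch.distributed` convention (global rank = node rank × GPUs per node + local rank). `launch_plan` is a hypothetical helper, and whether the repo's launcher assigns ranks exactly this way is an assumption.

```python
from typing import List, Tuple


def launch_plan(total_gpus: int, gpus_per_node: int, node_rank: int) -> Tuple[int, List[int]]:
    """Return (number of nodes, global ranks hosted on this node).

    Assumes the common torch.distributed convention:
    global_rank = node_rank * gpus_per_node + local_rank.
    """
    if total_gpus % gpus_per_node != 0:
        raise ValueError("total GPUs must be a multiple of GPUS_PER_NODE")
    num_nodes = total_gpus // gpus_per_node
    if not 0 <= node_rank < num_nodes:
        raise ValueError(f"NODE_RANK must be in [0, {num_nodes - 1}]")
    ranks = [node_rank * gpus_per_node + local for local in range(gpus_per_node)]
    return num_nodes, ranks


if __name__ == "__main__":
    # The two Swin commands above: 16 GPUs in total, 8 per node.
    for rank in (0, 1):
        nodes, ranks = launch_plan(16, 8, rank)
        print(f"NODE_RANK={rank}: {nodes} nodes, global ranks {ranks[0]}-{ranks[-1]}")
```

This is also why both nodes must pass the same total (16) and the same `MASTER_ADDR`, differing only in `NODE_RANK`.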

To evaluate on YouTube-VIS 2019, run:

```
python3 inference.py --masks --backbone [backbone] --model_path /path/to/model_weights --save_path results.json
```

To get quantitative results, zip the JSON file and upload it to the CodaLab evaluation server.
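The packaging step can be scripted. This sketch assumes, following the public YouTube-VIS results format, that the archive should contain a single `results.json` at its root and that each prediction carries `video_id`, `score`, `category_id`, and `segmentations` keys; check both against the CodaLab instructions. `package_results` is a hypothetical helper, not part of the repo.

```python
import json
import sys
import zipfile
from pathlib import Path

# Assumed per-prediction keys of the YouTube-VIS results format.
REQUIRED_KEYS = {"video_id", "score", "category_id", "segmentations"}


def package_results(json_path, zip_path=None) -> Path:
    # Lightly validate the predictions, then zip them as results.json.
    json_path = Path(json_path)
    with json_path.open() as f:
        preds = json.load(f)
    if not isinstance(preds, list):
        raise ValueError("expected a JSON list of predictions")
    for i, pred in enumerate(preds):
        if not isinstance(pred, dict):
            raise ValueError(f"prediction {i} is not an object")
        missing = REQUIRED_KEYS.difference(pred)
        if missing:
            raise ValueError(f"prediction {i} is missing {sorted(missing)}")
    zip_path = Path(zip_path) if zip_path else json_path.with_suffix(".zip")
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        zf.write(json_path, arcname="results.json")  # file at the archive root
    return zip_path


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage: python package_results.py results.json
    print("wrote", package_results(sys.argv[1]))
```

Without the key check, `zip results.zip results.json` on the command line produces the same archive.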

Results on the YouTube-VIS 2019 val set:

| Model | AP | AP50 | AP75 | AR1 | AR10 | Weights | Results |
|---|---|---|---|---|---|---|---|
| MS-STS_r50 | 50.1 | 73.2 | 56.6 | 46.1 | 57.7 | r50 | r50_results |
| MS-STS_r101 | 51.1 | 73.2 | 59.0 | 48.3 | 58.7 | r101 | r101_results |
| MS-STS_swin | 61.0 | 85.2 | 68.6 | 54.7 | 66.4 | swin | swin_results |

```
@article{mssts2022,
  title={Video Instance Segmentation via Multi-scale Spatio-temporal Split Attention Transformer},
  author={Omkar Thawakar and Sanath Narayan and Jiale Cao and Hisham Cholakkal and Rao Muhammad Anwer and Muhammad Haris Khan and Salman Khan and Michael Felsberg and Fahad Shahbaz Khan},
  journal={Proc. European Conference on Computer Vision (ECCV)},
  year={2022},
}
```

Please contact me at omkar.thawakar@mbzuai.ac.ae for any queries.

This repo is based on SeqFormer, Deformable DETR, and VisTR. Thanks for their wonderful work.