A Python package for Input-Output Hidden Markov Models (IOHMM).

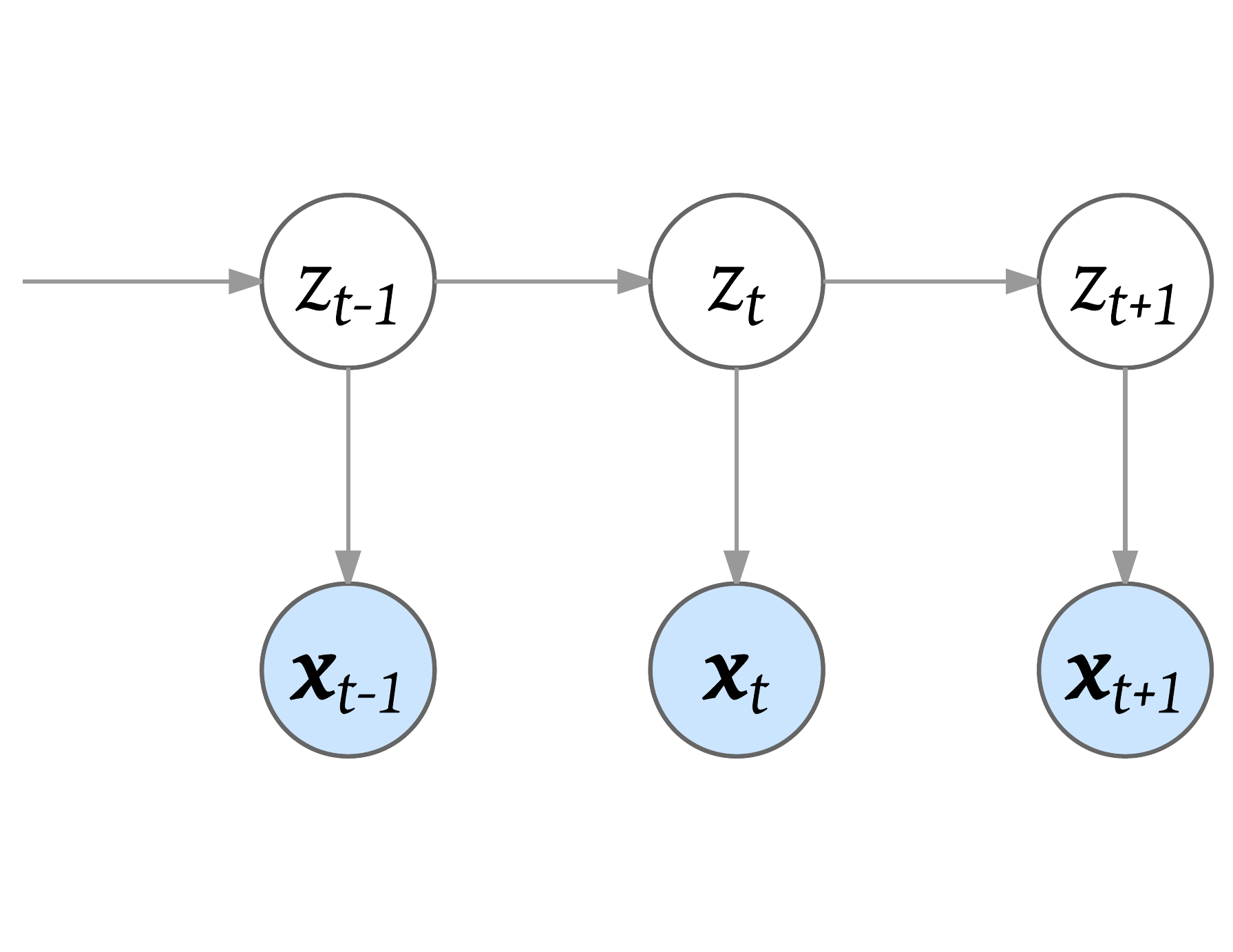

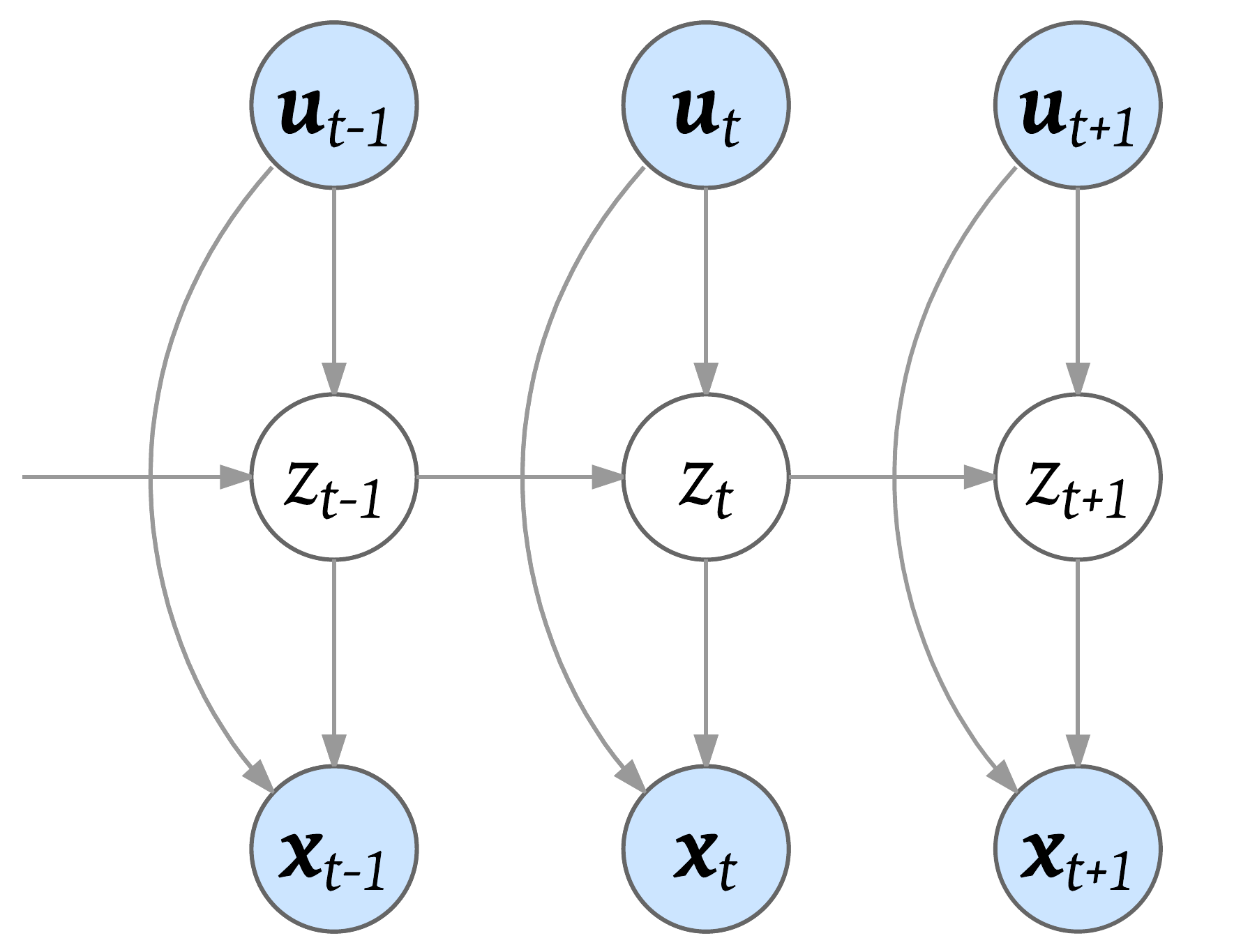

IOHMM extends the standard HMM by allowing the (a) initial, (b) transition and (c) emission probabilities to depend on covariates. Graphical representations of the standard HMM and the IOHMM:

| Standard HMM | IOHMM |
|---|---|
| | |

The solid nodes represent observed information, while the transparent (white) nodes represent latent random variables. The top layer contains the observed input variables u_t; the middle layer contains the latent categorical variable z_t; and the bottom layer contains the observed output variables x_t. The inputs for the (a) initial, (b) transition and (c) emission probabilities do not have to be the same.
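
To make "transition probabilities that depend on covariates" concrete, here is a minimal sketch that assumes a multinomial logistic (softmax) parameterization, one common choice for this kind of model. The function names and parameter values (`transition_row`, `weights`, `bias`) are illustrative assumptions, not part of the IOHMM API.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def transition_row(u_t, weights, bias):
    """P(z_t = j | z_{t-1} = i, u_t) for one fixed previous state i.

    weights[j] is the coefficient vector for next state j and bias[j]
    is its intercept (both hypothetical, chosen for illustration).
    """
    scores = [b + sum(w_k * u_k for w_k, u_k in zip(w, u_t))
              for w, b in zip(weights, bias)]
    return softmax(scores)

# Hypothetical parameters: 2 hidden states, 1 input covariate.
weights = [[0.0], [1.5]]
bias = [0.0, -1.0]

row_low = transition_row([0.0], weights, bias)   # covariate u_t = 0
row_high = transition_row([2.0], weights, bias)  # covariate u_t = 2
```

In a standard HMM this row would be the same at every time step; here it shifts toward state 1 as the covariate grows.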

For more theoretical details:

Applications of IOHMM:

`pip install IOHMM`

The example directory contains a set of Jupyter notebooks with examples and demonstrations of:

- 3-in-1 IOHMM. The IOHMM package supports:
  - UnSupervised IOHMM, for when you have no ground-truth hidden states at any timestamp. The Expectation-Maximization (EM) algorithm is used to estimate the parameters (maximization step) and the posteriors (expectation step).
  - SemiSupervised IOHMM, for when you have some ground-truth hidden states and want to enforce these labels during learning and use them to help direct the learning process.
  - Supervised IOHMM, for when you want to learn purely from labeled ground-truth hidden states. There is no expectation step and only a single maximization step, since all the posteriors come from the labeled ground truth.
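
The supervised case can be illustrated with a small sketch: when every hidden state is labeled, the posteriors are one-hot, so a single maximization step reduces to counting and averaging. This is not the package's code. It assumes a plain HMM without input covariates and scalar outputs summarized by a per-state mean, and `supervised_m_step` is a hypothetical helper.

```python
from collections import Counter

def supervised_m_step(states, outputs, num_states):
    """One maximization step from fully labeled hidden states (no E-step).

    Returns (transition, means): transition[i][j] estimates
    P(z_t = j | z_{t-1} = i) from label-pair counts, and means[i]
    is the average output observed while in state i.
    """
    pair_counts = Counter(zip(states[:-1], states[1:]))
    transition = []
    for i in range(num_states):
        row_total = sum(pair_counts[(i, j)] for j in range(num_states))
        if row_total == 0:
            # State i never has a successor in the labels: fall back to uniform.
            transition.append([1.0 / num_states] * num_states)
        else:
            transition.append([pair_counts[(i, j)] / row_total
                               for j in range(num_states)])

    means = []
    for i in range(num_states):
        in_state = [x for z, x in zip(states, outputs) if z == i]
        means.append(sum(in_state) / len(in_state) if in_state else float("nan"))
    return transition, means

# Made-up labeled sequence for illustration.
labels = [0, 0, 1, 1, 0]
observed = [1.0, 1.2, 5.0, 5.2, 0.8]
transition, means = supervised_m_step(labels, observed, num_states=2)
```

In the unsupervised case the same maximization would be repeated inside an EM loop, with soft posteriors from the expectation step in place of the one-hot labels.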

- Crystal clear structure. Know each step you take:
  - All sequences are represented as pandas dataframes, which offer a convenient interface for loading CSV, JSON and other files, or for pulling data from SQL databases, and are easy to visualize.
  - Input and output covariates are specified by their column names (strings) in the dataframes.
  - You can pass a list of sequences (dataframes) as data; there is no need to tag the start of each sequence in a single stacked sequence.
  - You can specify a different set of inputs for the (a) initial, (b) transition and (c) each of the emission models.
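
As a rough illustration of this data layout, the sketch below builds two sequences as separate dataframes and refers to covariates by column name. The column names, the variable names and the `check_columns` helper are all made up for this example; it only shows the shape of the data and does not call the IOHMM API.

```python
import pandas as pd

# Two hypothetical sequences, one dataframe each.
seq_a = pd.DataFrame({
    "temperature": [20.1, 21.3, 19.8],
    "weekday": [1, 2, 3],
    "speed": [55.0, 52.5, 58.1],
})
seq_b = pd.DataFrame({
    "temperature": [18.4, 17.9],
    "weekday": [6, 7],
    "speed": [61.2, 60.3],
})

# A list of dataframes: no start-of-sequence tags are needed.
data = [seq_a, seq_b]

# Covariates are named by column; each part of the model may use its own set.
covariates_initial = []                   # no inputs for the initial model
covariates_transition = ["weekday"]       # inputs for the transition model
covariates_emissions = [["temperature"]]  # one list per emission model
outputs = [["speed"]]                     # one list per emission model

def check_columns(frames, names):
    """Return True if every named column exists in every dataframe."""
    return all(name in df.columns for df in frames for name in names)

all_named = covariates_transition + covariates_emissions[0] + outputs[0]
columns_ok = check_columns(data, all_named)
```

Validating the column names up front like this catches typos before any model fitting starts.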

- Forward-backward algorithm. Faster and more robust:
  - Fully vectorized. There is only one `for` loop (required by dynamic programming) in the forward/backward pass, whereas most current implementations have more than one.
  - All calculations are done in log space, which is more robust for long sequences whose probabilities easily vanish to 0.
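
The benefit of working in log space can be shown with a minimal forward-pass sketch. It is written in plain Python for readability rather than with vectorized arrays, assumes time-invariant transitions (in an IOHMM they would vary with the inputs), and uses made-up numbers, so it illustrates the idea and is not the package's implementation.

```python
import math

def logsumexp(values):
    """log(sum(exp(v))) computed without overflow or underflow."""
    m = max(values)
    if m == float("-inf"):
        return m
    return m + math.log(sum(math.exp(v - m) for v in values))

def forward_log(log_init, log_trans, log_emis):
    """Forward pass in log space; returns log P(x_1, ..., x_T).

    log_init[i]     = log P(z_1 = i)
    log_trans[i][j] = log P(z_t = j | z_{t-1} = i)
    log_emis[t][i]  = log P(x_t | z_t = i)
    """
    n = len(log_init)
    alpha = [log_init[i] + log_emis[0][i] for i in range(n)]
    for t in range(1, len(log_emis)):  # loop over time steps (dynamic programming)
        alpha = [
            logsumexp([alpha[i] + log_trans[i][j] for i in range(n)]) + log_emis[t][j]
            for j in range(n)
        ]
    return logsumexp(alpha)

# Made-up example: 2 states, 2000 steps, every emission probability is 1e-3.
T = 2000
log_init = [math.log(0.5), math.log(0.5)]
log_trans = [[math.log(0.9), math.log(0.1)],
             [math.log(0.1), math.log(0.9)]]
log_emis = [[math.log(1e-3), math.log(1e-3)] for _ in range(T)]

loglik = forward_log(log_init, log_trans, log_emis)

# The same likelihood as a plain product of probabilities underflows to 0.0.
naive = 1.0
for _ in range(T):
    naive *= 1e-3
```

Here the plain product collapses to zero, while the log-likelihood stays finite and equals `T * log(1e-3)` up to rounding error. A vectorized implementation would replace the inner list comprehensions with array operations and keep only the loop over time.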

- JSON serialization. Models on the go:
  - Save (`to_json`) and load (`from_json`) a trained model in JSON format. All the attributes are easy to inspect in the JSON dictionary/file. See the Jupyter notebooks of examples for more details.
  - Use a JSON configuration file to specify the structure of an IOHMM model (`from_config`). This is useful when you have an application that uses IOHMM models and want to specify the model beforehand.

- Statsmodels and scikit-learn as the backbone. Take the best of both and better:
  - Unified interface/wrapper for statsmodels and scikit-learn linear models and generalized linear models.
  - Supports fitting the models with sample frequency weights.
  - Supports regularization in these models.
  - Supports estimation of the standard errors of the coefficients in certain models.
  - JSON serialization to save (`to_json`) and load (`from_json`) trained linear models.

- The structure of this implementation is inspired by depmixS4 (depmixS4: An R Package for Hidden Markov Models).

- This IOHMM package uses/wraps statsmodels and scikit-learn APIs for linear supervised models.

Modified BSD (3-clause)