Identifying Ball Trajectories in Video

Overview

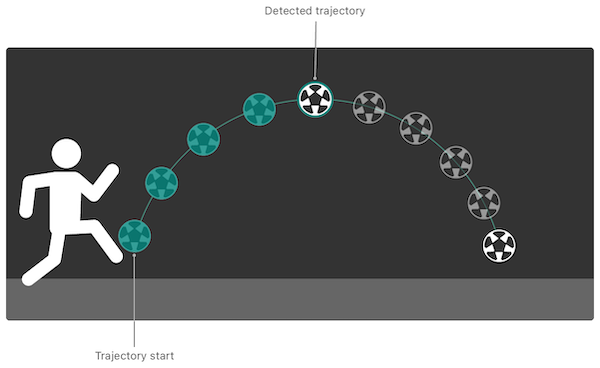

This app uses the Vision framework to detect the trajectory of a ball in real time from camera video. It assumes footage of ball sports shot from a fixed position, so the background does not move. The GIF above shows the app detecting the trajectory of a golf ball. Starting in iOS 14, tvOS 14, and macOS 11, Vision can detect the trajectories of objects in a video sequence. See Apple's documentation, Identifying Trajectories in Video, for more details.

Motivation

Trajectory detection became available in iOS 14 as one of the features of the Vision framework, so I implemented it by following Apple's documentation. The results were impressive. Because there was little information about this feature online other than the documentation, I have shared the source code here, along with an article in Japanese on Qiita. I have only just started using the Vision framework, so I would appreciate any advice.

Flow of the trajectory detection

Trajectory detection uses three components: a request, a request handler, and an observation.

- Request: Configure a VNDetectTrajectoriesRequest and the parameters you want Vision to use.

- Request handler: Pass it the captured video and the configured request; it performs the detection.

- Observation: Read the results from VNTrajectoryObservation.

Properties of the Observation Result

The following properties can be obtained from the observation.

detectedPoints: [VNPoint] (red line in the GIF): Array of x, y coordinates in the image frame

- The x, y coordinates of the trajectory, normalized to [0, 1.0], for the number of frames set as trajectoryLength in the request.

- Output example: [[0.027778; 0.801562], [0.073618; 0.749221], [0.101400; 0.752338], [0.113900; 0.766406], [0.119444; 0.767187], [0.122233; 0.774219], [0.130556; 0.789063], [0.136111; 0.789063], [0.144444; 0.801562], [0.159733; 0.837897]]

projectedPoints: [VNPoint] (green line in the GIF): Array of x, y coordinates in the image frame

- The x, y coordinates, normalized to [0, 1.0], of the five most recent points of the trajectory projected onto the fitted quadratic equation. (In the examples, their x values match the last five detectedPoints.)

- Output example: [[0.122233; 0.771996], [0.130556; 0.782801], [0.136111; 0.791214], [0.144444; 0.805637], [0.159733; 0.837722]]

equationCoefficients: simd_float3: Coefficients of the quadratic equation

- The coefficients a, b, and c of f(x) = ax² + bx + c, fitted to projectedPoints.

- Output example: SIMD3(15.573865, -2.6386108, 0.86183345)

uuid: UUID: Unique identifier of each trajectory

- If a trajectory detected in the next frame continues one from the previous frame, it keeps the same ID.

- Output example: E5256430-439E-4B0F-91B0-9DA6D65C22EE

timeRange: CMTimeRange: The time range from when the trajectory was first detected (trajectory start) through the most recent frame in which it is still detected

- Output example (start, duration): {{157245729991291/1000000000 = 157245.730}, {699938542/1000000000 = 0.700}}

confidence: VNConfidence: Confidence level of the detected trajectory, normalized to [0, 1.0]

- Output example: 0.989471

Cited from Identifying Trajectories in Video | Apple Developer Documentation.
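The sample values above can be checked against each other. The plain-Swift sketch below (no Vision needed) plugs the x values of the sample projectedPoints into f(x) = ax² + bx + c using the sample equationCoefficients. Worked by hand, the results agree with the reported y values to within about 0.0001, which suggests the equation gives y as a function of x in Vision's normalized, lower-left-origin coordinates. The helper names quadratic and maxResidual are hypothetical.

```swift
import Foundation

// Sample values copied from the output examples above
// (Vision's normalized coordinates, origin at the lower left).
let coefficients = (a: 15.573865, b: -2.6386108, c: 0.86183345)
let projectedPoints: [(x: Double, y: Double)] = [
    (0.122233, 0.771996), (0.130556, 0.782801), (0.136111, 0.791214),
    (0.144444, 0.805637), (0.159733, 0.837722),
]

/// Evaluates the quadratic f(x) = ax^2 + bx + c.
func quadratic(_ x: Double, a: Double, b: Double, c: Double) -> Double {
    return a * x * x + b * x + c
}

/// Largest absolute gap between f(x) and the reported y of each point.
func maxResidual(points: [(x: Double, y: Double)], a: Double, b: Double, c: Double) -> Double {
    return points.map { abs(quadratic($0.x, a: a, b: b, c: c) - $0.y) }.max() ?? 0
}

let residual = maxResidual(points: projectedPoints, a: coefficients.a,
                           b: coefficients.b, c: coefficients.c)
print("max residual:", residual)
```

The same check can be run on live observations by converting equationCoefficients and projectedPoints to Double before comparing.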

Implementation

Development Environment

- Xcode 12.2

- Swift 5

Framework

- UIKit

- Vision

- AVFoundation

Implementing Trajectory Detection

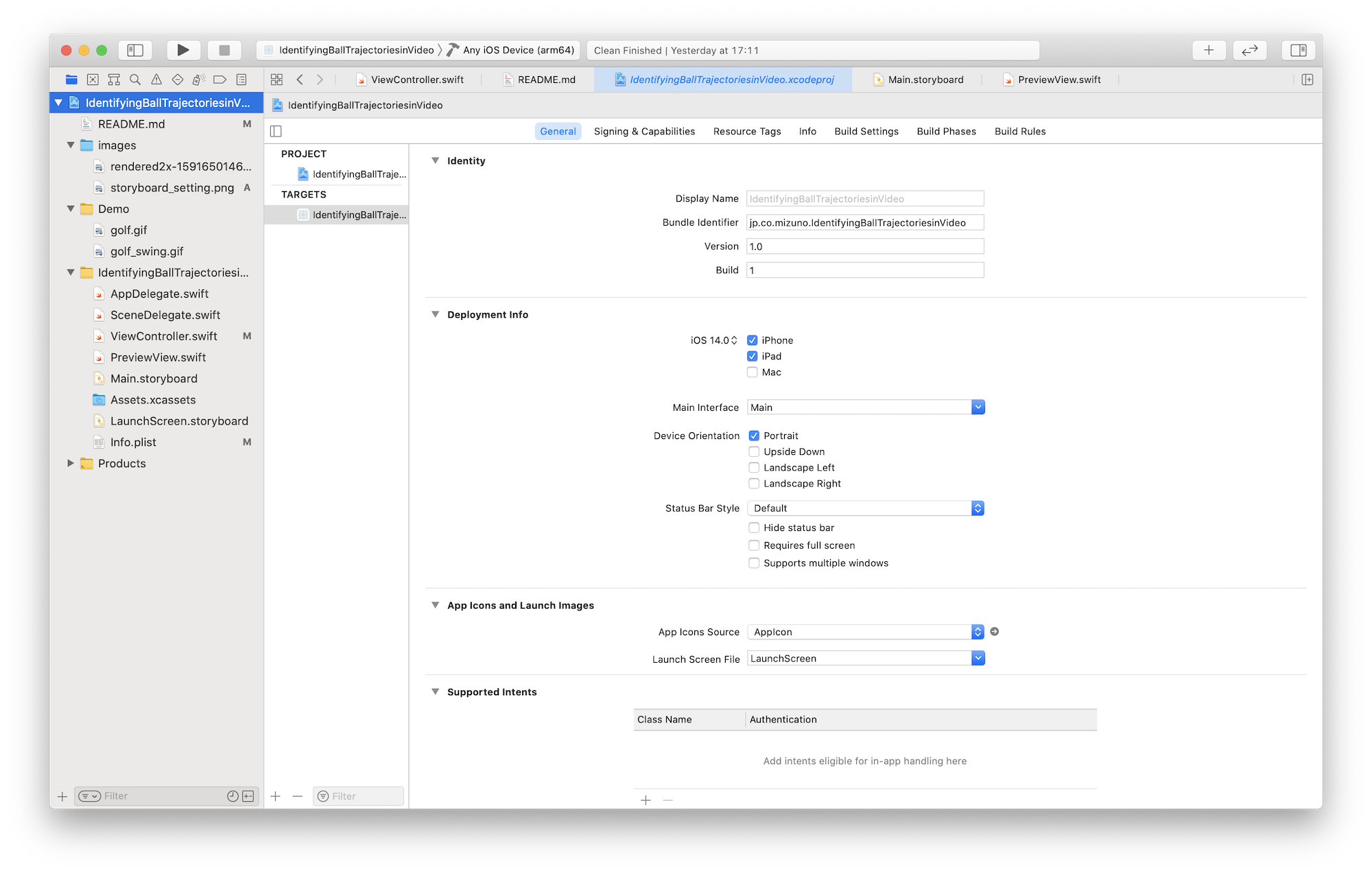

Configure a Device Orientation

In my implementation, setting the device orientation to Landscape rotates the video preview by 90 degrees, so the app is locked to Portrait.

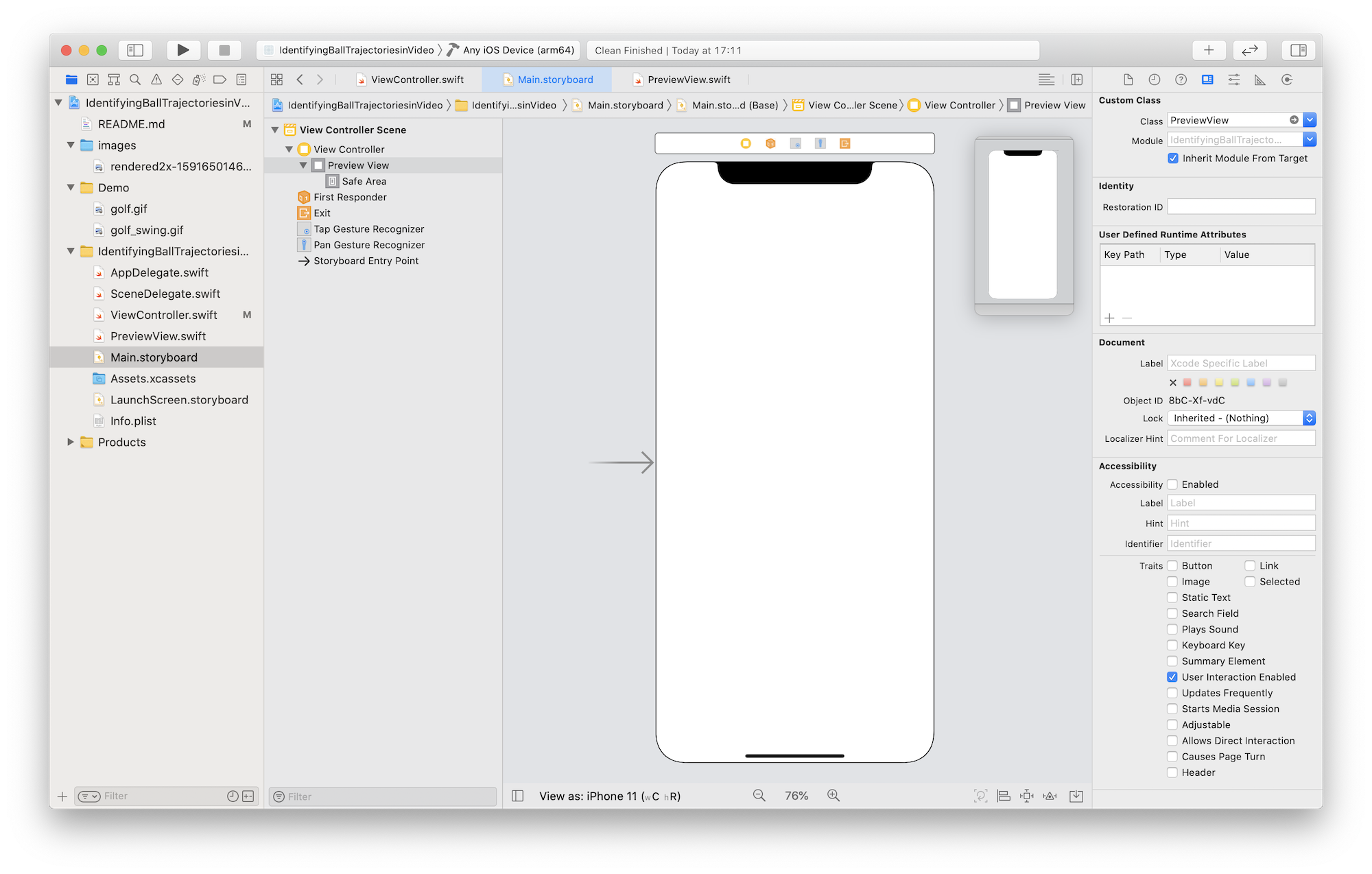

Display a Camera Preview

Use AVCaptureVideoPreviewLayer, a CALayer subclass that displays the video captured by an input device. The layer is connected to the capture session through its session property.

```swift
import UIKit
import AVFoundation

class PreviewView: UIView {
    // Back this view with an AVCaptureVideoPreviewLayer instead of a plain CALayer.
    override class var layerClass: AnyClass {
        return AVCaptureVideoPreviewLayer.self
    }

    var videoPreviewLayer: AVCaptureVideoPreviewLayer {
        return layer as! AVCaptureVideoPreviewLayer
    }
}
```

Connect a Storyboard

Associate PreviewView.swift above with the storyboard by setting the preview view's custom class to PreviewView.

Framework

```swift
import UIKit
import AVFoundation
import Vision
```

Create a Request

Create a VNDetectTrajectoriesRequest to detect trajectories. Its initializer takes the following three arguments.

- frameAnalysisSpacing: CMTime: The time interval between analyzed frames. Increasing this value thins out the frames to be analyzed, which helps on lower-spec devices. Setting it to .zero analyzes every frame, at the cost of a heavier load.

- trajectoryLength: Int: The number of points that make up a trajectory. The minimum is 5. Setting a small value leads to many false detections. (I set 10 points, which worked well for catching a golf ball.)

- completionHandler: VNRequestCompletionHandler?: The closure called when detection completes. (I pass my own completionHandler method.)

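```swift
// `lazy` lets this property initializer refer to the completionHandler(request:error:)
// method defined under Process the Results; a plain stored property cannot use self.
lazy var request: VNDetectTrajectoriesRequest = VNDetectTrajectoriesRequest(
    frameAnalysisSpacing: .zero,
    trajectoryLength: 10,
    completionHandler: self.completionHandler)
```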
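Because frameAnalysisSpacing is a CMTime, thinning out frames means passing a non-zero duration instead of .zero. A minimal sketch, assuming you want to cap analysis at a fixed number of frames per second; the helper makeTrajectoryRequest and its analysisFPS parameter are hypothetical, not part of Vision. It needs the Vision framework, so it only runs on Apple platforms (iOS 14 or macOS 11 and later).

```swift
import CoreMedia
import Vision

/// Hypothetical helper: builds a trajectory request that analyzes roughly
/// `analysisFPS` frames per second, or every frame when `analysisFPS` is nil.
@available(iOS 14.0, macOS 11.0, *)
func makeTrajectoryRequest(analysisFPS: Int32?,
                           trajectoryLength: Int = 10,
                           completion: VNRequestCompletionHandler? = nil) -> VNDetectTrajectoriesRequest {
    let spacing: CMTime
    if let fps = analysisFPS, fps > 0 {
        // Analyze frames at least 1/fps seconds apart; frames in between are skipped.
        spacing = CMTime(value: 1, timescale: fps)
    } else {
        // .zero analyzes every frame (heaviest load).
        spacing = .zero
    }
    return VNDetectTrajectoriesRequest(frameAnalysisSpacing: spacing,
                                       trajectoryLength: trajectoryLength,
                                       completionHandler: completion)
}
```

For example, with a 60 fps capture, makeTrajectoryRequest(analysisFPS: 30) would ask Vision to look at about every other frame.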

Applying Filtering Criteria

Set the following three request properties as needed.

objectMaximumNormalizedRadius: Float (in my implementation, this setting did not take effect for unknown reasons)

- Set a size slightly larger than the object (ball) you want to detect.

- Setting it filters out large moving objects.

- The range is [0.0, 1.0], normalized to the frame size. The default is 1.0.

- The similar property maximumObjectSize has been deprecated.

objectMinimumNormalizedRadius: Float

- Set a size slightly smaller than the object (ball) you want to detect.

- Setting it filters out small moving objects and noise.

- The range is [0.0, 1.0], normalized to the frame size. The default is 0.0.

- The similar property minimumObjectSize has been deprecated.

regionOfInterest: CGRect (the blue rectangle in the GIF)

- Set the area in which trajectories are detected.

- The range is [0.0, 1.0], normalized to the frame size, with the origin at the lower left as in other Vision coordinates. Specify the origin, width, and height as a CGRect. The default is CGRect(x: 0, y: 0, width: 1.0, height: 1.0).

```swift
request.objectMaximumNormalizedRadius = 0.5
request.objectMinimumNormalizedRadius = 0.1
request.regionOfInterest = CGRect(x: 0, y: 0, width: 0.5, height: 1.0)
```
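The regionOfInterest is normalized and measured from the lower-left corner, whereas a rectangle dragged on screen is in UIKit points measured from the upper left. A minimal sketch of that conversion, assuming you already know the on-screen rectangle of the video (for example from layerRectConverted(fromMetadataOutputRect:), as in the drawing code later); the function normalizedRegionOfInterest is hypothetical.

```swift
import Foundation

/// Hypothetical helper: converts a rectangle dragged in UIKit view coordinates
/// (origin at the upper left) into a normalized Vision regionOfInterest
/// (origin at the lower left, range [0, 1]).
func normalizedRegionOfInterest(for dragRect: CGRect, in videoRect: CGRect) -> CGRect {
    // Clip the drag to the visible video so the result stays inside [0, 1].
    let clipped = dragRect.intersection(videoRect)
    guard !clipped.isNull, videoRect.width > 0, videoRect.height > 0 else {
        // Nothing usable: fall back to the full frame (the Vision default).
        return CGRect(x: 0, y: 0, width: 1, height: 1)
    }
    let x = (clipped.minX - videoRect.minX) / videoRect.width
    let width = clipped.width / videoRect.width
    let height = clipped.height / videoRect.height
    // Flip vertically: UIKit measures from the top, Vision from the bottom.
    let y = 1 - (clipped.maxY - videoRect.minY) / videoRect.height
    return CGRect(x: x, y: y, width: width, height: height)
}
```

A drag covering the left half of the video yields CGRect(x: 0, y: 0, width: 0.5, height: 1.0), the same ROI as in the example above.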

Call a Handler

Set orientation to .right. Otherwise, the captured frames are passed to the request handler rotated by 90 degrees, and you would have to rotate the trajectories back by 90 degrees when drawing them from the observations.

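```swift
func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {
    // .right delivers portrait frames upright to Vision.
    let requestHandler = VNImageRequestHandler(cmSampleBuffer: sampleBuffer, orientation: .right, options: [:])
    do {
        // request is a stateful request (VNStatefulRequest): reuse the same
        // instance for every frame so it can build trajectories across frames.
        try requestHandler.perform([request])
    } catch {
        // Handle the error.
    }
}
```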

Process the Results

Vision places the origin at the lower left, while UIView places it at the upper left, so flip the y coordinate of each point of the acquired trajectories (y becomes 1 - y).

```swift
func completionHandler(request: VNRequest, error: Error?) {
    if let e = error {
        print(e)
        return
    }
    guard let observations = request.results as? [VNTrajectoryObservation] else { return }

    for observation in observations {
        // Convert from Vision's lower-left origin to UIKit's upper-left origin
        let detectedPoints: [CGPoint] = observation.detectedPoints.map { point in
            CGPoint(x: point.x, y: 1 - point.y)
        }
        let projectedPoints: [CGPoint] = observation.projectedPoints.map { point in
            CGPoint(x: point.x, y: 1 - point.y)
        }
        // The remaining properties are available for filtering or display
        let equationCoefficients: simd_float3 = observation.equationCoefficients
        let uuid: UUID = observation.uuid
        let timeRange: CMTimeRange = observation.timeRange
        let confidence: VNConfidence = observation.confidence
    }

    // Call a method to draw the trajectory. This handler runs on the thread that
    // called perform(_:), so dispatch UI drawing to the main queue.
}
```
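Because a growing trajectory keeps the same uuid from frame to frame, observations accumulated across frames can contain several entries for what is really one ball flight. A minimal sketch of one way to tidy them before drawing: drop trajectories below a confidence threshold and keep only the latest observation for each uuid. TrajectorySample is a hypothetical plain-Swift stand-in for the VNTrajectoryObservation fields (so the logic runs without Vision), and the 0.9 threshold is an arbitrary assumption.

```swift
import Foundation

/// Hypothetical stand-in for the VNTrajectoryObservation fields used here.
struct TrajectorySample {
    let uuid: UUID
    let confidence: Float   // VNConfidence is a Float
    let endTime: Double     // e.g. observation.timeRange.end.seconds
}

/// Drops trajectories below `minimumConfidence` and keeps only the most
/// recent sample for each UUID, ordered from oldest to newest.
func latestConfidentTrajectories(_ samples: [TrajectorySample],
                                 minimumConfidence: Float = 0.9) -> [TrajectorySample] {
    var latestByID: [UUID: TrajectorySample] = [:]
    for sample in samples where sample.confidence >= minimumConfidence {
        // Keep the sample that ends latest for this UUID.
        if let existing = latestByID[sample.uuid], existing.endTime >= sample.endTime {
            continue
        }
        latestByID[sample.uuid] = sample
    }
    return latestByID.values.sorted { $0.endTime < $1.endTime }
}
```

Inside completionHandler, each observation could be mapped to TrajectorySample(uuid: observation.uuid, confidence: observation.confidence, endTime: observation.timeRange.end.seconds).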

Match the Results to UIView

The flipped coordinates are still normalized, so scale them into the preview's rectangle in the UIView to draw the trajectories in the right place.

```swift
// panGestureRect (the ROI dragged on screen, if any) and previewView are
// properties of the view controller.
func convertPointToUIViewCoordinates(normalizedPoint: CGPoint) -> CGPoint {
    // Convert normalized coordinates to UIView coordinates
    let convertedX: CGFloat
    let convertedY: CGFloat
    if let rect = panGestureRect {
        // If an ROI is set, scale into the ROI rectangle
        convertedX = rect.minX + normalizedPoint.x * rect.width
        convertedY = rect.minY + normalizedPoint.y * rect.height
    } else {
        // Otherwise, scale into the on-screen rectangle of the whole video frame
        let videoRect = previewView.videoPreviewLayer.layerRectConverted(fromMetadataOutputRect: CGRect(x: 0.0, y: 0.0, width: 1.0, height: 1.0))
        convertedX = videoRect.origin.x + normalizedPoint.x * videoRect.width
        convertedY = videoRect.origin.y + normalizedPoint.y * videoRect.height
    }
    return CGPoint(x: convertedX, y: convertedY)
}
```
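The completion handler flips y, and convertPointToUIViewCoordinates scales the result into the video's on-screen rectangle. The sketch below does both in one place without AVFoundation, so the arithmetic can be tested in isolation. It assumes the preview is centered in the view and scaled like .resizeAspectFill (or .resizeAspect when aspectFill is false); on a device, layerRectConverted(fromMetadataOutputRect:) already returns this rectangle. The function names are hypothetical.

```swift
import Foundation

/// Hypothetical helper: on-screen rectangle of a video of `videoSize` shown in `bounds`.
/// aspectFill = true mimics .resizeAspectFill (crop); false mimics .resizeAspect (letterbox).
func displayedVideoRect(videoSize: CGSize, in bounds: CGRect, aspectFill: Bool) -> CGRect {
    let widthScale = bounds.width / videoSize.width
    let heightScale = bounds.height / videoSize.height
    let scale = aspectFill ? max(widthScale, heightScale) : min(widthScale, heightScale)
    let size = CGSize(width: videoSize.width * scale, height: videoSize.height * scale)
    // The video is centered in the view in both modes.
    return CGRect(x: bounds.midX - size.width / 2, y: bounds.midY - size.height / 2,
                  width: size.width, height: size.height)
}

/// Maps a Vision point (normalized, origin at the lower left) to UIKit
/// coordinates (points, origin at the upper left) inside `videoRect`.
func viewPoint(fromVisionPoint point: CGPoint, videoRect: CGRect) -> CGPoint {
    return CGPoint(x: videoRect.minX + point.x * videoRect.width,
                   y: videoRect.minY + (1 - point.y) * videoRect.height)
}
```

For a portrait 1080 × 1920 frame shown aspect-fill in a 390 × 844 view, the video is about 474.75 points wide and overhangs each side by about 42.4 points, so the frame's center (0.5, 0.5) lands at the view's center (195, 422).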

How to Use the App

- Fix a device (iPhone or iPad) with a tripod.

- Launch the app; the camera video is displayed.

- When trajectories are detected in the video, the curves are drawn.

- Specify a trajectory detection range by dragging with your finger.

- Tap to clear the detection range.

In Closing

The flow of the trajectory detection implementation is similar to other features of the Vision framework, so it is easy to understand. Configuring video capture and converting coordinates are harder, because they also require some understanding of AVFoundation and UIKit. The following references were very helpful. If you find any mistakes in this document, I would appreciate it if you could point them out.