- Competition Title: Steel Plate Defect Prediction

- Goal: Predict defects in steel plates using machine learning techniques

- Approach:

  - Used XGBoost, CatBoost, and LightGBM.

  - Ensembled the best-performing models.

  - Achieved an accuracy of 0.88905 on the test dataset.

  - Conducted comprehensive data cleaning to address inconsistencies and errors.

  - Implemented custom functions to clean the columns efficiently.

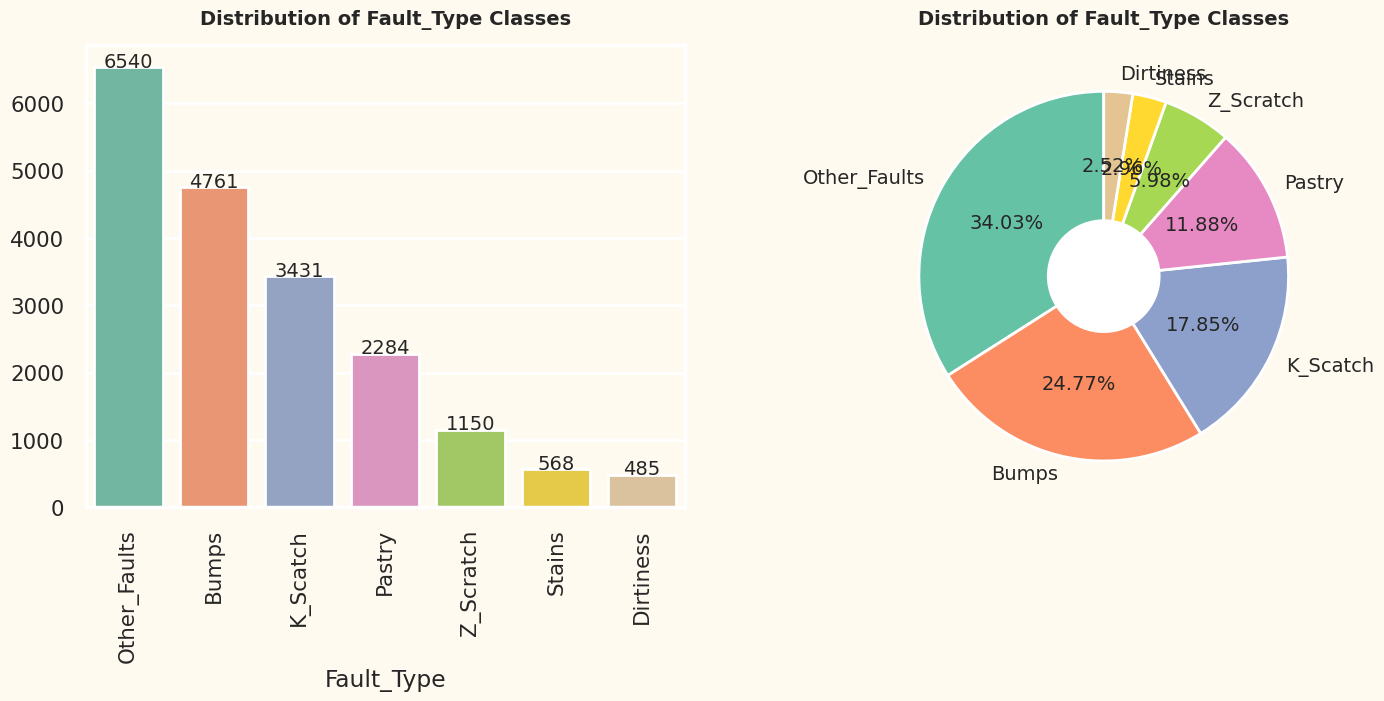

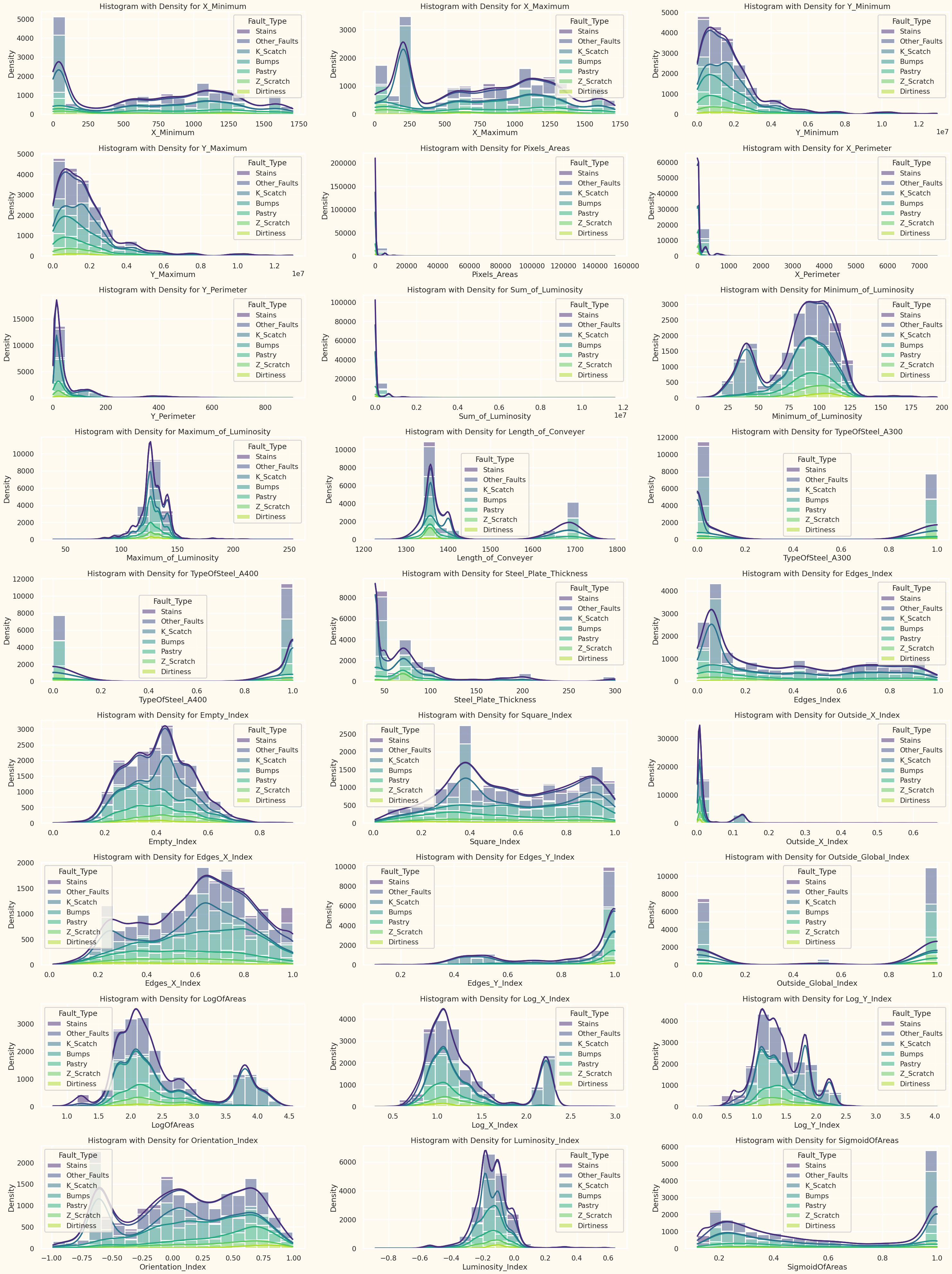

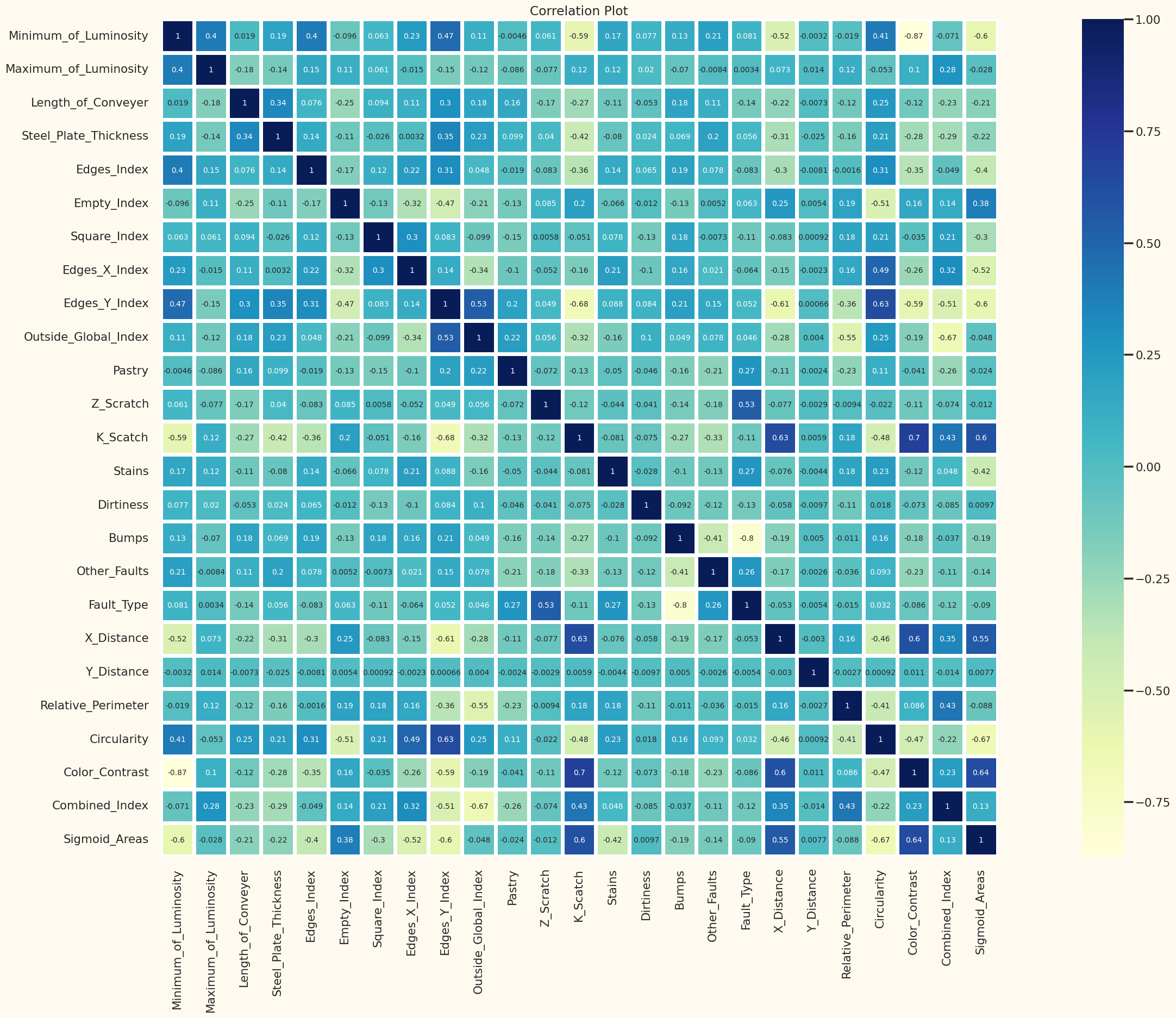

Insights were derived through various visualizations:

To tune the XGBoost model, I used a manual approach: starting from a set of default parameters, I adjusted one hyperparameter at a time and re-evaluated the model's performance after each change. The resulting values are stored in xgb_best_params_for_y7:

    xgb_best_params_for_y7 = {
        'max_depth': 4,
        'n_estimators': 663,
        'gamma': 0.6429564571848232,
        'reg_alpha': 0.3267006339507057,
        'reg_lambda': 0.04658361960102192,
        'min_child_weight': 6,
        'subsample': 0.9939674566310442,
        'colsample_bytree': 0.1435958193323451,
        'learning_rate': 0.24960789830790053
    }
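The one-at-a-time tuning loop described above can be sketched roughly as follows. This is a minimal illustration, not the code used in the competition: the helper name `tune_one_at_a_time`, the candidate values, the synthetic data, and the use of scikit-learn's `GradientBoostingClassifier` as a stand-in for the XGBoost model are all assumptions made so the example runs on its own.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import cross_val_score


def tune_one_at_a_time(make_model, base_params, candidates, X, y, cv=3, scoring="accuracy"):
    """Try candidate values for one hyperparameter at a time.

    A change is kept only if it improves the mean cross-validated score.
    (Hypothetical helper, written for illustration.)
    """
    best_params = dict(base_params)
    best_score = cross_val_score(make_model(**best_params), X, y, cv=cv, scoring=scoring).mean()
    for name, values in candidates.items():
        for value in values:
            trial = {**best_params, name: value}
            score = cross_val_score(make_model(**trial), X, y, cv=cv, scoring=scoring).mean()
            if score > best_score:
                best_params, best_score = trial, score
    return best_params, best_score


# Synthetic stand-in data; the real features and target are not shown here.
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Assumed starting point and candidate values, chosen only for the demo.
base = {"max_depth": 3, "n_estimators": 30, "learning_rate": 0.1, "random_state": 0}
grid = {"max_depth": [2, 4], "learning_rate": [0.05, 0.25]}

best_params, best_score = tune_one_at_a_time(GradientBoostingClassifier, base, grid, X, y)
print(best_params, round(best_score, 4))
```

Because each parameter is tuned while the others are held fixed, this kind of search can miss interactions between parameters; it trades thoroughness for a small number of model fits.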

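Description: The Voting Classifier is an ensemble learning method that combines the predictions of multiple base classifiers into a final prediction.

Components:

- Estimators: Base classifiers are provided as tuples containing a unique name and a model object. Calling fit on the ensemble trains a fresh clone of each one, so models trained beforehand are refit.

- Voting Method: Determines how final predictions are computed. 'hard' takes the majority vote over the predicted class labels; 'soft' averages the predicted class probabilities and picks the class with the highest mean.

- Flatten Transform (Optional): Affects only the shape of the array returned by transform under soft voting. With flatten_transform=True the result has shape (n_samples, n_classifiers * n_classes); otherwise it has shape (n_classifiers, n_samples, n_classes). It does not change the predictions.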

Considerations:

- Diversity of Base Models

- Computational Complexity

- Interpretability
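One rough way to gauge the diversity of base models is to compare their predicted probabilities on held-out data: highly correlated predictions suggest the ensemble has little to gain from combining them. The sketch below is an illustration only; the three scikit-learn classifiers and the synthetic data are stand-ins, not the XGBoost, CatBoost, and LightGBM models from this project.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data, split into training and validation parts.
X_demo, y_demo = make_classification(n_samples=400, n_features=8, random_state=0)
X_tr, X_val, y_tr, y_val = train_test_split(X_demo, y_demo, test_size=0.25, random_state=0)

# Stand-in base models (assumed for the demo).
models = {
    "lr": LogisticRegression(max_iter=1000),
    "nb": GaussianNB(),
    "tree": DecisionTreeClassifier(max_depth=3, random_state=0),
}

# One row per model: predicted probability of the positive class on the validation set.
probas = np.array([m.fit(X_tr, y_tr).predict_proba(X_val)[:, 1] for m in models.values()])

# Pairwise Pearson correlation between the models' predictions.
corr = np.corrcoef(probas)
print(list(models))
print(np.round(corr, 3))
```

Off-diagonal values close to 1 indicate that two models make nearly the same predictions.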

Example Usage:

    from sklearn.ensemble import VotingClassifier

    ensemble_model_for_y7 = VotingClassifier(
        estimators=[
            ('xgb', xgb_model_for_y7),
            ('catboost', cb_model_for_y7),
            ('LGBM', lgbm_model_for_y7)
        ],
        voting='soft',
        flatten_transform=True
    )

    ensemble_model_for_y7.fit(X_train, y_train)
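To make the effect of voting='soft' and flatten_transform concrete, here is a small self-contained sketch. It uses three scikit-learn classifiers on synthetic data as stand-ins for the project's models (an assumption made so the example runs without XGBoost, CatBoost, or LightGBM installed) and checks that soft voting matches averaging the base models' probabilities by hand.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.naive_bayes import GaussianNB
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data (stand-in for the real dataset).
X, y = make_classification(n_samples=200, n_features=8, random_state=0)

# Stand-in base models (assumed for the demo).
estimators = [
    ("lr", LogisticRegression(max_iter=1000)),
    ("nb", GaussianNB()),
    ("tree", DecisionTreeClassifier(max_depth=3, random_state=0)),
]

soft = VotingClassifier(estimators=estimators, voting="soft", flatten_transform=True)
soft.fit(X, y)

# Soft voting by hand: average the fitted clones' class probabilities, then take the argmax.
mean_proba = np.mean([est.predict_proba(X) for est in soft.estimators_], axis=0)
manual_pred = soft.classes_[np.argmax(mean_proba, axis=1)]
same = np.array_equal(manual_pred, soft.predict(X))

# flatten_transform only changes the shape returned by transform().
flat_shape = soft.transform(X).shape  # (n_samples, n_classifiers * n_classes)
stacked = VotingClassifier(estimators=estimators, voting="soft", flatten_transform=False).fit(X, y)
stacked_shape = stacked.transform(X).shape  # (n_classifiers, n_samples, n_classes)

print(same, flat_shape, stacked_shape)
```

Soft voting requires every base model to implement predict_proba, which is why it suits probabilistic classifiers such as gradient-boosted trees.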