This repository contains the code and data for our CVPR 2019 paper, *Action4D: Online Action Recognition in the Crowd and Clutter*.

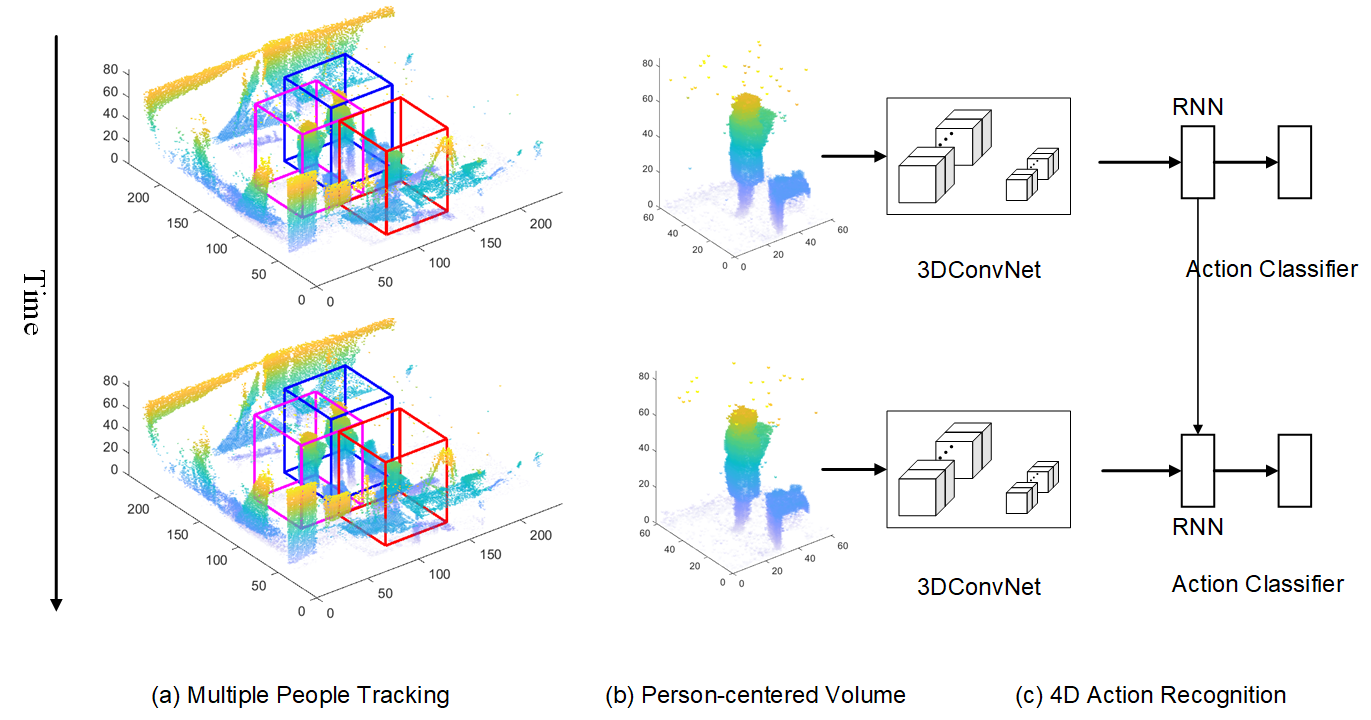

We propose to tackle action recognition using a holistic 4D “scan” of a cluttered scene that captures every detail of the people and the environment. This is a new problem: recognizing the actions of multiple people in a cluttered 4D representation. Our method is invariant to camera view angles, robust to clutter, and able to handle crowds.

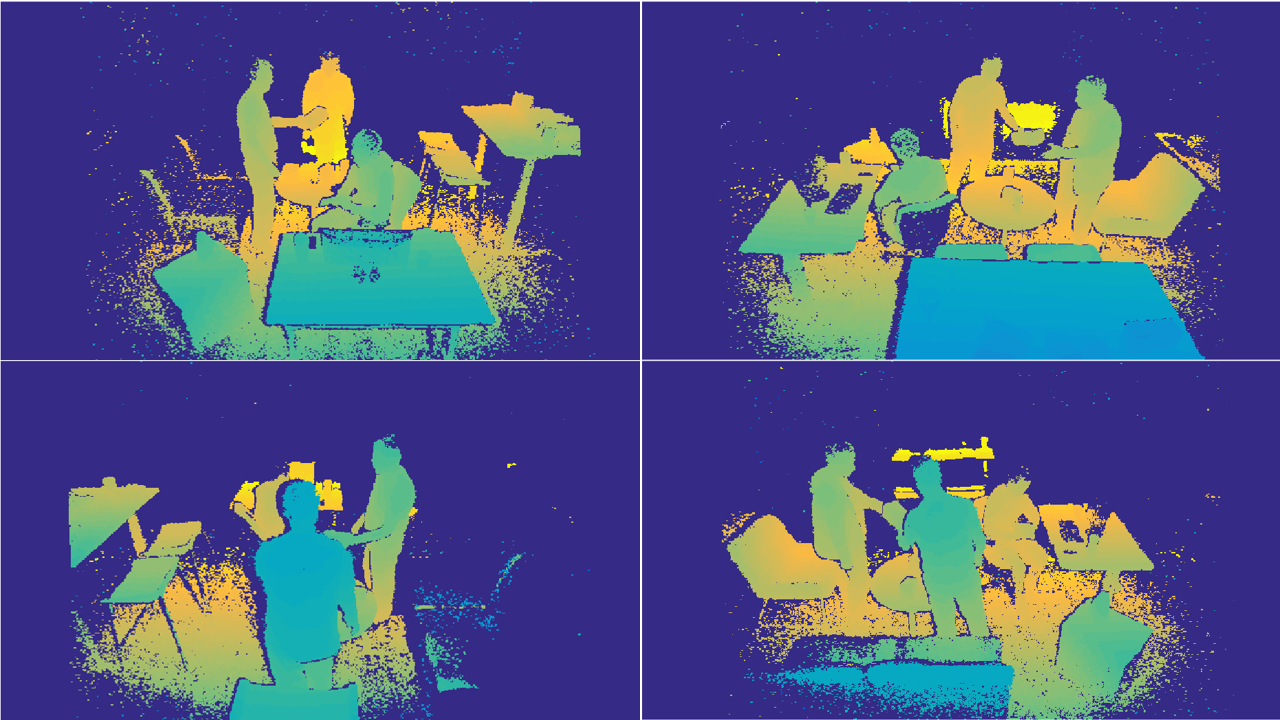

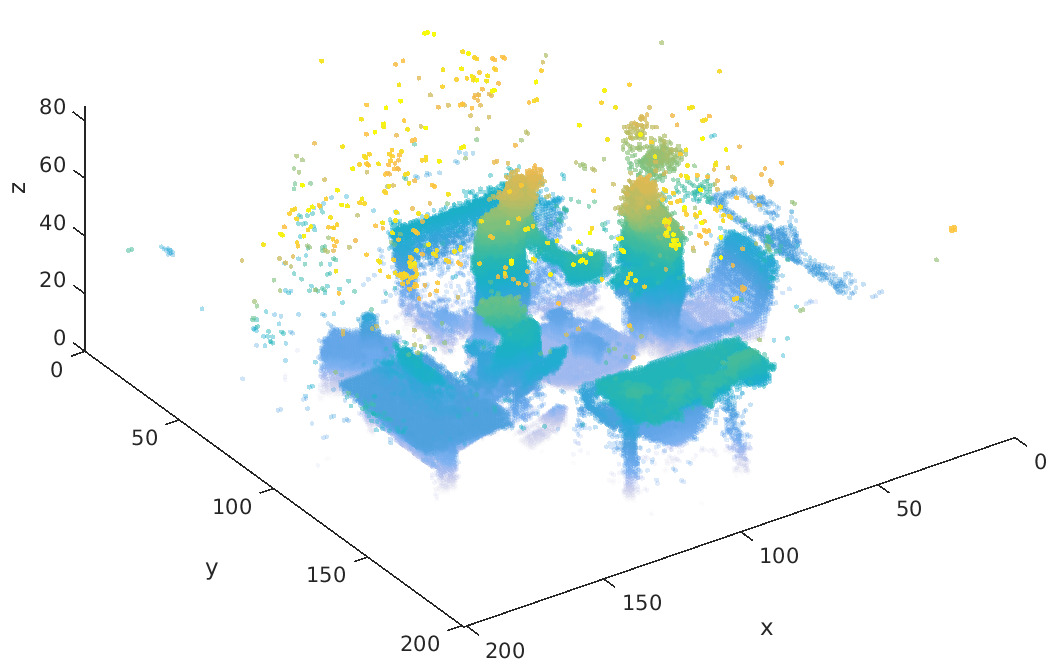

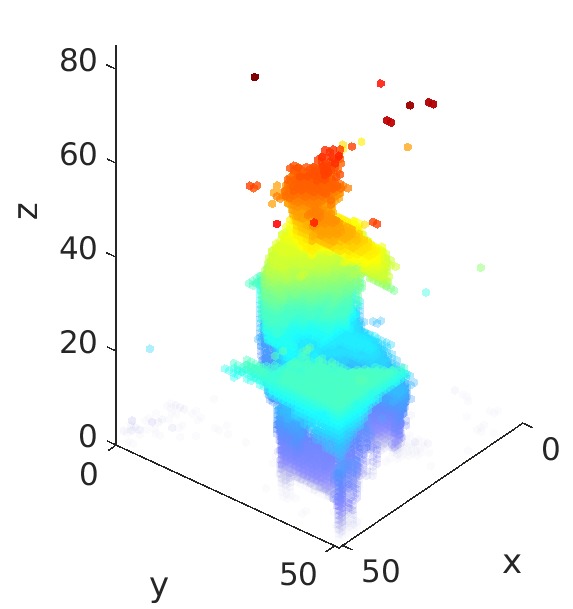

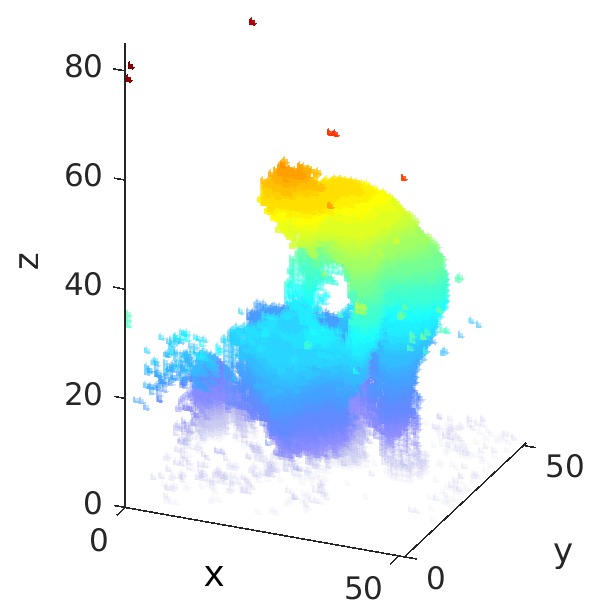

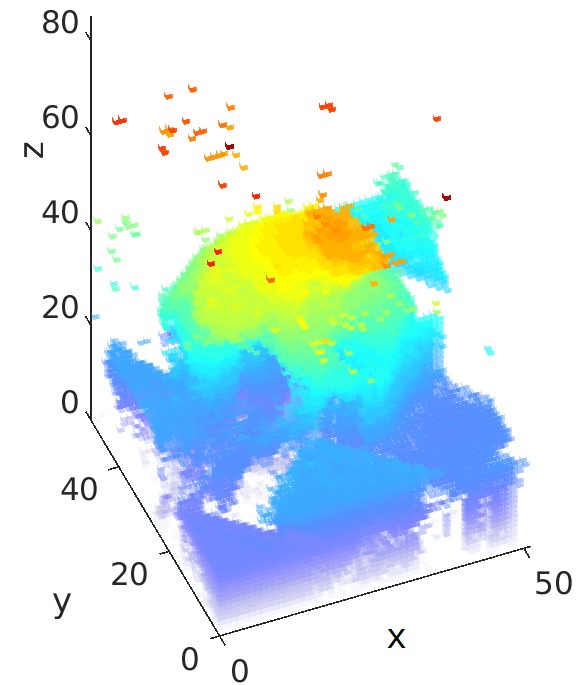

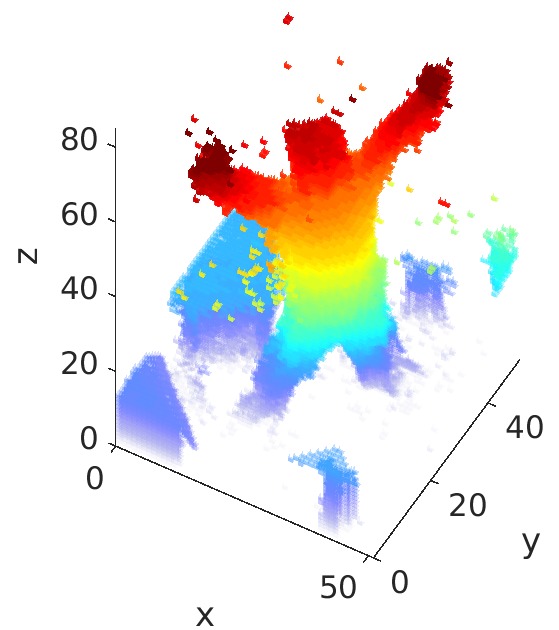

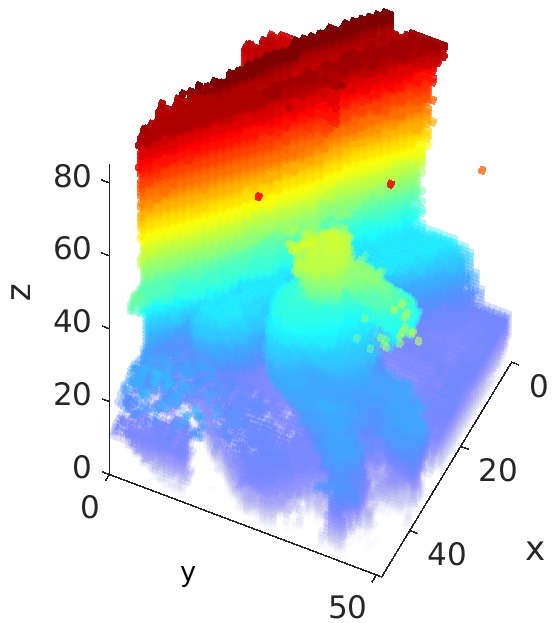

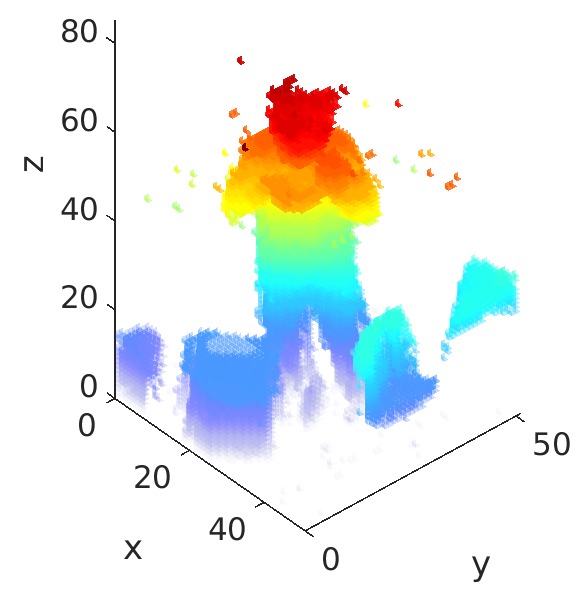

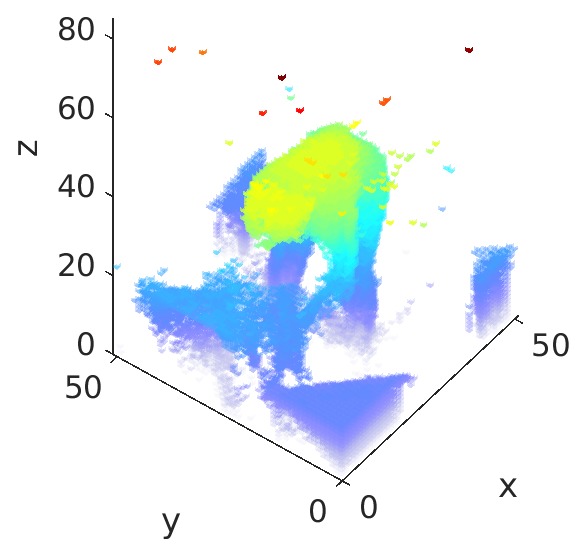

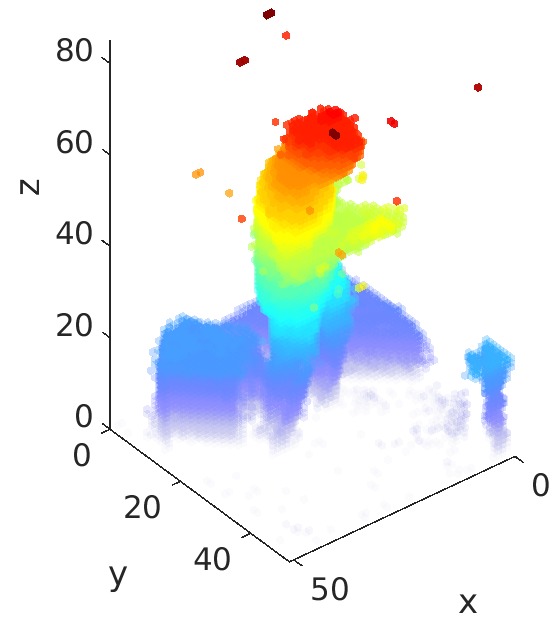

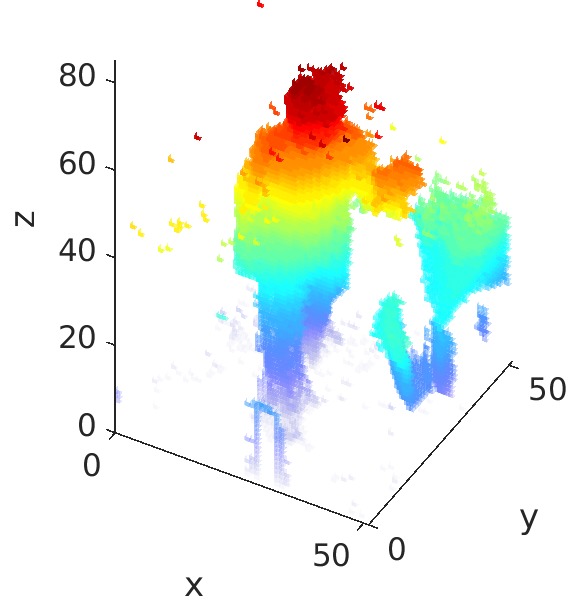

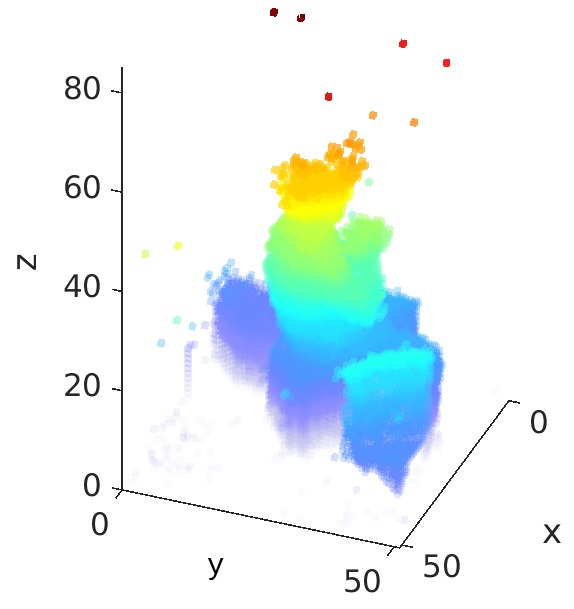

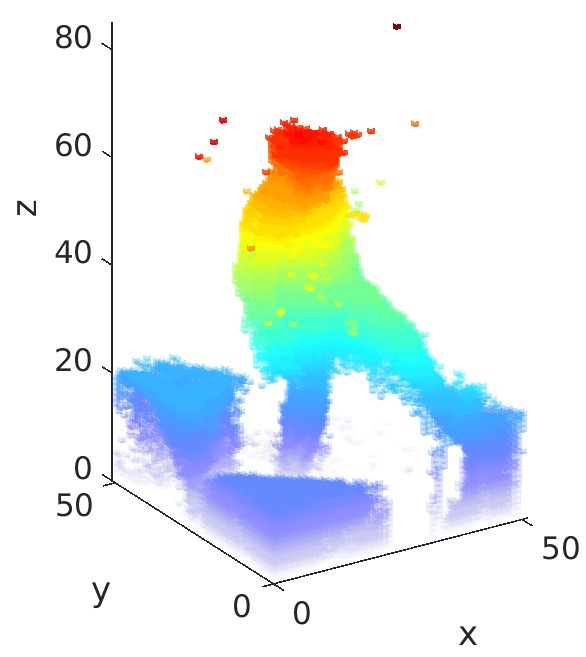

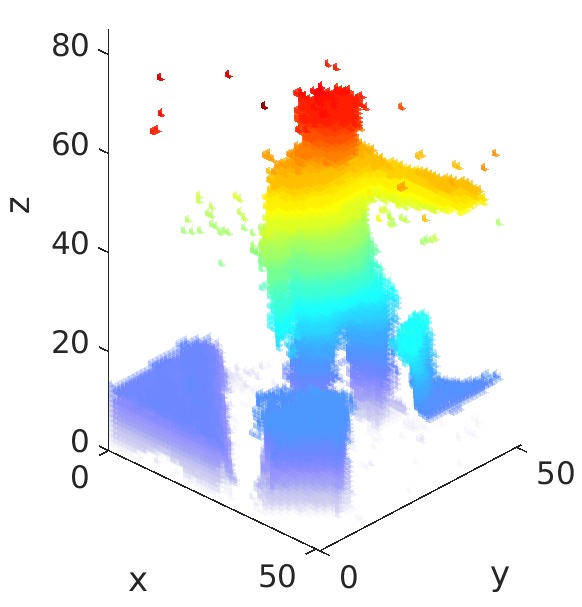

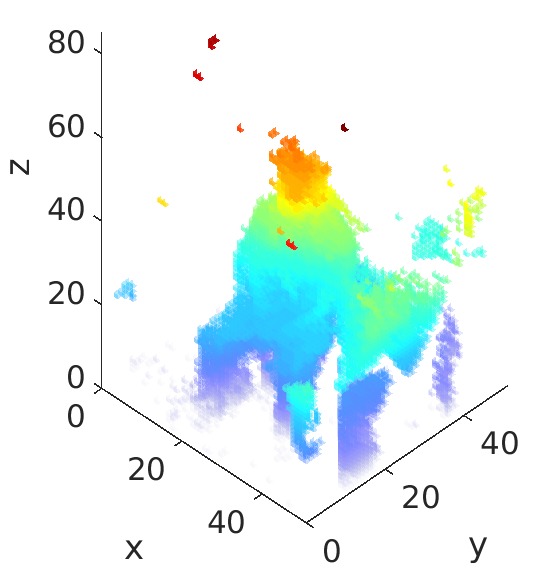

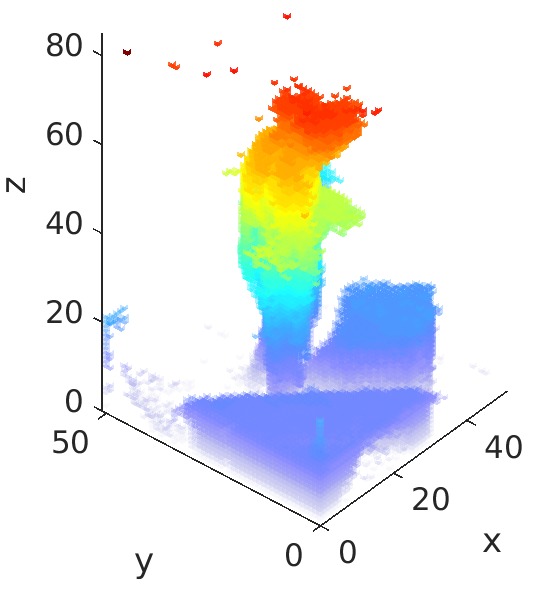

We capture the whole scene using multiple depth cameras. Then, we reconstruct a holistic scene by fusing multiple calibrated depth images. The following figure shows an example.
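The fusion step can be sketched as follows, assuming a pinhole intrinsic matrix `K` and a 4x4 camera-to-world extrinsic matrix for each camera, with depth in meters. The function names are hypothetical and this is not the repository's reconstruction code: each depth image is back-projected into camera coordinates, transformed into the shared world frame, and the per-camera point sets are concatenated.

```python
import numpy as np

def backproject_depth(depth, K):
    """Back-project an (H, W) depth image into camera-frame 3D points.

    Assumes a pinhole camera with 3x3 intrinsics K; pixels with depth <= 0
    are treated as missing and dropped.
    """
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]  # pixel row (v) and column (u) indices
    z = depth.astype(np.float64)
    valid = z > 0
    x = (u - K[0, 2]) * z / K[0, 0]
    y = (v - K[1, 2]) * z / K[1, 1]
    return np.stack([x[valid], y[valid], z[valid]], axis=1)  # (N, 3)

def fuse_depth_images(depths, intrinsics, extrinsics):
    """Fuse calibrated depth images into one world-frame point cloud.

    extrinsics[i] is assumed to be the 4x4 camera-to-world transform of camera i.
    """
    clouds = []
    for depth, K, T in zip(depths, intrinsics, extrinsics):
        pts = backproject_depth(depth, K)
        pts_h = np.hstack([pts, np.ones((len(pts), 1))])  # homogeneous coordinates
        clouds.append((pts_h @ T.T)[:, :3])  # apply T to every point
    return np.vstack(clouds) if clouds else np.zeros((0, 3))
```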

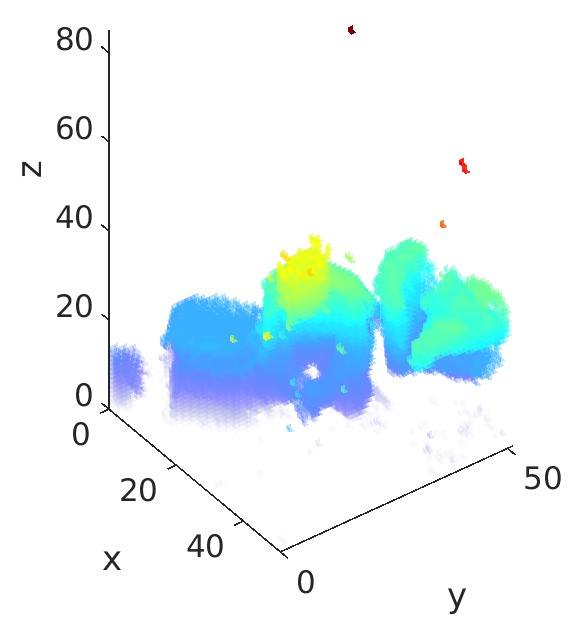

Our tracker works on the top-down view of the scene. The following figure shows some of our tracking results, with each tracked person shown in a different color.
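One way to obtain such a top-down view is to project the fused points onto the ground plane and keep the highest point in each cell. The sketch below assumes a world frame with z pointing up and the floor at z = 0; `top_down_height_map` and its parameters are hypothetical and not necessarily what the tracker uses.

```python
import numpy as np

def top_down_height_map(points, x_range, y_range, cell_size):
    """Project world-frame points (N, 3) onto the ground plane.

    Assumes z is vertical with the floor at z = 0. Each cell stores the
    maximum height of the points falling into it (0 for empty cells).
    """
    nx = int(round((x_range[1] - x_range[0]) / cell_size))
    ny = int(round((y_range[1] - y_range[0]) / cell_size))
    hmap = np.zeros((nx, ny))
    ix = np.floor((points[:, 0] - x_range[0]) / cell_size).astype(int)
    iy = np.floor((points[:, 1] - y_range[0]) / cell_size).astype(int)
    inside = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)
    # np.maximum.at correctly handles several points landing in the same cell
    np.maximum.at(hmap, (ix[inside], iy[inside]), points[inside, 2])
    return hmap
```

Keeping the maximum height makes standing people appear as distinct peaks, which is one reason a top-down map is convenient for separating individuals in a crowd.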

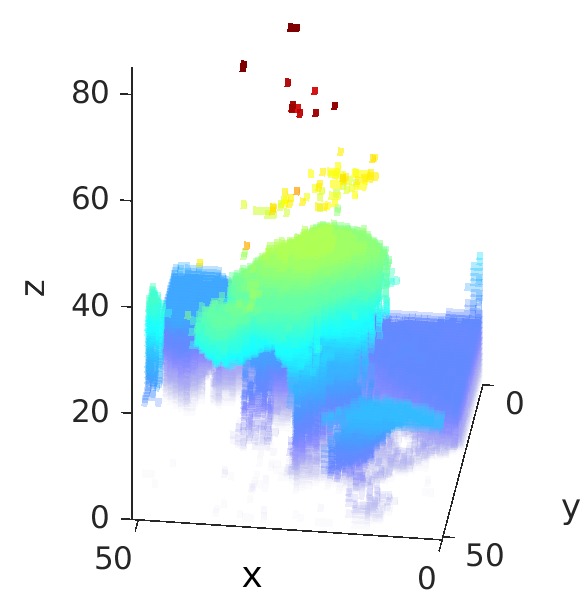

The cropped volume of each subject is provided in the `data` folder.
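The released files already contain these crops. For illustration only, a crop could be produced by voxelizing the points inside a box centered on a tracked person's ground-plane position. The box size, voxel size, and the function `crop_subject_volume` are assumptions, not the settings used to generate the released data.

```python
import numpy as np

def crop_subject_volume(points, center_xy, half_extent, height, voxel_size):
    """Voxelize the world-frame points (N, 3) inside a box around one person.

    The box spans center_xy +/- half_extent on the ground plane and
    [0, height) vertically (z up, floor at z = 0). Returns a binary
    occupancy grid of shape (n_xy, n_xy, n_z).
    """
    n_xy = int(round(2 * half_extent / voxel_size))
    n_z = int(round(height / voxel_size))
    origin = np.array([center_xy[0] - half_extent, center_xy[1] - half_extent, 0.0])
    idx = np.floor((points - origin) / voxel_size).astype(int)
    # keep only points whose voxel index lies inside the grid on all three axes
    inside = np.all((idx >= 0) & (idx < [n_xy, n_xy, n_z]), axis=1)
    vol = np.zeros((n_xy, n_xy, n_z), dtype=np.uint8)
    vol[idx[inside, 0], idx[inside, 1], idx[inside, 2]] = 1
    return vol
```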

For test1 and test3, we also provide the training and validation file lists.

All data files are stored in compressed `.npz` format. The data loader reads them directly from the tar file into system memory; see `data_loader.py` for details.
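A minimal sketch of reading `.npz` members from a tar archive straight into memory with Python's `tarfile` module and `numpy.load`. It is not the repository's `data_loader.py`; the helper names are hypothetical, and it assumes each `.lst` file holds one tar member name per line, which may not match the actual list format.

```python
import io
import tarfile
import numpy as np

def read_file_list(list_path):
    """Read a file list, assuming one entry per line (blank lines skipped)."""
    with open(list_path) as f:
        return [line.strip() for line in f if line.strip()]

def load_npz_from_tar(tar_path, names=None):
    """Load .npz members of a tar archive into memory without extracting them.

    Returns {member_name: {array_name: ndarray}}. If `names` is given,
    only members whose names appear in it are loaded.
    """
    wanted = set(names) if names is not None else None
    samples = {}
    with tarfile.open(tar_path, "r") as tar:  # "r" auto-detects compression
        for member in tar.getmembers():
            if not member.isfile() or not member.name.endswith(".npz"):
                continue
            if wanted is not None and member.name not in wanted:
                continue
            raw = tar.extractfile(member).read()  # raw bytes held in RAM
            with np.load(io.BytesIO(raw)) as npz:
                samples[member.name] = {k: npz[k] for k in npz.files}
    return samples
```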

The code requires PyTorch >= 0.4.1.

- We use Horovod to enable distributed training. However, the code can also run on a single machine.
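Under Horovod, one process typically runs per GPU, and each process reads a disjoint part of the training list (in PyTorch this is usually done with `torch.utils.data.distributed.DistributedSampler(dataset, num_replicas=hvd.size(), rank=hvd.rank())`). The hypothetical helper below shows the same round-robin idea in plain Python; we have not confirmed that `train_dist.py` shards data this way.

```python
def shard_file_list(files, rank=0, size=1):
    """Return the subset of `files` assigned to worker `rank` out of `size`.

    With Horovod, rank and size would come from hvd.rank() and hvd.size()
    after hvd.init(); on a single machine the defaults keep every file.
    Round-robin assignment keeps shard sizes within one item of each other.
    """
    if not 0 <= rank < size:
        raise ValueError(f"rank {rank} out of range for size {size}")
    return files[rank::size]
```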

```shell
python train_dist.py \
    --data_dir_val test3_cross_env/data_shell_f9_val.tar \
    --data_dir test3_cross_env/train.tar \
    --list_fn test3_cross_env/train.lst \
    --list_fn_val test3_cross_env/val.lst \
    --batch_size 24
```

```bibtex
@InProceedings{action4d,
  author    = {You, Quanzeng and Jiang, Hao},
  title     = {Action4D: Online Action Recognition in the Crowd and Clutter},
  booktitle = {CVPR},
  year      = {2019}
}
```