Proxy for page

ivibe opened this issue · comments

Hi!

Could someone tell me, whether there's a possibility to set proxy not only for a chromium instance, but also for a page?

So the current solution is:

const browser = await puppeteer.launch({ args: [ '--proxy-server=127.0.0.1:9876' ] });

Desired solution in my case is something like this:

const page = await browser.newPage({ args: [ '--proxy-server=127.0.0.1:9876' ] });

With proxy per page there's a possibility to run a single chrome instance, but use different proxies depending on page.

Thanks in advance!

You can use request interception to forward requests from each page to the correct proxy.

@JoelEinbinder could you show an example how can I forward request through SOCKS proxy using request interception?

@ivibe unfortunately, this is not possible for SOCKS proxy, you'll have to launch a separate browser instance for this case.

Out of curiosity, why would you need this?

It's a pity.

My use case is web-scraping. Web-servers can block IPs or the proxy server can become inactive, that's why relatively often I need to change proxy.

Of course, I would like to avoid the performance hit of launching many instances of Chromium. Is there any chance that such functionality (i.e. changing the proxy dynamically) will be implemented in future Chromium releases?

+1

This feature would be useful for me too, as I'm currently forced to launch multiple chromium instances if I need to access multiple URLs via different proxies. To add to what @ivibe suggested for use-cases, this could also be useful if you need to access resources behind firewalls with no common proxy that can pass through both. Alternatively, this would be useful if you wanted to test or screenshot your web application from multiple sources - e.g. if page content changes based on the visitor's IP's geolocation.

If there is a way to workaround this as suggested by @JoelEinbinder, perhaps the SOCKS requirement could be alleviated by setting up a proxy in the middle to allow an HTTP proxy interface to the SOCKS connection. (e.g. https://superuser.com/questions/423563/convert-http-requests-to-socks5)

unfortunately, this is not possible for SOCKS proxy, you'll have to launch a separate browser instance for this case.

What are the supported proxy for this case?

+1

we have exact same use case. this will be a very useful feature.

if we can set http proxy per page that would be great.

I think you can capture every request to use http(s) proxy!

A SOCKS proxy affects the whole browser (all tabs), so the only option is to run a separate browser instance (with a different userDataDir) for each proxy.

@fhmd4k even if we consider only regular http(s) proxy, that would be nice to see an example of using it through capturing requests

Hi, I'm working around on this issue and I'm already able to make this work with HTTP websites. For HTTPS websites I'm still facing some issues.

It may sound a bit hacky and complex... hmm... that's because it really is! But hey, it works.

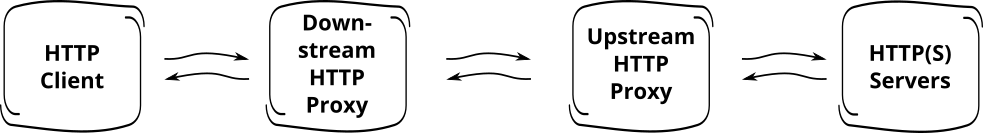

The idea is to create a local Downstream Proxy that parses the address of the Upstream Proxy from the headers of the page's requests.

(image credits: https://www.fedux.org/articles/2015/04/11/setup-a-proxy-with-ruby.html)

You can use something like this per page:

page.setExtraHTTPHeaders({proxy_addr: "200.11.11.11", proxy_port: "999"});

// 200.11.11.11:999 is the address of the final proxy you want to use (the Upstream Proxy).

You should start Chrome using --proxy-server=downstream-proxy-address.

Then, your custom Downstream Proxy should extract those proxy headers and forward the request for the proper Upstream Proxy.

For HTTPS requests, the issue I'm facing is intercepting the CONNECT requests sent when the secure communication tunnel is being created. In this case the proxy headers are not sent by Chrome, and I'm trying to figure out another way of transmitting the proxy information to the Downstream Proxy without needing to hack Chrome (or Chromium) itself.

The Downstream Proxy should be a very lightweight process running in your operating system. For reference, the proxy I've built consumes about 20MB of system memory. I won't share the proxy code for now because it currently exposes some security risks for my application.
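Since the proxy code itself isn't shared, here is a minimal sketch of what such a Downstream Proxy could look like for plain HTTP only, using Node's built-in http module. The function names (splitUpstream, createDownstreamProxy) are made up for illustration, and the header names are the ones from the setExtraHTTPHeaders call above. It does not handle HTTPS CONNECT tunnels, which is exactly the limitation described in this comment.

```javascript
const http = require('http');

// Header names taken from the comment above; Node lower-cases incoming names.
const ADDR_HEADER = 'proxy_addr';
const PORT_HEADER = 'proxy_port';

// Splits the incoming headers into the upstream proxy target and the
// headers that should still be forwarded (routing headers removed).
function splitUpstream(headers) {
  const { [ADDR_HEADER]: host, [PORT_HEADER]: port, ...forwarded } = headers;
  if (!host || !port) return { upstream: null, forwarded };
  return { upstream: { host, port: Number(port) }, forwarded };
}

// Creates (but does not start) a plain-HTTP downstream proxy (hypothetical helper).
function createDownstreamProxy() {
  return http.createServer((clientReq, clientRes) => {
    const { upstream, forwarded } = splitUpstream(clientReq.headers);
    if (!upstream) {
      clientRes.writeHead(400);
      clientRes.end('missing upstream proxy headers');
      return;
    }
    // A browser talking to a proxy sends the absolute URL in the request
    // line, so clientReq.url is passed through unchanged as the path.
    const upstreamReq = http.request(
      {
        host: upstream.host,
        port: upstream.port,
        method: clientReq.method,
        path: clientReq.url,
        headers: forwarded,
      },
      (upstreamRes) => {
        clientRes.writeHead(upstreamRes.statusCode, upstreamRes.headers);
        upstreamRes.pipe(clientRes);
      }
    );
    upstreamReq.on('error', () => {
      if (!clientRes.headersSent) clientRes.writeHead(502);
      clientRes.end('upstream proxy error');
    });
    clientReq.pipe(upstreamReq);
  });
}
```

To try it, something like createDownstreamProxy().listen(8000) followed by launching Chrome with --proxy-server=127.0.0.1:8000 should be enough; the port is an arbitrary choice.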

I could be wrong, but I believe SOCKS5 is already supported: http://www.chromium.org/developers/design-documents/network-stack/socks-proxy

--proxy-server="socks5://myproxy:8080"

--host-resolver-rules="MAP * ~NOTFOUND , EXCLUDE myproxy"
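For anyone passing these two flags through Puppeteer rather than a shell, a small sketch of how they could be assembled. The helper name socks5LaunchArgs is made up. I'm assuming the surrounding quotes from the command-line form should be dropped, since the args array is handed to the browser process without shell parsing.

```javascript
// Hypothetical helper: builds the two Chromium flags shown above for a
// single SOCKS5 proxy. No shell is involved, so the values are not quoted.
function socks5LaunchArgs(proxyHost, proxyPort) {
  return [
    `--proxy-server=socks5://${proxyHost}:${proxyPort}`,
    // Send all DNS lookups through the proxy, except for the proxy host itself.
    `--host-resolver-rules=MAP * ~NOTFOUND , EXCLUDE ${proxyHost}`,
  ];
}

const socksArgs = socks5LaunchArgs('myproxy', 8080);
// Intended use: puppeteer.launch({ args: socksArgs })
```

This still configures one proxy for the whole browser, not one per page.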

@tzellman that sets a single proxy for chrome and not for each page (tab) of chrome.

@barbolo I believe this workaround applies to most headless browsers. We have set up similar stack with PhantomJS:

client => haproxy => phantomjs => server

Same story, works great for HTTP resources but fails to route HTTPS as there is no access to additional headers, querystring, nothing... We are even considering SSL termination but that's just soooo much hacking to achieve such a simple thing :/

Did you have any luck with working around HTTPS requests?

@gwaramadze Yes, I've found some ways of making this scheme work with HTTPS and I'll share how I'm currently doing it.

Like I've said in the previous comment, the custom headers with the proxy information were ignored by Chrome when communicating with the downstream proxy server. However the user-agent header was being transmitted.

The first approach I tried was to encapsulate the proxy information in a JSON string sent as the user-agent header. For example, I would change the Chrome user-agent for each tab to look like this:

var userAgent = JSON.stringify({

"user-agent" : "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.94 Safari/537.36",

"proxy-addr" : "111.111.111.111",

"proxy-port" : "9999",

});

page.setUserAgent(userAgent);

That way I can intercept the user-agent in the Downstream Proxy and parse the Proxy attributes from it.

The problem is that the user-agent is also encrypted in the connection and sent directly to the final HTTP server. It's impossible to intercept it and fix it before sending it to the HTTP server. So the final HTTP server would receive a bizarre user agent string that would include your proxy connection information. If that is not a problem for you, that will work. But for me it could be a problem.
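On the Downstream Proxy side, unpacking that JSON user-agent could look roughly like the sketch below. The function name parseProxyFromUserAgent is made up; the keys are the ones from the JSON.stringify call above. As described, this only tells the proxy where to route the request; for HTTPS the packed string still reaches the final server unchanged.

```javascript
// Returns the real user agent and the upstream proxy packed into a JSON
// user-agent string, or null when the header is an ordinary user agent.
function parseProxyFromUserAgent(headerValue) {
  let parsed;
  try {
    parsed = JSON.parse(headerValue);
  } catch (err) {
    return null; // not JSON, so this is a normal user-agent string
  }
  if (!parsed || typeof parsed !== 'object') return null;
  const addr = parsed['proxy-addr'];
  const port = parsed['proxy-port'];
  if (!addr || !port) return null;
  return { userAgent: parsed['user-agent'], upstream: `${addr}:${port}` };
}
```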

So what I ended up doing was to create a list with thousands of user agent strings and for each new tab:

1. Choose a user agent string from the list and set it as the page's user agent.

2. Send a request to the downstream proxy specifying that requests with this user agent string should use a given upstream proxy.

3. Send a new request from this tab.

4. In the downstream proxy, find which proxy should be used based on the user agent string.

That's how I'm doing it now. Steps 2 and 4 require custom programming of the downstream proxy.
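The bookkeeping behind these steps can be sketched as a small in-memory registry. This is not the author's code; the class name UserAgentRouter and its methods are assumptions made for illustration, and a real downstream proxy would expose assign over some control endpoint (step 2) and call resolve for each incoming request (step 4).

```javascript
// Hypothetical registry mapping user agent strings to upstream proxies.
class UserAgentRouter {
  constructor(userAgents) {
    this.free = [...userAgents]; // user agents not yet bound to a tab
    this.routes = new Map();     // user agent -> upstream proxy URL
  }

  // Steps 1 and 2: reserve an unused user agent and bind it to a proxy.
  assign(proxyUrl) {
    const userAgent = this.free.pop();
    if (userAgent === undefined) throw new Error('no free user agents left');
    this.routes.set(userAgent, proxyUrl);
    return userAgent;
  }

  // Step 4: find the upstream proxy for an incoming request's user agent.
  resolve(userAgent) {
    return this.routes.get(userAgent) || null;
  }

  // Called when a tab is closed so its user agent can be reused.
  release(userAgent) {
    if (this.routes.delete(userAgent)) this.free.push(userAgent);
  }
}
```

Keeping each user agent bound to at most one tab at a time is what makes the lookup unambiguous, which is presumably why the list needs to be large.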

Another approach that should work is to make changes to the source of chromium network to allow other headers to be transmitted. But that would be more maintenance work in the long term.

@barbolo Thanks, this is quite an interesting hack. I wouldn't want to meddle with user agents too much as they might be checked by anti-scraping algorithms.

@gwaramadze yes. That's why I'm using the other approach. For instance, you have thousands of real chrome user agents available for recent versions of the chrome browser.

Is this feature in active development? Got the same issue and I guess the Use-Case is widely spread.

+1

I have same use case ! waiting for a solution !

+1

+1 Even I have similar use case. Waiting for the Solution with capability to set Proxy per page.

I don't think Puppeteer has anything to do with this issue. The problem is with Chrome, which doesn't provide any API to configure proxy.

You can either use a workaround like I've suggested above or you can build Chromium with a modified Network Stack, which I don't see as a good option.

I'm using request interception to forward requests:

async newPage(browser) {

let page = await browser.newPage();

await page.setRequestInterception(true);

page.on('request', async interceptedRequest => {

const resType = interceptedRequest.resourceType();

if (['document', 'xhr'].indexOf(resType) !== -1) {

const url = interceptedRequest.url();

const options = {

uri: url,

method: interceptedRequest.method(),

headers: interceptedRequest.headers(),

body: interceptedRequest.postData(),

usingProxy: true,

};

const response = await this.fetch(options);

interceptedRequest.respond({

status: response.statusCode,

contentType: response.headers['content-type'],

headers: response.headers,

body: response.body,

});

} else {

interceptedRequest.continue();

}

});

return page;

}

fetch(options) {

// let baseUrl = options.baseUrl || request.globals.baseUrl;

let isHttps;

if (options.uri.startsWith('https')) {

isHttps = true;

} else if (options.uri.startsWith('http')) {

isHttps = false;

}

if (options.usingProxy || process.env.NODE_ENV === 'production') {

options.agentClass = isHttps ? Sock5HttpsAgent : Sock5HttpAgent;

options.agentOptions = {

socksHost: 'localhost', // Defaults to 'localhost'.

socksPort: 9050 // Defaults to 1080.

}

}

options.resolveWithFullResponse = true;

return request(options);

}

Please note that in my case I only forward document and xhr requests, I ignore the baseUrl request option, and I use request-promise-native instead of request. You can replace the proxy settings in the fetch function.

@flyxl I used your code in my project to forward all requests to a proxy, but it produced some 502 errors from the server. Adding the proxy config directly in the launch options works fine, of course.

I guess the problem is caused by requests being reordered, which conflicts with the server's logic.

I used crawlera and it works perfect.

https://support.scrapinghub.com/support/solutions/articles/22000220800-using-crawlera-with-puppeteer

This sends every request, for both pages and their resources, through a different proxy. I highly recommend it.

Hi @orangebacked,

Crawlera is a great tool. I also use it.

However, when setRequestInterception is enabled, HTTPS requests don't work.

I think I'm facing the same problem as @barbolo with HTTPS.

@alexandreawe my approach is working fine. It's not very hard to setup a local proxy that accesses the HTTP headers sent through Chrome requests. If someone needs help, I may write an explanation on how to build it.

@barbolo Would be very interesting (if it's not too much trouble to write up).

@barbolo I'm interested in your solution, too! Maybe anyproxy would be a possibility http://anyproxy.io/en/#how-does-it-work

For anyone who's interested in @barbolo's approach, here is my solution using the proxy-chain module:

const puppeteer = require('puppeteer');

const ProxyChain = require('proxy-chain');

const ROUTER_PROXY = 'http://127.0.0.1:8000';

const uas = [

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.139 Safari/537.36',

'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36',

];

const proxies = ['http://127.0.0.1:24000', 'http://127.0.0.1:24001'];

(async () => {

const browser = await puppeteer.launch({

headless: false,

args: [`--proxy-server=${ROUTER_PROXY}`],

});

const page1 = await browser.newPage();

const page2 = await browser.newPage();

try {

await page1.setUserAgent(uas[0]);

await page1.goto('http://www.whatsmyip.org/');

} catch (e) {

console.log(e);

}

try {

await page2.setUserAgent(uas[1]);

await page2.goto('http://www.whatsmyip.org/');

} catch (e) {

console.log(e);

}

await browser.close();

})();

const server = new ProxyChain.Server({

// Port where the server will listen. By default 8000.

port: 8000,

// Enables verbose logging

verbose: false,

prepareRequestFunction: ({

request,

username,

password,

hostname,

port,

isHttp,

}) => {

let upstreamProxyUrl;

if (request.headers['user-agent'] === uas[0]) upstreamProxyUrl = proxies[0];

if (request.headers['user-agent'] === uas[1]) upstreamProxyUrl = proxies[1];

return { upstreamProxyUrl };

},

});

server.listen(() => {

console.log(`Router Proxy server is listening on port ${8000}`);

});

Here's a solution to this issue:

https://hackernoon.com/tips-and-tricks-for-web-scraping-with-puppeteer-ed391a63d952

@lukaslevickis It looks like they are solving using this approach: #678 (comment)

The problem is that your proxy information included in the USER-AGENT header will be passed to the final server. That can be a problem for some use cases.

@flyxl's request-interception method above does not work with images. To get images to work, just use node-fetch instead of request-promise-native and send the response back like this:

let body = await response.buffer();

return interceptedRequest.respond({

status: response.status,

contentType: response.headers.get('content-type'),

headers: response.headers.raw(),

body,

});

You can use request interception to forward requests from each page to the correct proxy.

Would it be possible for you to give a quick example of how this is done? Been trying to find a solution for this for a while now but struggling to find a method that works for me.

Thanks

The proxy-chain approach above is a good one, but it doesn't work when I open an https:// page: setUserAgent is useless there because proxy-chain can't intercept HTTPS. So how can I pass some information to identify the request in prepareRequestFunction? Or is there an alternative to the proxy-chain library that does the same thing but supports HTTPS interception?

When I tried the proxy-chain approach above on my laptop, the user agent that reached the proxy server started by proxy-chain for HTTPS requests was the browser's default user agent, not the specific one set for the page.

==updated====

This works with puppeteer@1.13.0, but not with puppeteer@1.14.0 or puppeteer@1.15.0.

Hello everyone, sorry, but I'm new to Puppeteer. Regarding @flyxl's request-interception code above: I think I understand that Sock5HttpsAgent (Sock5HttpAgent) specifies the proxy, but I don't understand what request(options) returns. I get an error saying this function was not found.

How should this work? Could someone explain it to me or give an example?

@Trahtenberg It's "request" library. https://github.com/request/request

You need to install it: npm i request

And add it to your script: var request = require('request');

And then: request(options, callback) will call your callback with (error, response, body)

I have one more question))) @flyxl, how do I specify a proxy with authorization using this code?

@Trahtenberg I think you don't really need options.agentClass, options.agentOptions etc. In request library you can set proxy like this: options.proxy = "https://login:password@host:port";

P.S. I usually use it with: options.tunnel = true; options.strictSSL = false;

function fetch(options) {

// let baseUrl = options.baseUrl || request.globals.baseUrl;

let isHttps;

if (options.uri.startsWith('https')) {

isHttps = true;

} else if (options.uri.startsWith('http')) {

isHttps = false;

}

options.tunnel = true;

options.strictSSL = false;

options.proxy = "https://1111:22222@12.123.12.123:3000";

options.resolveWithFullResponse = true;

return request(options);

}

I get this error:

(node:5188) UnhandledPromiseRejectionWarning: RequestError: Error: tunneling socket could not be established, cause=write EPROTO 8952:error:1408F10B:SSL routines:ssl3_get_record:wrong version number:openssl\ssl\record\ssl3_record.c:252:

@Trahtenberg If you have a working proxy and the correct login and password, then try adding this at the start of the script:

process.env.NODE_TLS_REJECT_UNAUTHORIZED = "0";

The proxy works; I checked it when launching the browser. I added the line as you said, and got the error again:

(node:1668) UnhandledPromiseRejectionWarning: RequestError: Error: tunneling socket could not be established, cause=write EPROTO 5108:error:1408F10B:SSL routines:ssl3_get_record:wrong version number:openssl\ssl\record\ssl3_record.c:252:

Check the protocol: options.proxy = "https://1111:22222@12.123.12.123:3000"; Maybe it's http, not https.

yes you are right, thank you!
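For context on why that fixed it: a "wrong version number" error typically shows up when a TLS handshake is attempted against a peer that answers in plain HTTP. The scheme in options.proxy describes how to talk to the proxy itself, not to the target site. A small sketch for building the proxy URL, with http as the default scheme and the credentials escaped; the helper name buildProxyUrl is made up for illustration.

```javascript
// Hypothetical helper: builds a proxy URL for options.proxy.
// `protocol` is the scheme spoken to the proxy itself; most HTTP proxies
// listen in plain HTTP even when they tunnel HTTPS traffic via CONNECT.
function buildProxyUrl({ protocol = 'http', host, port, username, password = '' }) {
  let credentials = '';
  if (username !== undefined) {
    // Escape characters such as ':' and '@' that would break URL parsing.
    credentials = `${encodeURIComponent(username)}:${encodeURIComponent(password)}@`;
  }
  return `${protocol}://${credentials}${host}:${port}`;
}

const proxyUrl = buildProxyUrl({
  host: '12.123.12.123',
  port: 3000,
  username: '1111',
  password: '22222',
});
// Intended use: options.proxy = proxyUrl;
```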

And again a new problem)))

When I specify a proxy at browser launch, the connection to the server is established. If I set it up following the recommendations of @flyxl and @elpaxel, the connection to the server hangs, that is, it never connects and I get no status. Connecting to http:// sites works well, but with https:// sites the connection hangs (only some of them work). I tried changing the headers, but it did not help.

const request = require('request-promise');

const puppeteer = require('puppeteer');

//const Sock5HttpAgent = require('socks5-http-client/lib/Agent');

//const Sock5HttpsAgent = require('socks5-https-client/lib/Agent');

//process.env.NODE_TLS_REJECT_UNAUTHORIZED = "0";

async function newPage(browser,proxyn) {

let page = await browser.newPage();

await page.setRequestInterception(true);

page.on('request', async interceptedRequest => {

const resType = interceptedRequest.resourceType();

if (['document', 'xhr'].indexOf(resType) !== -1) {

const url = interceptedRequest.url();

const options = {

uri: url,

method: interceptedRequest.method(),

headers: interceptedRequest.headers(),

body: interceptedRequest.postData(),

usingProxy: true,

};

const response = await fetch(options,proxyn);

interceptedRequest.respond({

status: response.statusCode,

contentType: response.headers['content-type'],

headers: response.headers,

body: response.body,

});

} else {

interceptedRequest.continue();

}

});

return page;

}

function fetch(options,proxyn) {

options.tunnel = true;

options.strictSSL = false;

options.proxy = "http://"+proxyn;

options.resolveWithFullResponse = true;

return request(options);

}

let proxys = [

'95.141.35.254:3128',

];

let agents = [

'Mozilla/5.0 (Windows NT 6.3; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36',

];

(async() => {

const browser = await puppeteer.launch({

headless: false,

//args: ['--proxy-server=95.141.35.254:3128'],

});

let flag = 0;

for(let i = 0; i<1; i++){

if (flag == proxys.length){

flag = 0;

}

//let page = await browser.newPage();

let page = await newPage(browser,proxys[await flag]);

await page.setUserAgent(agents[await flag]);

const headers = {

//'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3',

//'Accept-Encoding': 'gzip, deflate',

//'Accept-Language': 'ru-RU,ru;q=0.9,en-US;q=0.8,en;q=0.7',

//'Cache-control': 'max-age=0',

//'referrer':'https://google.com/',

};

await page.setExtraHTTPHeaders(headers);

let resp = await page.goto('https://google.com');

console.log((await resp.status()));

await page.waitFor(100000);

page.close();

flag++;

}

})();

The approach I've been using for a while and is scaling/working quite fine for me is to implement a Man In The Middle attack. I use the flag --disable-web-security and run a MITM Proxy that intercept requests and responses.

- Python - https://mitmproxy.org/

- Ruby - https://github.com/argos83/ritm

- Node - https://github.com/joeferner/node-http-mitm-proxy

I've been using a cluster of proxies based on Ruby's RITM. Fully scalable.

could you write a short example of how this works with the puppeteer?

In puppeteer, it can be as simple as passing a header with the upstream proxy. Something like this:

const headers = {'mitm-upstream': 'http://user:password@proxy_ip:proxy_port'};

await page.setExtraHTTPHeaders(headers);

Then patch RITM with something like this:

class Ritm::HTTPForwarder

private

def faraday_forward(request)

upstream_proxy = request.header.delete('mitm-upstream') # PATCH

req_method = request.request_method.downcase

@client.send req_method do |req|

req.options[:proxy] = upstream_proxy || @config.misc.upstream_proxy # PATCH

req.url request.request_uri

req.body = request.body

request.header.each { |name, value| req.headers[name] = value }

end

end

end

You should start puppeteer using the MITM proxy as the proxy server and disabling web security:

--proxy-server=mitm_proxy_server_ip:mitm_proxy_server_port --disable-web-security

And that's it. Enjoy.

I'm trying to catch the request, but the MITM proxy doesn't seem to listen. I've tried different code variants, but none of them worked. The MITM proxy doesn't even display errors.

const puppeteer = require('puppeteer');

'use strict';

var Proxy = require('http-mitm-proxy');

var proxy = Proxy();

var port = 80;

proxy.onError(function(ctx, err) {

console.error('proxy error:', err);

});

proxy.onRequest(function(ctx, callback) {

console.log('REQUEST:', ctx.clientToProxyRequest.url);

return callback();

});

proxy.onResponse(function(ctx, callback) {

console.log('BEGIN RESPONSE');

return callback();

});

proxy.listen({ port: port });

console.log('listening on ' + port);

(async() => {

const browser = await puppeteer.launch({

headless: false,

//args: ['--proxy-server="64.193.63.12:80"'],

//args: ['--proxy-server=127.0.0.1:80','--disable-web-security'],

});

var page = await browser.newPage();

//await page.waitFor(5000);

var resp = await page.goto('https://google.com');

console.log((await resp.remoteAddress()));

})();

Can anyone tell me whether replacing the proxy when creating a new page actually works for someone?

It's silly to make users set up a downstream proxy just to forward requests to an upstream proxy. Everything is possible with a proxy (SOCKS or HTTP/S), but why not provide a built-in solution? Users will do whatever they want in the end, so make Chromium and Puppeteer more robust by building this functionality in. Sadly, right now the most feasible solution is to set up a MITM proxy and forward requests wherever you like. @Trahtenberg if the MITM proxy does not listen, that's probably because the port is occupied.

Thank you, but I still do not understand how to change the proxy through MITM so I have to restart the browser with the new proxy ...(((

Try using https-proxy-agent or http-proxy-agent to proxy requests per page.

If an extension can do this, why can't puppeteer? Granted extensions don't run on headless, but still?

@j-bruce what extension can do this?

There are lots of proxy switching extensions in the chrome web store

They're probably not working as you would expect. I'm reading the FAQ of a popular proxy extension and it seems to be an interface to configure PAC:

Hi @barbolo, thanks for your efforts.

Is there any updates about this? Do you have a working example?

At this moment, #678 (comment) is the closest to a "working example" I can provide. I'd like to have more time to share something better, but I'm not sure it'll be possible anytime soon.

The MITM server I'm using is based on RITM (Ruby). I've added some capabilities to it (like caching static resources and changing requests/responses to make my use cases faster). It's been working for a couple of months now and it's serving hundreds of puppeteer sessions concurrently.

My company (https://infosimples.com/) provides web automation/scraping, and some of our work is done through puppeteer. Changing proxies for each page is a must for us.

@barbolo Does the MITM solution also work for HTTPS connections?

@GM-Alex Sure it works

https://www.chromestatus.com/feature/4931699095896064

I think this issue is related to this feature. The https proxy needs to send connect, but it was limited.

I modified your code to use the username field.

const puppeteer = require('puppeteer');

const ProxyChain = require('proxy-chain');

const PROXY_PORT = 8003;

const ROUTER_PROXY = `http://127.0.0.1:${PROXY_PORT}`;

const proxies = ['http://127.0.0.1:7890', 'http://username:password@127.0.0.1:7891'];

const server = new ProxyChain.Server({

// Port where the server will listen. By default 8000.

port: PROXY_PORT,

// Enables verbose logging

verbose: false,

prepareRequestFunction: ({

request,

username,

password,

hostname,

port,

isHttp,

}) => {

return {

requestAuthentication: username === null,

upstreamProxyUrl: Buffer.from(username || '', 'base64').toString('ascii')

}

},

});

server.listen(() => {

console.log(`Router Proxy server is listening on port ${PROXY_PORT}`);

});

(async () => {

const browser = await puppeteer.launch({

headless: false,

args: [`--proxy-server=${ROUTER_PROXY}`],

});

const content1 = await browser.createIncognitoBrowserContext();

const page1 = await content1.newPage();

const content2 = await browser.createIncognitoBrowserContext();

const page2 = await content2.newPage();

try {

await page1.authenticate({ username: Buffer.from(proxies[0]).toString('base64'), password: '' })

await page1.goto('https://httpbin.org/get');

} catch (e) {

console.log(e);

}

try {

await page2.authenticate({ username: Buffer.from(proxies[1]).toString('base64'), password: '' })

await page2.goto('https://httpbin.org/get');

} catch (e) {

console.log(e);

}

await page1.waitFor(10000);

await content1.close();

await content2.close();

await browser.close();

server.close()

})();

However, due to the limitations of identity authentication, it is only valid for the new Incognito context.
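The username trick above relies on Base64 because the upstream proxy URL contains ':' and '@', which would clash with the user:password form of proxy credentials. A minimal sketch of the two halves of that round trip; the helper names encodeUpstream and decodeUpstream are made up for illustration and are not part of proxy-chain or Puppeteer.

```javascript
// Page side: turn an upstream proxy URL into a value that is safe to use
// as the username passed to page.authenticate().
function encodeUpstream(proxyUrl) {
  return Buffer.from(proxyUrl, 'utf8').toString('base64');
}

// Proxy side: recover the upstream proxy URL from the username seen in
// prepareRequestFunction; returns null when no username was sent yet.
function decodeUpstream(username) {
  if (!username) return null;
  return Buffer.from(username, 'base64').toString('utf8');
}

const encodedUpstream = encodeUpstream('http://username:password@127.0.0.1:7891');
const decodedUpstream = decodeUpstream(encodedUpstream);
```

Returning null for a missing username leaves it to the caller to answer with requestAuthentication, as the code above does.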

EDIT:

It's possible with puppeteer-page-proxy.

It supports setting a proxy for an entire page, or if you like, it can set a different proxy for each request.

First install it:

npm i puppeteer-page-proxy

Then require it:

const useProxy = require('puppeteer-page-proxy');

Using it is easy.

Set proxy for an entire page:

await useProxy(page, 'http://127.0.0.1:8000');

If you want a different proxy for each request, then you can simply do this:

await page.setRequestInterception(true);

page.on('request', req => {

useProxy(req, 'socks5://127.0.0.1:9000');

});

Then if you want to be sure that your page's IP has changed, you can look it up:

const data = await useProxy.lookup(page);

console.log(data.ip);

It supports http, https, socks4 and socks5 proxies, and it also supports authentication if that is needed:

const proxy = 'http://login:pass@127.0.0.1:8000';

Repository:

https://github.com/Cuadrix/puppeteer-page-proxy

In your project folder:

npm i puppeteer-page-proxy

Now simply require it and use it:

const puppeteer = require('puppeteer');
const useProxy = require('puppeteer-page-proxy');

(async () => {
  const site = 'https://ipleak.net';
  const proxy1 = 'http://host:port';
  const proxy2 = 'https://host:port';
  const proxy3 = 'socks://host:port';

  const browser = await puppeteer.launch({headless: false});

  // Each page gets its own proxy before navigating.
  const page1 = await browser.newPage();
  await useProxy(page1, proxy1);
  await page1.goto(site);

  const page2 = await browser.newPage();
  await useProxy(page2, proxy2);
  await page2.goto(site);

  const page3 = await browser.newPage();
  await useProxy(page3, proxy3);
  await page3.goto(site);
})();

Repository:

First off, thanks <3

Secondly, I couldn't figure out whether it's possible to use a proxy per incognito page.

@mintyloco Just create a new incognito browser context and use it:

const context = await browser.createIncognitoBrowserContext();

const page = await context.newPage();

await useProxy(page, 'http://host:port');

@Cuadrix this is GREAT, exactly what I've been looking for, THANKS!

However, is there any way to add username/password authentication? I wanted to use it, but it doesn't have that feature yet, so I can't :(

@darkguy2008 Could you try and see if page.authenticate works?

Edit: page.authenticate doesn't work. Use this format instead: protocol://login:pass@host:port where protocol is the type of the proxy, e.g. https
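To make the protocol://login:pass@host:port format concrete, here is a small sketch that assembles such a string from its parts. The helper name buildProxyUrl is hypothetical. Percent-encoding the credentials is an assumption about what the proxy client expects, so check it against your library before relying on it for logins or passwords with special characters.

```javascript
// Hypothetical helper: builds a proxy URL of the form
// protocol://login:pass@host:port (credentials are optional).
function buildProxyUrl({ protocol, host, port, login, pass }) {
  // Assumption: the consumer accepts percent-encoded credentials.
  const auth = login
    ? `${encodeURIComponent(login)}:${encodeURIComponent(pass || '')}@`
    : '';
  return `${protocol}://${auth}${host}:${port}`;
}

console.log(buildProxyUrl({
  protocol: 'http',
  host: '127.0.0.1',
  port: 8000,
  login: 'login',
  pass: 'pass',
}));
// prints "http://login:pass@127.0.0.1:8000"
```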

You can use https://github.com/gajus/puppeteer-proxy to set proxy either for entire page or for specific requests only, e.g.

import puppeteer from 'puppeteer';

import {

createPageProxy,

} from 'puppeteer-proxy';

(async () => {

const browser = await puppeteer.launch();

const page = await browser.newPage();

const pageProxy = createPageProxy({

page,

proxyUrl: 'http://127.0.0.1:3000',

});

await page.setRequestInterception(true);

page.once('request', async (request) => {

await pageProxy.proxyRequest(request);

});

await page.goto('https://example.com');

})();

To skip the proxy, simply call request.continue() conditionally.
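Conditional skipping could look roughly like the sketch below. It assumes pageProxy is the object returned by createPageProxy in the example above and that request is a Puppeteer request with interception enabled. The factory name createSelectiveHandler and the shouldProxy predicate are hypothetical.

```javascript
// Hypothetical factory: returns a 'request' handler that sends matching
// requests through the proxy and lets all others go out directly.
function createSelectiveHandler(pageProxy, shouldProxy) {
  return async (request) => {
    if (shouldProxy(request)) {
      // Route this request through the configured proxy.
      await pageProxy.proxyRequest(request);
    } else {
      // Skip the proxy: let the browser perform the request itself.
      await request.continue();
    }
  };
}

// Usage sketch (requires a real page and pageProxy):
// await page.setRequestInterception(true);
// page.on('request', createSelectiveHandler(
//   pageProxy,
//   (request) => request.url().includes('example.com'),
// ));
```

Every intercepted request has to be either proxied or continued, otherwise it stays pending, which is why the handler always takes one of the two branches.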

With puppeteer-proxy, a page can have multiple proxies.

When I use 'puppeteer-proxy', the requests fail, even though my proxy is valid.

What's the error that you are getting?

Chromium tracking issue: https://bugs.chromium.org/p/chromium/issues/detail?id=1090797

Anybody having issues with Puppeteer-page-proxy?

I'm getting the following error:

dist/source/create.js:155

yield item;

^^^^^

SyntaxError: Unexpected strict mode reserved word

at createScript (vm.js:80:10)

at Object.runInThisContext (vm.js:139:10)

at Module._compile (module.js:617:28)

What Node.js version?

Node needed to be updated. It's working fine

Impressive. When will there be a Python version?

Wow, no Python support.

EDIT:

It's possible with puppeteer-page-proxy.

It supports setting a proxy for an entire page, or if you like, it can set a different proxy for each request.

Repository:

https://github.com/Cuadrix/puppeteer-page-proxy

Is this library still working for you?

@Nisthar

AFAIK puppeteer-page-proxy lib has some issues.

Personally I tried to use it with my proxy, but I had problems, for example when going to https://whatismyipaddress.com/ (and other similar sites) simply to get the proxy IP address in the proxied page. It also fails when Google sends a reCAPTCHA while scraping (and not only with Google).

By contrast, everything works fine using the standard puppeteer launch arg '--proxy-server'.

The lib does not seem to be actively maintained; even the issues go unanswered.

You can use a proxy per browser context, which in the end is going to be pretty similar:

https://pptr.dev/next/api/puppeteer.browsercontextoptions
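As a rough sketch of the per-context approach, the helper below opens a page in its own browser context with a proxyServer option, as described on the linked BrowserContextOptions page. The helper name newPageWithProxy is hypothetical. It assumes a recent Puppeteer release where the method is called createBrowserContext (older releases used createIncognitoBrowserContext).

```javascript
// Hypothetical helper: opens a page in a fresh browser context whose
// traffic goes through proxyServer. `browser` is a Puppeteer Browser.
async function newPageWithProxy(browser, proxyServer) {
  // Assumption: recent Puppeteer, where this method is createBrowserContext.
  const context = await browser.createBrowserContext({ proxyServer });
  const page = await context.newPage();
  return { context, page };
}

// Usage sketch (requires puppeteer to be installed):
// const puppeteer = require('puppeteer');
// const browser = await puppeteer.launch();
// const a = await newPageWithProxy(browser, 'http://127.0.0.1:7890');
// const b = await newPageWithProxy(browser, 'http://127.0.0.1:7891');
// await a.page.goto('https://httpbin.org/get');
// await b.page.goto('https://httpbin.org/get');
// await a.context.close();
// await b.context.close();
// await browser.close();
```

Returning the context together with the page makes it easy to close the context, and with it the proxy configuration, once the page is no longer needed.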

It is likely that we will never support proxy per page given that it is possible to set it per browser context which is cheap to create for each page (unless it gets supported in the browsers for other reasons). Therefore, closing this issue as not planned.