REST API for managing recipes. Built with Python, Django REST Framework and Docker using Test Driven Development (TDD). Deployed on AWS EC2.

AWS EC2 Instance is temporarily stopped to save costs.

http://ec2-34-254-224-163.eu-west-1.compute.amazonaws.com/api/docs

- Features

- Documentation

- Entity-Relationship Diagram

- Technologies Used

- Local Usage

- Local Development Environment Setup (Ubuntu 22.04)

- GitHub Actions

- Deployment (AWS EC2)

- Useful Commands

-

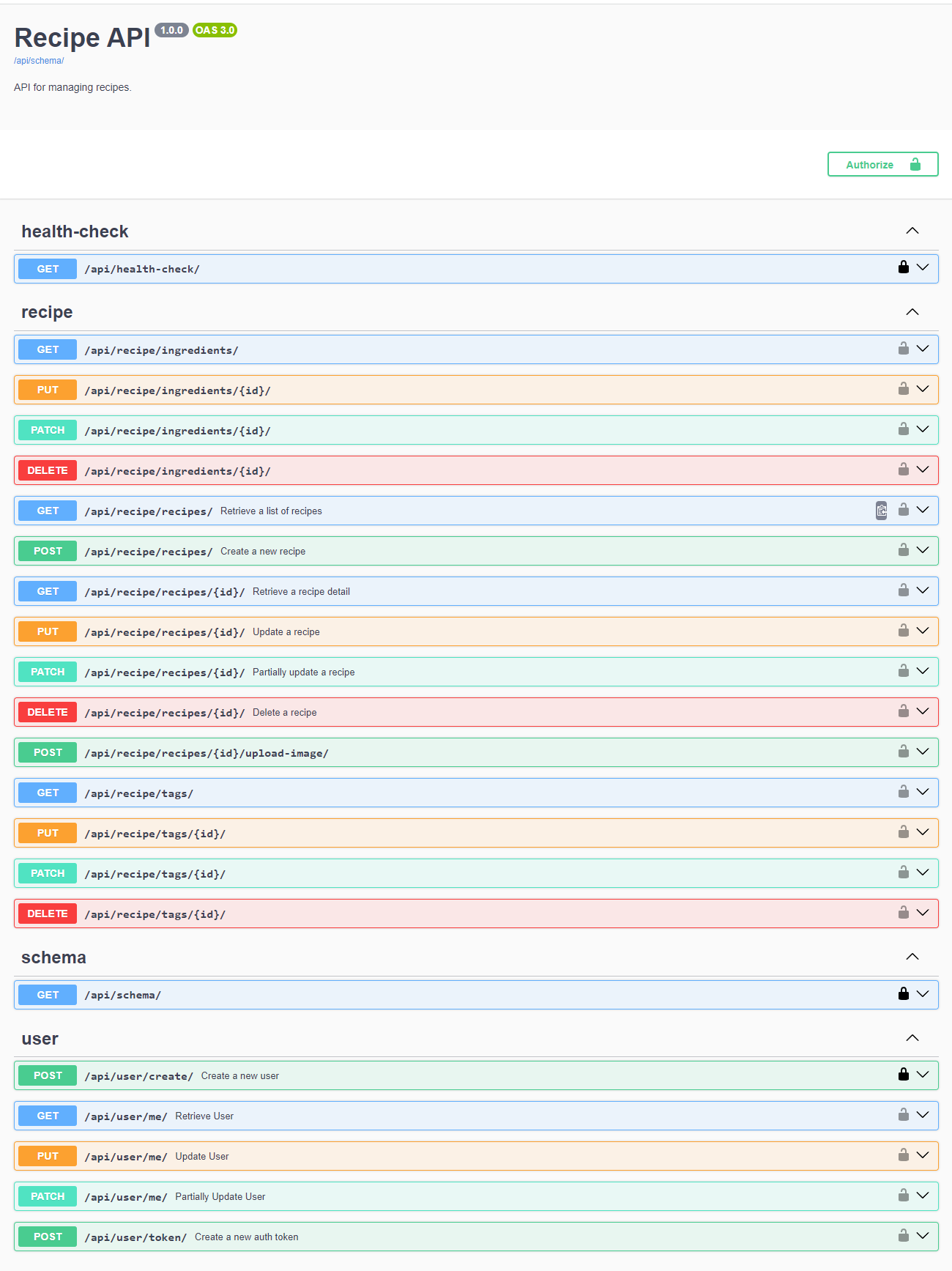

Django UI admin panel

<host>/admin/ -

Token-based authentication

-

User management

- Create user

- Update user (auth required)

- Get user detail (auth required)

- Recipe management (auth required)

- Recipe API

- View list of recipes

- View detail of specific recipe

- Create recipe

- Update recipe

- Delete recipe

- Tags API

- View list of tags

- Create tags

- Tags can be created when creating a recipe

- Tags can be created when updating a recipe

- Update tag

- Tags can be updated when updating a recipe

- Tag can be updated using the Tags API endpoint

- Delete tag

- Ingredients API

- View list of ingredients

- Create ingredients

- Ingredients can be created when creating a recipe

- Ingredients can be created when updating a recipe

- Update ingredient

- Ingredients can be updated when updating a recipe

- Ingredient can be updated using the Ingredients API endpoint

- Delete ingredient

- Recipe Image API

- Upload image to recipe

- Filter recipes by tags and ingredients

- How to use:

<host>/api/recipes/?tags=<comma_separated_tag_ids>

<host>/api/recipes/?ingredients=<comma_separated_ingredient_ids>
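How the server handles the comma-separated IDs is not shown in this README. A minimal sketch, assuming a hypothetical helper `params_to_ints` that a view could call before filtering its queryset (the helper name and the usage in the comment are illustrative assumptions, not the project's code):

```python
def params_to_ints(raw: str) -> list[int]:
    """Convert a comma-separated string of IDs, e.g. "1,2,3", to a list of ints."""
    # Skip empty fragments so a trailing comma ("1,2,") does not raise.
    return [int(part) for part in raw.split(",") if part.strip()]


# Hypothetical usage inside a view (names are assumptions):
#   tag_ids = params_to_ints(request.query_params.get("tags", ""))
#   queryset = queryset.filter(tags__id__in=tag_ids).distinct()
print(params_to_ints("1,2,3"))
```

A request such as `<host>/api/recipes/?tags=1,2,3` would then be narrowed to recipes carrying any of those tag IDs.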

The API documentation is created using drf-spectacular.

- Swagger UI: /api/docs/

- ReDoc: /api/redoc/

- OpenAPI schema: /api/schema/

- Create new user or use existing one

- To get a token, send a POST request to <host>/api/user/token/ with the following payload:

{ "email": "<user_email>", "password": "<user_password>" }

- Copy the token from the response

- Click on the Authorize button in the top right corner

- Enter Token <token> in the Value field of the tokenAuth (apiKey) section

- Save the token in the local storage

- Add the Authorization: Token <token> header to every request you make to the API
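As a rough illustration of the two steps above from a Python client, the sketch below only builds the requests and does not send them. The host `http://localhost:8000`, the credentials, and the token value are placeholders, and the helper names are assumptions made for this example:

```python
import json
import urllib.request


def build_token_request(host: str, email: str, password: str) -> urllib.request.Request:
    """Build (but do not send) the POST request that asks the API for a token."""
    payload = json.dumps({"email": email, "password": password}).encode("utf-8")
    return urllib.request.Request(
        f"{host}/api/user/token/",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_authorized_request(url: str, token: str) -> urllib.request.Request:
    """Build a GET request carrying the `Authorization: Token <token>` header."""
    return urllib.request.Request(url, headers={"Authorization": f"Token {token}"})


# Placeholder values; pass a request to urllib.request.urlopen() to actually send it.
token_request = build_token_request("http://localhost:8000", "user@example.com", "secret")
recipes_request = build_authorized_request("http://localhost:8000/api/recipes/", "abc123")
print(token_request.get_method(), token_request.full_url)
print(recipes_request.get_header("Authorization"))
```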

- Django - Python web framework

- Django REST Framework - Django toolkit for building web APIs

- flake8 - Python linting tool

- psycopg2 - PostgreSQL database adapter for Python

- drf-spectacular - OpenAPI schema generation for Django REST framework

- VS Code - IDE

- Docker - Containerization platform

- Docker Compose - Tool for defining and running multi-container Docker applications

- Docker Hub - Container image registry

- Clone the GitHub repository

$ git clone

- Build the docker image

$ docker compose build

- Run the containers

$ docker compose up

- Install Docker Engine

- Install Docker Compose V2

https://docs.docker.com/compose/install/linux/#install-using-the-repository

- Manage Docker as a non-root user (grant the user access to the docker command without needing to use sudo)

- Clone the GitHub repository

$ git clone git@github.com:FlashDrag/recipe-app-api.git <path_to_local_dir>

- Create requirements.txt file and add the following:

Django>=3.2.4,<3.3

djangorestframework>=3.12.4,<3.13

- Create an empty app folder

- Configure linting with flake8

- Create requirements.dev.txt. Dev requirements are only needed for development and testing in the local environment.

flake8>=3.9.2,<3.10

- Create .flake8 file inside app folder and add the following:

[flake8]

exclude = migrations, __pycache__, manage.py, settings.py

- Create Dockerfile file and add the following:

# python image from docker hub

# alpine is an efficient and lightweight linux distro for docker

FROM python:3.11.6-alpine3.18

# maintainer of the image

LABEL maintainer="linkedin.com/in/pavlo-myskov"

# tells python to run in unbuffered mode.

# The output will be printed directly to the terminal

ENV PYTHONUNBUFFERED 1

# copy requirements.txt from local machine into docker image

COPY ./requirements.txt /tmp/requirements.txt

# copy the dev requirements file from local machine into docker image

COPY ./requirements.dev.txt /tmp/requirements.dev.txt

# copy the app folder from local machine into docker image

COPY ./app /app

# set the working directory.

# All subsequent commands will be run from this directory

WORKDIR /app

# Expose port 8000 from the container to outside world(our localhost)

# It allows us to access the port from our web browser

EXPOSE 8000

# set default environment variable

ARG DEV=false

# RUN - is a command to execute when building the image

# python -m venv /py - creates a virtual environment in the /py directory

# ... --upgrade pip - upgrades pip

# .. /tmp/requirements.txt - installs all the requirements

# rm -rf /tmp - removes the temporary directory

# adduser - creates a user inside the docker image(best practice to not run as root)

# --disabled-password - disables the password for the user

# --no-create-home - does not create a home directory for the user

# django-user - name of the user

# chown -R django-user:django-user /app - changes the ownership of the /app directory to django-user

RUN python -m venv /py && \

/py/bin/pip install --upgrade pip && \

/py/bin/pip install -r /tmp/requirements.txt && \

if [ "$DEV" = "true" ] ; \

then /py/bin/pip install -r /tmp/requirements.dev.txt ; \

fi && \

rm -rf /tmp && \

adduser \

--disabled-password \

--no-create-home \

django-user && \

chown -R django-user:django-user /app

# update PATH environment variable to include the /py/bin directory

# so that we can run python commands without specifying the full path

ENV PATH="/py/bin:$PATH"

# specify the user that we're switching to

USER django-user

- Create .dockerignore file and add the following:

# Git

.git

.gitignore

# Docker

.docker

# Python

app/__pycache__/

app/*/__pycache__/

app/*/*/__pycache__/

app/*/*/*/__pycache__/

.env/

.venv/

venv/

- Build the docker image (optional, since we're going to use Docker Compose)

$ sudo service docker start

$ docker build .

- Create docker-compose.yml file and add the following:

# version of docker-compose syntax

version: '3.9'

# define services

services:

# name of the service

app:

build:

# path to the Dockerfile

context: .

# override the default environment variable

args:

- DEV=true

# port mapping. Maps port 8000 on the host to port 8000 on the container

ports:

- '8000:8000'

# volumes to mount. Mounts the app directory on the host to the /app directory on the container.

# Maps directory in the container to the directory on the local machine

volumes:

- ./app:/app

# `- ./app:/home/django-user/app` - for dev container development

# command to run when the container starts

command: >

sh -c 'python manage.py runserver 0.0.0.0:8000'

environment:

- DEBUG=1

- Build the docker image

$ docker compose build

- Create a Django project

$ docker compose run --rm app sh -c "django-admin startproject app ."

- Start the Django development server

$ docker compose up

- Add PostgreSQL database to docker-compose.yml file

# ...

services:

app:

# specifies that the app service depends on the db service

# also, it ensures that the db service is started before the app service

depends_on:

- db

# ...

# allows to connect to the database from the app service,

# must match the credentials in the db service

environment:

# hostname of the database - the name of the service in docker-compose.yml file

- DB_HOST=db

- DB_NAME=devdb

- DB_USER=devuser

- DB_PASS=postgres

# add db service,

# `app` service will use `db` as a hostname to connect to the database

db:

# docker hub image to use

image: postgres:13-alpine

volumes:

- dev-db-data:/var/lib/postgresql/data/

environment:

- POSTGRES_DB=devdb

- POSTGRES_USER=devuser

- POSTGRES_PASSWORD=postgres

ports:

- '5432:5432'

# named volumes

volumes:

dev-db-data:

dev-static-data:

- Run the containers to create the database

$ docker compose up

- Add psycopg2 dependencies to Dockerfile

# ...

RUN python -m venv /py && \

/py/bin/pip install --upgrade pip && \

# install psycopg2 dependencies

apk add --update --no-cache postgresql-client && \

apk add --update --no-cache --virtual .tmp-build-deps \

build-base postgresql-dev musl-dev && \

# ...

rm -rf /tmp && \

# delete the .tmp-build-deps

apk del .tmp-build-deps && \

# ...

- Add psycopg2 package to requirements.txt file

psycopg2>=2.8.6,<2.9

- Add database configuration to settings.py file

# Database

DATABASES = {

'default': {

'ENGINE': 'django.db.backends.postgresql',

'NAME': os.environ.get('DB_NAME'),

'USER': os.environ.get('DB_USER'),

'PASSWORD': os.environ.get('DB_PASS'),

'HOST': os.environ.get('DB_HOST'),

}

}

- Add database credentials to .env file. See the .env.example file for reference.

- Rebuild the docker image

$ docker compose up --build

The wait_for_db command makes the Django app wait for the database to be available before it starts.

- Create a new core app

$ docker compose run --rm app sh -c "python manage.py startapp core"

- Create a new management module with commands subdirectory inside core app

$ mkdir -p app/core/management/commands && touch app/core/management/__init__.py && touch app/core/management/commands/__init__.py

- Create a new wait_for_db.py file inside commands subdirectory

$ touch app/core/management/commands/wait_for_db.py

- Configure wait_for_db.py file. See the file for reference.
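The repository's wait_for_db.py is not reproduced in this README. As a framework-free sketch of the retry idea behind such a command, the `wait_for` helper and the simulated database check below are assumptions made for illustration, not the project's code. In a Django management command, the check would be whatever call attempts a database connection.

```python
import time
from typing import Callable


def wait_for(check: Callable[[], None], retries: int = 30, delay: float = 1.0) -> int:
    """Call `check` until it stops raising; return the number of attempts made."""
    for attempt in range(1, retries + 1):
        try:
            check()
            return attempt
        except Exception:  # a real command would catch only database errors
            if attempt == retries:
                raise  # give up and surface the last error
            time.sleep(delay)
    raise ValueError("retries must be at least 1")


# Simulated database that becomes available on the third connection attempt.
attempts = {"count": 0}


def fake_db_check() -> None:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("database unavailable")


print(wait_for(fake_db_check, retries=5, delay=0.01))
```

Pausing between attempts keeps the app container from failing immediately when the database container has started but is not yet accepting connections.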

- Add wait_for_db command to docker-compose.yml file

# ...

services:

app:

# ...

# command to run when the container starts

command: >

sh -c "python manage.py wait_for_db &&

python manage.py migrate &&

python manage.py runserver 0.0.0.0:8000"

- Clean up the containers

$ docker compose down

- Run the containers (optional, for testing). It is better to run the first database migration only after the custom user model has been created.

$ docker compose up

- Create a Custom User Model and a Custom User Manager. See the file for reference.

- Add AUTH_USER_MODEL = 'core.User' to settings.py file

- Make and apply migrations

$ docker compose run --rm app sh -c "python manage.py makemigrations core"

$ docker compose run --rm app sh -c "python manage.py wait_for_db && python manage.py migrate"

- Remove the db volume if you applied the initial migrations before creating the Custom User Model. This cleans up the database.

$ docker volume rm recipe-app-api_dev-db-data

drf-spectacular generates the OpenAPI schema for the API.

- Add drf_spectacular package to requirements.txt file

- Add rest_framework and drf_spectacular to INSTALLED_APPS in settings.py file

- Add REST_FRAMEWORK = {'DEFAULT_SCHEMA_CLASS': 'drf_spectacular.openapi.AutoSchema',} to settings.py file

- Add url patterns to urls.py file

from drf_spectacular.views import (

SpectacularAPIView,

SpectacularSwaggerView

)

# ...

urlpatterns = [

# ...

path('api/schema/', SpectacularAPIView.as_view(), name='api-schema'),

path(

'api/docs',

SpectacularSwaggerView.as_view(url_name='api-schema'),

name='api-docs',

)

]

- Rebuild the docker image

- Add image handling dependencies (jpeg-dev, zlib, zlib-dev) to Dockerfile

- Create static and media directories and set permissions

# ...

RUN python -m venv /py && \

# ...

apk add --update --no-cache postgresql-client jpeg-dev && \

# ...

build-base postgresql-dev musl-dev zlib zlib-dev && \

# ...

adduser \

# ...

django-user && \

mkdir -p /vol/web/media && \

mkdir -p /vol/web/static && \

# set ownership of the directories to django-user

chown -R django-user:django-user /vol && \

# set permissions, so that the user can make any changes to the directories

chmod -R 755 /vol

- Add Pillow package to requirements.txt file

- Add new volumes to docker-compose.yml file

# ...

services:

app:

# ...

volumes:

- ./app:/app

- dev-static-data:/vol/web

# ...

volumes:

dev-db-data:

dev-static-data:

- Update settings.py file

STATIC_URL = '/static/static/'

MEDIA_URL = '/static/media/'

MEDIA_ROOT = '/vol/web/media'

STATIC_ROOT = '/vol/web/static'

- Update urls.py file

from django.conf.urls.static import static

from django.conf import settings

# ...

if settings.DEBUG:

urlpatterns += static(

settings.MEDIA_URL,

document_root=settings.MEDIA_ROOT,

)

- Rebuild the docker image

- Add recipe_image_file_path function and image field to models.py file
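The README names the function but does not show its body. One common shape for such an `upload_to` callable is sketched below; the `uploads/recipe` directory, the UUID-based file name, and the field options in the comment are assumptions, not necessarily what the project uses:

```python
import os
import uuid


def recipe_image_file_path(instance, filename: str) -> str:
    """Return a unique storage path for an uploaded recipe image."""
    # Keep the original extension but replace the name with a random UUID
    # so that two uploads named "photo.jpg" never overwrite each other.
    extension = os.path.splitext(filename)[1]
    return os.path.join("uploads", "recipe", f"{uuid.uuid4()}{extension}")


# In models.py the function would be passed to the image field, e.g.:
#   image = models.ImageField(null=True, upload_to=recipe_image_file_path)
print(recipe_image_file_path(None, "photo.jpg"))
```

Django calls the function with the model instance and the original file name, and stores the file under MEDIA_ROOT at the returned relative path.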

Choose one of the following options:

This option lets you develop the application inside a container using VS Code.

Since you've mounted your app codebase as a Docker volume, you can develop your application directly on your local machine and see the changes reflected in the container without having to rebuild it.

- Create virtual environment using virtualenvwrapper

$ mkvirtualenv recipe-app-api

- Install requirements

$ pip install -r requirements.txt

- Install dev requirements

$ pip install -r requirements.dev.txt

- Add any additional development dependencies to the requirements.dev.txt file.

- Make sure all dependencies, including the Python version, on your local machine match those in the container to avoid issues.

Docker Hub is a platform that lets us pull Docker images down to our local machine and push Docker images up to the cloud.

- Docker Hub account https://hub.docker.com/

- Create New Access Token

- Go to https://hub.docker.com/ > Account Settings > Security > New Access Token

- Token Description: recipe-app-api-github-actions

- Access permissions: read-only

- Don't close the token dialog until you've copied the token and pasted it into your GitHub repository

- Warning: Use different tokens for different environments

- To use the access token from your Docker CLI client (at the password prompt, use the access token as the password)

$ docker login -u <username>

- Create a new repository if it does not exist

- Add Docker Hub secrets to GitHub repository for GitHub Actions jobs

Settings > Secrets > Actions > New repository secret

- DOCKERHUB_USER: <username>

- DOCKERHUB_TOKEN: <access_token>

- Create a config file at .github/workflows/checks.yml

---

name: Checks

on: [push]

jobs:

test-lint:

name: Test and Lint

runs-on: ubuntu-22.04

steps:

- name: Login to Docker Hub

uses: docker/login-action@v3

with:

username: ${{ secrets.DOCKERHUB_USER }}

password: ${{ secrets.DOCKERHUB_TOKEN }}

- name: Checkout

uses: actions/checkout@v4

- name: Test

run: docker compose run --rm app sh -c "python manage.py wait_for_db && python manage.py test"

- name: Lint

run: docker compose run --rm app sh -c "flake8"

- AWS account

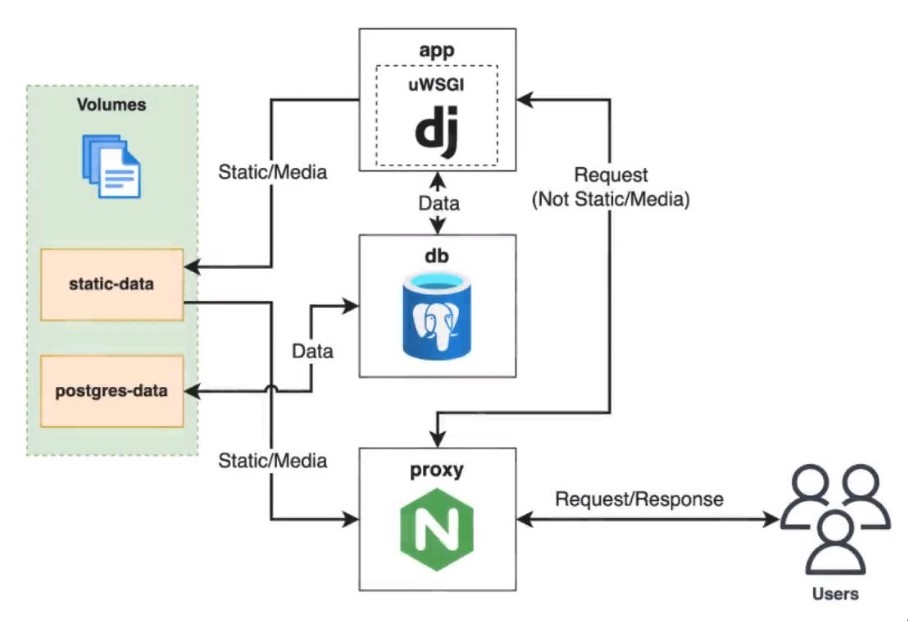

- AWS CLI

- nginx. Web server that can be used as a reverse proxy to the Django app

- uWSGI or Gunicorn. Web server gateway interface that can be used to serve the Django app

- Docker Compose

- Update Dockerfile.

COPY ./scripts /scripts

COPY ./app /app

RUN ...

# linux-headers - required for uWSGI

build-base postgresql-dev musl-dev zlib zlib-dev linux-headers && \

# ...

chmod -R 755 /vol && \

# make the scripts dir executable

chmod -R +x /scripts

# update PATH environment variable

ENV PATH="/scripts:/py/bin:$PATH"

# ...

# run the script that will run the uWSGI server

CMD ["run.sh"]

- Create scripts directory and add run.sh file

#!/bin/sh

set -e

python manage.py wait_for_db

python manage.py collectstatic --noinput

python manage.py migrate

uwsgi --socket :9000 --workers 4 --master --enable-threads --module app.wsgi

- Add uwsgi package to requirements.txt file

uwsgi>=2.0.19,<2.1

- Create proxy directory

- Add default.conf.tpl file

server {

listen ${LISTEN_PORT};

location /static {

alias /vol/static;

}

location / {

uwsgi_pass ${APP_HOST}:${APP_PORT};

include /etc/nginx/uwsgi_params;

client_max_body_size 10M;

}

}

- Add uwsgi_params file

https://uwsgi-docs.readthedocs.io/en/latest/Nginx.html#what-is-the-uwsgi-params-file

uwsgi_param QUERY_STRING $query_string;

uwsgi_param REQUEST_METHOD $request_method;

uwsgi_param CONTENT_TYPE $content_type;

uwsgi_param CONTENT_LENGTH $content_length;

uwsgi_param REQUEST_URI $request_uri;

uwsgi_param PATH_INFO $document_uri;

uwsgi_param DOCUMENT_ROOT $document_root;

uwsgi_param SERVER_PROTOCOL $server_protocol;

uwsgi_param REMOTE_ADDR $remote_addr;

uwsgi_param REMOTE_PORT $remote_port;

uwsgi_param SERVER_ADDR $server_addr;

uwsgi_param SERVER_PORT $server_port;

uwsgi_param SERVER_NAME $server_name;

- Add run.sh file

#!/bin/sh

set -e

envsubst < /etc/nginx/default.conf.tpl > /etc/nginx/conf.d/default.conf

nginx -g 'daemon off;'
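To see what the envsubst step in run.sh produces, the substitution can be approximated in Python with `string.Template`. This is only an illustration: the template is abbreviated to the lines that use placeholders, the values are the ENV defaults from the proxy Dockerfile, and unlike envsubst, `safe_substitute` leaves unknown placeholders untouched instead of replacing them with an empty string.

```python
from string import Template

# Same placeholders as default.conf.tpl (abbreviated to the lines that use them).
TEMPLATE = """server {
    listen ${LISTEN_PORT};

    location / {
        uwsgi_pass ${APP_HOST}:${APP_PORT};
    }
}
"""

# Defaults taken from the ENV lines of the proxy Dockerfile.
env = {"LISTEN_PORT": "8000", "APP_HOST": "app", "APP_PORT": "9000"}

# Replace each ${NAME} placeholder with its value from the mapping.
rendered = Template(TEMPLATE).safe_substitute(env)
print(rendered)
```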

- Add Dockerfile to the proxy directory

# nginx image

FROM nginxinc/nginx-unprivileged:1-alpine

LABEL maintainer="linkedin.com/in/pavlo-myskov"

# copy the files from local machine into docker image

COPY ./default.conf.tpl /etc/nginx/default.conf.tpl

COPY ./uwsgi_params /etc/nginx/uwsgi_params

COPY ./run.sh /run.sh

# LISTEN_PORT - port that nginx will listen on

ENV LISTEN_PORT=8000

# APP_HOST - hostname where the uWSGI server is running

ENV APP_HOST=app

# APP_PORT - port where the uWSGI server is running

ENV APP_PORT=9000

# set root user to run the commands below as root

USER root

RUN mkdir -p /vol/static && \

chmod 755 /vol/static && \

touch /etc/nginx/conf.d/default.conf && \

chown nginx:nginx /etc/nginx/conf.d/default.conf && \

chmod +x /run.sh

VOLUME /vol/static

# switch to the nginx user

USER nginx

CMD ["/run.sh"]

- Add .env file to the project root directory. See the .env.example file for reference.

- Add docker-compose-deploy.yml file in the project root directory

version: "3.9"

services:

app:

build:

context: .

restart: always

volumes:

- static-data:/vol/web

environment:

- DB_HOST=db

- DB_NAME=${DB_NAME}

- DB_USER=${DB_USER}

- DB_PASS=${DB_PASS}

- SECRET_KEY=${DJANGO_SECRET_KEY}

- ALLOWED_HOSTS=${DJANGO_ALLOWED_HOSTS}

depends_on:

- db

db:

image: postgres:13-alpine

restart: always

volumes:

- postgres-data:/var/lib/postgresql/data

environment:

- POSTGRES_DB=${DB_NAME}

- POSTGRES_USER=${DB_USER}

- POSTGRES_PASSWORD=${DB_PASS}

proxy:

build:

context: ./proxy

restart: always

depends_on:

- app

ports:

- 80:8000

# use the following port mapping for docker-compose-deploy.yml file local testing

# - 8000:8000

volumes:

- static-data:/vol/static

volumes:

postgres-data:

static-data:

SECRET_KEY = os.environ.get('SECRET_KEY', 'changeme')

DEBUG = bool(int(os.environ.get('DEBUG', 0)))

ALLOWED_HOSTS = []

ALLOWED_HOSTS.extend(

filter(

None,

os.environ.get('ALLOWED_HOSTS', '').split(','),

)

)
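The settings lines above turn environment variable strings into Python values. A small sketch of the same parsing, pulled into standalone functions so it can be tried outside Django (the function names are assumptions made for this example):

```python
def parse_debug(raw: str) -> bool:
    """Mirror bool(int(...)) from the settings: "1" enables debug, "0" disables it."""
    return bool(int(raw))


def parse_allowed_hosts(raw: str) -> list[str]:
    """Mirror the ALLOWED_HOSTS lines: split on commas and drop empty entries."""
    return list(filter(None, raw.split(",")))


print(parse_debug("1"), parse_debug("0"))
print(parse_allowed_hosts(""))
print(parse_allowed_hosts("example.com,127.0.0.1"))
```

Filtering out empty strings matters because splitting an unset (empty) variable yields `[""]`, which would otherwise add an empty host name to ALLOWED_HOSTS.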

- Create IAM user

- Go to IAM > Users

- Create user:

- User name: recipe-app-api-user

- Uncheck Programmatic access

- Attach existing policies:

- AdministratorAccess

- Create user

- Upload SSH key to AWS EC2

- Go to ~/.ssh directory on your local machine

- Generate new SSH key

$ ssh-keygen -t rsa -b 4096

# When prompted for the file in which to save the key, enter: /home/<user>/.ssh/aws_id_rsa

- Get public key from local machine (you can use an existing one, such as the key used for the GitHub SSH connection)

$ cat ~/.ssh/aws_id_rsa.pub

- Go to EC2 > Key Pairs

- Click Action > Import key pair:

- Key pair name: recipe-api-wsl2-key

- Paste public key from local machine

- Import key pair

- Create EC2 instance

- Go to EC2 > Instances or EC2 Dashboard

- Click Launch Instance:

- Name: recipe-api-dev-server

- Number of instances: 1

- Amazon Machine Image (AMI): Amazon Linux 2 AMI

- Instance type: t2.micro (Free tier eligible)

- Key pair login: recipe-api-wsl2-key

- Firewall:

- Check Allow SSH traffic from Anywhere

- Check Allow HTTP traffic from the internet

- Configure storage:

- 1 x 8 GiB gp2

- Launch instance

- Connect to the EC2 instance using SSH from local machine

- Go to EC2 > Instances

- Select the instance

- Copy the Public IPv4 address

- Open the terminal on your local machine

- Start the SSH agent

$ eval $(ssh-agent)

- Add the SSH key to the SSH agent

$ ssh-add ~/.ssh/aws_id_rsa

- Connect to the EC2 instance

$ ssh ec2-user@<public_ipv4_address>

- Connect EC2 to GitHub using SSH (on EC2 instance terminal)

- Generate new SSH key (Ed25519 keys have a fixed length, so no -b option is needed)

$ ssh-keygen -t ed25519

# Leave the file name and passphrase empty

- Get public key

$ cat ~/.ssh/id_ed25519.pub

- Go to GitHub repository > Settings > Deploy keys

- Add deploy key:

- Title: server

- Key: Paste generated public key from EC2 instance

- Allow write access: uncheck

- Add key

- Configure Amazon Linux 2023 EC2 instance (make sure you're connected to the EC2 instance)

- Update the system

$ sudo dnf update -y

- Install git

$ sudo dnf install git -y

- Install Docker

$ sudo dnf install docker -y

https://linux.how2shout.com/how-to-install-docker-on-amazon-linux-2023/

https://cloudkatha.com/how-to-install-docker-on-amazon-linux-2023/

- Enable and start Docker service

# start the Docker service automatically on system boot

$ sudo systemctl enable docker

$ sudo systemctl start docker

- Verify Docker Running Status

$ sudo systemctl status docker

- Add ec2-user to the docker group

# gives the ec2-user permission to run Docker commands

$ sudo usermod -a -G docker ec2-user

- Log in to the EC2 instance again

$ exit

$ ssh ec2-user@<public_ipv4_address>

- Install Docker Compose

$ sudo curl -L https://github.com/docker/compose/releases/latest/download/docker-compose-$(uname -s)-$(uname -m) -o /usr/local/bin/docker-compose

- Apply executable permissions to the binary

# makes the docker-compose binary executable

$ sudo chmod +x /usr/local/bin/docker-compose

- Clone and configure the project

- Copy the SSH clone URL from GitHub repository

- Clone the project

$ git clone git@github.com:FlashDrag/recipe-app-api.git

- Create .env file from .env.example file in the project root directory

$ cd recipe-app-api

$ cp .env.example .env

- Update .env file

$ nano .env

# ---

DB_NAME=recipedb

DB_USER=recipeuser

DB_PASS=set_your_password

DJANGO_SECRET_KEY=changeme

DJANGO_ALLOWED_HOSTS=public_ipv4_address

- To generate a new secret key see the Generate Django Secret Key section.

- To get the Public IPv4 DNS of the EC2 instance go to EC2 > Instance > Networking > Public IPv4 DNS

- Run services

# -d flag runs the containers in the background (detached mode), so the terminal stays free

$ cd recipe-app-api

$ docker-compose -f docker-compose-deploy.yml up -d

- Open the browser and go to the Public IPv4 DNS address of the EC2 instance. Make sure that the HTTP protocol is used in the address bar.

Note: If you stop and start the EC2 instance, you need to change the allowed hosts and recreate the containers, since the public IP address of the instance changes when it is stopped and started again (a plain reboot keeps the address).

- Connect to the EC2 instance using SSH from local machine

- Go to EC2 > Instances

- Select the instance

- Copy the Public IPv4 address

- Open the terminal on your local machine

- Start the SSH agent

$ eval $(ssh-agent)

- Add the SSH key to the SSH agent

$ ssh-add ~/.ssh/aws_id_rsa

- Connect to the EC2 instance

$ ssh ec2-user@<public_ipv4_address>

- Verify Docker Running Status

$ sudo systemctl status docker

- Change allowed hosts in

.env file to the new Public IPv4 DNS address

$ cd recipe-app-api

$ nano .env

# ---

DJANGO_ALLOWED_HOSTS=new_public_ipv4_address

- Re-run the containers

$ docker-compose -f docker-compose-deploy.yml up -d

- Open the browser and go to the new Public IPv4 DNS address of the EC2 instance. Make sure that the HTTP protocol is used in the address bar.