This is an unofficial TensorFlow implementation of the method in

Digging into Self-Supervised Monocular Depth Prediction

Clément Godard, Oisin Mac Aodha, Michael Firman and Gabriel J. Brostow

The code is mainly based on [SfMLearner](https://github.com/tinghuiz/SfMLearner) and [SuperPoint](https://github.com/rpautrat/SuperPoint).

Update: fixed a testing bug in input data normalization (thanks @JiatianWu).

The current pretrained models score slightly lower than the results reported in the paper.

If you find this work useful in your research, please consider citing the authors' paper:

@article{monodepth2,
  title   = {Digging into Self-Supervised Monocular Depth Prediction},
  author  = {Cl{\'{e}}ment Godard and
             Oisin {Mac Aodha} and
             Michael Firman and
             Gabriel J. Brostow},
  journal = {arXiv:1806.01260},
  year    = {2018}
}

| model_name | abs_rel | sq_rel | rms | log_rms | δ<1.25 | δ<1.25^2 | δ<1.25^3 |
|---|---|---|---|---|---|---|---|
| mono_640x192_nopt(paper) | 0.132 | 1.044 | 5.142 | 0.210 | 0.845 | 0.948 | 0.977 |
| mono_640x192_nopt(ours) | 0.139 | 1.1293 | 5.4190 | 0.2200 | 0.8299 | 0.9419 | 0.9744 |
| mono_640x192_pt(paper) | 0.115 | 0.903 | 4.863 | 0.193 | 0.877 | 0.959 | 0.982 |
| mono_640x192_pt(ours) | 0.120 | 0.8702 | 4.888 | 0.194 | 0.861 | 0.957 | 0.982 |
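The columns in the table are the standard monocular depth metrics: absolute relative error, squared relative error, RMSE, log RMSE, and the three threshold accuracies. The NumPy function below is a minimal sketch of how these are usually computed, assuming `gt` and `pred` already hold matched, valid depths in metres. It is an illustration, not necessarily identical to the code in `kitti_eval`, and the name `compute_depth_errors` is hypothetical. The return order follows the table columns.

```python
import numpy as np


def compute_depth_errors(gt, pred):
    """Standard depth metrics; gt and pred are matched arrays of positive depths (m)."""
    gt = np.asarray(gt, dtype=np.float64)
    pred = np.asarray(pred, dtype=np.float64)

    # Threshold accuracy: fraction of pixels with max(gt/pred, pred/gt) below 1.25^k.
    thresh = np.maximum(gt / pred, pred / gt)
    a1 = (thresh < 1.25).mean()
    a2 = (thresh < 1.25 ** 2).mean()
    a3 = (thresh < 1.25 ** 3).mean()

    # Error metrics (lower is better).
    abs_rel = np.mean(np.abs(gt - pred) / gt)
    sq_rel = np.mean((gt - pred) ** 2 / gt)
    rms = np.sqrt(np.mean((gt - pred) ** 2))
    log_rms = np.sqrt(np.mean((np.log(gt) - np.log(pred)) ** 2))

    # Same order as the results table: abs_rel, sq_rel, rms, log_rms, δ<1.25, δ<1.25^2, δ<1.25^3
    return abs_rel, sq_rel, rms, log_rms, a1, a2, a3
```

For example, a prediction that is exactly half the ground truth everywhere gives `abs_rel = 0.5` and fails all three thresholds, since the ratio 2 exceeds 1.25^3 ≈ 1.95.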

This codebase was developed and tested with TensorFlow 1.6.0, CUDA 8.0 and Ubuntu 16.04.

Assuming a fresh Anaconda distribution, you can install the dependencies with:

conda env create -f environment.yml

conda activate tf-monodepth2

To train the model with the provided code, the data must first be formatted in a specific way.

For KITTI, first download the dataset using the download script provided on the official KITTI website, then run the following command:

python data/prepare_train_data.py --dataset_dir=/path/to/raw/kitti/dataset/ --dataset_name='kitti_raw_eigen' --dump_root=/path/to/resulting/formatted/data/ --seq_length=3 --img_width=416 --img_height=128 --num_threads=4

Before training, set the dataset and saved_log paths in monodepth2_kitti.yml:

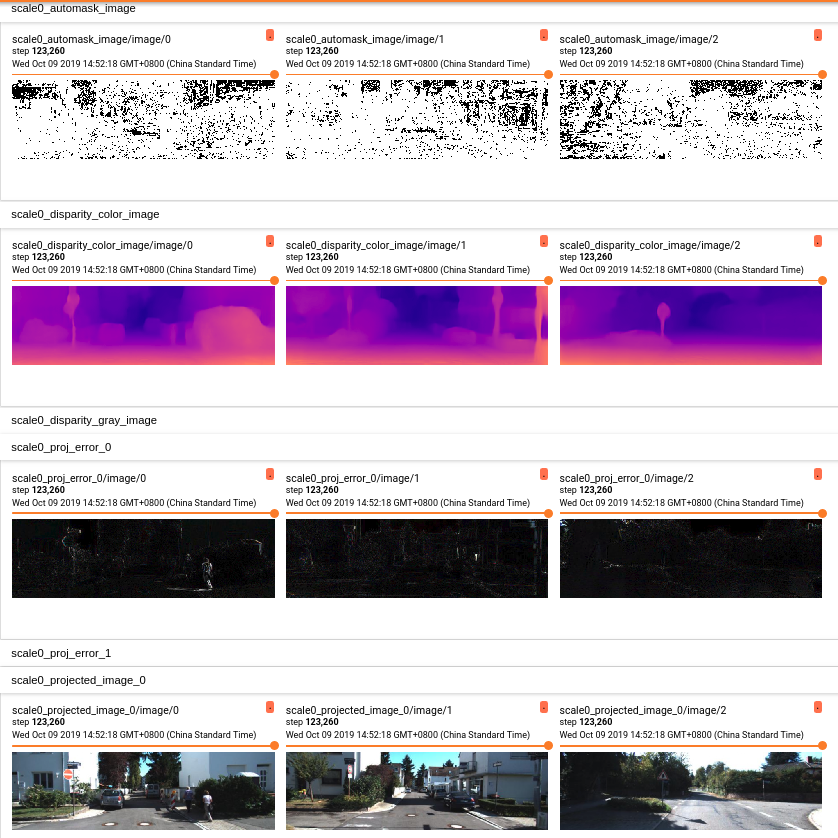
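The formatted training data is assumed here to follow SfMLearner's convention: each sample is one image containing the `seq_length` frames concatenated side by side (3 × 416 pixels wide and 128 pixels high with the flags above), with the middle frame used as the target view. The hypothetical helper `split_sequence` below sketches how such a sample could be split back into target and source frames; check the repository's actual data loader before relying on this layout.

```python
import numpy as np


def split_sequence(seq_img, seq_length=3):
    """Split a horizontally concatenated frame sequence (assumed layout).

    seq_img: array of shape (H, seq_length * W, C).
    Returns (target, sources): the middle frame and a list of the other frames.
    """
    h, total_w, c = seq_img.shape
    if total_w % seq_length != 0:
        raise ValueError("image width is not a multiple of seq_length")
    w = total_w // seq_length
    # Slice out each frame of width w, left to right.
    frames = [seq_img[:, i * w:(i + 1) * w, :] for i in range(seq_length)]
    mid = seq_length // 2
    target = frames[mid]                      # centre frame = target view
    sources = frames[:mid] + frames[mid + 1:]  # neighbouring frames = source views
    return target, sources
```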

To train:

python monodepth2.py train config/monodepth2_kitti.yml your_saved_model_name

To test:

python monodepth2.py test config/monodepth2_kitti.yml your_pretrained_model_name

Download links for the pretrained models are available in the Eval Result chart.

- First, save the predicted depth maps to an .npy file:

python monodepth2.py eval config/monodepth2_kitti_eval.yml your_pretrained_model_name depth

The save destination is set in monodepth2_kitti_eval.yml.

- Then run the evaluation code to compute the error metrics:

cd kitti_eval

python2 eval_depth.py --kitti_dir=/your/kitti_data/path/ --pred_file=/your/save/depth/npy/path/ --test_file_list=../data/kitti/test_files_eigen.txt

Note: this script must be run with python2.

The kitti_eval code is taken from Zhou's SfMLearner.
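Because a monocular model predicts depth only up to an unknown scale, mono results are typically evaluated after rescaling each prediction by the ratio of the ground-truth median to the predicted median. The sketch below illustrates that step with NumPy. The function name and the 1e-3 to 80 m depth range are assumptions based on common KITTI practice; the exact order of clipping and scaling in eval_depth.py may differ, and the Eigen/Garg image crop is omitted.

```python
import numpy as np

MIN_DEPTH = 1e-3  # assumed valid depth range (m), common KITTI convention
MAX_DEPTH = 80.0


def median_scale_and_clip(gt_depth, pred_depth, min_depth=MIN_DEPTH, max_depth=MAX_DEPTH):
    """Return 1-D (gt, pred) arrays over valid pixels, with pred median-scaled."""
    gt_depth = np.asarray(gt_depth, dtype=np.float64)
    pred_depth = np.asarray(pred_depth, dtype=np.float64)

    # Sparse LiDAR ground truth contains zeros; keep only pixels in the valid range.
    mask = (gt_depth > min_depth) & (gt_depth < max_depth)
    gt = gt_depth[mask]
    pred = pred_depth[mask]

    # Align the unknown monocular scale by matching medians.
    pred = pred * (np.median(gt) / np.median(pred))

    # Clamp predictions to the evaluation range.
    pred = np.clip(pred, min_depth, max_depth)
    return gt, pred
```

The returned arrays can then be passed to any of the standard metric computations, such as the abs_rel and threshold metrics in the results table.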

Pose evaluation code to be completed.

Reference code:

- Monodepth2
- SfMLearner
- SuperPoint
- resnet-18-tensorflow

- Auto-Mask loss described in paper

- ResNet-18 Pretrained Model code

- Testing part

- Evaluation for pose estimation

- stereo and mono+stereo training
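The auto-mask loss listed above combines two ideas from the paper: a per-pixel minimum of the reprojection error over the source frames, and a mask that ignores pixels whose error is already lower when the source frame is not warped at all (for example, a static camera, or objects moving with the same velocity as the camera). Below is a minimal NumPy sketch, assuming the per-pixel photometric errors have already been computed. It takes the minimum over the identity and reprojection errors together, and the function name is hypothetical; it is not this repository's TensorFlow code.

```python
import numpy as np


def automasked_min_reprojection(reproj_losses, identity_losses):
    """Per-pixel minimum reprojection loss with auto-masking (sketch).

    reproj_losses:   (S, H, W) photometric error between the target frame and
                     each of the S source frames warped into the target view.
    identity_losses: (S, H, W) photometric error between the target frame and
                     each *unwarped* source frame.
    Returns (scalar loss, (H, W) boolean mask of pixels where warping won).
    """
    reproj_losses = np.asarray(reproj_losses, dtype=np.float64)
    identity_losses = np.asarray(identity_losses, dtype=np.float64)
    n_identity = identity_losses.shape[0]

    # Stack identity terms first so that ties resolve to "identity" (pixel masked out).
    combined = np.concatenate([identity_losses, reproj_losses], axis=0)

    # Per-pixel minimum over all candidates; masked pixels keep an identity
    # term, which does not depend on the predicted depth or pose.
    per_pixel = combined.min(axis=0)
    mask = combined.argmin(axis=0) >= n_identity
    return float(per_pixel.mean()), mask
```

Stacking the identity losses first makes exact ties count as masked pixels. The official PyTorch implementation instead breaks ties by adding a very small random noise to the identity losses.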