Nhat-Tan Bui · Dinh-Hieu Hoang · Minh-Triet Tran · Gianfranco Doretto · Donald Adjeroh · Brijesh Patel · Arabinda Choudhary · Ngan Le

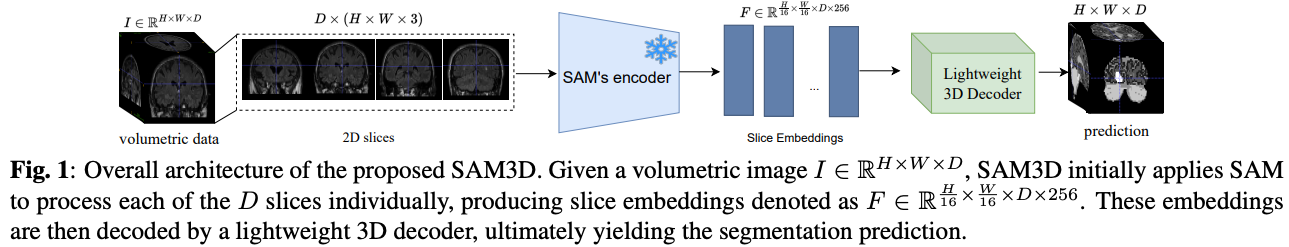

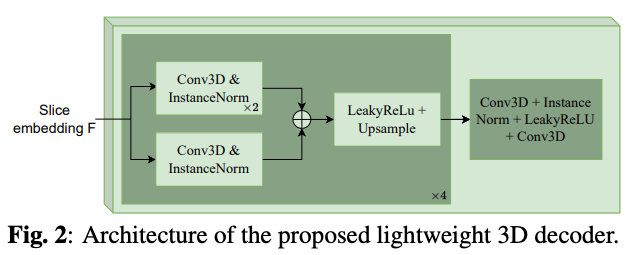

Image segmentation is a pivotal component of medical image analysis, supporting the extraction of the information needed for precise diagnosis. With the advent of deep learning, automated segmentation methods have risen to prominence and perform strongly on medical imagery. Motivated by the Segment Anything Model (SAM), a foundation model known for its precision and robust generalization in segmenting 2D natural images, we introduce SAM3D, an adaptation tailored for 3D volumetric medical image analysis. Unlike current SAM-based methods, which segment volumetric data by converting the volume into separate 2D slices and analyzing each one individually, SAM3D processes the entire 3D volume in a unified approach. Extensive experiments on multiple medical image datasets demonstrate that our network attains competitive results compared with other state-of-the-art methods in 3D medical segmentation tasks while being significantly more efficient in terms of parameters.

- To validate the effectiveness of our model, we benchmark it on four datasets: Synapse, ACDC, BraTS, and Decathlon-Lung. We follow the same dataset preprocessing as UNETR++ and nnFormer; please refer to their repositories for details on organizing the dataset folders. Alternatively, you can download the preprocessed datasets from Google Drive.

- The pre-trained SAM model can be downloaded at its original repository. Put the checkpoint in the ./checkpoints folder.

- The Synapse weight can be downloaded at Google Drive.

- The ACDC weight can be downloaded at Google Drive.

- The BraTS weight can be downloaded at Google Drive.

- The Lung weight can be downloaded at Google Drive.
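Before training or evaluating, it can help to confirm that the downloaded SAM checkpoint actually sits in the ./checkpoints folder. The following is a minimal sketch, not part of this repository: `list_checkpoints` is a hypothetical helper, and the `.pth` suffix is an assumption about the file extension that you may need to adjust.

```python
from pathlib import Path


def list_checkpoints(folder="./checkpoints", suffix=".pth"):
    """Return the sorted checkpoint file names found in `folder`.

    `suffix=".pth"` is an assumption; change it if your files differ.
    """
    root = Path(folder)
    if not root.is_dir():
        # The folder has not been created yet.
        return []
    return sorted(
        p.name for p in root.iterdir() if p.is_file() and p.suffix == suffix
    )


if __name__ == "__main__":
    found = list_checkpoints()
    if found:
        print("Found checkpoints:", ", ".join(found))
    else:
        print("No .pth files in ./checkpoints; download the SAM checkpoint first.")
```

Run it from the repository root so that the relative path resolves to the same folder the scripts use.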

conda create --name sam3d python=3.8

conda activate sam3d

pip install -r requirements.txt

The code is implemented with PyTorch 2.0.1 and torchvision 0.15.2. Please follow the instructions on the official PyTorch website to install the PyTorch, torchvision, and CUDA versions that match your system.
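To check that the environment matches the versions stated above, a small script along these lines may be useful. It is a sketch under assumptions: the helper names `installed_version` and `matches` are hypothetical, and it relies only on the standard-library `importlib.metadata`, so it runs even if PyTorch is not installed yet. Local build tags such as `+cu118` are ignored when comparing.

```python
from importlib import metadata

# Versions stated in this README.
EXPECTED = {"torch": "2.0.1", "torchvision": "0.15.2"}


def installed_version(package):
    """Return the installed version string, or None if not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def matches(installed, expected):
    """Compare versions while ignoring a local build tag such as '+cu118'."""
    return installed is not None and installed.split("+")[0] == expected


if __name__ == "__main__":
    for name, expected in EXPECTED.items():
        found = installed_version(name)
        status = "ok" if matches(found, expected) else "differs"
        print(f"{name}: expected {expected}, found {found} ({status})")
```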

bash training_scripts/run_training_synapse.sh

bash training_scripts/run_training_acdc.sh

bash training_scripts/run_training_lung.sh

bash training_scripts/run_training_tumor.sh

bash evaluation_scripts/run_evaluation_synapse.sh

bash evaluation_scripts/run_evaluation_acdc.sh

bash evaluation_scripts/run_evaluation_lung.sh

bash evaluation_scripts/run_evaluation_tumor.sh
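If you want to run all four datasets in sequence, the commands above can be driven from a short Python wrapper. This is only a sketch: `build_command` and `run_all` are hypothetical helpers, not part of the repository, and the script paths are taken directly from the commands listed above. The `tumor` scripts presumably correspond to the BraTS dataset, which is an inference from the dataset list rather than something stated here. The wrapper defaults to a dry run that only prints the commands.

```python
import subprocess

# Dataset keys as they appear in the script names above.
DATASETS = ("synapse", "acdc", "lung", "tumor")


def build_command(stage, dataset):
    """Build the bash command for one stage ('training' or 'evaluation')."""
    if stage not in ("training", "evaluation"):
        raise ValueError(f"unknown stage: {stage}")
    if dataset not in DATASETS:
        raise ValueError(f"unknown dataset: {dataset}")
    return ["bash", f"{stage}_scripts/run_{stage}_{dataset}.sh"]


def run_all(stage, dry_run=True):
    """Run (or, by default, only print) the script for every dataset."""
    commands = [build_command(stage, d) for d in DATASETS]
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops at the first failing script.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    run_all("training")  # dry run: prints the four training commands
```

Call `run_all("training", dry_run=False)` from the repository root to actually launch the scripts; the same applies to `"evaluation"`.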

- The pre-computed maps and scores of the Synapse dataset can be downloaded at Google Drive.

- The pre-computed maps and scores of the ACDC dataset can be downloaded at Google Drive.

- The pre-computed maps and scores of the BraTS dataset can be downloaded at Google Drive.

- The pre-computed maps and scores of the Lung dataset can be downloaded at Google Drive.

@article{sam3d,

title={SAM3D: Segment Anything Model in Volumetric Medical Images},

author={Nhat-Tan Bui and Dinh-Hieu Hoang and Minh-Triet Tran and Gianfranco Doretto and Donald Adjeroh and Brijesh Patel and Arabinda Choudhary and Ngan Le},

journal={arXiv:2309.03493},

year={2023}

}

Parts of this code are adapted from the following previous works: UNETR++, nnFormer, nnU-Net, and SAM.

If you have any questions, please feel free to create an issue on this repository or contact us at tanb@uark.edu / hieu.hoang2020@ict.jvn.edu.vn.