Authors: Chin-Lun Fu, Chun-Yao Chang, Kuei-Chun Kao, Nanyun (Violet) Peng

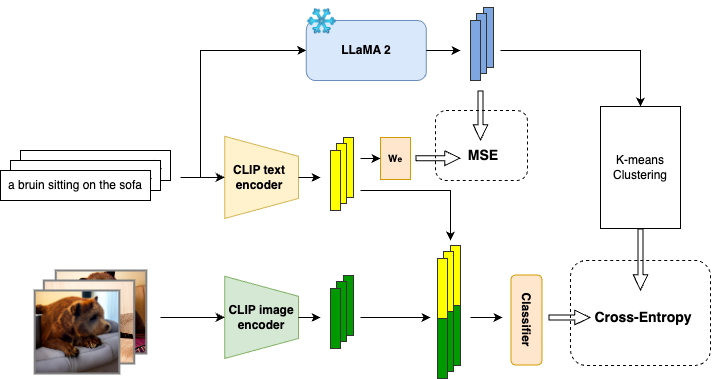

In this study, we introduce KKLIP, a novel approach designed to enhance the quality of CLIP by incorporating a new knowledge distillation (KD) method derived from Llama 2. Our method comprises three objectives: Text Embedding Distillation, Concept Learning, and Contrastive Learning.
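To make the three objectives concrete, here is a minimal, dependency-free sketch of how such losses could be combined into one training loss. It is not the code in this repository. Everything specific is an assumption made for illustration: the cosine-distance form of the distillation term, the binary cross-entropy form of the concept term, the weights `w_kd` and `w_concept`, all function names, and the premise that the teacher (Llama 2) text embeddings have already been projected to the same dimension as the CLIP text embeddings. Only the contrastive term follows the standard symmetric CLIP-style formulation.

```python
import math

def normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_entropy(logits, target):
    """Softmax cross-entropy for one row of logits and an integer target index."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_z - logits[target]

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss over a batch of matched image/text pairs."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # sim[i][j] = scaled cosine similarity between image i and text j
    sim = [[dot(i, t) / temperature for t in txts] for i in imgs]
    i2t = sum(cross_entropy(sim[k], k) for k in range(n)) / n
    t2i = sum(cross_entropy([sim[r][k] for r in range(n)], k) for k in range(n)) / n
    return 0.5 * (i2t + t2i)

def distillation_loss(student_text_embs, teacher_text_embs):
    """Assumed form: mean cosine distance between student and teacher text embeddings."""
    pairs = list(zip(student_text_embs, teacher_text_embs))
    dists = [1.0 - dot(normalize(s), normalize(t)) for s, t in pairs]
    return sum(dists) / len(dists)

def concept_loss(concept_logits, concept_labels):
    """Assumed form: mean binary cross-entropy over per-concept logits."""
    total, count = 0.0, 0
    for logits, labels in zip(concept_logits, concept_labels):
        for z, y in zip(logits, labels):
            # Numerically stable BCE with logits
            total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
            count += 1
    return total / count

def total_loss(image_embs, text_embs, teacher_embs,
               concept_logits, concept_labels, w_kd=1.0, w_concept=1.0):
    """Weighted sum of the three terms; the weights are hypothetical."""
    return (contrastive_loss(image_embs, text_embs)
            + w_kd * distillation_loss(text_embs, teacher_embs)
            + w_concept * concept_loss(concept_logits, concept_labels))
```

In a real training loop these terms would be computed on batched tensors in a deep learning framework; the plain-list version above only shows how the three signals relate to each other.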

We use CC15M as our dataset. You can download all datasets from the website.

cd pre-train

python train-klip.py

python text_eval.py